Retrieval Augmented Generation

RAG AI for Unstructured Enterprise Data

Accelerate and operationalize RAG with a full-stack AI development platform for no- or low-code RAG application workflows, model evaluations, training, fine-tuning, and inference.

support for leading LLMs

uptime with open source models

governance and security controls

Enterprise business insights need a new model

As organizations turn to RAG to unlock business insights, it can be difficult to know which large language model (LLM) best fits their needs. Evaluating, comparing, and training LLMs is extremely time-intensive. And building the AI stack needed to deploy LLMs at scale, including data security, orchestration, and compute, can take years and often leads to model lock-in.

What’s more, running LLMs can be compute-intensive and cost-prohibitive. And when unstructured data lacks the metadata that improves retrieval and response generation, response quality drops while costs keep rising.

RAG made easy, without sacrificing scale or security

Save time and money on RAG AI

Get started with RAG in minutes or hours instead of months, using a full-stack AI platform with instant access to leading LLMs.

RAG-ready infrastructure for enterprise scale

Prepare and embed data, evaluate the LLMs of your choice, vectorize data for easy retrieval by RAG prompts, and centralize governance and security.
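The embed, index, retrieve, and prompt flow described above can be illustrated without any particular platform. The sketch below is a generic, self-contained illustration, not Clarifai's API: it assumes a toy bag-of-words "embedding" as a stand-in for a real embedding model, an in-memory list as the vector store, and a hypothetical prompt template. The names `embed`, `VectorIndex`, and `build_rag_prompt` are illustrative, and the final LLM call is omitted.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a term-count vector (assumption; stands in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorIndex:
    """Minimal in-memory vector store (illustrative only, linear scan)."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        # Vectorize once at ingestion time so queries only need to embed the question.
        self.entries.append((doc, embed(doc)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Rank every stored document by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_rag_prompt(question: str, passages: list[str]) -> str:
    """Hypothetical prompt template: retrieved passages are placed before the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    index = VectorIndex()
    # Made-up sample documents for illustration.
    index.add("Refunds are processed within 14 days of a return request.")
    index.add("The office is closed on public holidays.")
    question = "How long do refunds take?"
    print(build_rag_prompt(question, index.search(question, k=1)))
```

In a production setting, the prompt would be sent to the chosen LLM, and the term-count vectors would typically be replaced by dense embeddings stored in an approximate-nearest-neighbor index.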

Maximize the value of your unstructured data

Use out-of-the-box RAG AI workflows to quickly build conversational search experiences that surface new insights from enterprise data.

Close the gap between data and insights with RAG

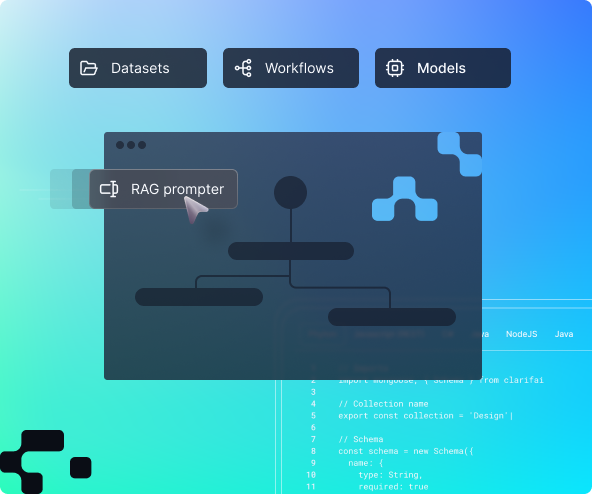

Evaluate and compare LLMs side by side to choose the right models for your use cases, or combine multiple LLMs within a single application.

A shared library of AI resources (models, annotations, datasets, and workflows, including RAG prompters) streamlines development.

Use Clarifai’s full-stack AI platform to integrate data sources, prepare and index data for vectorization, improve the searchability of enterprise data, and secure access to it.
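Preparing data for vectorization usually means splitting long documents into passages and tagging each passage with metadata, which lets retrieval narrow its search before similarity scoring. The sketch below is a generic illustration under stated assumptions, not Clarifai's pipeline: window sizes are counted in words rather than model tokens, the metadata schema (`source`, `chunk`, `start_word`) is hypothetical, and `chunk_document` and `filter_chunks` are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(text: str, source: str, size: int = 200, overlap: int = 40) -> list[Chunk]:
    """Split text into overlapping windows of `size` words.

    Sizes are counted in words for simplicity (an assumption; many pipelines
    count model tokens). Overlap keeps text that straddles a boundary
    retrievable from either neighboring chunk.
    """
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    # Stop once the remaining words are already covered by the previous window's overlap.
    for i, start in enumerate(range(0, max(len(words) - overlap, 1), step)):
        chunks.append(
            Chunk(
                text=" ".join(words[start:start + size]),
                metadata={"source": source, "chunk": i, "start_word": start},
            )
        )
    return chunks


def filter_chunks(chunks: list[Chunk], **criteria) -> list[Chunk]:
    """Keep only chunks whose metadata matches every key/value pair in `criteria`."""
    return [c for c in chunks if all(c.metadata.get(k) == v for k, v in criteria.items())]


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(100))  # placeholder 100-word document
    parts = chunk_document(doc, source="policy.txt", size=40, overlap=10)
    print([c.metadata["start_word"] for c in parts])  # prints [0, 30, 60]
```

Each chunk would then be embedded and stored alongside its metadata, so a query can first be restricted (for example, to one source) and then ranked by similarity.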

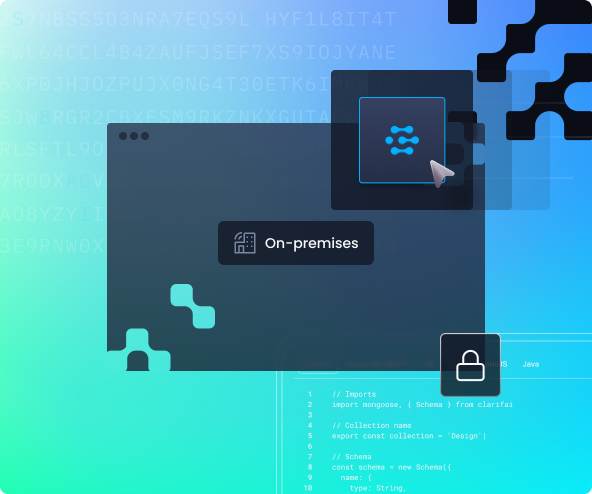

Deploy Clarifai on-premises or in your virtual private cloud (VPC) so your data stays where it is for model training, fine-tuning, and inference.

Why the Clarifai Platform

Clarifai provides an end-to-end, full-stack enterprise AI platform for building AI faster with modern technologies such as cutting-edge large language models (LLMs), generative AI, retrieval augmented generation (RAG), data labeling, inference, and much more.

Build & Deploy Faster

Quickly build, deploy, and share AI at scale. Standardize workflows and improve efficiency so teams can launch production AI in minutes.

Reduce Development Costs

Eliminate the duplicate infrastructure and licensing costs that arise when teams use siloed custom solutions, and standardize and centralize AI for easy access.

Oversight & Security

Ensure you are building AI responsibly with integrated security, guardrails, and role-based access controls that govern which data and IP are exposed to and used by AI.

Scale your AI with confidence

Clarifai was built to simplify how developers and teams create, share, and run AI at scale.

Establish an AI Operating Model and move from prototype into production

Build a RAG system in 4 lines of code

Make sense of the jargon around Generative AI with our glossary

Build your next AI app, test and tune popular LLMs, and much more.