Your model.

Live in minutes.

Upload any model—open-source, third-party, or custom—and get it production-ready instantly. No DevOps grind, no lock-in, just speed, reliability, and scale built in.

Getting your models online shouldn’t take days

Most developers spend more time wrangling configs, pipelines, and vendor quirks than actually running their models. It slows iteration, kills experimentation, and wastes budget. Clarifai makes deployment instant—turning the pain of DevOps into a one-click ‘Aha!’ moment.

less compute required

inference requests/sec supported

reliability under extreme load

Upload your model. Or start with ours.

Whether you’re shipping your own model or exploring the latest open-source releases, Clarifai makes it simple to get online fast. Every model runs with the same guarantees—low latency, high throughput, and full control over your infrastructure.

Upload Your Own Model

Get lightning-fast inference for your custom AI models. Deploy in minutes with no infrastructure to manage.

GPT-OSS-120b

OpenAI's most powerful open-weight model, with exceptional instruction following, tool use, and reasoning.

DeepSeek-R1-0528-Qwen3-8B

Improves reasoning and logic through better computation and optimization. Nears the performance of OpenAI and Gemini models.

Llama-3_2-3B-Instruct

A multilingual model by Meta optimized for dialogue and summarization. Uses SFT and RLHF for better alignment and performance.

Qwen3-Coder-30B-A3B-Instruct

A high-performing, efficient model with strong agentic coding abilities, long-context support, and broad platform compatibility.

MiniCPM4-8B

MiniCPM4 is a series of efficient LLMs optimized for end-side devices, with efficiency gains from innovations in architecture, data, training, and inference.

Devstral-Small-2505-unsloth-bnb-4bit

An agentic LLM developed by Mistral AI and All Hands AI to explore codebases, edit multiple files, and power software engineering agents.

Claude-Sonnet-4

State-of-the-art LLM from Anthropic that supports multimodal inputs and can generate high-quality, context-aware text completions, summaries, and more.

Phi-4-Reasoning-Plus

Microsoft's open-weight reasoning model, trained with supervised fine-tuning on a dataset of chain-of-thought traces and then refined with reinforcement learning.

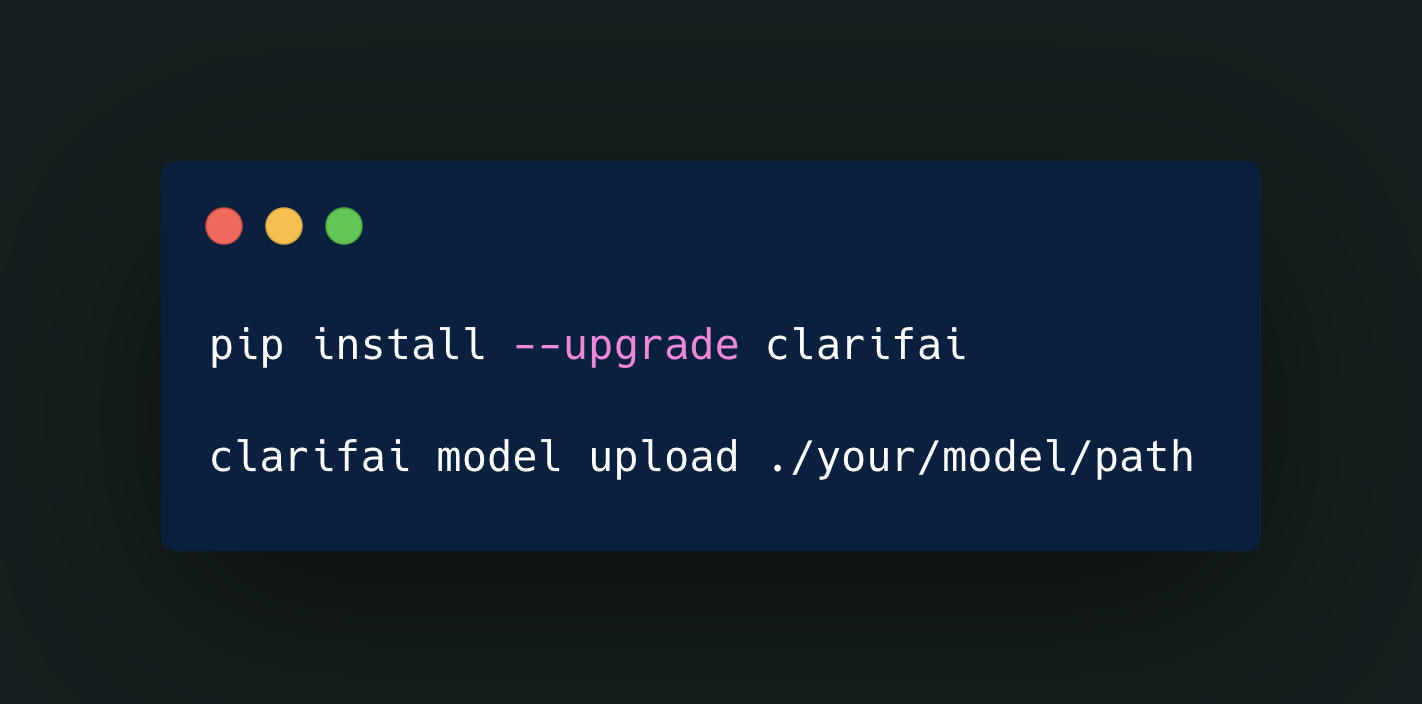

lightning-fast first deploy

From upload to live in under five minutes

Skip the DevOps grind. Upload your model through the UI or CLI, and Clarifai handles the rest: provisioning, orchestration, scaling, and optimization. In minutes, your model is production-ready and serving live inference.

your model, your choice

Upload anything. Deploy everywhere.

Bring your own model—from Hugging Face, GitHub, proprietary vendors, or one you trained yourself. Clarifai runs it seamlessly in the cloud, your VPC, or fully on-prem. No lock-in. No compromises. Just your model, deployed where you need it.

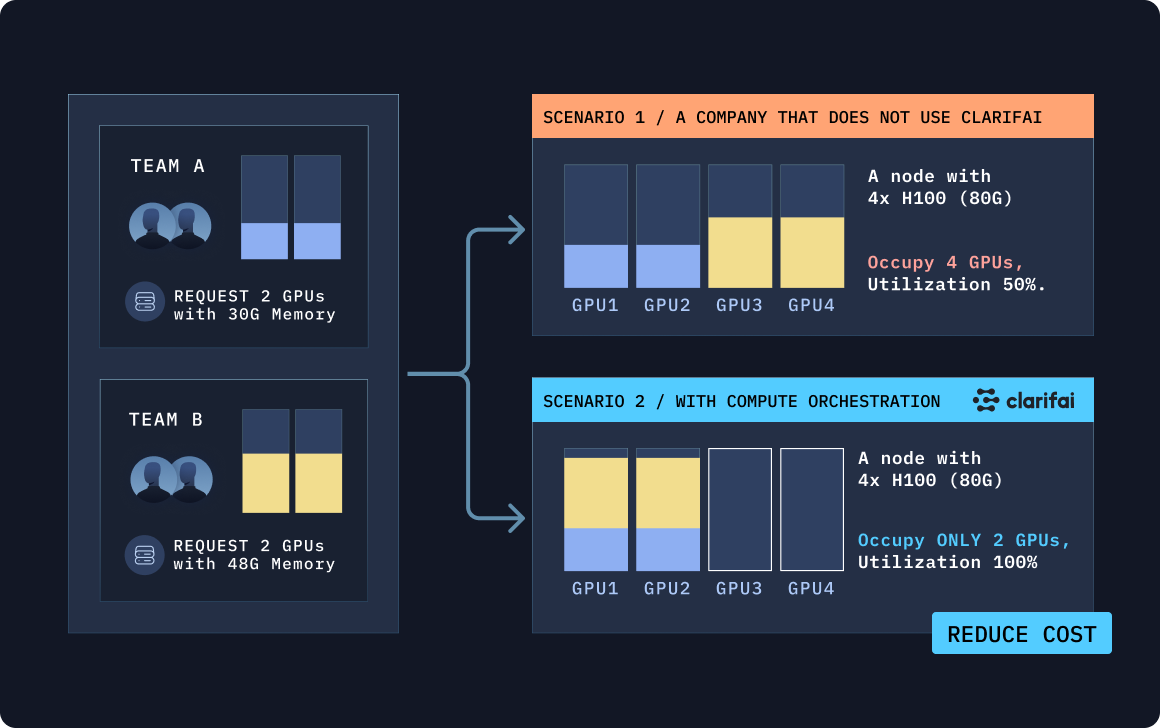

built-in cost & performance optimizations

Deploy once. Optimize automatically.

Run more on less. Clarifai’s GPU fractioning splits a single GPU across multiple workloads, so you get full utilization without extra hardware. Autoscaling to zero cuts idle costs, hybrid deployments stretch your budget, and GPU-hour pricing keeps it all predictable.

unmatched time-to-first-token

Ultra-low latency

Cold starts and lag kill real-time apps. Clarifai delivers sub-300ms time-to-first-token and rock-steady latency under load—so responses feel instant, and your users never wait.

scale without slowdown

Unrivaled token throughput

Other platforms choke when traffic surges. Clarifai sustains massive concurrency with unmatched throughput—keeping your apps fast, reliable, and ready for anything.

local runners

Run compute anywhere, even from home

Prototype locally, deploy globally. Test your model changes instantly with Local Runners, then scale seamlessly into production with Compute Orchestration.

Complexity

Docs that get you building

From quickstarts to advanced guides, our documentation helps you move fast. Explore code snippets, setup tutorials, and best practices for deploying, scaling, and securing your models—all in one place.

Visit docs.clarifai.com