Clarifai Reasoning Engine

Unrivaled AI Inference.

Engineered for Speed.

Optimized for Agents.

The Clarifai Reasoning Engine is the breakthrough platform built for high-demand agentic AI workloads. Now, you don't have to choose between speed, latency, and price.

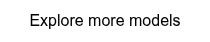

GPT-OSS-120B benchmarked on three metrics: output throughput (tokens/sec), time to first answer token, and blended price per million tokens.
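A blended price combines separate input and output token prices into one number, so providers can be compared on a single axis. The sketch below assumes the 3:1 input-to-output weighting that Artificial Analysis uses for its blended prices. The prices in the example are hypothetical placeholders, not Clarifai's rates, and both function names are illustrative.

```python
def blended_price_per_million(input_price: float, output_price: float,
                              input_weight: float = 3.0,
                              output_weight: float = 1.0) -> float:
    """Weighted average of input and output prices (USD per 1M tokens).

    The default 3:1 input-to-output weighting is an assumption that mirrors
    the convention Artificial Analysis uses for blended prices.
    """
    if input_weight < 0 or output_weight < 0 or input_weight + output_weight == 0:
        raise ValueError("weights must be non-negative and not both zero")
    total = input_weight + output_weight
    return (input_weight * input_price + output_weight * output_price) / total


def workload_cost(input_tokens: int, output_tokens: int,
                  input_price: float, output_price: float) -> float:
    """Cost in USD of a workload, given per-million-token prices."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices in USD per 1M tokens (not Clarifai's actual rates).
    inp, out = 0.10, 0.50
    print(blended_price_per_million(inp, out))
    print(workload_cost(3_000_000, 1_000_000, inp, out))
```

A workload whose token mix matches the 3:1 weighting (for example, 3M input and 1M output tokens) costs exactly the blended price times its total millions of tokens. Prompt-heavy or generation-heavy workloads drift from that figure, which is why `workload_cost` prices the two sides separately.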

Unbeatable speed and efficiency. Independently validated.

Artificial Analysis, an independent benchmarking firm, ranked Clarifai in the “most attractive quadrant” among inference providers. The results highlight that Clarifai delivers both performance and cost-efficiency—showing you don’t need exotic hardware to achieve fast, affordable, and reliable inference.

fast and affordable

Output speed vs Price

Most providers force a trade-off: pay more for speed or settle for lower performance. Artificial Analysis results show Clarifai avoids that compromise—delivering high throughput at competitive cost per token. Scale inference workloads without blowing your budget.

Figure: Artificial Analysis, Output Speed vs Price (10 Sep 25)

Low Latency, High Throughput

Latency vs Output speed

Long waits for the first token ruin the user experience. Benchmark results show that Clarifai combines a fast time to first token with strong sustained output speed, so users get near-instant responses and reliable performance without compromise.
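Time to first token (TTFT) and output speed are two separate measurements of a streamed response: TTFT is the delay before the first token arrives, and output speed is the rate of tokens after that. The minimal sketch below computes both from any iterable of streamed text chunks. It assumes one non-empty chunk per token, which is only an approximation, and `measure_stream` is a hypothetical helper, not part of any Clarifai SDK.

```python
import time
from typing import Callable, Iterable, Optional


def measure_stream(chunks: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Return TTFT (seconds), token count, and output speed (tokens/sec).

    Approximation: each non-empty chunk is counted as one token, although
    streamed chunks do not always map one-to-one to model tokens.
    """
    start = clock()
    first: Optional[float] = None
    last: Optional[float] = None
    count = 0
    for chunk in chunks:
        if not chunk:
            continue  # ignore empty deltas (e.g. role-only or keep-alive chunks)
        now = clock()
        if first is None:
            first = now
        last = now
        count += 1
    if first is None:
        return {"ttft_s": None, "tokens": 0, "tokens_per_s": 0.0}
    # Output speed counts tokens after the first one, over the time since it
    # arrived, so a slow first token does not drag down the throughput figure.
    span = last - first
    tps = (count - 1) / span if count > 1 and span > 0 else 0.0
    return {"ttft_s": first - start, "tokens": count, "tokens_per_s": tps}
```

To time a real request, issue the `client.chat.completions.create(..., stream=True)` call inside a generator function and yield `chunk.choices[0].delta.content or ""` for each chunk that has choices. Because a generator body only runs on the first `next()`, the request starts after `measure_stream` records its start time, so network and queueing delay are included in TTFT.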

Figure: Artificial Analysis, Latency vs Output Speed (10 Sep 25)

Trending models. Inference ready.

Skip setup and scale instantly. Run today's most popular open-source models with low latency, high throughput, and full production reliability.

Or upload your own models and take advantage of Clarifai's Compute Orchestration.

Upload Your Own Model

Get lightning-fast inference for your custom AI models. Deploy in minutes with no infrastructure to manage.

GPT-OSS-120b

OpenAI's most powerful open-weight model, with exceptional instruction following, tool use, and reasoning.

DeepSeek-V3_1

A hybrid model that supports both thinking and non-thinking modes. This upgrade brings improvements across multiple areas.

Llama-4-Scout-17B-16E-Instruct

A natively multimodal AI model that uses a mixture-of-experts architecture to offer industry-leading multimodal performance.

Qwen3-Next-80B-A3B-Thinking

An 80B-parameter, sparsely activated, reasoning-optimized LLM for complex reasoning tasks, with extreme efficiency in ultra-long-context inference.

MiniCPM4-8B

The MiniCPM4 series consists of efficient LLMs optimized for end-side devices, with that efficiency achieved through innovations in architecture, data, training, and inference.

Devstral-Small-2505-unsloth-bnb

An agentic LLM developed by Mistral AI and All Hands AI to explore codebases, edit multiple files, and support engineering agents.

Claude-Sonnet-4

State-of-the-art LLM from Anthropic that supports multimodal inputs and can generate high-quality, context-aware text completions, summaries, and more.

Phi-4-Reasoning-Plus

Microsoft's open-weight reasoning model, trained with supervised fine-tuning on a dataset of chain-of-thought traces followed by reinforcement learning.

Beyond benchmarks:

Built for real-world scale

Clarifai provides an end-to-end, full-stack enterprise AI platform for building AI faster, with support for today's leading AI technologies, including cutting-edge Large Language Models (LLMs), generative AI, Retrieval-Augmented Generation (RAG), data labeling, inference, and much more.

OpenAI-compatible & developer-friendly APIs

Integrate Clarifai models seamlessly into your workflows. Our inference APIs return OpenAI-compatible outputs for effortless migration, and we provide REST APIs and SDKs in popular languages so you can deploy and monitor models with just a few lines of code.

```python
import os

from openai import OpenAI

# Change these two parameters to point to Clarifai!
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"],  # your Clarifai Personal Access Token (PAT)
)

response = client.chat.completions.create(
    model="https://clarifai.com/openai/chat-completion/models/gpt-oss-120b",
    messages=[
        {"role": "user", "content": "What is the capital of France?"}
    ],
)

print(response.choices[0].message.content)
```
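Because the endpoint is OpenAI-compatible, the same call can also be made without the SDK as a plain HTTPS POST. The sketch below uses only the Python standard library and assumes two things drawn from how the OpenAI client behaves: the client appends `/chat/completions` to `base_url`, and it sends the API key (here, your PAT) as an `Authorization: Bearer` header. `build_chat_request` is a hypothetical helper name.

```python
import json
import os
import urllib.request

# Base URL from the SDK example; "/chat/completions" is the standard
# OpenAI-compatible path that the OpenAI client appends to base_url.
BASE_URL = "https://api.clarifai.com/v2/ext/openai/v1"


def build_chat_request(pat: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion POST request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + "/chat/completions",
        data=body,
        headers={
            # Assumption: PAT sent as a bearer token, as the OpenAI client does.
            "Authorization": f"Bearer {pat}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__" and os.environ.get("CLARIFAI_PAT"):
    req = build_chat_request(
        os.environ["CLARIFAI_PAT"],
        "https://clarifai.com/openai/chat-completion/models/gpt-oss-120b",
        "What is the capital of France?",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    print(data["choices"][0]["message"]["content"])
```

The request is only sent when the script runs with `CLARIFAI_PAT` set; otherwise the helper just builds the request object, which makes it easy to inspect or reuse.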

Flexibility without lock-in

Run your models anywhere—across clouds, on-prem, or even air-gapped. Clarifai is both hardware-agnostic and vendor-agnostic, supporting NVIDIA, AMD, Intel, TPUs, and more. By optimizing compute across environments, customers see up to 90% less compute required for the same workloads, turning flexibility into significant cost savings.

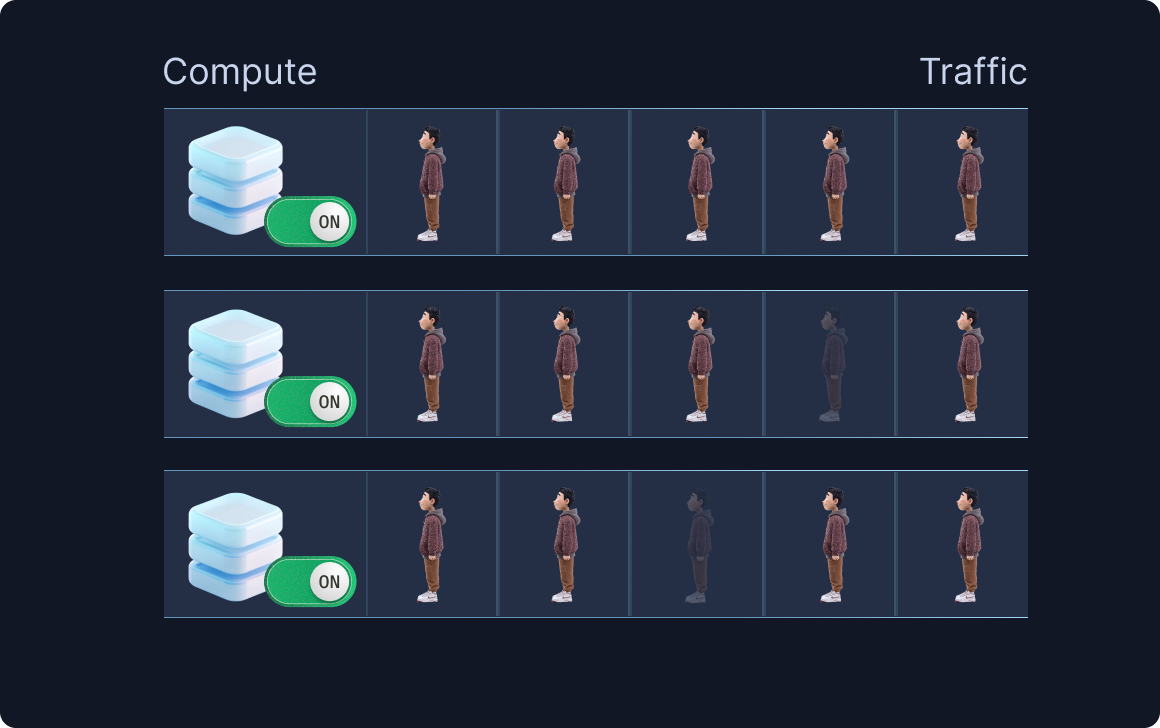

Traffic-based autoscaling

Traffic surges can overwhelm your models, while overprovisioning GPUs drains your budget. Clarifai automatically scales inference workloads up to meet peak demand and back down when idle—delivering responsive performance without wasted resources. And with 99.99% uptime under extreme load, you can count on reliability no matter the traffic.
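A common way to implement traffic-based autoscaling is a target-tracking rule: size the replica count so each replica handles roughly a target load, clamp it to a minimum and maximum, and allow scaling to zero when idle. The sketch below illustrates that generic rule only; it is not Clarifai's actual autoscaler, and `AutoscalePolicy` and its fields are hypothetical names.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class AutoscalePolicy:
    # Hypothetical knobs for illustration; not Clarifai configuration fields.
    target_per_replica: float  # requests in flight each replica should handle
    min_replicas: int = 0      # 0 allows scale-to-zero when idle
    max_replicas: int = 10
    scale_down_after: int = 3  # consecutive low readings before scaling down


def desired_replicas(policy: AutoscalePolicy, current: int, load: float,
                     low_streak: int) -> tuple[int, int]:
    """Return (new replica count, updated low-load streak)."""
    target = math.ceil(load / policy.target_per_replica) if load > 0 else 0
    target = max(policy.min_replicas, min(policy.max_replicas, target))
    if target >= current:
        # Scale up (or hold) immediately to absorb surges.
        return target, 0
    # Scale down only after load stays low for several readings, to avoid flapping.
    low_streak += 1
    if low_streak >= policy.scale_down_after:
        return target, 0
    return current, low_streak
```

Scaling up immediately while requiring several consecutive low readings before scaling down is a common way to absorb surges without oscillating. For reference, a 99.99% uptime figure corresponds to at most about 52.6 minutes of downtime per year (0.01% of 525,600 minutes).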

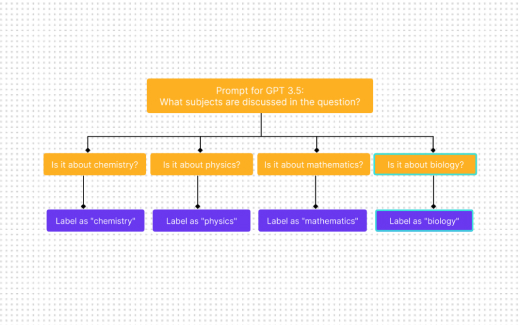

Combine models into Workflows

One model rarely solves everything. With Clarifai, you can chain multiple models and custom logic into flexible workflows—blending vision, language, and generative AI for richer results, from simple predictions to complex pipelines.
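Conceptually, a workflow is a chain in which each node's output becomes the next node's input, optionally with custom logic in between. The sketch below models that idea with plain Python callables. The step functions are hypothetical stand-ins for model calls, and this is not Clarifai's Workflow API.

```python
from typing import Any, Callable, List

Step = Callable[[Any], Any]


def run_workflow(steps: List[Step], data: Any) -> Any:
    """Feed data through each step in order, passing each output to the next step."""
    for step in steps:
        data = step(data)
    return data


# Hypothetical stand-in for a vision model that detects objects in an image.
def detect_objects(image_path: str) -> List[str]:
    return ["dog", "frisbee"] if "park" in image_path else []


# Custom logic between models: keep only labels we care about.
def keep_known_labels(labels: List[str]) -> List[str]:
    allowed = {"dog", "cat", "frisbee"}
    return [label for label in labels if label in allowed]


# Hypothetical stand-in for a language model that writes a caption from labels.
def describe(labels: List[str]) -> str:
    return "A photo of " + " and ".join(labels) if labels else "No known objects found."


pipeline = [detect_objects, keep_known_labels, describe]
```

Because each step only has to accept the previous step's output, swapping a model or inserting a filter means editing the list rather than the steps around it.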