Compute Orchestration

Introducing Compute Orchestration

Take advantage of Clarifai’s unified control plane to orchestrate your AI workloads: Optimize your AI compute, avoid vendor lock-in, and control spend more efficiently.

Recognized in the GigaOm Radar for AI Infrastructure v1 Report

Clarifai’s Compute Orchestration has made enterprise AI not only more powerful, but also dramatically more cost-effective. With GPU fractioning and autoscaling, we’ve been able to cut compute costs by over 70% while scaling with ease. This is true production-grade AI, without the usual complexity.

We’ve finally found an AI platform that gives us full control without vendor lock-in. Clarifai’s Compute Orchestration lets us deploy any model on any hardware, across any environment—cloud, on-prem, or even air-gapped. That level of flexibility is a game-changer for enterprise AI.

Clarifai has accelerated our AI journey from prototype to production in record time. The rich library of pre-built models and workflows, combined with seamless orchestration, means we can innovate faster and deploy with confidence. This is how enterprise AI should work.

Easily deploy any model, on any compute, at any scale

Manage your AI compute, costs, and performance through a single intuitive control plane. Bring your own unique AI workloads or leverage our full-stack AI platform to customize them for your needs, with powerful tools for data management, training, evaluation, and more. Then seamlessly orchestrate your workloads across any compute.

Deploy any model in any environment, whether in our SaaS, your cloud, on-premises, or air-gapped. Use Compute Orchestration with any hardware: GPUs, CPUs, or TPUs. Secure, enterprise-grade infrastructure with team access control ensures efficient deployments without compromising the integrity of your environment.

less compute required

inference requests / sec supported

reliability under extreme load

Orchestrate your AI workloads in a unified control plane

Use compute as efficiently as possible

Clarifai optimizes your resources automatically and reduces compute costs using GPU fractioning, batching, autoscaling, spot instances, and more.
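This page does not describe how Clarifai implements GPU fractioning. The sketch below is a hypothetical illustration of the general idea: several models' memory footprints are packed onto shared GPUs using the classic first-fit-decreasing bin-packing heuristic, instead of giving each model a whole GPU. The `Gpu` class, the `pack_models` function, and the memory figures are illustrative assumptions. They are not Clarifai's API or scheduler.

```python
from dataclasses import dataclass, field

# Hypothetical illustration of GPU fractioning; not Clarifai's scheduler or API.


@dataclass
class Gpu:
    name: str
    memory_gb: float
    models: list[str] = field(default_factory=list)
    used_gb: float = 0.0

    def fits(self, need_gb: float) -> bool:
        """True if a model with this memory footprint still fits on this GPU."""
        return self.used_gb + need_gb <= self.memory_gb


def pack_models(models: dict[str, float], gpu_memory_gb: float) -> list[Gpu]:
    """Share GPUs among models using first-fit-decreasing bin packing."""
    gpus: list[Gpu] = []
    # Place the largest models first; this tends to leave less stranded memory.
    for model, need in sorted(models.items(), key=lambda kv: kv[1], reverse=True):
        if need > gpu_memory_gb:
            raise ValueError(f"{model} needs {need} GB, more than one GPU has")
        # Reuse the first GPU with room; open a new GPU only when none fits.
        target = next((g for g in gpus if g.fits(need)), None)
        if target is None:
            target = Gpu(name=f"gpu-{len(gpus)}", memory_gb=gpu_memory_gb)
            gpus.append(target)
        target.models.append(model)
        target.used_gb += need
    return gpus


if __name__ == "__main__":
    # Illustrative footprints in GB for five hypothetical models on 24 GB GPUs.
    demand = {"llm-7b": 16, "embedder": 2, "classifier": 1.5, "detector": 4, "ocr": 3}
    for gpu in pack_models(demand, gpu_memory_gb=24):
        print(gpu.name, gpu.models, f"{gpu.used_gb}/{gpu.memory_gb} GB")
```

With these made-up numbers, the five models fit on two GPUs rather than five. Production schedulers also weigh compute contention and latency targets, not just memory.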

Deploy on any hardware or environment

Seamlessly deploy models on any CPU, GPU, or accelerator, whether in our SaaS, your own (BYO) cloud, on-premises, or an air-gapped environment.

Maintain security and flexibility

Deploy into your VPC or on-premises Kubernetes clusters without opening inbound ports, VPC peering, or custom IAM roles.

Manage All Your AI Compute

Clarifai can manage any combination of compute clusters through a single cloud control plane, whether they're deployed in our SaaS cloud, your cloud VPC, or your bare-metal clusters.

For our most security-conscious customers, our control plane can be deployed into the same cluster used for compute, allowing for fully self-hosted deployments, even in air-gapped environments.

Go From Prototype to Production More Quickly

Configure servers into node pools and compute clusters to handle different workload needs across teams. Bring the convenience of serverless autoscaling to any compute.
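The cluster-and-nodepool hierarchy can be pictured as a small data model. In this minimal sketch, each compute cluster lives in one environment and groups nodepools. Each nodepool has one accelerator type and min/max node bounds, and a `min_nodes` of 0 stands for serverless-style scale-to-zero. All class names, fields, and labels here (`ComputeCluster`, `Nodepool`, `find_nodepools`, `"nvidia-h100"`) are hypothetical and do not reflect Clarifai's actual configuration schema.

```python
from dataclasses import dataclass

# Hypothetical data model for illustration; field names are not Clarifai's API.


@dataclass(frozen=True)
class Nodepool:
    name: str
    accelerator: str  # illustrative label, e.g. "cpu" or "nvidia-a10g"
    min_nodes: int    # 0 lets an idle pool scale to zero, serverless-style
    max_nodes: int

    def __post_init__(self) -> None:
        if not 0 <= self.min_nodes <= self.max_nodes:
            raise ValueError(f"{self.name}: need 0 <= min_nodes <= max_nodes")


@dataclass(frozen=True)
class ComputeCluster:
    name: str
    environment: str  # illustrative label, e.g. "saas", "vpc", "on-prem"
    nodepools: tuple[Nodepool, ...]


def find_nodepools(clusters: list[ComputeCluster], accelerator: str) -> list[tuple[str, str]]:
    """Return (cluster, nodepool) name pairs that offer the requested accelerator."""
    return [
        (cluster.name, pool.name)
        for cluster in clusters
        for pool in cluster.nodepools
        if pool.accelerator == accelerator
    ]


if __name__ == "__main__":
    clusters = [
        ComputeCluster("team-a-saas", "saas", (
            Nodepool("cpu-pool", "cpu", min_nodes=0, max_nodes=4),
            Nodepool("a10g-pool", "nvidia-a10g", min_nodes=0, max_nodes=2),
        )),
        ComputeCluster("onprem-dc1", "on-prem", (
            Nodepool("h100-pool", "nvidia-h100", min_nodes=1, max_nodes=8),
        )),
    ]
    print(find_nodepools(clusters, "nvidia-h100"))
```

Keeping one accelerator type per nodepool makes placement a simple lookup. Teams with different workload needs can then get separate pools within one cluster.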

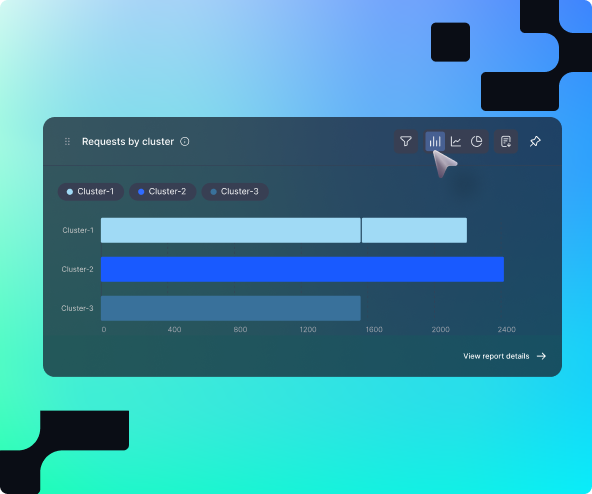

Unified view to monitor your performance, costs, and usage across all your deployments. Manage your AI spend across teams and projects.

Save time with a robust UI, SDK, and CLI that streamline building and configuring models. Deploy your own models or hundreds of pretrained, out-of-the-box models at the push of a button.

Compute orchestration allows you to efficiently run any AI workload

Run any inference workload in Clarifai. Select a popular model from our community, build one using Clarifai's tools, or upload your own, and we'll package it into a secure container and manage its dependencies. Clusters and nodepools let you centrally manage any compute: access shared SaaS compute instantly, deploy dedicated SaaS instances at the push of a button, or connect your own VPC or on-prem compute with a simple Helm install.

Customize each model deployment with settings tailored to the workload, from sandbox to high-throughput production. Clarifai orchestrates all of your workloads, automatically optimizing compute efficiency based on inference demand and your autoscaling settings. Monitor costs and performance from a single-pane-of-glass Control Center.
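Scaling on inference demand within per-deployment bounds can be illustrated with a generic target-tracking rule. The rule computes enough replicas that each one handles roughly a target number of in-flight requests, then clamps that count to the deployment's minimum and maximum. This is a minimal sketch under that assumption. Clarifai's actual autoscaling policy is not described on this page, and `desired_replicas` and its parameters are hypothetical.

```python
import math

# Hypothetical target-tracking rule; Clarifai's actual autoscaler is not described here.


def desired_replicas(inflight_requests: int, target_per_replica: int,
                     min_replicas: int, max_replicas: int) -> int:
    """Replicas needed so each handles about target_per_replica in-flight requests."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if not 0 <= min_replicas <= max_replicas:
        raise ValueError("need 0 <= min_replicas <= max_replicas")
    needed = math.ceil(inflight_requests / target_per_replica)
    # Clamp to the deployment's bounds; min_replicas=0 allows scale-to-zero when idle.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    # Sandbox may scale to zero; production always keeps two warm replicas.
    for label, lo, hi in [("sandbox", 0, 2), ("production", 2, 20)]:
        for load in (0, 50, 500):
            print(label, load, desired_replicas(load, 8, lo, hi))
```

Real autoscalers typically also smooth the demand signal and wait through a cooldown before scaling down, so replica counts do not oscillate with short bursts.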

Why Clarifai Platform

Clarifai’s end-to-end, full-stack enterprise AI platform lets you build and run your AI workloads faster. With over a decade of experience supporting millions of custom models and billions of operations for the largest enterprises and governments, Clarifai pioneered compute innovations like custom scheduling, batching, GPU fractioning, and autoscaling. With compute orchestration, Clarifai now empowers users to efficiently run any model, anywhere, at any scale.

Build & Deploy Faster

Quickly build, deploy, and share AI at scale. Standardize workflows and improve efficiency, allowing teams to launch production AI in minutes.

Reduce Development Costs

Eliminate the duplicate infrastructure and licensing costs of teams running siloed custom solutions, and standardize and centralize AI for easy access.

Oversight & Security

Ensure you’re building AI responsibly with integrated security, guardrails, and role-based access that control which data and IP are exposed to and used with AI.

Integrate with your existing AI stack

Scale your AI with confidence

Clarifai was built to simplify how developers and teams create, share, and run AI at scale

Establish an AI Operating Model and get out of prototype and into production

Build a RAG system in 4 lines of code

Make sense of the jargon around Generative AI with our glossary

Build your next AI app, test and tune popular LLMs, and much more.