Enterprise-Grade H100 Hosting for AI Models

Run GPT-OSS-120B, custom models, and more on NVIDIA H100s with benchmark-leading performance.

GPU SHOWCASE

NVIDIA H100. Scale without limits.

Power your AI workloads with the latest NVIDIA GPUs on Clarifai. Optimized for large-scale inference, reasoning, and AI agents.

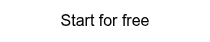

NVIDIA H100

The proven workhorse of modern AI, H100 GPUs power today’s largest inference fleets worldwide. With 80 GB of HBM3 memory, 3.35 TB/s of memory bandwidth, and nearly 2 PFLOPS of dense FP8 tensor compute (SXM variant), H100s offer a rock-solid balance of speed, cost, and availability. Backed by a mature software ecosystem and global supply, they’re the reliable backbone for enterprise LLM deployment.
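The memory and bandwidth figures above translate into a quick sizing check. This is a back-of-the-envelope sketch, not a Clarifai tool: it assumes weights dominate memory, reserves a hypothetical 20% headroom for KV cache and activations, and treats single-stream decoding as limited by reading the active weights once per generated token (for mixture-of-experts models, only the active parameters count). The 70B model and its precisions are placeholders.

```python
# Back-of-the-envelope sizing for one H100. Model sizes and precisions used
# below are illustrative assumptions, not measurements.

H100_MEMORY_GB = 80.0       # HBM3 capacity quoted above
H100_BANDWIDTH_TB_S = 3.35  # memory bandwidth quoted above (1 TB = 1e12 bytes)


def weight_footprint_gb(params_billion: float, bits_per_param: float) -> float:
    """Memory for the weights alone (excludes KV cache and activations)."""
    return params_billion * 1e9 * bits_per_param / 8 / 1e9


def fits_on_one_gpu(params_billion: float, bits_per_param: float,
                    headroom: float = 0.2) -> bool:
    """True if the weights fit while reserving `headroom` of memory (assumed 20%)."""
    usable_gb = H100_MEMORY_GB * (1.0 - headroom)
    return weight_footprint_gb(params_billion, bits_per_param) <= usable_gb


def decode_ceiling_tokens_per_s(active_params_billion: float,
                                bits_per_param: float) -> float:
    """Single-stream upper bound if each new token must read all active weights once."""
    bytes_per_token = active_params_billion * 1e9 * bits_per_param / 8
    return H100_BANDWIDTH_TB_S * 1e12 / bytes_per_token


if __name__ == "__main__":
    # A hypothetical dense 70B-parameter model at three precisions.
    for bits in (16, 8, 4):
        print(f"70B @ {bits}-bit: {weight_footprint_gb(70, bits):.0f} GB, "
              f"fits={fits_on_one_gpu(70, bits)}, "
              f"ceiling ~{decode_ceiling_tokens_per_s(70, bits):.0f} tok/s")
```

Batching amortizes each weight read across many concurrent requests, so aggregate throughput on a real deployment can far exceed this single-stream ceiling.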

PROVEN PERFORMANCE

Fastest and cheapest GPU inference. Independently verified.

Clarifai's GPT-OSS-120B deployment sets the standard for large-model inference on GPUs. In benchmarks by Artificial Analysis, our hosted model outpaces other GPU-based providers and nearly rivals ASIC specialists.

Benchmarked metrics: output throughput (tokens/sec), time to first answer token, and blended price per million tokens.
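The three metrics combine into what a user actually pays and waits. This sketch assumes a 3:1 input-to-output weighting for the blended price (the ratio Artificial Analysis describes for its blended figure; verify against their methodology) and approximates response time as time to first answer token plus generation time. The prices and speeds in the example are placeholders, not measured results.

```python
# Relating the three headline metrics. Weights and example values are assumptions.

def blended_price_per_million(input_price: float, output_price: float,
                              input_weight: float = 3.0,
                              output_weight: float = 1.0) -> float:
    """Weighted average USD per 1M tokens; default assumes a 3:1 input:output mix."""
    return (input_weight * input_price + output_weight * output_price) / (
        input_weight + output_weight)


def estimated_response_seconds(ttft_s: float, output_tokens: int,
                               tokens_per_s: float) -> float:
    """Rough wall-clock time: wait for the first answer token, then stream the rest."""
    return ttft_s + output_tokens / tokens_per_s


if __name__ == "__main__":
    # Placeholder numbers, not benchmark results.
    print(blended_price_per_million(input_price=1.0, output_price=5.0))   # 2.0
    print(estimated_response_seconds(ttft_s=0.5, output_tokens=1000,
                                     tokens_per_s=500.0))                  # 2.5
```

Because input tokens dominate the assumed mix, a provider's input price moves the blended figure three times as much as its output price.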

CLARIFAI ADVANTAGE

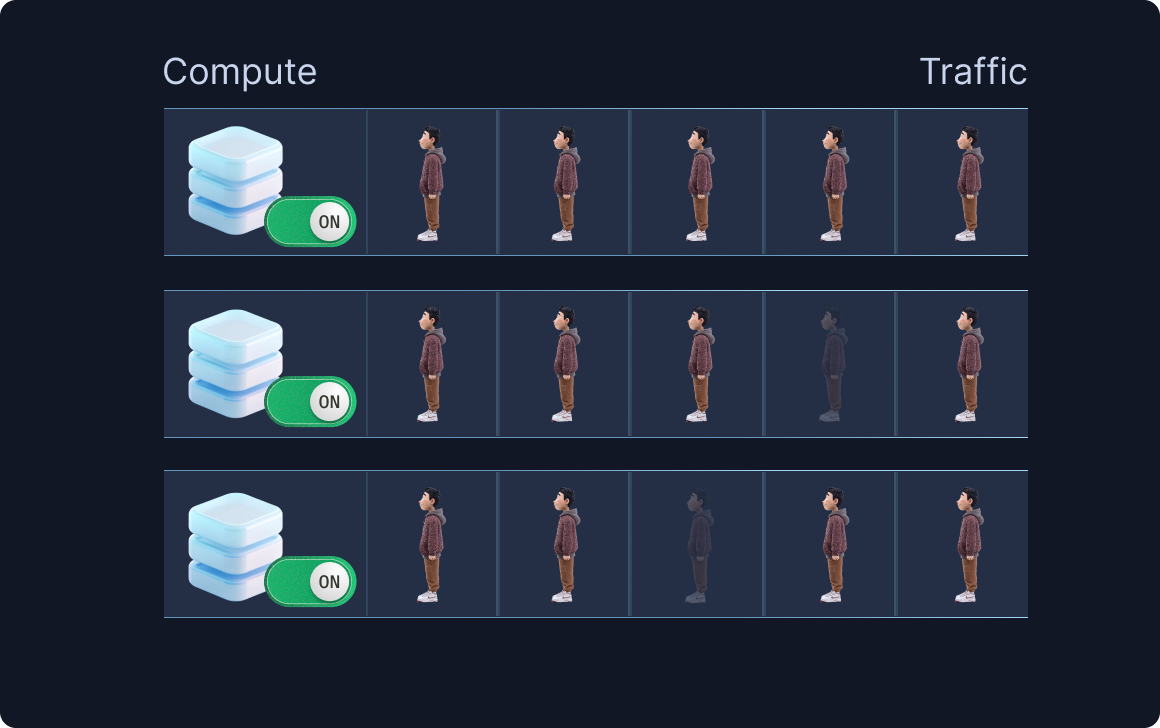

Not just GPU rental—full workload orchestration

Most providers stop at raw compute. Clarifai goes further with Compute Orchestration—the engine that makes your GPUs work harder, cost less, and scale seamlessly.

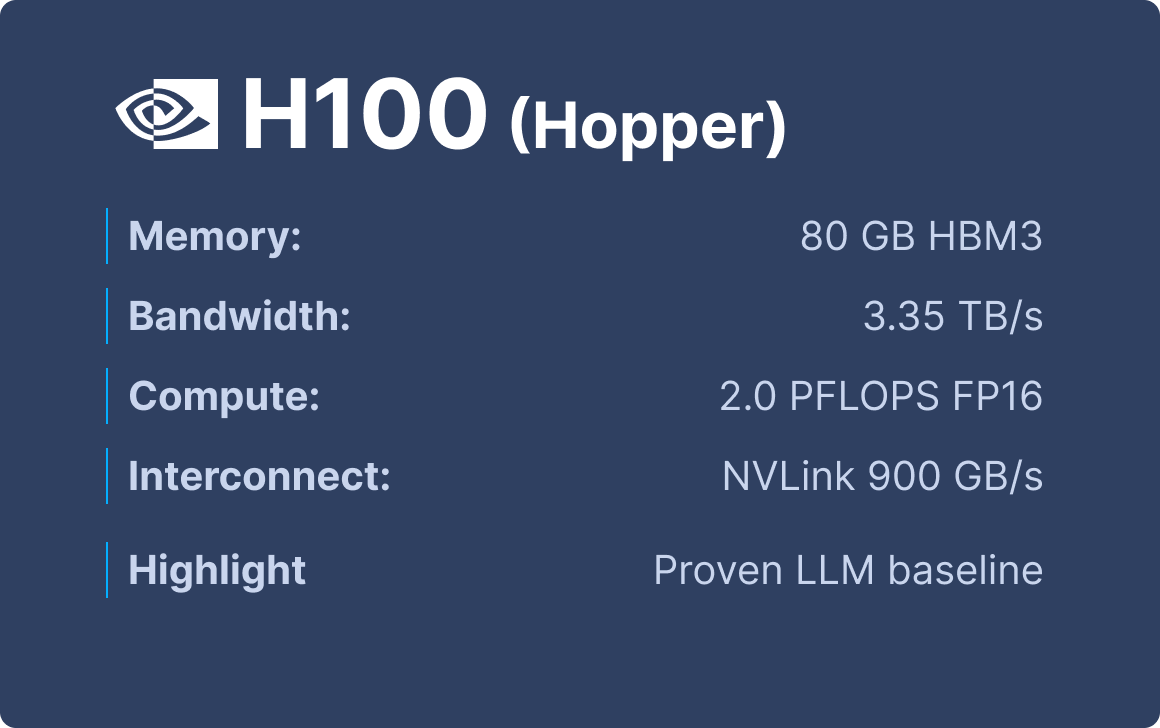

Smart Autoscaling

Scale up for peak demand and down to zero when idle — with traffic-based load balancing.
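One common way to get this behavior is a target-tracking autoscaler paired with least-outstanding-requests routing. The sketch below illustrates that general technique, not Clarifai's implementation; `AutoscalerConfig` and its fields are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoscalerConfig:
    # Hypothetical knobs for illustration; not Clarifai configuration fields.
    target_inflight_per_replica: int = 8  # concurrent requests one replica should carry
    min_replicas: int = 0                 # 0 allows scale-to-zero when idle
    max_replicas: int = 10                # cap to bound cost at peak demand


def desired_replicas(inflight_requests: int, cfg: AutoscalerConfig) -> int:
    """Target tracking: size the fleet so load per replica stays near the target."""
    if inflight_requests <= 0:
        return cfg.min_replicas  # idle traffic drops to the floor, possibly zero
    needed = math.ceil(inflight_requests / cfg.target_inflight_per_replica)
    return max(cfg.min_replicas, min(cfg.max_replicas, needed))


def pick_replica(inflight_by_replica: dict[str, int]) -> str:
    """Traffic-based routing: send the next request to the least-busy replica."""
    if not inflight_by_replica:
        raise RuntimeError("no replicas running; the next request needs a cold start")
    return min(inflight_by_replica, key=inflight_by_replica.get)


if __name__ == "__main__":
    cfg = AutoscalerConfig()
    for load in (0, 5, 20, 200):
        print(load, "in-flight ->", desired_replicas(load, cfg), "replicas")
    print("route to:", pick_replica({"replica-1": 7, "replica-2": 2, "replica-3": 5}))
```

Production autoscalers usually add cooldown windows so replica counts don't flap on bursty traffic, and scaling to zero trades idle cost for a cold start on the next request.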

GPU Fractioning

Run multiple models or workloads on a single GPU for 2-4x higher utilization.
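At its core, GPU fractioning is a packing problem. As a rough illustration (not Clarifai's scheduler), the sketch below packs hypothetical workloads onto 80 GB GPUs by memory footprint using first-fit decreasing, then compares memory utilization against giving each workload its own GPU. It ignores compute and bandwidth contention, which a real scheduler must also account for.

```python
# Illustrative only: packs workloads onto GPUs by memory footprint using
# first-fit decreasing. Workload names and sizes are hypothetical.

GPU_MEMORY_GB = 80.0  # H100 capacity


def pack_first_fit_decreasing(footprints_gb: dict[str, float],
                              gpu_memory_gb: float = GPU_MEMORY_GB) -> list[list[str]]:
    """Place each workload, largest first, on the first GPU with room for it."""
    gpus: list[list[str]] = []   # workload names assigned to each GPU
    free: list[float] = []       # remaining memory on each GPU
    for name, size in sorted(footprints_gb.items(), key=lambda kv: kv[1], reverse=True):
        if size > gpu_memory_gb:
            raise ValueError(f"{name} needs {size} GB, more than one GPU holds")
        for i, remaining in enumerate(free):
            if size <= remaining:
                gpus[i].append(name)
                free[i] -= size
                break
        else:  # no existing GPU had room: open a new one
            gpus.append([name])
            free.append(gpu_memory_gb - size)
    return gpus


def memory_utilization(footprints_gb: dict[str, float], gpu_count: int,
                       gpu_memory_gb: float = GPU_MEMORY_GB) -> float:
    """Fraction of total GPU memory actually occupied by workloads."""
    return sum(footprints_gb.values()) / (gpu_count * gpu_memory_gb)


if __name__ == "__main__":
    workloads = {"model-a": 30, "model-b": 25, "model-c": 20, "model-d": 15}
    packed = pack_first_fit_decreasing(workloads)
    print("dedicated:", len(workloads), "GPUs,",
          f"{memory_utilization(workloads, len(workloads)):.0%} memory used")
    print("fractioned:", len(packed), "GPUs,",
          f"{memory_utilization(workloads, len(packed)):.0%} memory used", packed)
```

First-fit decreasing is a simple heuristic, not an optimal packer, but it shows why co-locating small workloads raises utilization.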

Cross-Cloud + On-Prem Flexibility

Deploy anywhere: AWS, Azure, GCP, or your own data center, all managed from one control plane.

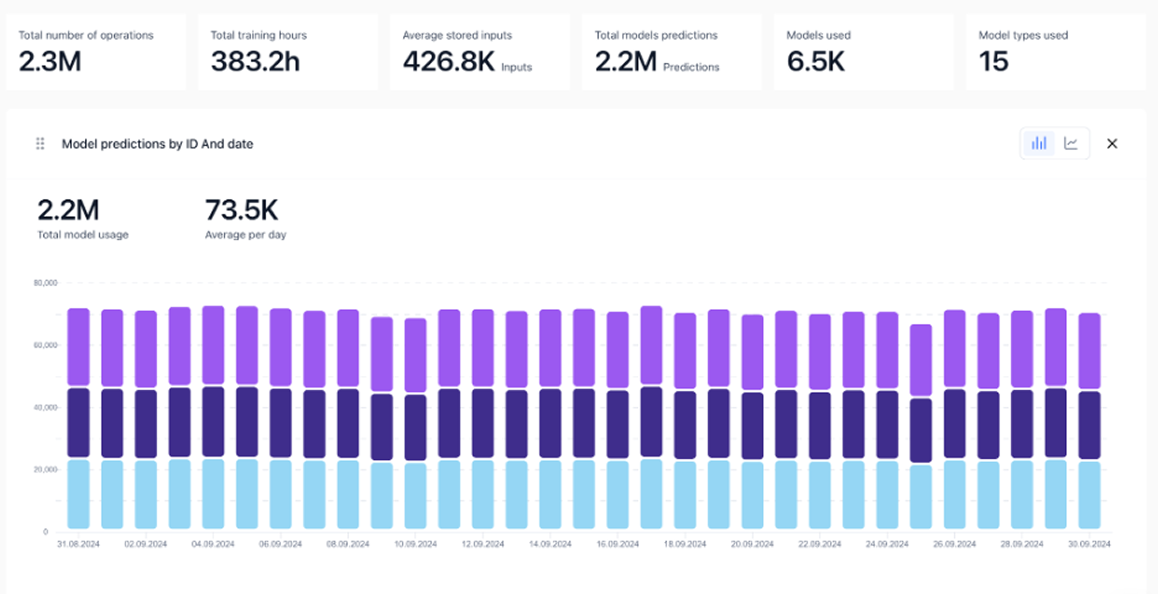

Unified Control & Governance

Monitor usage, optimize costs, and enforce enterprise-grade security policies from a single dashboard.

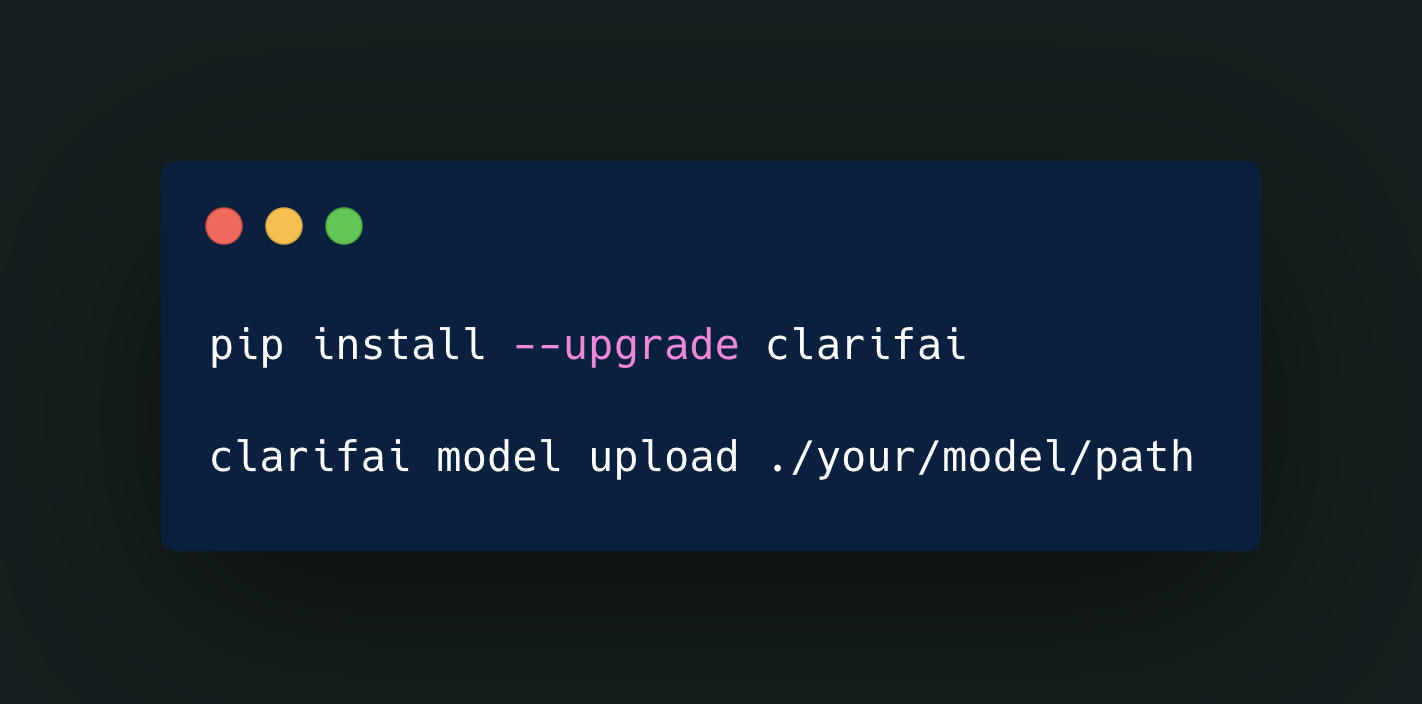

Seamless Model Deployment

Spin up GPT-OSS-120B, third-party models, or your own custom models in minutes with Clarifai's SDKs and UIs.

Figure: Output Speed vs Price (29 Sep 25)