Automated Data Labeling

Automate Your Data Engine

Streamline your labeling tasks with AI-powered automation and efficient human review workflows.

Automate data labeling with AI models and human review

Data is growing rapidly, while human capacity is fixed or barely growing. Labeling datasets manually is resource-intensive and requires significant investment in annotators. Humans are also error-prone, and long, repetitive tasks lead to mistakes. Manual labeling often takes weeks or months, which makes it slow to scale and slow to produce large datasets.

AI-assisted labeling tools decrease label creation time by 75%, while auto annotation helps you cut human costs by 50%. Auto annotation workflows combined with unsupervised or semi-supervised data engines speed model development by up to 100x. Manage large datasets with fully integrated vector search and an enterprise-grade AI platform.

cycle time improvement

lower human costs or more

scale to larger datasets

Build AI datasets with confidence

Clarifai was built to simplify how developers and teams create, share, and run AI at scale.

Ingest individual inputs or archives

Auto-label data as you ingest it, using customizable ontologies. Image, video, and text formats are fully supported.

Index your inputs for search

Auto-index your input data using customizable embedding vectors for semantic similarity search and easier dataset management.
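As a rough illustration of how semantic similarity search over embedding vectors works in general, here is a minimal Python sketch. It is not the Clarifai API: the `index` dictionary, the input IDs, and the three-dimensional vectors are made up for the example, and a real system would use model-generated embeddings with a dedicated vector index.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def search(index, query, top_k=2):
    """Return the top_k (input_id, score) pairs, most similar first."""
    scored = [(input_id, cosine_similarity(vec, query))
              for input_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; real ones would come from an embedding model.
index = {
    "cat_photo": [0.9, 0.1, 0.0],
    "dog_photo": [0.8, 0.3, 0.1],
    "invoice_scan": [0.0, 0.1, 0.95],
}

results = search(index, [1.0, 0.2, 0.0], top_k=2)
for input_id, score in results:
    print(f"{input_id}: {score:.3f}")
```

Inputs whose vectors point in a similar direction to the query rank first, which is what lets visually or semantically related items surface together when you curate a dataset.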

Manage datasets for labeling

Create, version and manage your datasets for data labeling, model training and evaluation. Export your data via SDK.

Faster supervised labeling with AI Assist

Standardize across popular models and frameworks to foster collaboration and innovation


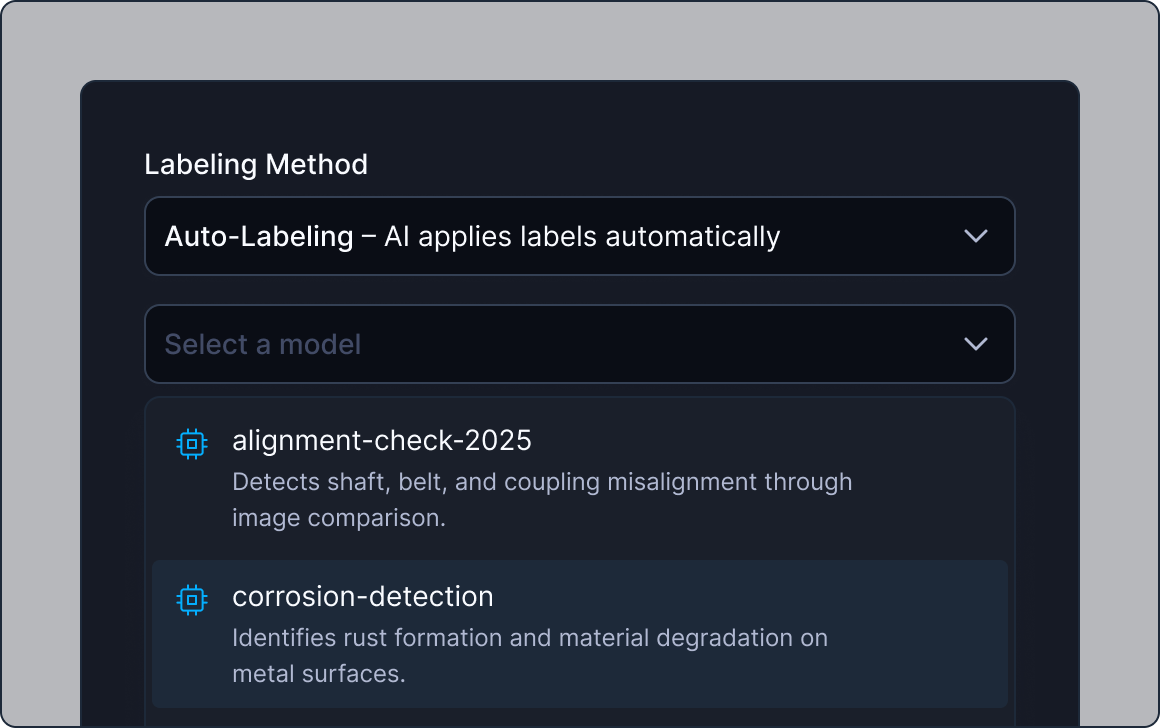

Automate your data labeling

Automate labeling with AI models, including models you upload or custom-train for your task
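One common way to pair automated labeling with human review is confidence thresholding: predictions the model is sure about are accepted automatically, and the rest are queued for an annotator. The sketch below assumes that pattern; the `Prediction` class, the `route_predictions` helper, and the 0.9 threshold are hypothetical and are not taken from the Clarifai platform.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    input_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_predictions(predictions, auto_accept_threshold=0.9):
    """Split predictions into auto-accepted labels and a human review queue."""
    accepted, needs_review = [], []
    for prediction in predictions:
        if prediction.confidence >= auto_accept_threshold:
            accepted.append(prediction)
        else:
            needs_review.append(prediction)
    return accepted, needs_review


# Hypothetical model output for three inputs.
predictions = [
    Prediction("img-1", "cat", 0.97),
    Prediction("img-2", "dog", 0.62),
    Prediction("img-3", "cat", 0.91),
]

accepted, needs_review = route_predictions(predictions)
print("auto-labeled:", [p.input_id for p in accepted])
print("human review:", [p.input_id for p in needs_review])
```

Raising the threshold sends more items to reviewers and lowers the risk of wrong automatic labels; lowering it does the opposite.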


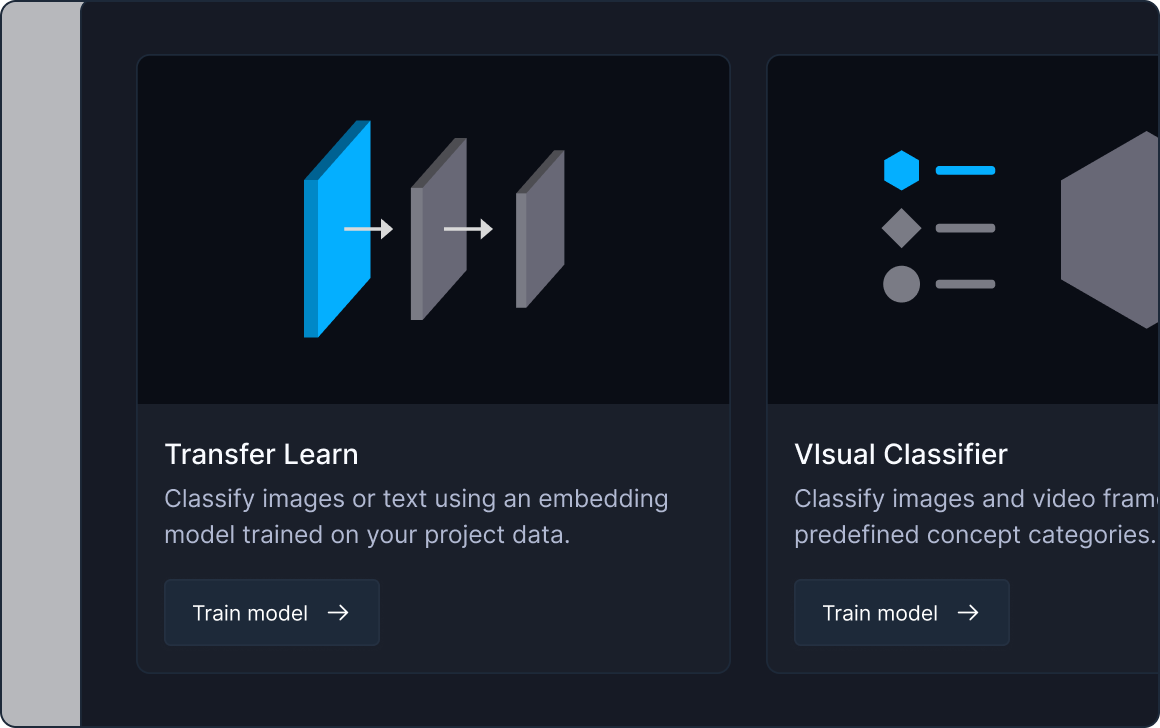

One-click custom model training



Powerful semi-supervised auto annotation



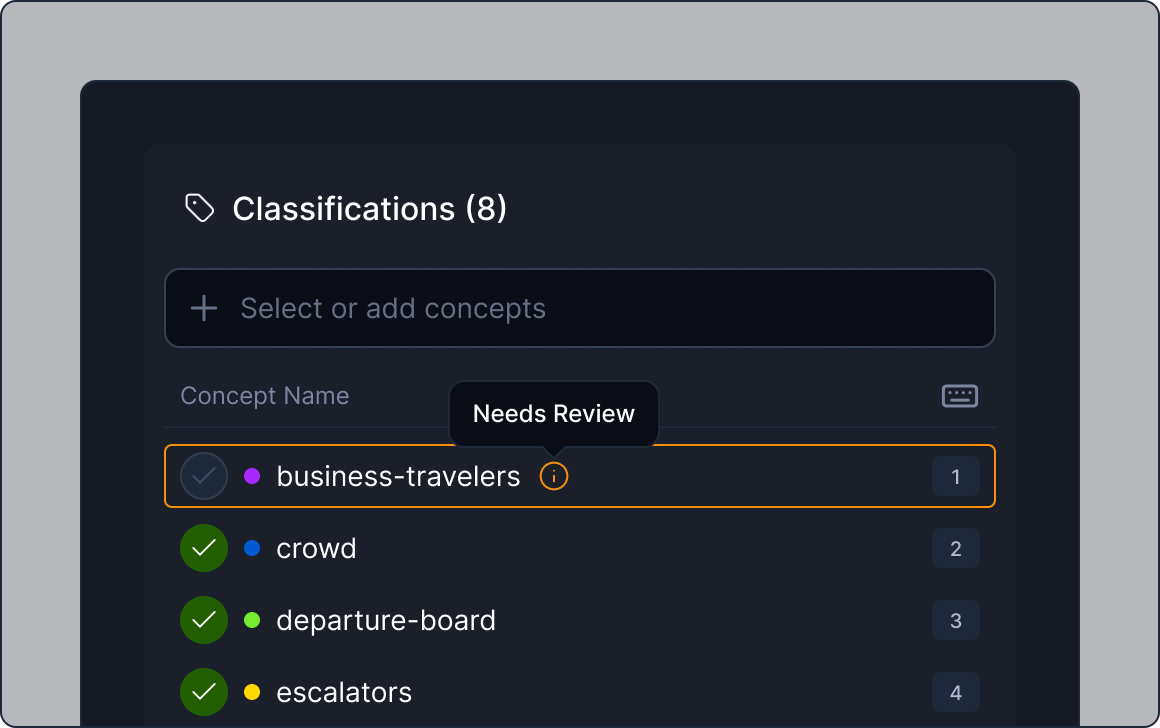

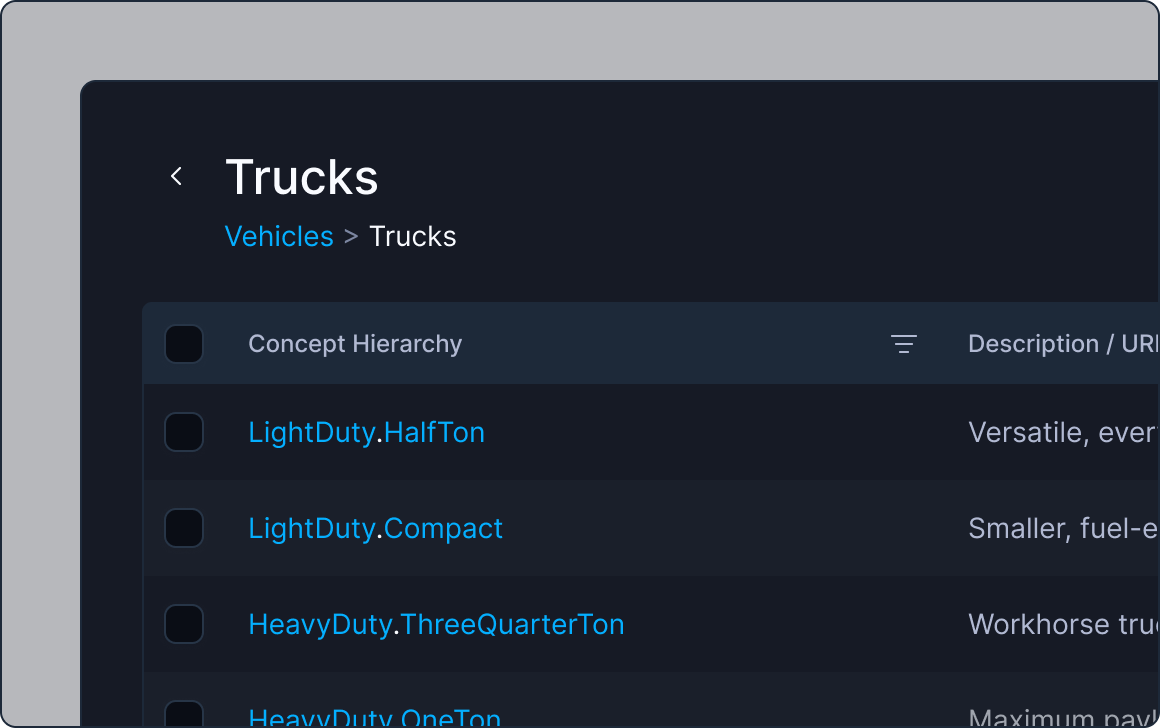

Support advanced hierarchical ontologies

Customizable labeling ontologies, including advanced hierarchical ontologies for complex use cases.
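To make the idea of a hierarchical ontology concrete, here is a small Python sketch that stores each concept's parent and expands a specific label into its full chain of ancestors. The concept names and the `PARENT` mapping are invented for illustration, and the sketch assumes the hierarchy has no cycles.

```python
# Hypothetical ontology: each concept maps to its parent (None for the root).
PARENT = {
    "sedan": "car",
    "car": "vehicle",
    "truck": "vehicle",
    "vehicle": None,
}


def with_ancestors(label, parent_of):
    """Return the label followed by every ancestor up to the root."""
    path = []
    current = label
    while current is not None:
        path.append(current)
        current = parent_of.get(current)  # unknown labels have no parent
    return path


print(with_ancestors("sedan", PARENT))  # ['sedan', 'car', 'vehicle']
print(with_ancestors("truck", PARENT))  # ['truck', 'vehicle']
```

With this structure an annotator only picks the most specific concept, and the broader labels follow from the hierarchy.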

Reduction in per-label time for humans

“AI Assist and Auto Annotation helped us accelerate our model development using the same techniques that the best Silicon Valley companies are using.”

Supported Types

Data labeling types

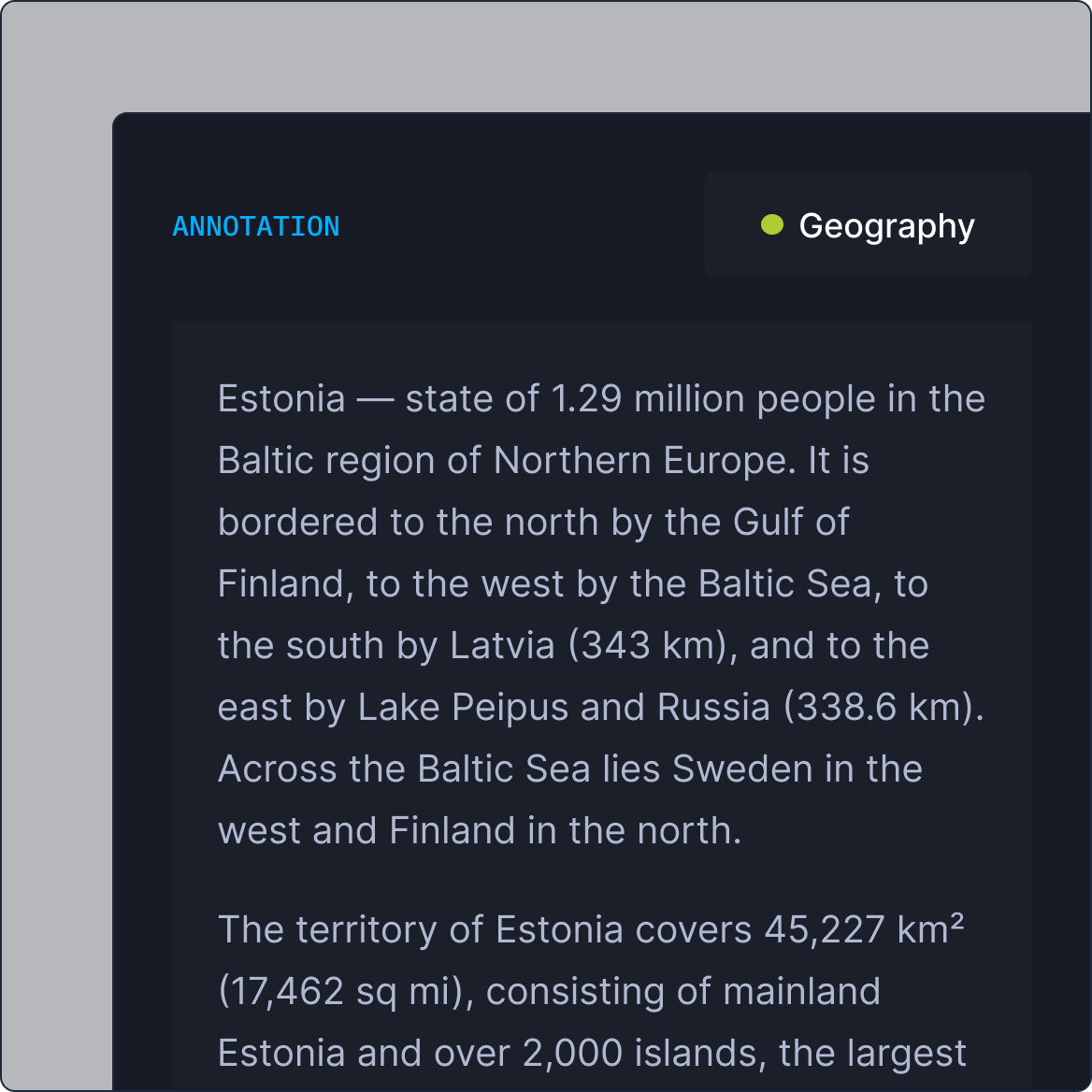

Assign a single, specific label to an image or text input.

Associate an image or text input with multiple labels simultaneously to build robust models for inputs that belong to more than one class.
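The difference between single-label and multi-label classification shows up in how the training target is encoded. The Python sketch below uses a made-up concept list and a hypothetical `encode_labels` helper: a single-label input yields a vector with exactly one 1, while a multi-label input can set several positions at once.

```python
CONCEPTS = ["beach", "dog", "sunset"]  # hypothetical label set


def encode_labels(labels, concepts):
    """Encode a list of labels as a 0/1 vector aligned with `concepts`."""
    label_set = set(labels)
    unknown = label_set - set(concepts)
    if unknown:
        raise ValueError(f"labels not in concept list: {sorted(unknown)}")
    return [1 if concept in label_set else 0 for concept in concepts]


single = encode_labels(["dog"], CONCEPTS)             # one label per input
multi = encode_labels(["beach", "sunset"], CONCEPTS)  # several labels per input

print(single)  # [0, 1, 0]
print(multi)   # [1, 0, 1]
```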

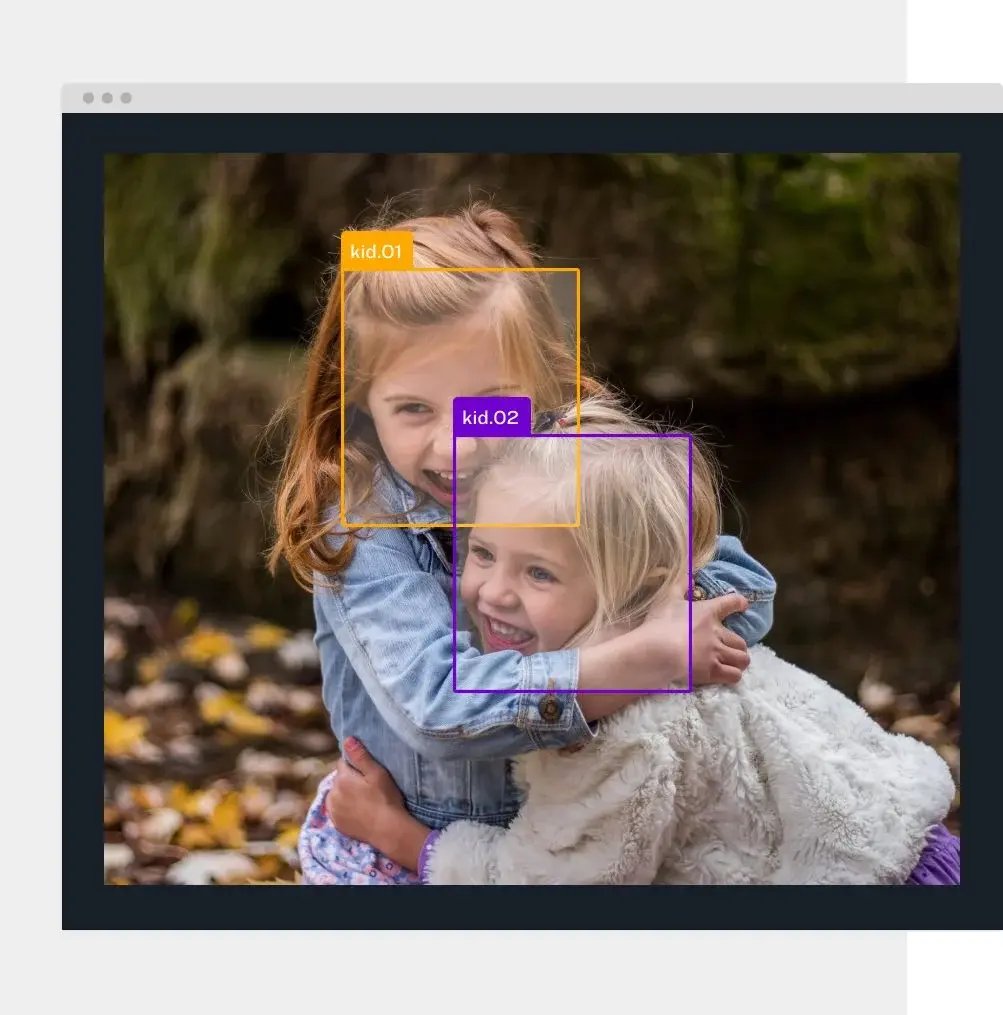

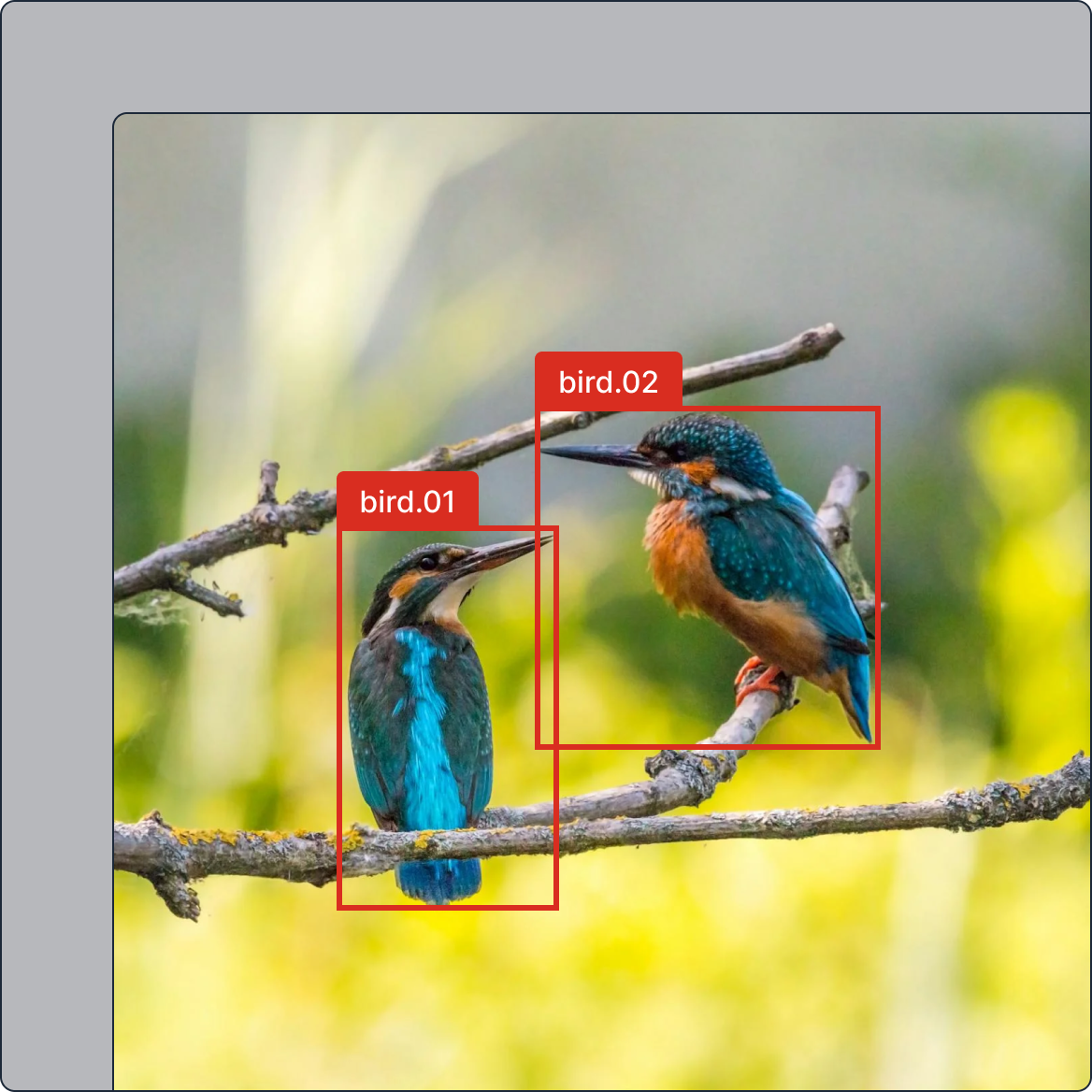

Localize and identify objects within an image by labeling 2D bounding boxes. Object detection models power many computer vision applications that rely on precise localization and classification of objects within images.
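Bounding box labels are often compared using intersection over union (IoU), for example to check how closely a model's predicted box matches a human-drawn one. The sketch below is a generic Python implementation, assuming boxes are given as `(x_min, y_min, x_max, y_max)` tuples; that coordinate convention is an assumption for the example, not a statement about any particular export format.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


human_box = (0, 0, 2, 2)
model_box = (1, 1, 3, 3)
print(round(iou(human_box, model_box), 3))  # 0.143
```

An IoU of 1.0 means the boxes coincide and 0.0 means they do not overlap at all.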

Polygon labels are used in computer vision to provide precise pixel-level annotations of objects within images. Power custom semantic and instance segmentation models.
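Segmentation training typically needs a per-pixel mask rather than a list of polygon vertices. The Python sketch below shows one simple way to convert a polygon into a binary mask by testing each pixel center with a ray-casting point-in-polygon check. The function names and the tiny 4x4 example are illustrative only, and production pipelines would normally use an optimized rasterizer.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: is (x, y) inside the polygon given as (x, y) vertices?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon, width, height):
    """Return a height x width grid of 0/1 values, sampling each pixel center."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


square = [(1, 1), (3, 1), (3, 3), (1, 3)]
mask = polygon_to_mask(square, width=4, height=4)
for row in mask:
    print(row)
```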

Annotate objects or regions of interest within a video sequence over multiple frames. Follow objects across frames using AI-powered tracks.

Integrate with your existing AI stack

Scale your AI with confidence

Clarifai was built to simplify how developers and teams create, share, and run AI at scale.

Establish an AI Operating Model and move from prototype into production

Build a RAG system in 4 lines of code

Make sense of the jargon around Generative AI with our glossary

Build your next AI app, test and tune popular LLMs, and much more.