Large language models have emerged as powerful tools capable of generating human-like text and answering a wide range of questions. These models, such as GPT-4, LLaMA, and PaLM, have been trained on vast amounts of data, allowing them to mimic human-like responses and provide valuable insights. However, it is important to understand that while these language models are incredibly impressive, they are limited to answering questions based on the information on which they were trained.

When faced with questions about information they were not trained on, they either state that they do not have that knowledge or hallucinate plausible-sounding answers. There are two ways to address this gap: fine-tune the LLM on your own data, or provide factual information along with the prompt so that the model can answer based on that information.

We offer a way to leverage the power of Generative AI, specifically an AI model like ChatGPT, to interact with data-rich but traditionally static formats like PDFs. Essentially, we’re trying to make a PDF “conversational,” transforming it from a one-way data source into an interactive platform. There are several reasons why this is both necessary and useful:

Large Language Model Limitations: Models like ChatGPT can only handle a limited amount of text at a time, known as the context window. In the original GPT-3 models’ case, the maximum was 2,048 tokens. If we want the model to reference a large document, like a PDF, we can’t simply feed the entire document into the model at once.

Making AI More Accurate: By providing factual information from the document in the prompt, we can help the model provide more accurate and context-specific responses. This is particularly important when dealing with complex or specialized texts, such as scientific papers or legal documents.

So how do we overcome these challenges? The procedure involves several steps:

1. Loading the Document: The first step is to load the PDF and split it into manageable sections. This is typically done with a PDF-parsing library that extracts the text and a text splitter that divides it into chunks.

2. Creating Embeddings and Vectorization: This is where things get particularly interesting. An ‘embedding’ in AI terms is a way of representing text data as numerical vectors. By creating embeddings for each section of the PDF, we translate the text into a form the AI can work with efficiently: texts with similar meanings end up with vectors that lie close together. These embeddings are then used to create a ‘vector database’, a searchable database where each section of the PDF is represented by its embedding vector.

3. Querying: When a query or question is posed to the system, the same embedding process is applied to the query. The query embedding is then compared with the embeddings in the vector database to find the most relevant sections of the PDF. These sections are passed to ChatGPT along with the question, and the model generates an answer based on this focused, relevant data.

This system allows us to work around the input-length limits of large language models and use them to interact with large documents efficiently and accurately. It reduces “hallucinations” by grounding answers in factual data from the document. The procedure opens up a new realm of possibilities, from aiding research to improving accessibility, making the vast amounts of information stored in PDFs more approachable and usable.
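Steps 2 and 3 can be sketched without any external service. This is a minimal illustration under stated assumptions, not the platform’s implementation: `VectorIndex` is a hypothetical in-memory stand-in for a vector database, vectors are plain Python lists, and search is a brute-force cosine-similarity scan over every stored vector. Production vector databases usually rely on approximate nearest-neighbor indexes instead.

```python
import math
from typing import Any


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class VectorIndex:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._vectors: list[list[float]] = []
        self._metadata: list[dict[str, Any]] = []

    def add(self, vector: list[float], metadata: dict[str, Any]) -> None:
        # Store one section's embedding next to its metadata (source, page, text, ...).
        self._vectors.append(vector)
        self._metadata.append(metadata)

    def search(self, query_vector: list[float], k: int = 3) -> list[tuple[float, dict[str, Any]]]:
        # Brute force: score every stored vector against the query, best match first.
        scored = [
            (cosine_similarity(query_vector, vec), meta)
            for vec, meta in zip(self._vectors, self._metadata)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


# Usage with toy 2-D vectors (real embeddings typically have hundreds or thousands of dimensions).
index = VectorIndex()
index.add([1.0, 0.0], {"source": "a.pdf", "page": 1})
index.add([0.0, 1.0], {"source": "b.pdf", "page": 4})
score, meta = index.search([0.9, 0.1], k=1)[0]
print(meta["source"], round(score, 3))
```

Returning the metadata with each score is what lets a search result report its source and page alongside how similar it is to the query.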

In this article, we will walk through an example of letting the LLM answer questions by providing factual information along with the prompt. So, let’s dive in:

Here we are using 8 documents from the International Crisis Group that cover various causes of conflict, provide detailed analysis, and offer practical solutions to each crisis. You can follow the same steps with your own data. One thing to keep in mind when providing data to the model is that LLMs cannot process arbitrarily large prompts, and they may give faulty answers when prompts are too long.
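One simple way to respect such a limit is to cap how much retrieved text goes into each prompt. The sketch below is an assumption, not the platform’s method: `pack_context` is a hypothetical helper that counts words as a rough proxy for tokens (real limits are counted in tokens by the model’s own tokenizer) and greedily keeps the highest-ranked chunks that still fit.

```python
def pack_context(chunks: list[str], max_words: int) -> list[str]:
    """Greedily keep the highest-ranked chunks whose combined length fits the budget.

    Assumes `chunks` is already sorted from most to least relevant, and uses a
    whitespace word count as a rough stand-in for a token count.
    """
    selected: list[str] = []
    used = 0
    for chunk in chunks:
        n_words = len(chunk.split())
        if used + n_words > max_words:
            continue  # skip a chunk that would overflow; a smaller one may still fit
        selected.append(chunk)
        used += n_words
    return selected


ranked = ["a b c", "d e f g h", "i j"]
print(pack_context(ranked, max_words=5))
```

Skipping an oversized chunk, rather than stopping at the first one that does not fit, lets smaller, lower-ranked chunks use the remaining budget.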

How do we overcome this challenge?

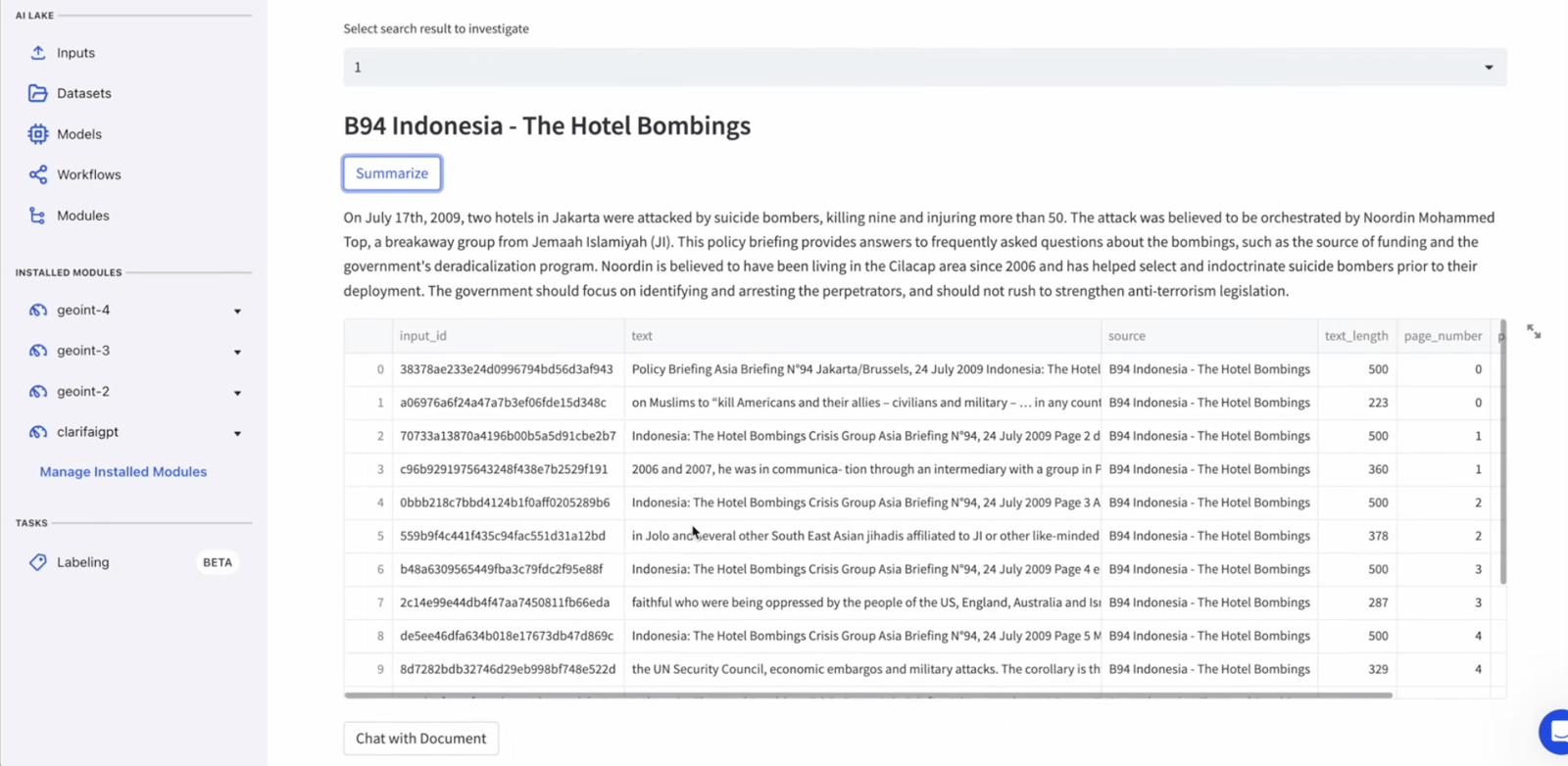

After the PDF files are uploaded, they are split into chunks of 300 words each. Each chunk records its source, page number, chunk index, and text length.
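The chunking step can be sketched as follows, under several assumptions the article does not confirm: each page is chunked separately (chunks never cross a page boundary), chunks do not overlap, the chunk index runs across the whole document, and “text length” is measured in characters. `chunk_pages` is a hypothetical helper. In practice the page texts might come from a PDF library such as pypdf (`PdfReader(path).pages`, then each page’s `extract_text()`), which is not shown here.

```python
def chunk_pages(source: str, pages: list[str], chunk_size: int = 300) -> list[dict]:
    """Split each page's text into consecutive chunks of at most `chunk_size` words."""
    chunks: list[dict] = []
    chunk_index = 0  # assumed to run across the whole document, not restart per page
    for page_number, page_text in enumerate(pages, start=1):
        words = page_text.split()
        for start in range(0, len(words), chunk_size):
            text = " ".join(words[start:start + chunk_size])
            chunks.append({
                "source": source,
                "page": page_number,
                "chunk_index": chunk_index,
                "text_length": len(text),  # characters; the article does not state the unit
                "text": text,
            })
            chunk_index += 1
    return chunks


# Usage: a 650-word page yields chunks of 300, 300, and 50 words.
pages = ["word " * 650, "short page"]
for chunk in chunk_pages("report.pdf", pages):
    print(chunk["page"], chunk["chunk_index"], chunk["text_length"])
```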

Once chunking is done, the platform generates an embedding for each chunk. If you are not familiar with the term, an embedding is a vector that represents the meaning of a given text. Comparing embeddings is a good way to find similar text, which ultimately helps in answering a prompt.
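To make “similar text has similar vectors” concrete, here is a toy sketch. It deliberately swaps in a hashed bag-of-words vector instead of a learned embedding model, so it only captures shared vocabulary, not meaning. `embed` and `cosine` are hypothetical helpers, and the example sentences are made up for illustration.

```python
import math
import re
import zlib


def embed(text: str, dim: int = 1024) -> list[float]:
    """Toy embedding: hashed bag-of-words counts, scaled to unit length.

    A stand-in for a real embedding model; it reflects shared words, not meaning.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # zlib.crc32 is deterministic across runs, unlike Python's built-in str hash.
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    # Inputs from embed() are unit-length (or all zeros), so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


query = embed("terrorism attacks in Indonesia")
related = embed("The report analyses terrorism and recent attacks")
unrelated = embed("Crop prices rose after the drought")
print(cosine(query, related) > cosine(query, unrelated))
```

A real embedding model would also score paraphrases with no words in common as similar, which is why the platform uses learned embeddings rather than word overlap.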

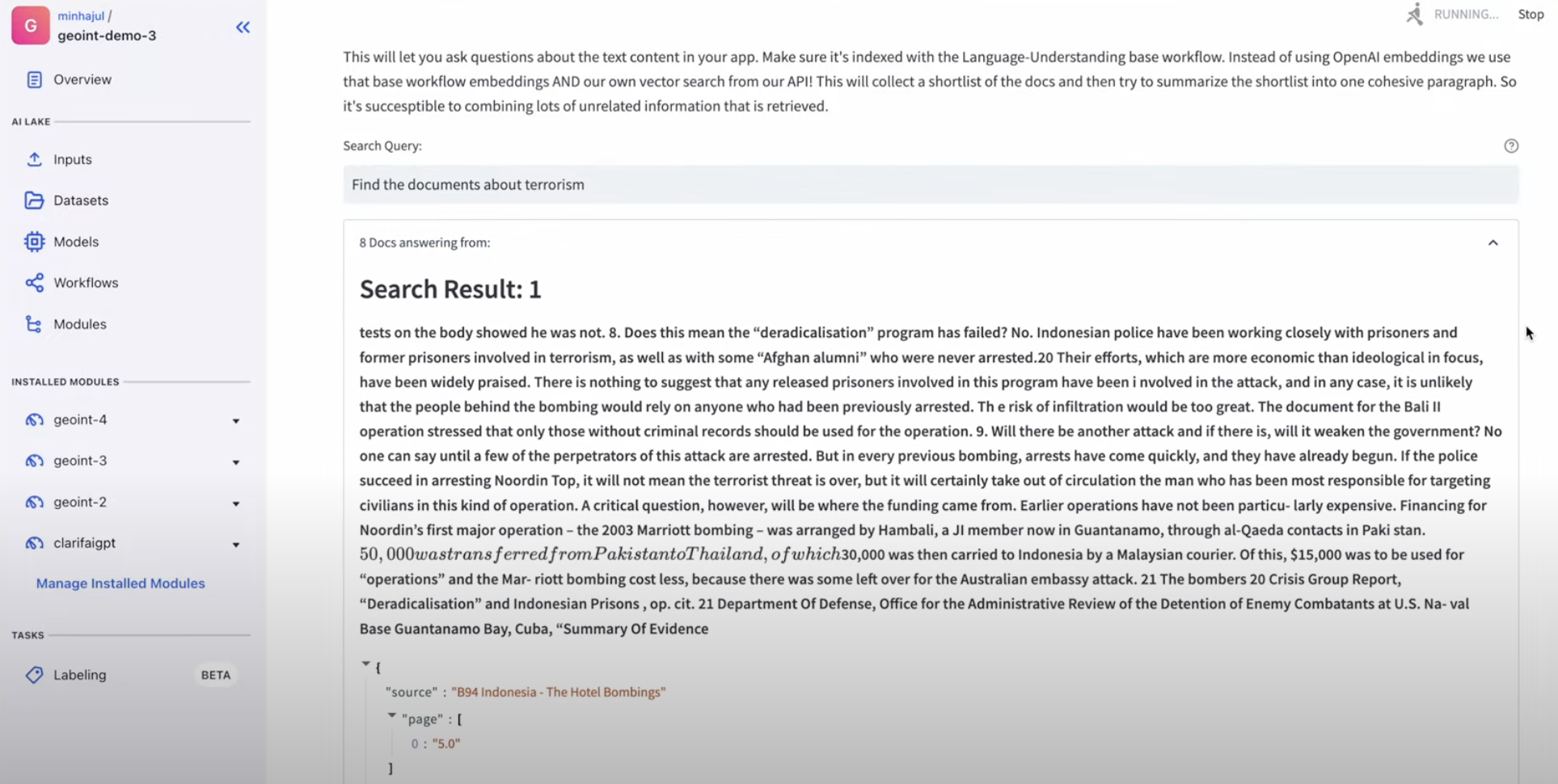

Given the query “Find the documents about terrorism”, the platform first calculates the embedding for that query, compares it with the existing embeddings of the text chunks, and finds the text most relevant to the query.

The results also include the source, the page number, and a similarity score that represents how close the query is to each text chunk. The platform also identifies the people, organizations, locations, timestamps, and other entities present in the text chunks.

Let’s look at one individual, Saefuddin Zuhri, and select a document to examine.

In this instance, we focus on the similarity score and select document 1, which provides a summary and a list of the sources behind that summary.

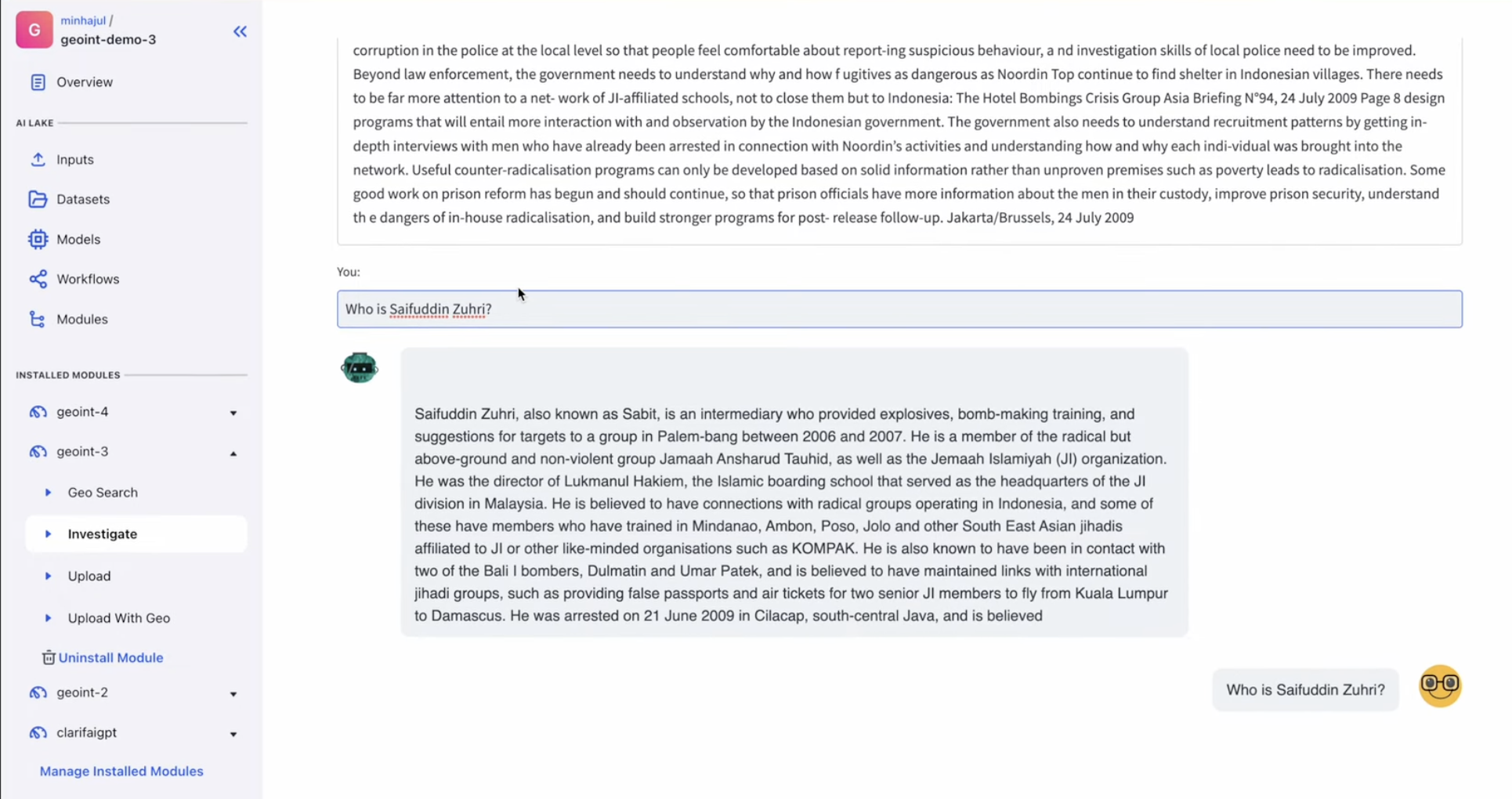

Let’s ask the question “Who is Saefuddin Zuhri?” Behind the scenes, the summarized text above is prepended to the query, so that the model answers only from the factual information given.
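The prepending step can be sketched as a prompt template. The article does not show the platform’s actual prompt, so the wording below is an illustrative assumption. That includes the instruction to say when something is not in the context. `build_prompt` is a hypothetical helper, and the summary passed to it in the usage line is a placeholder.

```python
def build_prompt(context_chunks: list[str], question: str) -> str:
    """Prepend retrieved text to the user's question (hypothetical template)."""
    context = "\n\n".join(chunk.strip() for chunk in context_chunks)
    return (
        "Answer the question using only the context information below. "
        "If the answer is not in the context, say that it is not mentioned "
        "in the context information.\n\n"
        f"Context information:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(["<summary of the selected document>"], "Who is Saefuddin Zuhri?")
print(prompt)
```

Telling the model to admit when the context lacks an answer is one way to produce the kind of refusal this article shows for out-of-context questions. The resulting string would then be sent to the chat model as the user message.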

Here is the model’s answer to a question it previously knew nothing about.

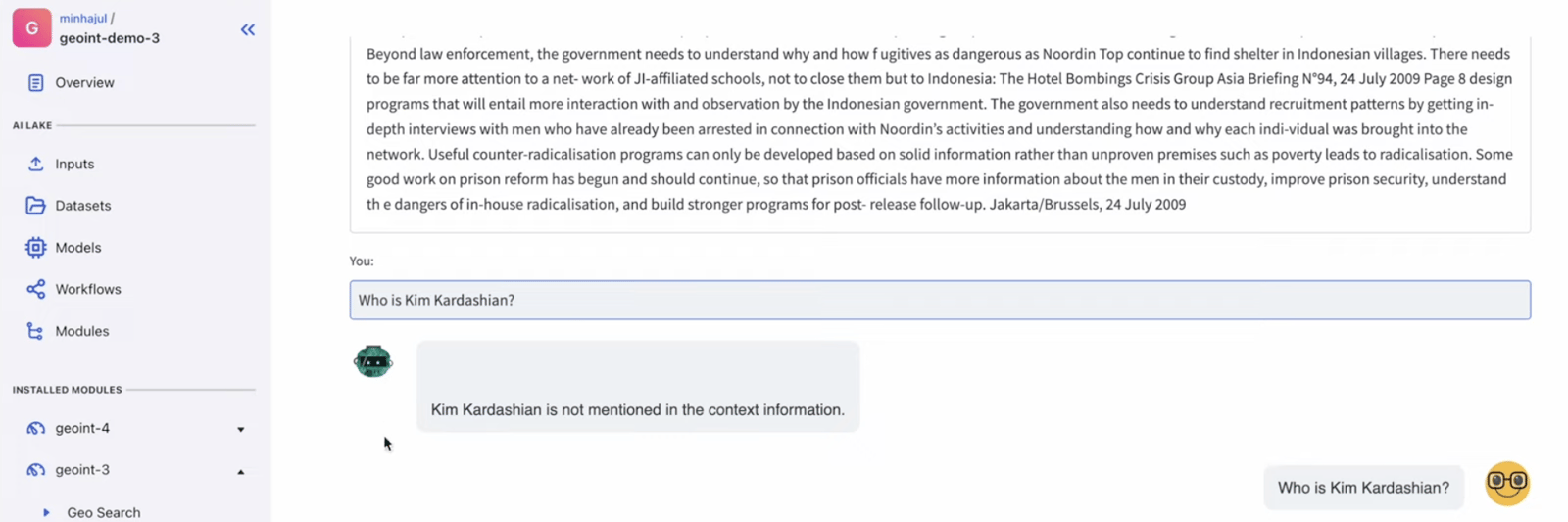

Likewise, if we ask the model a question outside the context of the given data, it simply replies that the answer is not mentioned in the context information.

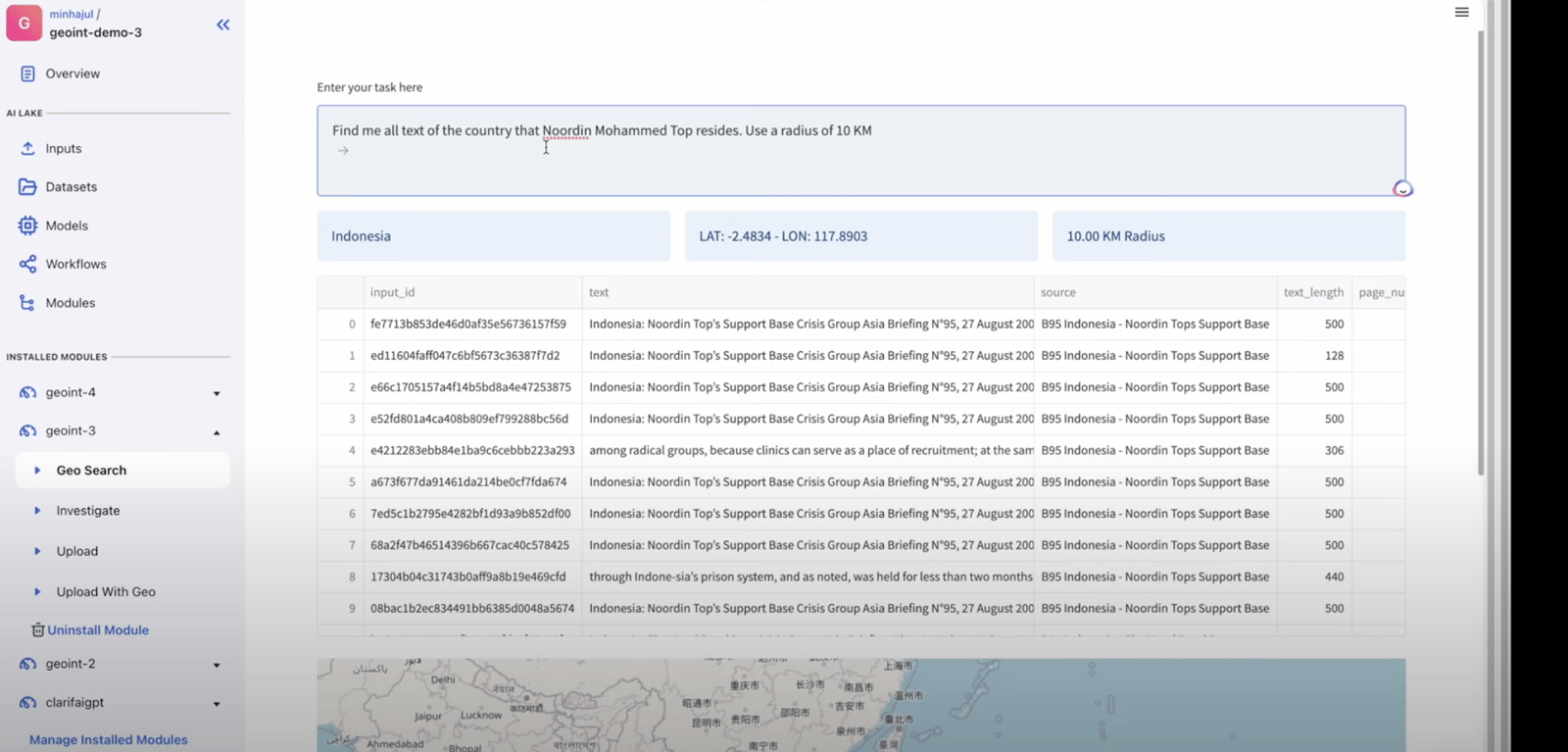

Another important capability of the platform is investigating geographical locations and plotting them on a map. Here is how it works:

Given a query to find where “Noordin Mohammed” resides, using a radius of 10 km, the platform returns a list of the source chunks in which the location data was found.
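The article does not say how the platform performs the radius search. Assuming each location mentioned in a chunk has already been geocoded to latitude/longitude coordinates (geocoding itself is not shown), a radius filter can be sketched with the haversine great-circle distance. `haversine_km`, `within_radius`, and the `places` record layout are hypothetical, and the coordinates in the usage example are synthetic.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    # min() guards against tiny floating-point overshoot above 1.0.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def within_radius(center: tuple[float, float], places: list[dict], radius_km: float = 10.0) -> list[dict]:
    """Keep places (dicts with 'lat' and 'lon') no farther than radius_km from center."""
    lat0, lon0 = center
    return [p for p in places if haversine_km(lat0, lon0, p["lat"], p["lon"]) <= radius_km]


# Synthetic coordinates: "near" is about 5.6 km from the center, "far" about 22 km.
places = [
    {"name": "near", "lat": 0.0, "lon": 0.05, "chunk_index": 3},
    {"name": "far", "lat": 0.0, "lon": 0.2, "chunk_index": 7},
]
print([p["name"] for p in within_radius((0.0, 0.0), places, radius_km=10.0)])
```

Keeping a chunk reference on each place record lets the filtered results point back to the source chunks where each location was found.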

© 2026 Clarifai, Inc.