How to Build an AI Model—Step‑by‑Step Guide

Introduction: Why Building an AI Model Matters Today

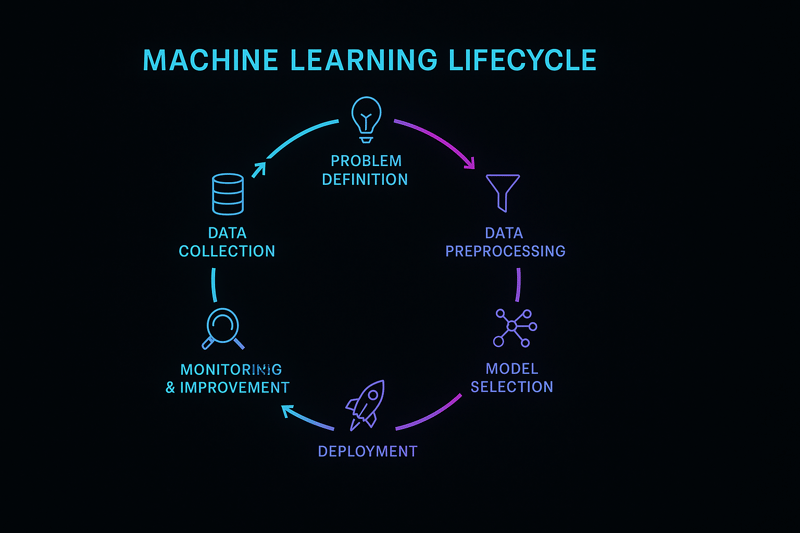

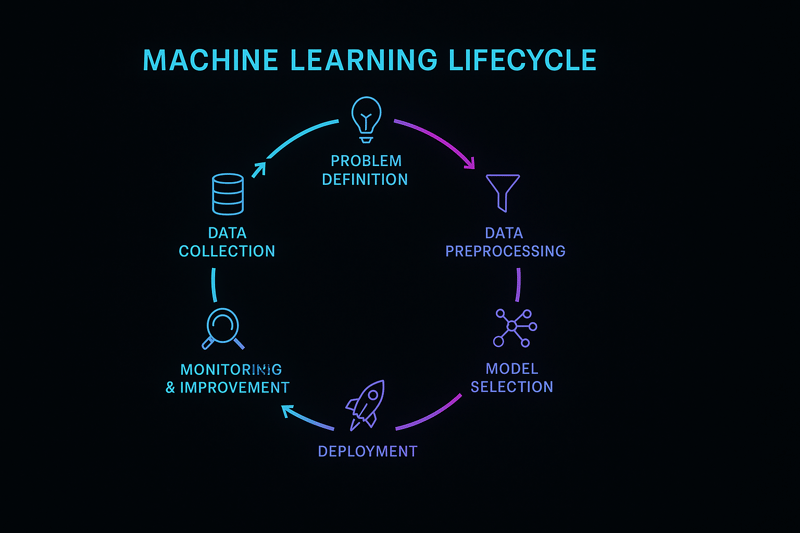

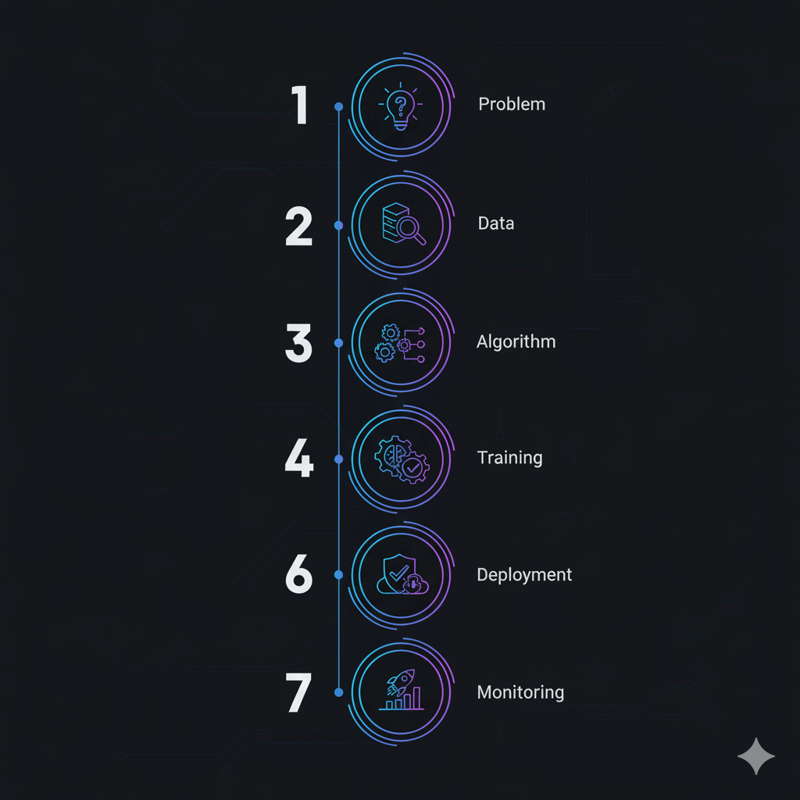

Artificial intelligence has moved from being a buzzword to a critical driver of business innovation, personal productivity, and societal transformation. Companies across sectors are eager to leverage AI for automation, real‑time decision-making, personalized services, advanced cybersecurity, content generation, and predictive analytics. Yet many teams still struggle to move from concept to a functioning AI model. Building an AI model involves more than coding; it requires a systematic process that spans problem definition, data acquisition, algorithm selection, training and evaluation, deployment, and ongoing maintenance. This guide will show you, step by step, how to build an AI model with depth, originality, and an eye toward emerging trends and ethical responsibility.

Quick Digest: What You’ll Learn

- What is an AI model? You’ll learn how AI differs from machine learning and why generative AI is reshaping innovation.

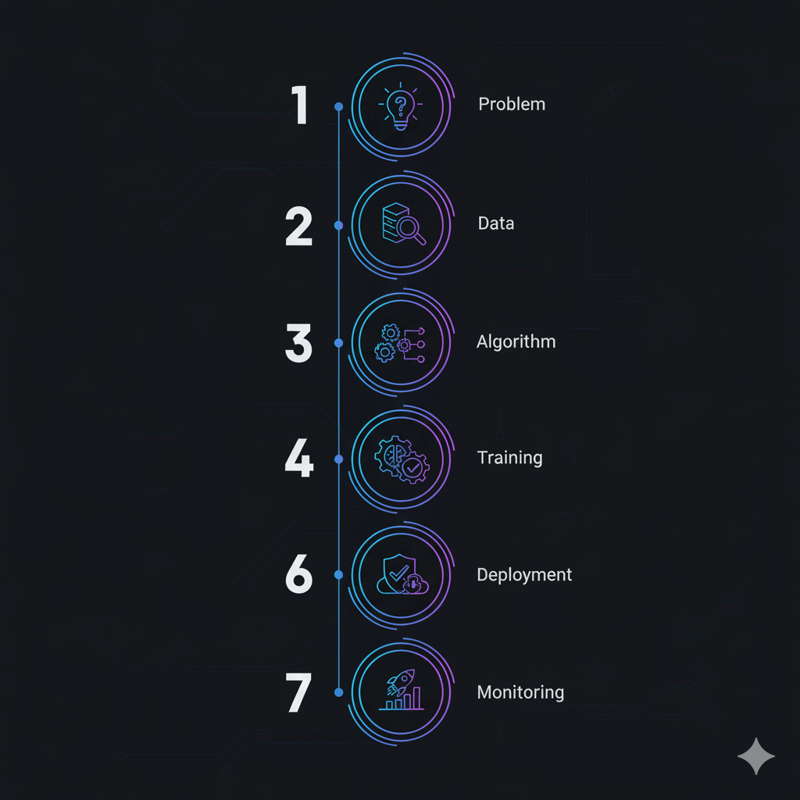

- Step‑by‑step instructions: From defining the problem and gathering data to selecting the right algorithms, training and evaluating your model, deploying it to production, and managing it over time.

- Expert insights: Each section includes a bullet list of expert tips and stats drawn from research, industry leaders, and case studies to give you deeper context.

- Creative examples: We’ll illustrate complex concepts with clear examples—from training a chatbot to implementing edge AI on a factory floor.

Quick Summary—How do you build an AI model?

Building an AI model involves defining a clear problem, collecting and preparing data, choosing appropriate algorithms and frameworks, training and tuning the model, evaluating its performance, deploying it responsibly, and continuously monitoring and improving it. Along the way, teams should prioritize data quality, ethical considerations, and resource efficiency while leveraging platforms like Clarifai for compute orchestration and model inference.

Defining Your Problem: The Foundation of AI Success

How do you identify the right problem for AI?

The first step in building an AI model is to clarify the problem you want to solve. This involves understanding the business context, user needs, and specific objectives. For instance, are you trying to predict customer churn, classify images, or generate marketing copy? Without a well‑defined problem, even the most advanced algorithms will struggle to deliver value.

Start by gathering input from stakeholders, including business leaders, domain experts, and end users. Formulate a clear question and set SMART goals: specific, measurable, attainable, relevant, and time‑bound. Also determine the type of AI task (classification, regression, clustering, reinforcement learning, or generation) and identify any regulatory requirements (such as healthcare privacy rules or financial compliance laws).

Expert Insights

- Failure to plan hurts outcomes: Many AI projects fail because teams jump into model development without a cohesive strategy. Establish a clear objective and align it with business metrics before gathering data.

- Consider domain constraints: A problem in healthcare might require HIPAA compliance and explainability, while a finance project may demand robust security and fairness auditing.

- Collaborate with stakeholders: Involving domain experts early helps ensure the problem is framed correctly and relevant data is available.

Creative Example: Predicting Equipment Failure

Imagine a manufacturing company that wants to reduce downtime by predicting when machines will fail. The problem is not “apply AI,” but “forecast potential breakdowns in the next 24 hours based on sensor data, historical logs, and environmental conditions.” The team defines a classification task: predict “fail” or “not fail.” SMART goals might include reducing unplanned downtime by 30 % within six months and achieving 90 % predictive accuracy. Clarifai’s platform can help coordinate the data pipeline and deploy the model in a local runner on the factory floor, ensuring low latency and data privacy.
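A minimal sketch of how the "fail within the next 24 hours" target could be built from timestamped sensor readings and a failure log. The function name `label_readings` and the convention of timestamps as hours since a reference point are assumptions for illustration, not part of any Clarifai API.

```python
from bisect import bisect_right

def label_readings(reading_times, failure_times, horizon_hours=24.0):
    """Label each reading 1 if a failure occurs within `horizon_hours` after it, else 0.

    Times are floats in hours since a common reference point (an assumption).
    """
    failures = sorted(failure_times)
    labels = []
    for t in reading_times:
        # Index of the first logged failure strictly after this reading.
        i = bisect_right(failures, t)
        fails_soon = i < len(failures) and failures[i] - t <= horizon_hours
        labels.append(1 if fails_soon else 0)
    return labels

# Made-up timeline: a reading every 12 hours, one failure logged at hour 50.
print(label_readings([0, 12, 24, 36, 48, 60], [50]))  # -> [0, 0, 0, 1, 1, 0]
```

Even this toy timeline yields far more "not fail" than "fail" labels. Real failure data is usually heavily imbalanced, which is why recall on the "fail" class deserves attention alongside overall accuracy.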

Collecting and Preparing Data: Building the Right Dataset

Why does data quality matter more than algorithms?

Data is the fuel of AI. No matter how advanced your algorithm is, poor data quality will lead to poor predictions. Your dataset should be relevant, representative, clean, and well‑labeled. The data collection phase includes sourcing data, handling privacy concerns, and preprocessing.

- Identify data sources: Internal databases, public datasets, sensors, social media, web scraping, and user input can all provide valuable information.

- Ensure data diversity: Aim for diversity to reduce bias. Include samples from different demographics, geographies, and use cases.

- Clean and preprocess: Handle missing values, remove duplicates, correct errors, and normalize numerical features. For supervised tasks, label data accurately; unsupervised tasks such as clustering need no labels, but the same cleaning still applies.

- Split data: Divide your dataset into training, validation, and test sets to evaluate performance fairly.

- Privacy and compliance: Use anonymization, pseudonymization, or synthetic data when dealing with sensitive information. Techniques like federated learning enable model training across distributed devices without transmitting raw data.
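The cleaning and splitting steps above can be sketched in plain Python. Two ordering choices matter: duplicates are removed before splitting so the same record cannot land in both training and test sets, and the imputation and scaling statistics are learned from the training split only, so nothing about the test data leaks into training. Median imputation and z‑score standardization are assumed choices (among several common ones), and the function names are hypothetical.

```python
import random
import statistics

def dedupe(rows):
    """Drop exact duplicate rows, keeping the first occurrence."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(row)
        if key not in seen:
            seen.add(key)
            unique.append(list(row))
    return unique

def split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle with a fixed seed, then cut into train/validation/test lists."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_frac)
    n_val = int(len(rows) * val_frac)
    return rows[n_test + n_val:], rows[n_test:n_test + n_val], rows[:n_test]

def fit_preprocessor(train):
    """Learn per-column median (for imputation) and mean/std (for scaling) from training rows only."""
    params = []
    for c in range(len(train[0])):
        median = statistics.median(r[c] for r in train if r[c] is not None)
        filled = [median if r[c] is None else r[c] for r in train]
        std = statistics.pstdev(filled) or 1.0  # avoid dividing by zero on constant columns
        params.append((median, statistics.fmean(filled), std))
    return params

def transform(rows, params):
    """Impute missing values (None) with the training median, then standardize."""
    return [[((med if v is None else v) - mean) / std for v, (med, mean, std) in zip(r, params)]
            for r in rows]

# Made-up rows [feature_a, feature_b]; every fifth feature_b is missing.
raw = [[float(i), None if i % 5 == 0 else 10.0 * i] for i in range(20)]
raw.append([1.0, 10.0])  # exact duplicate of row 1
data = dedupe(raw)
train, val, test = split(data)
params = fit_preprocessor(train)
train_x, val_x, test_x = (transform(part, params) for part in (train, val, test))
print(len(train_x), len(val_x), len(test_x))
```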

Expert Insights

- Quality > quantity: Netguru warns that poor data quality and inadequate quantity are common reasons AI projects fail. Collect enough data, but prioritize quality.

- Data grows fast: The AI Index 2025 notes that training compute doubles every five months and dataset sizes double every eight months. Plan your storage and compute infrastructure accordingly.

- Edge processing: In edge AI deployments, data may be processed locally on low‑power devices like the Raspberry Pi, as shown in the Stream Analyze manufacturing case study. Local processing can enhance security and reduce latency.

Creative Example: Constructing an Image Dataset

Suppose you’re building an AI system to classify flowers. You could collect images from public datasets, upload your own photos, and ask community contributors to share pictures from different regions. Then, label each image according to its species. Remove duplicates and ensure images are balanced across classes. Finally, augment the data by rotating and flipping images to improve robustness. For privacy‑sensitive tasks, consider generating synthetic examples using generative adversarial networks (GANs).
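The flip-and-rotate augmentation from the flower example can be illustrated on a toy image stored as a list of pixel rows. This is a sketch only: a real pipeline would use an image library, and arbitrary-angle rotations (rather than the 90° turns shown) need interpolation. The helper names are hypothetical.

```python
def hflip(img):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in img]

def vflip(img):
    """Mirror an image top to bottom."""
    return img[::-1]

def rot90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def augment(img):
    """Return the original image plus flipped and rotated copies, all with the same label."""
    return [img, hflip(img), vflip(img), rot90(img)]

img = [[1, 2, 3],
       [4, 5, 6]]
for variant in augment(img):
    print(variant)
```

These transforms are label-preserving only when the class does not depend on orientation. That holds for most flower photos, but not for tasks such as telling a handwritten 6 from a 9.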

Choosing the Right Algorithm and Architecture

How do you decide between machine learning and deep learning?

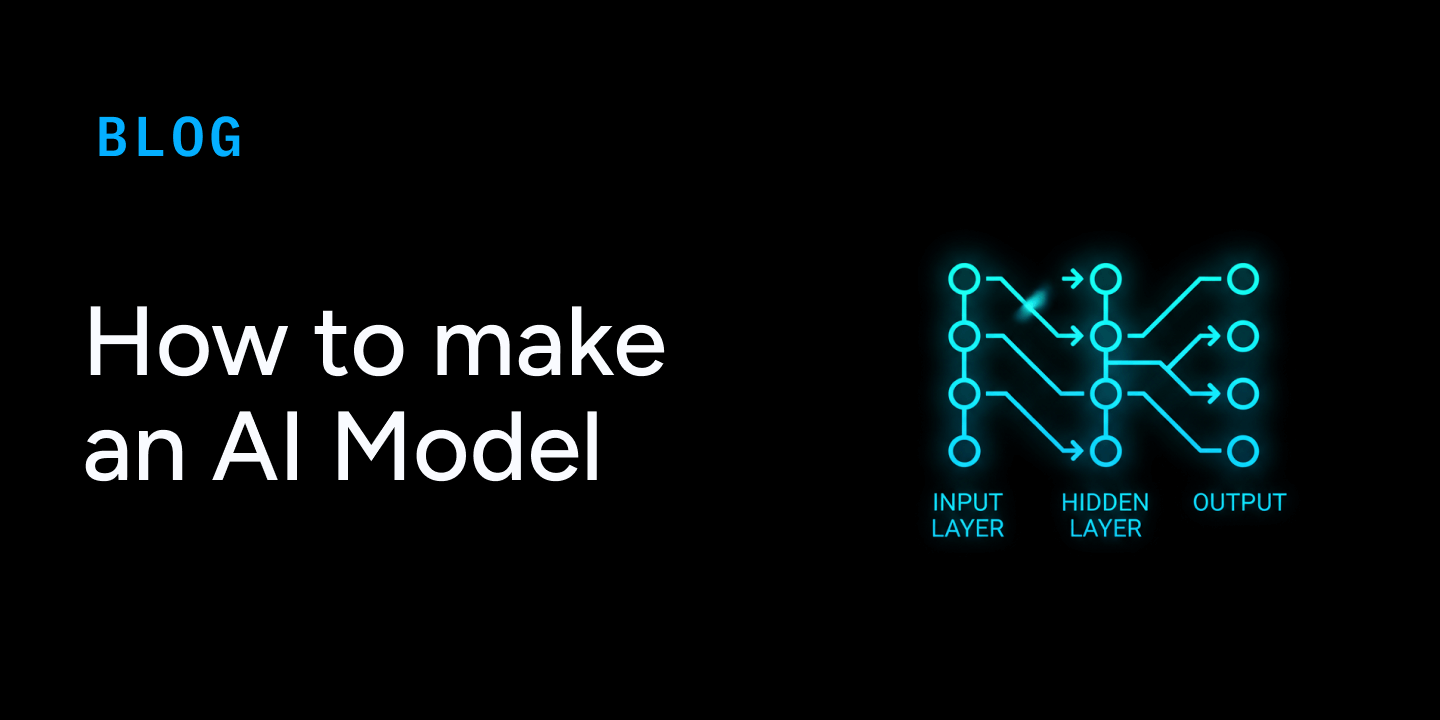

After defining your problem and assembling a dataset, the next step is selecting an appropriate algorithm. The choice depends on data type, task, interpretability requirements, compute resources, and deployment environment.

- Traditional Machine Learning: For small datasets or tabular data, algorithms like linear regression, logistic regression, decision trees, random forests, or support vector machines often perform well and are easy to interpret.

- Deep Learning: For complex patterns in images, speech, or text, convolutional neural networks (CNNs) handle images, recurrent neural networks (RNNs) or transformers process sequences, and deep reinforcement learning optimizes sequential decision‑making tasks.

- Generative Models: For tasks like text generation, image synthesis, or data augmentation, transformers (e.g., GPT‑family), diffusion models, and GANs excel. Generative AI can produce new content and is particularly useful in creative industries.

- Hybrid Approaches: Combine traditional models with neural networks or integrate retrieval‑augmented generation (RAG) to inject current knowledge into generative models.
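To make the RAG idea concrete, here is a minimal sketch of its retrieval half: rank documents by similarity to the query and prepend the best matches to the prompt. Bag-of-words cosine similarity stands in for the learned embeddings and vector databases that real systems use, `build_prompt` and its template are hypothetical, and the generation step (sending the prompt to a model) is omitted.

```python
import math
import re
from collections import Counter

def bow(text):
    """Bag-of-words vector: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = bow(query)
    return sorted(documents, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]

def build_prompt(query, documents, k=2):
    """Prepend retrieved context to the user's question (hypothetical template)."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Made-up knowledge base.
docs = [
    "The return window for electronics is 30 days.",
    "Our stores open at 9am on weekdays.",
    "Refunds for electronics are issued to the original card.",
]
print(build_prompt("How many days do I have to return electronics?", docs))
```

Because the context is fetched at query time, updating the document list updates the model's answers without retraining.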

Expert Insights

- Match models to tasks: Techstack highlights the importance of aligning algorithms with problem types (classification, regression, generative).

- Generative AI capabilities: MIT Sloan stresses that generative models can outperform traditional ML in tasks requiring language understanding. However, domain‑specific or privacy‑sensitive tasks may still rely on classical approaches.

- Explainability: If decisions must be explained (e.g., in healthcare or finance), choose interpretable models (decision trees, logistic regression) or use explainable AI tools (SHAP, LIME) with complex architectures.

Creative Example: Picking an Algorithm for Text Classification

Suppose you need to classify customer feedback into categories (positive, negative, neutral). For a small dataset, a Naive Bayes or support vector machine might suffice. If you have large amounts of textual data, consider a transformer‑based classifier like BERT. For domain‑specific accuracy, fine‑tuning a pretrained model on your own data usually yields better results. Clarifai’s model zoo and training pipeline can simplify this process by providing pretrained models and transfer learning options.
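For the small-dataset route, a multinomial Naive Bayes classifier fits in a few lines. This from-scratch sketch is meant to show what the model computes (a log prior per class plus add-one-smoothed log likelihoods per word); in practice you would more likely use a library implementation such as scikit-learn's `MultinomialNB`. Whitespace tokenization and the tiny training set are assumptions for illustration.

```python
import math
from collections import Counter, defaultdict

class NaiveBayesText:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing over whitespace tokens."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)       # documents per class, for priors
        self.word_counts = defaultdict(Counter)   # class -> word -> count
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            total = sum(self.word_counts[label].values())
            score = math.log(n / n_docs)          # log prior
            for w in text.lower().split():
                if w not in self.vocab:
                    continue                      # ignore words never seen in training
                count = self.word_counts[label][w]
                score += math.log((count + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Tiny made-up training set, for illustration only.
texts = [
    "great service love it", "love the fast delivery",
    "terrible support very slow", "slow refund terrible experience",
    "order arrived on tuesday", "the package arrived",
]
labels = ["positive", "positive", "negative", "negative", "neutral", "neutral"]
model = NaiveBayesText().fit(texts, labels)
print(model.predict("love the service"))  # -> positive
```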

Selecting Tools, Frameworks and Infrastructure

Which frameworks and tools should you use?

Tools and frameworks enable you to build, train, and deploy AI models efficiently. Choosing the right tech stack depends on your programming language preference, deployment target, and team expertise.

- Programming Languages: Python is the most popular, thanks to its vast ecosystem (NumPy, pandas, scikit‑learn, TensorFlow, PyTorch). R suits statistical analysis; Julia offers high performance; Java and Scala integrate well with enterprise systems.

- Frameworks: TensorFlow, PyTorch, and Keras are leading deep‑learning frameworks. Scikit‑learn offers a rich set of machine‑learning algorithms for classical tasks. H2O.ai provides AutoML capabilities.

- Data Management: Use pandas and NumPy for tabular data, SQL/NoSQL databases for storage, and Spark or Hadoop for large datasets.

- Visualization: Tools like Matplotlib, Seaborn, and Plotly help plot performance metrics. Tableau or Power BI integrate with business dashboards.

- Deployment Tools: Docker and Kubernetes help containerize and orchestrate applications. Flask or FastAPI expose models via REST APIs. MLOps platforms like MLflow and Kubeflow manage model lifecycle.

- Edge AI: For real‑time or privacy‑sensitive applications, use low‑power hardware such as Raspberry Pi or Nvidia Jetson, or specialized chips like neuromorphic processors.

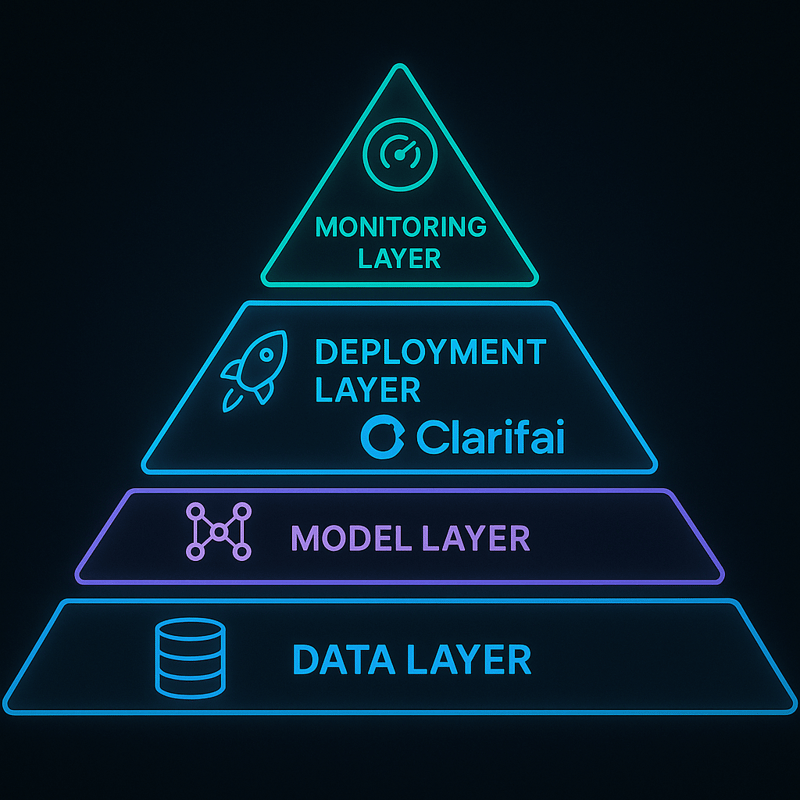

- Clarifai Platform: Clarifai offers model orchestration, pretrained models, workflow editing, local runners, and secure deployment. You can fine‑tune Clarifai models or bring your own models for inference. Clarifai’s compute orchestration streamlines training and inference across cloud, on‑premises, or edge environments.

Expert Insights

- Framework choice matters: Netguru lists TensorFlow, PyTorch, and Keras as leading options with robust communities. Prismetric expands the list to include Hugging Face, Julia, and RapidMiner.

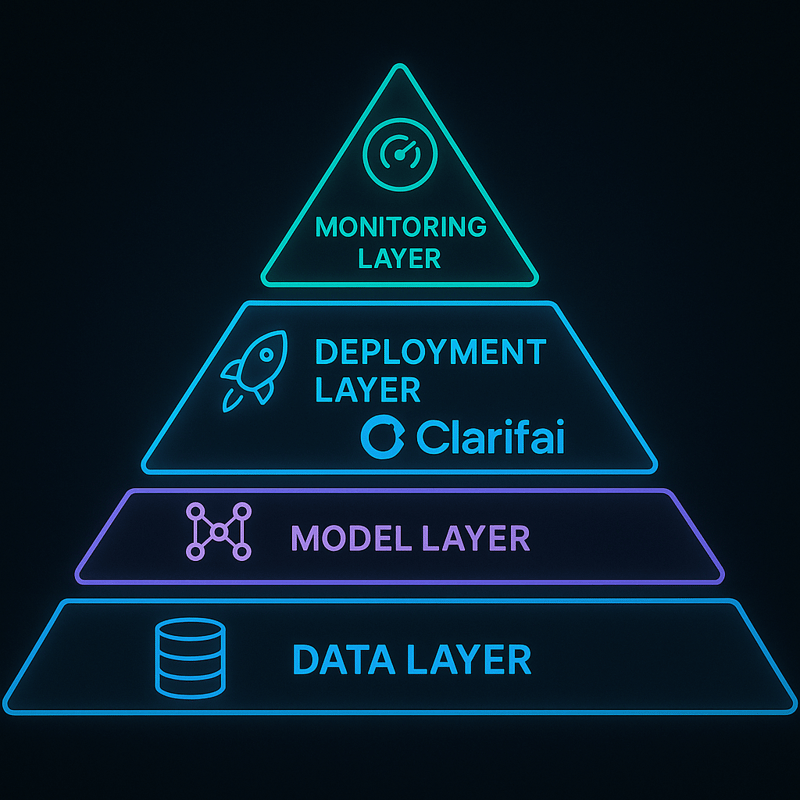

- Multi‑layer architecture: Techstack outlines the five layers of AI architecture: infrastructure, data processing, service, model, and application. Choose tools that integrate across these layers.

- Edge hardware innovations: The 2025 Edge AI report describes specialized hardware for on‑device AI, including neuromorphic chips and quantum processors.

Creative Example: Building a Chatbot with Clarifai

Let’s say you want to create a customer‑support chatbot. You can use Clarifai’s pretrained language models to recognize user intent and generate responses. Use Flask to build an API endpoint and containerize the app with Docker. Clarifai’s platform can handle compute orchestration, scaling the model across multiple servers. If you need on‑device performance, you can run the model on a local runner in the Clarifai environment, ensuring low latency and data privacy.

Training and Tuning Your Model

How do you train an AI model effectively?

Training involves feeding data into your model, calculating predictions, computing a loss, using backpropagation to compute the gradient of that loss with respect to every parameter, and letting an optimizer adjust the parameters accordingly. Key decisions include choosing loss functions (cross‑entropy for classification, mean squared error for regression), optimizers (SGD, Adam, RMSProp), and hyperparameters (learning rate, batch size, epochs).

- Initialize the model: Set up the architecture and initialize weights.

- Feed the training data: Forward propagate through the network to generate predictions.

- Compute the loss: Measure how far predictions are from true labels.

- Backpropagate and update: Compute gradients with backpropagation, then update the weights with the optimizer (e.g., gradient descent).

- Repeat: Iterate for multiple epochs until the model converges.

- Validate and tune: Evaluate on a validation set; adjust hyperparameters (learning rate, regularization strength, architecture depth) using grid search, random search, or Bayesian optimization.

- Avoid over‑fitting: Use techniques like dropout, early stopping, and L1/L2 regularization.
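The loop described in the bullets above can be sketched end to end with logistic regression standing in for a deep network, so that the gradient (what backpropagation computes layer by layer) reduces to one line: for cross-entropy loss, the derivative with respect to the logit is `p - y`. The numbered comments follow the order of the bullets. Full-batch gradient descent, the L2 penalty, the patience-based early stopping rule, and the toy data are all assumptions chosen to keep the sketch small.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def log_loss(w, b, X, y):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    eps, total = 1e-12, 0.0
    for xi, yi in zip(X, y):
        p = min(max(predict_proba(w, b, xi), eps), 1 - eps)
        total -= yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total / len(X)

def train(X_tr, y_tr, X_val, y_val, lr=0.5, l2=0.001, max_epochs=300, patience=10):
    n, d = len(X_tr), len(X_tr[0])
    w, b = [0.0] * d, 0.0                        # 1. initialize parameters
    best_loss, best_w, best_b, stale = math.inf, w[:], b, 0
    for _ in range(max_epochs):                  # 5. repeat for several epochs
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X_tr, y_tr):
            err = predict_proba(w, b, xi) - yi   # 2-3. forward pass; dLoss/dlogit = p - y
            for j in range(d):
                grad_w[j] += err * xi[j]         # 4. chain rule back to each weight
            grad_b += err
        # Gradient-descent step; the L2 term shrinks weights (not the bias).
        w = [wj - lr * (gj / n + l2 * wj) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
        val_loss = log_loss(w, b, X_val, y_val)  # 6. validate every epoch
        if val_loss < best_loss - 1e-6:
            best_loss, best_w, best_b, stale = val_loss, w[:], b, 0
        else:
            stale += 1
            if stale >= patience:                # 7. early stopping
                break
    return best_w, best_b, best_loss

# Toy data (made up): the label is 1 when x1 + x2 > 0.
rng = random.Random(0)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200)]
y = [1 if x1 + x2 > 0 else 0 for x1, x2 in X]
w, b, val_loss = train(X[:150], y[:150], X[150:], y[150:])
val_acc = sum((predict_proba(w, b, x) > 0.5) == bool(t) for x, t in zip(X[150:], y[150:])) / 50
print(f"validation loss {val_loss:.3f}, accuracy {val_acc:.2f}")
```

Keeping the best parameters seen on the validation set, rather than the last ones, is what makes early stopping a guard against over‑fitting.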

Expert Insights

- Hyperparameter tuning is key: Prismetric stresses balancing under‑fitting and over‑fitting and suggests automated tuning methods.

- Compute demands are growing: The AI Index notes that training compute for notable models doubles every five months; GPT‑4o required an estimated 38 billion petaFLOP, whereas AlexNet needed 470 petaFLOP, a difference of roughly 80 million times. Use efficient hardware and adjust training schedules accordingly.

- Use cross‑validation: Techstack recommends cross‑validation to avoid overfitting and to select robust models.
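Cross-validation boils down to generating index splits so that every example is used for validation exactly once. A minimal stdlib sketch follows; `score_fn` is a hypothetical caller-supplied function that would train on the training indices and return a validation metric.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread samples as evenly as possible: the first n_samples % k folds get one extra.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

def cross_validate(score_fn, n_samples, k=5):
    """Average score_fn(train_idx, val_idx) over all k folds."""
    scores = [score_fn(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

for train_idx, val_idx in k_fold_indices(12, k=5):
    print(len(train_idx), sorted(val_idx))
```

The spread of the per-fold scores is as informative as their mean: a model whose score swings widely between folds is unlikely to be robust.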

Creative Example: Hyperparameter Tuning Using Clarifai

Suppose you train an image classifier. You might experiment with learning rates from 0.001 to 0.1, batch sizes from 32 to 256, and dropout rates between 0.3 and 0.5. Clarifai’s platform can orchestrate multiple training runs in parallel, automatically tracking hyperparameters and metrics. Once the best parameters are identified, Clarifai allows you to snapshot the model and deploy it seamlessly.
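A random search over the ranges in this example might look like the sketch below. The learning rate is sampled log-uniformly because its effect spans orders of magnitude. `train_and_evaluate` is a hypothetical placeholder: a made-up formula so the code runs, standing in for a real training run, and not a Clarifai API.

```python
import math
import random

def sample_config(rng):
    """Draw one configuration from the ranges used in the example above."""
    return {
        "learning_rate": 10 ** rng.uniform(-3, -1),  # log-uniform in [0.001, 0.1]
        "batch_size": rng.choice([32, 64, 128, 256]),
        "dropout": rng.uniform(0.3, 0.5),
    }

def train_and_evaluate(config):
    """Hypothetical placeholder for a real training run that returns a validation score.

    This made-up formula simply peaks near lr=0.01, dropout=0.4, batch size 128
    so the sketch runs; it says nothing about real models.
    """
    return (1.0
            - (math.log10(config["learning_rate"]) + 2) ** 2
            - abs(config["dropout"] - 0.4)
            - 0.05 * abs(math.log2(config["batch_size"]) - 7))

def random_search(n_trials=20, seed=0):
    """Evaluate n_trials random configurations; return the best (score, config) and the full history."""
    rng = random.Random(seed)
    history = []
    for _ in range(n_trials):
        config = sample_config(rng)
        history.append((train_and_evaluate(config), config))
    best = max(history, key=lambda trial: trial[0])
    return best, history

best, history = random_search()
print(f"best score {best[0]:.3f} with {best[1]}")
```

Random search is often preferred to grid search when only a few hyperparameters really matter, because for the same budget it tries more distinct values of each one. Since trials are independent, they can also run in parallel.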

Evaluating and Validating Your Model

How do you know if your AI model works?

Evaluation ensures that the model performs well not just on the training data but also on unseen data. Choose metrics based on your problem type:

- Classification: Use accuracy, precision, recall, F1 score, and ROC‑AUC. Analyze confusion matrices to understand misclassifications.

- Regression: Compute mean squared error (MSE), root mean squared error (RMSE), and mean absolute error (MAE).

- Generative tasks: Measure text outputs with BLEU or ROUGE and image outputs with Fréchet Inception Distance (FID), or use human evaluation for more subjective outputs.

- Fairness and robustness: Evaluate across different demographic groups, monitor for data drift, and test adversarial robustness.

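Divide the data into training, validation, and test sets so you can detect over‑fitting: tune on the validation set and use the test set only for the final performance estimate. Use cross‑validation when data is limited. For time series or sequential data, employ walk‑forward validation, which always trains on earlier data and tests on later data, to mimic real‑world deployment.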
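Walk-forward validation can be sketched as an expanding training window followed by a short test window that slides forward. Here it scores a naive "repeat the last value" forecaster with mean absolute error (MAE) on a made-up series; the function names and window sizes are illustrative assumptions.

```python
def walk_forward_splits(n_samples, initial_train, horizon, step=None):
    """Yield (train_idx, test_idx) pairs for expanding-window walk-forward validation.

    Every test window starts right after its training window ends, so the model
    is always evaluated on data from its "future".
    """
    step = step or horizon
    end = initial_train
    while end + horizon <= n_samples:
        yield list(range(end)), list(range(end, end + horizon))
        end += step

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Made-up series; the "model" just repeats the last observed value.
series = [10, 12, 13, 15, 16, 18, 21, 22, 24, 27]
errors = []
for train_idx, test_idx in walk_forward_splits(len(series), initial_train=4, horizon=2):
    last = series[train_idx[-1]]
    errors.append(mae([series[i] for i in test_idx], [last] * len(test_idx)))
print(errors)  # -> [2.0, 3.5, 3.5]
```

A random k-fold split on this series would let the model "see" later values while predicting earlier ones, which flatters its error; walk-forward splits rule that out.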

Expert Insights

- Multiple metrics: Prismetric emphasises combining metrics (e.g., precision and recall) to get a holistic view.

- Responsible evaluation: Microsoft highlights the importance of rigorous testing to ensure fairness and safety. Evaluating AI models on different scenarios helps identify biases and vulnerabilities.

- Generative caution: MIT Sloan warns that generative models can sometimes produce plausible but incorrect responses; human oversight is still needed.

Creative Example: Evaluating a Customer Churn Model

Suppose you built a model to predict customer churn for a streaming service. Evaluate precision (the percentage of predicted churners who actually churn) and recall (the percentage of all churners correctly identified). If the model achieves 90 % precision but 60 % recall, you may need to lower the decision threshold to catch more churners, accepting some loss of precision. Visualize results in a confusion matrix, and check performance across age groups to ensure fairness.
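The precision/recall trade-off and the per-group check can be computed directly from the confusion-matrix counts. In this sketch the labels, scores, and age bands are made up. It shows that lowering the threshold from 0.5 to 0.3 raises recall from 0.75 to 1.0 while precision falls from 0.75 to about 0.57, and that recall at 0.5 differs between the two age bands.

```python
def confusion_counts(y_true, scores, threshold):
    """Return (tp, fp, fn, tn) when predicting churn for scores >= threshold."""
    tp = fp = fn = tn = 0
    for actual, score in zip(y_true, scores):
        predicted = score >= threshold
        if predicted and actual:
            tp += 1
        elif predicted:
            fp += 1
        elif actual:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def precision_recall(y_true, scores, threshold):
    tp, fp, fn, _ = confusion_counts(y_true, scores, threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def recall_by_group(y_true, scores, groups, threshold):
    """Recall per group (e.g., age band), a basic check for uneven performance."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        result[g] = precision_recall([y_true[i] for i in idx], [scores[i] for i in idx], threshold)[1]
    return result

# Made-up labels (1 = churned), model scores, and age bands for ten customers.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.55, 0.35, 0.6, 0.3, 0.2, 0.1, 0.4, 0.05]
groups = ["18-34", "35-54"] * 5
for threshold in (0.5, 0.3):
    p, r = precision_recall(y_true, scores, threshold)
    print(f"threshold {threshold}: precision {p:.2f}, recall {r:.2f}")
print(recall_by_group(y_true, scores, groups, 0.5))
```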

Deployment and Integration

How do you deploy an AI model into production?

Deployment turns your trained model into a usable service. Consider the environment (cloud vs on‑premises vs edge), latency requirements, scalability, and security.

- Containerize your model: Use Docker to package the model with its dependencies. This ensures consistency across development and production.

- Choose an orchestration platform: Kubernetes manages scaling, load balancing, and resilience. For serverless deployments, use AWS Lambda, Google Cloud Functions, or Azure Functions.

- Expose via an API: Build a REST or gRPC endpoint using frameworks like Flask or FastAPI. Clarifai’s platform provides an API gateway that seamlessly integrates with your application.

- Secure your deployment: Implement SSL/TLS encryption, authentication (JWT or OAuth2), and authorization. Use environment variables for secrets and ensure compliance with regulations.

- Monitor performance: Track metrics such as response time, throughput, and error rates. Add automatic retries and fallback logic for robustness.

- Edge deployment: For latency‑sensitive or privacy‑sensitive use cases, deploy models to edge devices. Clarifai’s local runners let you run inference on‑premises or on low‑power devices without sending data to the cloud.
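A minimal sketch of exposing a model over HTTP. The text above suggests Flask or FastAPI; this version uses Python's built-in `http.server` so it runs without extra packages. `WEIGHTS`, `BIAS`, and `predict` are hypothetical placeholders for a trained model. The sketch deliberately omits TLS and authentication, which the security bullet above calls for before anything is exposed beyond localhost.

```python
import json
import math
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical placeholder for a trained logistic model's parameters.
WEIGHTS, BIAS = [0.8, -0.5], 0.1

def predict(features):
    """Return a probability; reject inputs with the wrong number of features."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            body, status = {"probability": predict(payload["features"])}, 200
        except (ValueError, KeyError, TypeError) as exc:  # bad JSON or bad input
            body, status = {"error": str(exc)}, 400
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

if __name__ == "__main__":
    # Binds to localhost only; put TLS and authentication in front before exposing it.
    HTTPServer(("127.0.0.1", 8000), PredictHandler).serve_forever()
```

A client would POST a JSON body such as `{"features": [1.0, 2.0]}` to `/predict` and receive `{"probability": ...}`. Malformed requests get a 400 response instead of crashing the server.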

Expert Insights

- Modular design: Techstack encourages building modular architectures to facilitate scaling and integration.

- Edge deployment in practice: The Amazon Go case study demonstrates edge AI deployment, where sensor data is processed locally to enable cashierless shopping. This reduces latency and protects customer privacy.

- MLOps tools: OpenXcell notes that integrating monitoring and automated deployment pipelines is crucial for sustainable operations.

Creative Example: Deploying a Fraud Detection Model

A fintech company trains a model to identify fraudulent transactions. They containerize the model with Docker, deploy it to AWS Elastic Kubernetes Service, and expose it via FastAPI. Clarifai’s platform helps orchestrate compute resources and provides fallback inference on a local runner when network connectivity is unstable. Real‑time predictions are returned within 50 milliseconds, keeping latency low enough to screen transactions as they happen. The team monitors the model’s precision and recall to adjust thresholds and triggers an alert if precision drops below 90 %.
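The retry-then-fallback pattern in this example can be sketched generically. This is not Clarifai's API: `remote_predict` and `local_predict` are hypothetical caller-supplied callables standing in for a cloud endpoint and an on-premises runner, and the retry count and delays are assumptions.

```python
import random
import time

def predict_with_fallback(remote_predict, local_predict, features,
                          retries=3, base_delay=0.005, sleep=time.sleep):
    """Call the remote model, retrying with exponential backoff; if every attempt
    fails with a network error, answer from the local model instead.

    Returns (prediction, source) so callers can log how often the fallback fires.
    """
    for attempt in range(retries):
        try:
            return remote_predict(features), "remote"
        except (ConnectionError, TimeoutError):
            if attempt < retries - 1:
                # Jittered backoff: roughly 5 ms, then 10 ms, between attempts.
                sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.5))
    return local_predict(features), "local"

# Hypothetical stand-ins for a cloud endpoint and an on-premises runner.
calls = {"n": 0}

def flaky_remote(features):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("network unavailable")
    return 0.91

def unreachable_remote(features):
    raise TimeoutError("no response")

def local_model(features):
    return 0.88

print(predict_with_fallback(flaky_remote, local_model, [120.0, 3]))        # succeeds on the third try
print(predict_with_fallback(unreachable_remote, local_model, [120.0, 3]))  # falls back to local
```

The backoff delays must fit inside the latency budget. With a 50‑millisecond target, a few short retries are the most that can be afforded before falling back.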

Continuous Monitoring, Maintenance and MLOps

Why is AI lifecycle management crucial?

AI models are not “set and forget” systems; they require continuous monitoring to detect performance degradation, concept drift, or bias. MLOps combines DevOps principles with machine learning workflows to manage models from development to production.

- Monitor performance metrics: Continuously track accuracy, latency, and throughput. Identify and investigate anomalies.

- Detect drift: Monitor input data distributions and output predictions to identify data drift or concept drift. Tools like Alibi Detect and Evidently can alert you when drift occurs.

- Version control: Use Git or dedicated model versioning tools (e.g., DVC, MLflow) to track data, code, and model versions. This ensures reproducibility and simplifies rollbacks.

- Automate retraining: Set up scheduled retraining pipelines to incorporate new data. Use continuous integration/continuous deployment (CI/CD) pipelines to test and deploy new models.

- Energy and cost optimization: Monitor compute resource usage, adjust model architectures, and explore hardware acceleration. The AI Index notes that as training compute doubles every five months, energy consumption becomes a significant issue. Green AI focuses on reducing carbon footprint through efficient algorithms and energy‑aware scheduling.

- Clarifai MLOps: Clarifai provides tools for monitoring model performance, retraining on new data, and deploying updates with minimal downtime. Its workflow engine ensures that data ingestion, preprocessing, and inference are orchestrated reliably across environments.
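Tools such as Alibi Detect and Evidently package drift tests for production use. As a from-scratch illustration of one common test, the sketch below computes the two-sample Kolmogorov–Smirnov statistic, which is the largest gap between the empirical distributions of a reference sample (e.g., training data) and recent production inputs. The 0.2 alert threshold is an assumption to tune per feature. A formal test would also compute a p-value, for example with `scipy.stats.ks_2samp`.

```python
from bisect import bisect_right

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical CDFs of a reference sample and a recent production sample."""
    ref, cur = sorted(reference), sorted(current)
    max_gap = 0.0
    for x in set(ref) | set(cur):
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap

def drift_alert(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds the (assumed) threshold."""
    return ks_statistic(reference, current) > threshold

reference = list(range(100))      # made-up feature values seen in training
shifted = list(range(50, 150))    # made-up production values, shifted upward
print(ks_statistic(reference, shifted), drift_alert(reference, shifted))
```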

Expert Insights

- Continuous monitoring is vital: Techstack warns that concept drift can occur due to changing data distributions; monitoring allows early detection.

- Energy‑efficient AI: Microsoft highlights the need for resource‑efficient AI, advocating for innovations like liquid cooling and carbon‑free energy.

- Security: Ensure data encryption, access control, and audit logging. Use federated learning or edge deployment to maintain privacy.

Creative Example: Monitoring a Voice Assistant

A company deploys a voice assistant that processes millions of voice queries daily. They monitor latency, error rates, and confidence scores in real time. When the assistant starts misinterpreting certain accents (data drift: the mix of incoming speech has shifted away from the training data), they collect new data, retrain the model, and redeploy it. Clarifai’s monitoring tools trigger an alert when accuracy drops below 85 %, and the MLOps pipeline automatically kicks off a retraining job.
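The "alert when accuracy drops below 85 %" rule can be sketched as a rolling-window monitor. This is a generic illustration, not Clarifai's monitoring API. It assumes ground-truth labels eventually arrive (for example from user corrections), and `on_alert` is a hypothetical hook that in practice would start the retraining pipeline.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent `window` labeled predictions and
    call `on_alert` once when it falls below `threshold`."""

    def __init__(self, window=1000, threshold=0.85, on_alert=print):
        self.results = deque(maxlen=window)
        self.threshold = threshold
        self.on_alert = on_alert
        self.alerted = False

    def record(self, prediction, label):
        self.results.append(prediction == label)
        if len(self.results) < self.results.maxlen:
            return  # wait for a full window before judging
        accuracy = sum(self.results) / len(self.results)
        if accuracy < self.threshold and not self.alerted:
            self.alerted = True
            self.on_alert(accuracy)
        elif accuracy >= self.threshold:
            self.alerted = False  # re-arm once accuracy recovers

alerts = []
monitor = AccuracyMonitor(window=10, threshold=0.85, on_alert=alerts.append)
for _ in range(10):
    monitor.record("play music", "play music")   # ten correct transcriptions
for _ in range(2):
    monitor.record("play music", "pay music")    # two errors push accuracy to 0.8
print(alerts)  # -> [0.8]
```

Alerting once per dip, rather than on every request below the threshold, keeps a single degradation from flooding the on-call channel or launching duplicate retraining jobs.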

Security, Privacy, and Ethical Considerations

How do you build responsible AI?

AI systems can create unintended harm if not designed responsibly. Ethical considerations include privacy, fairness, transparency, and accountability. Data regulations (GDPR, HIPAA, CCPA) demand compliance; failure can result in hefty penalties.

- Privacy: Use data anonymization, pseudonymization, and encryption to protect personal data. Federated learning enables collaborative training without sharing raw data.

- Fairness and bias mitigation: Identify and address biases in data and models. Use techniques like re‑sampling, re‑weighting, and adversarial debiasing. Test models on diverse populations.

- Transparency: Implement model cards and data sheets to document model behavior, training data, and intended use. Explainable AI tools like SHAP and LIME make decision processes more interpretable.

- Human oversight: Keep humans in the loop for high‑stakes decisions. Autonomous agents can chain actions together with minimal human intervention, but they also carry risks like unintended behavior and bias escalation.

- Regulatory compliance: Keep up with evolving AI laws in the US, EU, and other regions. Ensure your model’s data collection and inference practices follow guidelines.
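Pseudonymization can be as simple as replacing direct identifiers with a keyed hash. Below is a minimal sketch using HMAC‑SHA256 from the standard library. The key and the record are made-up placeholders, and a real key belongs in a secrets manager. Note that pseudonymized data is generally still treated as personal data under GDPR, so it reduces risk rather than removing compliance obligations.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be
    joined; without the key, an attacker cannot simply hash likely IDs to
    reverse the mapping, as they could with an unkeyed hash.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

key = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code real keys
record = {"patient_id": "MRN-004521", "heart_rate": 72}  # made-up record
safe = {**record, "patient_id": pseudonymize(record["patient_id"], key)}
print(safe)
```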

Expert Insights

- Trust challenges: The AI Index notes that fewer people trust AI companies to safeguard their data, prompting new regulations.

- Autonomous agent risks: According to Times Of AI, agents that chain actions can lead to unintended consequences; human supervision and explicit ethics are vital.

- Responsibility in design: Microsoft emphasizes that AI requires human oversight and ethical frameworks to avoid misuse.

Creative Example: Handling Sensitive Health Data

Consider an AI model that predicts heart disease from wearable sensor data. To protect patients, data is encrypted on devices and processed locally using a Clarifai local runner. Federated learning aggregates model updates from multiple hospitals without transmitting raw data. Model cards document the training data (e.g., 40 % female, ages 20–80) and known limitations (e.g., less accurate for patients with rare conditions), while the system alerts clinicians rather than making final decisions.
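The aggregation step in this example is often done with federated averaging (FedAvg): each site trains locally and sends only its parameters, and the server averages them, weighting each site by its number of training examples. The sketch below shows only that server-side averaging. The hospital updates are made up, and the local training rounds (and, in practice, protections such as secure aggregation, since parameters can still leak information) are omitted.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation: average each parameter across clients,
    weighting each client by its number of training examples.

    client_updates: list of (weights, n_examples) pairs; weights is a list of floats.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in client_updates)
    n_params = len(client_updates[0][0])
    return [sum(w[j] * n for w, n in client_updates) / total for j in range(n_params)]

# Hypothetical updates from three hospitals; only parameters leave each site.
updates = [([0.2, -1.0], 100), ([0.4, -0.8], 300), ([0.1, -1.2], 100)]
print(federated_average(updates))
```

Weighting by example count keeps a small clinic from pulling the global model as hard as a large hospital, although it also means minority sites may need extra attention in the fairness evaluation.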

Industry‑Specific Applications & Real‑World Case Studies

Healthcare: Improving Diagnostics and Personalized Care

In healthcare, AI accelerates drug discovery, diagnosis, and treatment planning. IBM Watsonx.ai and DeepMind’s AlphaFold 3 help researchers understand protein structures and identify drug targets. Edge AI enables remote patient monitoring: portable devices analyze heart rhythms in real time, improving response times and protecting data.

Expert Insights

- Remote monitoring: Edge AI allows wearable devices to analyze vitals locally, ensuring privacy and reducing latency.

- Personalization: AI tailors treatments to individual genetics and lifestyles, enhancing outcomes.

- Compliance: Healthcare AI must adhere to HIPAA and FDA guidelines.

Finance: Fraud Detection and Risk Management

AI transforms the financial sector by enhancing fraud detection, credit scoring, and algorithmic trading. Darktrace spots anomalies in real time; Numerai Signals uses crowdsourced data for investment predictions; Upstart AI improves credit decisions, allowing inclusive lending. Clarifai’s model orchestration can integrate real‑time inference into high‑throughput systems, while local runners ensure sensitive transaction data never leaves the organization.

Expert Insights

- Real‑time detection: AI models must deliver sub‑second decisions to catch fraudulent transactions.

- Fairness: Credit scoring models must avoid discriminating against protected groups and should be transparent.

- Edge inference: Processing data locally reduces risk of interception and ensures compliance.

Retail: Hyper‑Personalization and Autonomous Stores

Retailers leverage AI for personalized experiences, demand forecasting, and AI‑generated advertisements. Tools like Vue.ai, Lily AI, and Granify personalize shopping and optimize conversions. Amazon Go’s Just Walk Out technology uses edge AI to enable cashierless shopping, processing video and sensor data locally. Clarifai’s vision models can analyze customer behavior in real time and generate context‑aware recommendations.

Expert Insights

- Customer satisfaction: Eliminating checkout lines improves the shopping experience and increases loyalty.

- Data privacy: Retail AI must comply with privacy laws and protect consumer data.

- Real‑time recommendations: Edge AI and low‑latency models keep suggestions relevant as users browse.

Education: Adaptive Learning and Conversational Tutors

Educational platforms utilize AI to personalize learning paths, grade assignments, and provide tutoring. MagicSchool AI (2025 edition) plans lessons for teachers; Khanmigo by Khan Academy tutors students through conversation; Diffit helps educators tailor assignments. Clarifai’s NLP models can power intelligent tutoring systems that adapt in real time to a student’s comprehension level.

Expert Insights

- Equity: Ensure adaptive systems do not widen achievement gaps. Provide transparency about how recommendations are generated.

- Ethics: Avoid recording unnecessary data about minors and comply with COPPA.

- Accessibility: Use multimodal content (text, speech, visuals) to accommodate diverse learning styles.

Manufacturing: Predictive Maintenance and Quality Control

Manufacturers use AI for predictive maintenance, robotics automation, and quality assurance. Bright Machines Microfactories simplify production lines; Instrumental.ai identifies defects; Vention MachineMotion 3 enables adaptive robots. The Stream Analyze case study shows that deploying edge AI directly on the production line (using a Raspberry Pi) improved inspection speed 100‑fold and maintained data security.

Expert Insights

- Localized AI: Processing data on devices ensures confidentiality and reduces network dependency.

- Predictive analytics: AI can reduce downtime by predicting equipment failure and scheduling maintenance.

- Scalability: Edge AI frameworks must be scalable and flexible to adapt to different factories and machines.

Future Trends and Emerging Topics

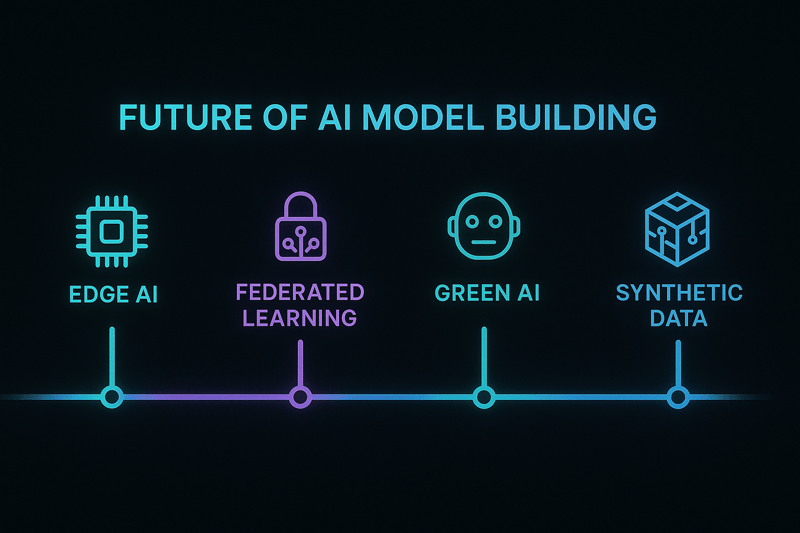

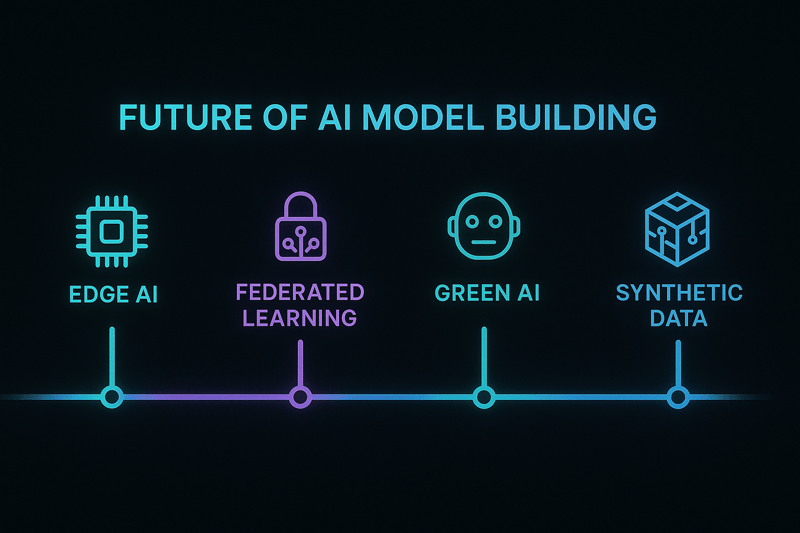

What will shape AI development in the next few years?

As AI matures, several trends are reshaping model development and deployment. Understanding these trends helps ensure your models remain relevant, efficient, and responsible.

Multimodal AI and Human‑AI Collaboration

- Multimodal AI: Systems that integrate text, images, audio, and video enable rich, human‑like interactions. Virtual agents can respond using voice, chat, and visuals, creating highly personalized customer service and educational experiences.

- Human‑AI collaboration: AI is automating routine tasks, allowing humans to focus on creativity and strategic decision‑making. However, humans must interpret AI‑generated insights ethically.

Autonomous Agents and Agentic Workflows

- Specialized agents: Tools like AutoGPT and Devin autonomously chain tasks, performing research and operations with minimal human input. They can speed up discovery but require oversight to prevent unintended behavior.

- Workflow automation: Agentic workflows will transform how teams handle complex processes, from supply chain management to product design.

Green AI and Sustainable Compute

- Energy efficiency: AI training and inference consume vast amounts of energy. Innovations such as liquid cooling, carbon‑free energy, and energy‑aware scheduling reduce environmental impact. New research shows training compute is doubling every five months, making sustainability crucial.

- Algorithmic efficiency: Emerging algorithms and hardware (e.g., neuromorphic chips) aim to achieve equivalent performance with lower energy usage.

Edge AI and Federated Learning

- Federated learning: Enables decentralized model training across devices without sharing raw data. Market value for federated learning could reach $300 million by 2030. Multi‑prototype FL trains specialized models for different locations and combines them.

- 6G and quantum networks: Next‑gen networks will support faster synchronization across devices.

- Edge Quantum Computing: Hybrid quantum‑classical models will enable real‑time decisions at the edge.

Retrieval‑Augmented Generation (RAG) and AI Agents

- Mature RAG: Moves beyond static information retrieval to incorporate real‑time data, sensor inputs, and knowledge graphs. This significantly improves response accuracy and context.

- AI agents in enterprise: Domain‑specific agents automate legal review, compliance monitoring, and personalized recommendations.

Open‑Source and Transparency

- Democratization: Low‑cost open‑source models such as Llama 3.1, DeepSeek R1, Gemma, and Mixtral 8×22B offer cutting‑edge performance.

- Transparency: Open models enable researchers and developers to inspect and improve algorithms, increasing trust and accelerating innovation.

Expert Insights for the Future

- Edge is the new frontier: Times Of AI predicts that edge AI and multimodal systems will dominate the next wave of innovation.

- Federated learning will be critical: The 2025 Edge AI report calls federated learning a cornerstone of decentralized intelligence, with quantum federated learning on the horizon.

- Responsible AI is non‑negotiable: Regulatory frameworks worldwide are tightening; practitioners must prioritize fairness, transparency, and human oversight.

Pitfalls, Challenges & Practical Solutions

What can go wrong, and how do you avoid it?

Building AI models is challenging; awareness of potential pitfalls enables you to proactively mitigate them.

- Poor data quality and bias: Garbage in, garbage out. Invest in data collection and cleaning. Audit data for hidden biases and balance your dataset.

- Over‑fitting or under‑fitting: Use cross‑validation and regularization. Add dropout layers, reduce model complexity, or gather more data.

- Insufficient computing resources: Training large models requires GPUs or specialized hardware. Clarifai’s compute orchestration can allocate resources efficiently. Explore energy‑efficient algorithms and hardware.

- Integration challenges: Legacy systems may not interact seamlessly with AI services. Use modular architectures and standardized protocols (REST, gRPC). Plan integration from the project’s outset.

- Ethical and compliance risks: Always consider privacy, fairness, and transparency. Document your model’s purpose and limitations. Use federated learning or on‑device inference to protect sensitive data.

- Concept drift and model degradation: Monitor data distributions and performance metrics. Use MLOps pipelines to retrain when performance drops.

Creative Example: Over‑fitting in a Small Dataset

A startup built an AI model to predict stock price movements using a small dataset. Initially, the model achieved 99 % accuracy on training data but only 60 % on the test set—classic over‑fitting. They fixed the issue by adding dropout layers, using early stopping, regularizing parameters, and collecting more data. They also simplified the architecture and implemented k‑fold cross‑validation to ensure robust performance.

Conclusion: Building AI Models with Responsibility and Vision

Creating an AI model is a journey that spans strategic planning, data mastery, algorithmic expertise, robust engineering, ethical responsibility, and continuous improvement. Clarifai can help you on this journey with tools for compute orchestration, pretrained models, workflow management, and edge deployments. As AI continues to evolve—embracing multimodal interactions, autonomous agents, green computing, and federated intelligence—practitioners must remain adaptable, ethical, and visionary. By following this comprehensive guide and keeping an eye on emerging trends, you’ll be well‑equipped to build AI models that not only perform but also inspire trust and deliver real value.

Frequently Asked Questions (FAQs)

Q1: How long does it take to build an AI model?

Building an AI model can take anywhere from a few days to several months, depending on the complexity of the problem, the availability of data, and the team’s expertise. A simple classification model might be up and running within days, while a robust, production‑ready system that meets compliance and fairness requirements could take months.

Q2: What programming language should I use?

Python is the most popular language for AI due to its extensive libraries and community support. Other options include R for statistical analysis, Julia for high performance, and Java/Scala for enterprise integration. Clarifai’s SDKs provide interfaces in multiple languages, simplifying integration.

Q3: How do I handle data privacy?

Use anonymization, encryption, and access controls. For collaborative training, consider federated learning, which trains models across devices without sharing raw data. Clarifai’s platform supports secure data handling and local inference.

Q4: What is the difference between machine learning and generative AI?

Machine learning focuses on recognizing patterns and making predictions, whereas generative AI creates new content (text, images, music) based on learned patterns. Generative models like transformers and diffusion models are particularly useful for creative tasks and data augmentation.

Q5: Do I need expensive hardware to build an AI model?

Not always. You can start with cloud‑based services or pretrained models. For large models, GPUs or specialized hardware improve training efficiency. Clarifai’s compute orchestration dynamically allocates resources, and local runners enable on‑device inference without costly cloud usage.

Q6: How do I ensure my model remains accurate over time?

Implement continuous monitoring for performance metrics and data drift. Use automated retraining pipelines and schedule regular audits for fairness and bias. MLOps tools make these processes manageable.

Q7: Can AI models be creative?

Yes. Generative AI creates text, images, video, and even 3D environments. Combining retrieval‑augmented generation with specialized AI agents results in highly creative and contextually aware systems.

Q8: How do I integrate Clarifai into my AI workflow?

Clarifai provides APIs and SDKs for model training, inference, workflow orchestration, data annotation, and edge deployment. You can fine‑tune Clarifai’s pretrained models or bring your own. The platform handles compute orchestration and allows you to run models on local runners for low‑latency, secure inference.

Q9: What trends should I watch in the near future?

Keep an eye on multimodal AI, federated learning, autonomous agents, green AI, quantum and neuromorphic hardware, and the growing open‑source ecosystem. These trends will shape how models are built, deployed, and managed.
