Artificial Intelligence (AI) is evolving at an unprecedented pace, paving the way for transformative advancements across numerous sectors. At the heart of this rapid evolution is an exceptional class of AI foundation models. These models, akin to master linguists, have the capability to understand and generate human‑like text based on the input they receive. They are the bedrock, the fundamental architecture upon which many AI applications are constructed and fine‑tuned.

With the vast array of foundation models available, selecting the ideal one to fit your unique requirements can be an intricate endeavor. It’s not a one‑size‑fits‑all situation – different tasks demand different AI foundation models. As such, an informed decision is crucial to ensure optimal outcomes. But how can you navigate this labyrinth of models and make the right choice? In this blog, we’ll pull back the curtain on these sophisticated models. We’ll delve deep into their workings, their strengths, their limitations, and most importantly, the critical factors that should guide your selection process. We’ll explore key considerations like the model’s complexity and size, training data and computational resources, the specific use case it excels in, the ease of implementation, and the ethical and societal implications of deploying these models.

By the end of this blog, our aim is to equip you with the knowledge and understanding required to make an informed decision on the best AI foundation model for your specific needs. So, whether you’re developing a chatbot, creating a text‑generation application, or innovating a new AI‑powered solution, you’ll have a clearer vision of the path ahead.

Think of AI foundation models as the multi‑talented athletes of the AI world: they can adapt to a variety of tasks without needing much task‑specific training. Some shining stars in this arena include OpenAI’s GPT‑3, GPT‑4 and ChatGPT, Anthropic’s Claude, and models from Cohere and AI21. Consider ChatGPT: it can help with diverse tasks such as drafting emails, writing code and answering questions. Because it interacts conversationally, ChatGPT can answer follow‑up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests.

These statistics underscore the strategic importance of foundation models and the urgency of choosing the right one.

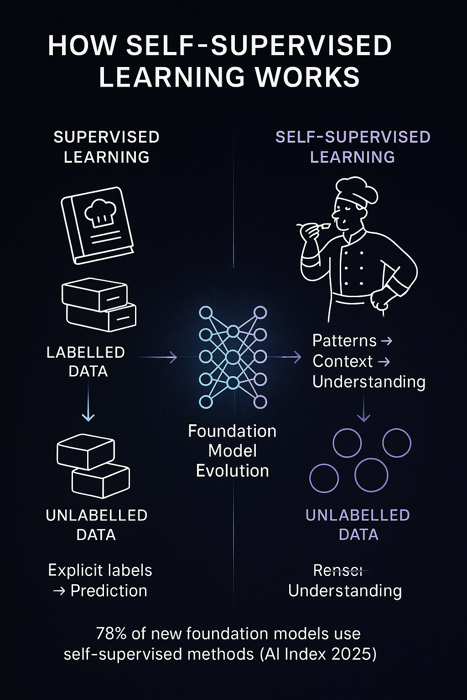

Self‑supervised learning is a bit like learning to cook by taste‑testing. Without explicit recipes (or “labels,” in machine‑learning terms), the model learns to understand data by spotting patterns and associations within it. This differs from supervised learning, where the model is trained on a labeled dataset: each example comes with a corresponding output that the model is supposed to predict. Self‑supervised learning does not need these human‑provided labels. Instead, it derives its training signal from the input data itself (for example, by predicting the next word in a sentence from the words before it), uncovering patterns and relationships that may not be immediately apparent or explicitly labeled. This gives self‑supervised learning its power and versatility.
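To make this concrete, the sketch below shows how a self‑supervised objective turns raw, unlabeled text into (context, target) training pairs: each word becomes the “label” for the words that precede it. It assumes simple whitespace tokenization for readability; real foundation models use subword tokenizers and far longer contexts.

```python
def next_token_pairs(text: str, context_size: int = 3) -> list[tuple[tuple[str, ...], str]]:
    """Build (context, target) pairs from unlabeled text for next-token prediction.

    The targets come from the text itself, so no human labeling is needed.
    Whitespace tokenization is a simplifying assumption for this sketch.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # Up to `context_size` preceding tokens form the input...
        context = tuple(tokens[max(0, i - context_size):i])
        # ...and the current token is the prediction target.
        pairs.append((context, tokens[i]))
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs("the chef tastes the sauce and adjusts it"):
        print(context, "->", target)
```

The sliding window is the whole trick: a single sentence yields many labeled examples for free, which is why this approach scales to internet‑sized corpora.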

Continuing the cooking analogy, a self‑taught chef learns by exploring various ingredients and cooking techniques and by experimenting with different flavour combinations. They don’t necessarily follow explicit recipes; instead, they draw on their understanding of ingredients and techniques to create dishes. They taste and adjust, taste and adjust, until they achieve the flavour profile they’re aiming for. Along the way, they learn the underlying principles of cooking: how flavours work together, how heat changes food, and which spices to use.

Similarly, self‑supervised learning models like GPT‑3 learn by exploring vast amounts of data. They are not given explicit “recipes” or labels; instead, they examine the “ingredients” (in this case, the tokens or words in text data) and learn their associations and contextual relationships. They learn the structure of sentences, the meaning of words in different contexts, and the typical ways that ideas are expressed in human language. They can then generate text that follows these patterns, effectively “cooking up” human‑like text based on the “taste‑testing” they’ve done during training.

This method makes self‑supervised models remarkably versatile. Just as a self‑taught chef can create a wide range of dishes from an understanding of ingredients and techniques, GPT‑3 can handle a wide range of text tasks, from writing essays and articles to answering questions, translating languages, and even writing poetry. This versatility has led to an explosion of applications in natural language processing and beyond. Moreover, because self‑supervised models learn from unlabeled data, they can take advantage of the vast amounts of such data available on the internet, and the ability to learn from so much data is another key source of their power.
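A toy illustration of “taste‑testing” at the smallest possible scale: the bigram model below counts which word follows which in unlabeled text, then generates new text by repeatedly choosing the most frequent successor. This is a deliberately simplified stand‑in; GPT‑3 and similar models learn these associations with large neural networks over long contexts, not with word‑pair counts.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count word-to-next-word transitions in raw, unlabeled text."""
    successors: dict[str, Counter] = defaultdict(Counter)
    tokens = corpus.lower().split()
    for current, nxt in zip(tokens, tokens[1:]):
        successors[current][nxt] += 1
    return dict(successors)


def generate(model: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily extend `start` by always picking the most frequent next word."""
    words = [start]
    for _ in range(max_words):
        options = model.get(words[-1])
        if not options:
            break  # no observed successor: stop generating
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    corpus = "the sauce needs salt . the sauce needs acid . the soup needs salt ."
    model = train_bigrams(corpus)
    print(generate(model, "the", max_words=4))  # prints: the sauce needs salt .
```

Nothing in the corpus was labeled, yet the model “learned” that sauce usually needs salt, simply because that pattern appeared most often.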

Although self‑supervised learning provides a strong foundation, relying solely on it can leave models with certain biases or blind spots. Models trained on internet data may replicate harmful stereotypes or misinformation, and they may underperform on niche tasks that require domain‑specific knowledge. Fine‑tuning on curated datasets or adding safety‑training steps is often necessary to achieve reliable performance and mitigate bias. The Stanford AI Index notes that while performance on benchmarks like MMMU improved sharply in 2024, reported AI‑related incidents rose by 56% over the same period, highlighting the need for responsible development.

Self‑supervised learning teaches models to find patterns in unlabeled data. By predicting missing pieces in text, images or audio, models learn general representations. This approach avoids costly labelling, scales effectively and forms the backbone of modern foundation models. However, it must be complemented with fine‑tuning and safety measures to mitigate biases and ensure domain‑specific performance.
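The “predicting missing pieces” idea can be sketched as masked‑token prediction: hide a random subset of tokens and keep the originals as targets. The 15% default rate and the `[MASK]` placeholder follow the convention popularized by BERT; both are assumptions here, the scheme is simplified, and real pipelines operate on subword token IDs rather than words.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens: list[str], rate: float = 0.15,
                seed: int = 0) -> tuple[list[str], dict[int, str]]:
    """Return (masked input, {position: original token}) for masked-token prediction."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    masked = list(tokens)
    targets: dict[int, str] = {}
    for i, token in enumerate(tokens):
        if rng.random() < rate:
            targets[i] = token  # the model must recover this token...
            masked[i] = MASK    # ...from the surrounding, unmasked context
    return masked, targets


if __name__ == "__main__":
    words = "self supervised models learn from the structure of raw text".split()
    masked, targets = mask_tokens(words, rate=0.3, seed=1)
    print(" ".join(masked))
    print(targets)
```

As with next‑token prediction, the “labels” are simply the hidden parts of the original data, so no annotation budget is needed.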

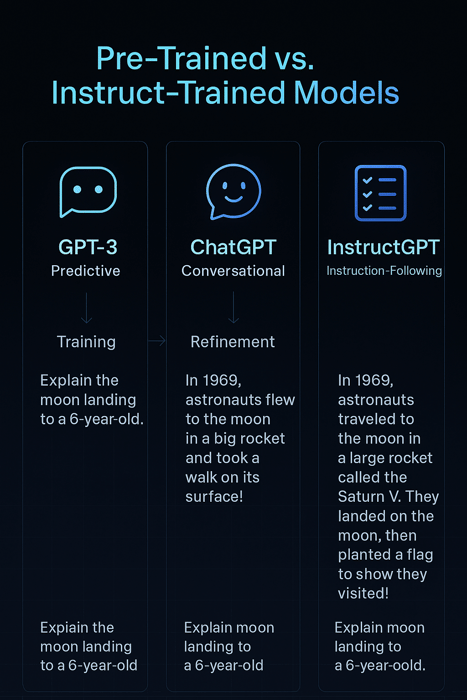

A pre‑trained model is like an assistant, excellent at predicting and completing your sentences, much like an autocomplete function on your smartphone. When you’re drafting an email or writing a report, it’s quite useful as it draws on its broad learning to guess the next word you might need. However, this type of assistant can sometimes get carried away with its predictive ability, straying from the specific task you’ve set. Imagine asking this assistant to find you a vegan dessert recipe. It might provide you with a fascinating history of veganism or describe the health benefits of a vegan diet, instead of focusing on your actual request: a vegan dessert recipe.

An instruct‑trained model behaves like an obedient assistant. These models are trained to follow instructions closely, which makes them well suited to carrying out specific tasks. For example, when asked for a vegan dessert recipe, such a model is more likely to respond directly to the task at hand, providing a straightforward answer (and a delicious recipe). OpenAI’s InstructGPT is an early example: the “instruct” in its name refers to the fine‑tuning used to train the model to follow the instructions in a prompt and provide detailed responses. This makes instruct‑trained models more suitable for tasks that require understanding and following explicit instructions in the input.
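To show what “fine‑tuning to follow instructions” means in terms of data, the sketch below turns an instruction/response pair into a supervised training example. The `### Instruction:` / `### Response:` template is a hypothetical format chosen for readability (each model family defines its own), and the sketch records where the response starts because a common practice is to compute the training loss only on the response portion.

```python
from dataclasses import dataclass

# Hypothetical prompt template; real instruction-tuned models each use their own format.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


@dataclass
class SFTExample:
    text: str            # full training string: prompt followed by the desired answer
    response_start: int  # character offset where the answer begins


def format_example(instruction: str, response: str) -> SFTExample:
    """Build one supervised instruction-tuning example."""
    prompt = TEMPLATE.format(instruction=instruction.strip())
    # Loss is typically computed only on text[response_start:], so the model
    # learns to produce the answer rather than to repeat the instruction.
    return SFTExample(text=prompt + response.strip(), response_start=len(prompt))


if __name__ == "__main__":
    ex = format_example("Give me a vegan dessert recipe.",
                        "Mix oats, banana and cocoa, then bake for 15 minutes.")
    print(ex.text)
```

Thousands of such pairs, each rewarding a direct answer to the request, are what nudge the model away from the “history of veganism” style of response.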

The main difference between these models lies in the training data and the specific fine‑tuning procedures they undergo.

The table below summarizes the pros and cons:

| Model type | Training procedure | Pros | Cons | Suitable for |
|---|---|---|---|---|
| Pre‑trained | Self‑supervised pre‑training on huge unlabeled datasets | Versatile; strong general knowledge; adapt quickly to new tasks | May hallucinate; less obedient; potential bias from training data | Broad exploration, creative writing, tasks where diverse answers are acceptable |
| Instruction‑tuned | Pre‑trained model further fine‑tuned with supervised instruction data and RLHF | Follows prompts; safer outputs; better alignment | Training cost; narrower focus; may require licensing of proprietary data | Customer support, code assistants, summarization where instruction fidelity is critical |

When deciding which model type to use, weigh these trade‑offs against the needs of your task.

Pre‑trained models learn general patterns from massive unlabeled text and excel at open‑ended tasks. Instruction‑tuned models refine these abilities using curated prompts and human feedback, making them better at following directions and producing safer outputs. Choose pre‑trained models for exploration and broad coverage; choose instruction‑tuned models when adherence to instructions, safety and reliability are paramount.

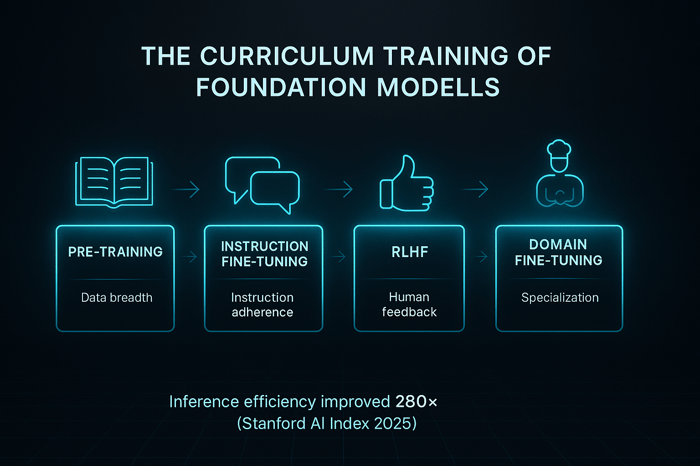

Similar to a student progressing through school, AI foundation models also follow a “curriculum.” They start with a broad education (pre‑training on a diverse range of text), then receive more specialized training that teaches them to follow instructions (supervised instruction tuning), and then benefit from practical coaching (reinforcement learning from human feedback, or RLHF). More recent models may also attend a kind of “professional school,” learning to use tools and act as agents. Lastly, they earn a sort of “PhD” by specializing further (fine‑tuning on custom data).

| Stage | Analogy | Purpose | Typical techniques |
|---|---|---|---|
| Pre‑training | Elementary–high school | Acquire general knowledge and language understanding | Self‑supervised learning on massive datasets |
| Instruction tuning | Undergraduate | Learn to follow prompts and style | Supervised fine‑tuning with curated Q&A data |
| RLHF | Master’s | Align with human preferences and reduce harmful outputs | Reinforcement learning with human feedback rankings |
| Tool use & agents | Professional school | Develop problem‑solving and tool‑using skills | Reinforcement learning with verifiers; agentic training |
| Domain‑specific fine‑tuning | PhD | Specialize for a domain or application | Fine‑tuning on domain‑specific labeled datasets |

Foundation models follow a staged curriculum: broad pre‑training, instruction tuning, reinforcement learning (RLHF, and reinforcement learning with verifiable rewards, or RLVR), tool/agent training, and domain‑specific fine‑tuning. Each stage builds new capabilities, from basic language understanding to instruction following, safety alignment and specialized skills. Understanding this curriculum helps practitioners decide which stage to adopt for their use case.
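The RLHF stage relies on human preference rankings. One widely used way to turn those rankings into a training signal, described in OpenAI’s InstructGPT work, is to train a reward model with a pairwise loss that pushes the score of the human‑preferred response above the rejected one. A minimal sketch of that loss, with the reward scores passed in as plain numbers (in a real pipeline a neural reward model computes them):

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise (Bradley-Terry style) loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model already scores the human-preferred response
    higher; large when it prefers the rejected response.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals softplus(-m); this form avoids overflow for large |m|.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


if __name__ == "__main__":
    print(pairwise_preference_loss(2.0, 0.0))  # preferred answer scored higher: low loss
    print(pairwise_preference_loss(0.0, 2.0))  # preferred answer scored lower: high loss
```

Once trained this way, the reward model stands in for human judges, and the language model is optimized with reinforcement learning to produce responses that score highly.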

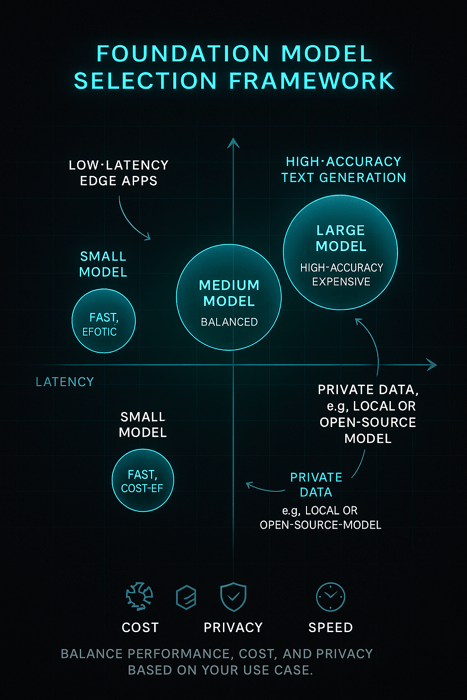

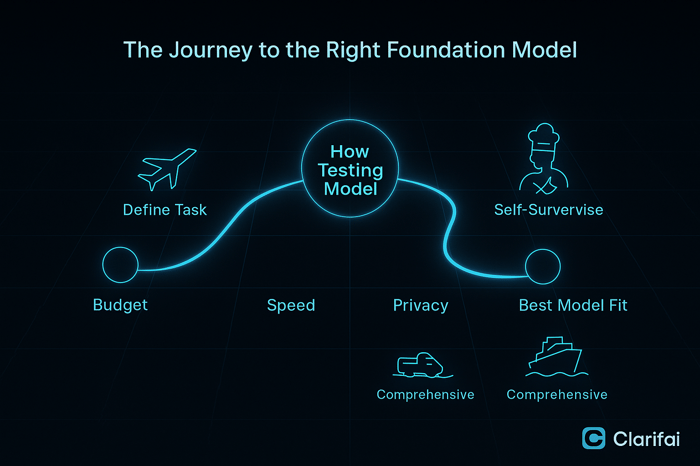

Selecting the right AI foundation model for your machine learning tasks can often feel like a complex puzzle, where numerous factors must harmoniously align to give you the best results. Like creating an exquisite gourmet dish, each ingredient or consideration – be it cost, latency, performance, privacy, or the type of training – has a unique role to play. Choosing the right balance of these components allows you to effectively address your specific AI needs.

When it comes to machine learning models, size often goes hand in hand with cost. Larger models, renowned for their comprehensive capabilities and powerful performance, are typically more expensive not only to train but also to maintain and use. They can act as a robust springboard for gaining initial insights or for validating your minimum viable product (MVP). However, it’s also important to consider the financial implications of using larger models, especially in the long run. Once your MVP is validated and you have a clear understanding of your specific requirements, you may find it more cost‑effective to transition to a smaller model. These smaller models, while perhaps not as comprehensive in their abilities, can be more tailored to your specific needs, providing an excellent balance between cost and functionality.
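A back‑of‑the‑envelope estimate often makes the large‑versus‑small trade‑off concrete. The sketch below compares monthly inference spend for two models under per‑token pricing; every price, model name and traffic figure in it is a hypothetical placeholder, so substitute your provider’s actual rates and your own usage data.

```python
from dataclasses import dataclass


@dataclass
class ModelPricing:
    name: str
    usd_per_million_input_tokens: float   # hypothetical price
    usd_per_million_output_tokens: float  # hypothetical price


def monthly_cost(p: ModelPricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate monthly spend from average input/output tokens per request."""
    per_request = (input_tokens * p.usd_per_million_input_tokens
                   + output_tokens * p.usd_per_million_output_tokens) / 1_000_000
    return per_request * requests_per_day * days


if __name__ == "__main__":
    large = ModelPricing("large-model", 10.0, 30.0)  # placeholder rates
    small = ModelPricing("small-model", 0.5, 1.5)    # placeholder rates
    for model in (large, small):
        cost = monthly_cost(model, requests_per_day=10_000, input_tokens=800, output_tokens=200)
        print(f"{model.name}: ${cost:,.2f}/month")
```

With these placeholder figures the large model comes to about $4,200 per month and the small one to about $210; the numbers are invented, but the method shows how quickly per‑request differences compound at production volume.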

In the realm of AI and machine learning, latency refers to the response speed of a model – essentially, how quickly it can process input and provide output. Larger, more complex models can be likened to a detailed artist: they take their time to create an intricate, detailed masterpiece. However, their slow and meticulous process might not be suitable for applications that require real‑time or near‑instantaneous responses. In these cases, it might be beneficial to leverage model distillation techniques, where the knowledge and abilities of larger, more complex models are “distilled” into smaller, faster ones. This way, you can benefit from the depth of larger models while also maintaining the speed required for your specific applications.
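Distillation is commonly implemented by training the small “student” model to match the large “teacher” model’s softened output distribution, following the formulation of Hinton et al. The sketch below computes that soft‑target term for a single prediction from raw logits; the default temperature and any example logits are assumptions, and in practice this term is usually combined with the ordinary loss on true labels and computed in a deep‑learning framework over whole batches.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the large model
    q = softmax(student_logits, temperature)  # predictions from the small model
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss(teacher, [3.5, 1.2, 0.4]))  # close to the teacher: small loss
    print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # disagrees with the teacher: larger loss
```

Softening with a temperature above 1 exposes how the teacher ranks the “wrong” answers too, which carries more information to the student than the single top prediction would.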

When it comes to performance, choosing an AI foundation model is all about finding the best fit for your specific task. If your task is highly specific and niche, such as identifying different species of birds from images, a smaller, specialized model that has been fine‑tuned for this task might be the most effective choice. However, if your requirements are broader and more varied (for instance, if you’re building a versatile AI assistant that needs to summarize text, translate languages, answer diverse questions, and more), a general‑purpose foundation model could be your ideal solution. Owing to their wide‑ranging training, foundation models can handle a variety of tasks and generalize well, making them a valuable choice for multifaceted applications.
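Whichever direction you lean, a reliable way to judge “best fit” is to score candidate models on a small set of examples drawn from your own task. The sketch below assumes each candidate can be wrapped as a Python callable mapping an input string to an output string; the stand‑in “models” are hypothetical lambdas, the bird descriptions are text stand‑ins for images, and exact‑match accuracy is just one possible metric.

```python
from typing import Callable

Model = Callable[[str], str]  # assumption: each candidate is wrapped as text-in, text-out


def exact_match_accuracy(model: Model, examples: list[tuple[str, str]]) -> float:
    """Fraction of examples where the model output matches the expected answer."""
    if not examples:
        raise ValueError("need at least one example")
    hits = sum(model(x).strip().lower() == y.strip().lower() for x, y in examples)
    return hits / len(examples)


def rank_models(candidates: dict[str, Model],
                examples: list[tuple[str, str]]) -> list[tuple[str, float]]:
    """Return (name, accuracy) pairs sorted from best to worst."""
    scores = [(name, exact_match_accuracy(m, examples)) for name, m in candidates.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical examples and stand-in models, for illustration only.
    examples = [("red breast, grey back", "robin"), ("black, glossy, large", "crow")]
    candidates = {
        "specialized": lambda x: "robin" if "red" in x else "crow",
        "generalist": lambda x: "robin",
    }
    print(rank_models(candidates, examples))
```

Swapping the lambdas for thin wrappers around real model calls turns this into a simple, repeatable bake‑off on data that reflects your actual workload.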

When working with sensitive or private data, privacy considerations take center stage. Some models, especially those that are closed‑source, often require sending data to their servers via APIs for processing. This can raise potential privacy and data security concerns, particularly when handling confidential information. If privacy is a key requirement for your application, you might want to consider alternatives such as open‑source models, which allow for local data processing. Another option might be to train your own smaller, specialized model that can operate on your private data locally. This approach could be likened to keeping your secret recipes in your home kitchen rather than sending them to a restaurant to be prepared.

The choice between a pre‑trained and an instruct‑trained model hinges largely on your specific use case and requirements. Pre‑trained models, having been trained on a wide array of data, offer powerful predictive capabilities and can provide valuable insights for a wide range of tasks. However, if your task involves closely following specific instructions or guidelines, an instruct‑trained model might be a better choice: these models are specially trained to understand and adhere to given instructions, providing more controlled and precise outputs. In short, the deciding factor is how much control, versus open‑ended freedom, you want your model to have.

Keeping an eye on current research and trends in machine learning can also be beneficial. Often, the state‑of‑the‑art models in a particular domain (e.g., transformer models for NLP tasks) provide the best performance. At Clarifai, we constantly update our collection of models to add the latest and greatest for you to try.

To navigate these factors systematically, consider the CRISP framework: Cost, Response time, Importance of task, Security, and Provider ecosystem.
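One way to apply CRISP is a weighted scorecard: rate each candidate from 1 to 5 on every criterion, weight the criteria by how much they matter to your project, and compare the weighted averages. The weights, ratings and model names below are hypothetical placeholders, not recommendations. Higher ratings mean “better for us” (a high cost rating means cheaper), and reading “importance of task” as how well a model meets the quality bar the task demands is an assumption of this sketch.

```python
CRITERIA = ("cost", "response_time", "importance_of_task", "security", "provider_ecosystem")


def crisp_score(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of 1-5 ratings across the five CRISP criteria."""
    missing = [c for c in CRITERIA if c not in ratings or c not in weights]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    total_weight = sum(weights[c] for c in CRITERIA)
    return sum(ratings[c] * weights[c] for c in CRITERIA) / total_weight


if __name__ == "__main__":
    # Hypothetical weights for a privacy-sensitive, latency-tolerant project.
    weights = {"cost": 2, "response_time": 1, "importance_of_task": 3,
               "security": 4, "provider_ecosystem": 1}
    candidates = {
        "hosted-large-model": {"cost": 2, "response_time": 3, "importance_of_task": 5,
                               "security": 2, "provider_ecosystem": 5},
        "self-hosted-open-model": {"cost": 4, "response_time": 4, "importance_of_task": 3,
                                   "security": 5, "provider_ecosystem": 3},
    }
    ranked = sorted(candidates.items(), key=lambda kv: crisp_score(kv[1], weights), reverse=True)
    for name, ratings in ranked:
        print(f"{name}: {crisp_score(ratings, weights):.2f}")
```

With these made‑up inputs, the heavy weight on security lifts the self‑hosted option (4.00) above the hosted one (about 3.18). The value of the exercise lies less in the final number than in forcing the team to agree on weights explicitly.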

Selecting the right foundation model requires balancing cost, latency, performance, privacy and task requirements. Use a structured framework like CRISP to evaluate these factors. Weigh the importance of the task and the vendor ecosystem carefully, because organizations rarely switch providers once they have committed to one. Use specialized fine‑tuning for niche tasks and instruction‑tuned models for tasks that require strict adherence to instructions and safety.

Embarking on the journey of choosing between AI foundation models for your specific use case may seem daunting at first. However, armed with the right knowledge and considerations, you can navigate the vast landscape of AI with confidence and clarity. Think of the process as charting a map for a grand voyage. To plot your course, you need to understand your starting point and your destination. Here, these translate to a clear understanding of your task requirements, your available resources, and the desired outcome of your project.

The “cost” factor is comparable to your travel budget; it defines the affordability of the model. Larger, more comprehensive models may provide an extensive range of capabilities but might also require significant resources to train, maintain, and utilize. “Latency”, akin to the time it takes to travel, is another critical point of consideration. Depending on the nature of your application, you may need a model that delivers quick responses, necessitating the choice of a model that strikes a balance between complexity and speed. “Performance” equates to how well the model can carry out the task. Just as you’d choose the best mode of transportation for your journey, select a model that excels at your specific task – be it a niche, specialized application or a broad, multifaceted one.

“Privacy” is like choosing a secure and safe route for your journey. If you’re handling sensitive data, you need to ensure that your chosen model can process and handle this data securely, respecting all necessary privacy considerations. Keeping an eye on the current state‑of‑the‑art (SOTA) models is like staying informed about the latest, most efficient routes and modes of transport. These models, built at the forefront of AI research, often provide the best performance and could guide your choice of model. Remember, the world of AI and machine learning is vast and varied. There is no single “right” model that fits all scenarios. The optimal choice is the one that aligns best with your needs, resources, and constraints. It’s about finding the model that can best take you from your starting point to your destination, navigating any obstacles that arise along the way.

Choosing a foundation model is like planning a voyage. Understand your budget (cost), the urgency of your journey (latency), the suitability of your transport (performance), the need for a secure route (privacy) and the constantly changing map (state‑of‑the‑art models and regulations). Stay informed about rapid improvements and global dynamics to chart a path that aligns with your goals.

In conclusion, choosing the right AI foundation model is a nuanced process guided by a range of considerations. However, with careful analysis and an understanding of your requirements, it’s a task that can be navigated successfully, paving the way for powerful, effective AI solutions. The statistics and expert insights shared in this updated guide underscore the tremendous growth of AI adoption and the rapid evolution of model capabilities. By understanding self‑supervised learning, differentiating between pre‑trained and instruction‑tuned models, mastering the curriculum of foundation models and applying a structured framework for evaluation, you can make informed decisions that align with your organization’s goals.

Key takeaways:

By building on the original article and incorporating up‑to‑date data and expert perspectives, this guide aims to provide comprehensive, actionable information to help you choose the right foundation model for your use case.

© 2026 Clarifai, Inc.