This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the release notes.

This release brings Trinity Mini, the latest open-weight model from Arcee AI, now available directly on Clarifai. Trinity Mini is a 26B parameter Mixture-of-Experts (MoE) model with 3B active parameters, designed for reasoning-heavy workloads and agentic AI applications.

The model builds on the success of AFM-4.5B, incorporating advanced math, code, and reasoning capabilities. Training was performed on 512 H200 GPUs using Prime Intellect’s infrastructure, ensuring efficiency and scalability at every stage.

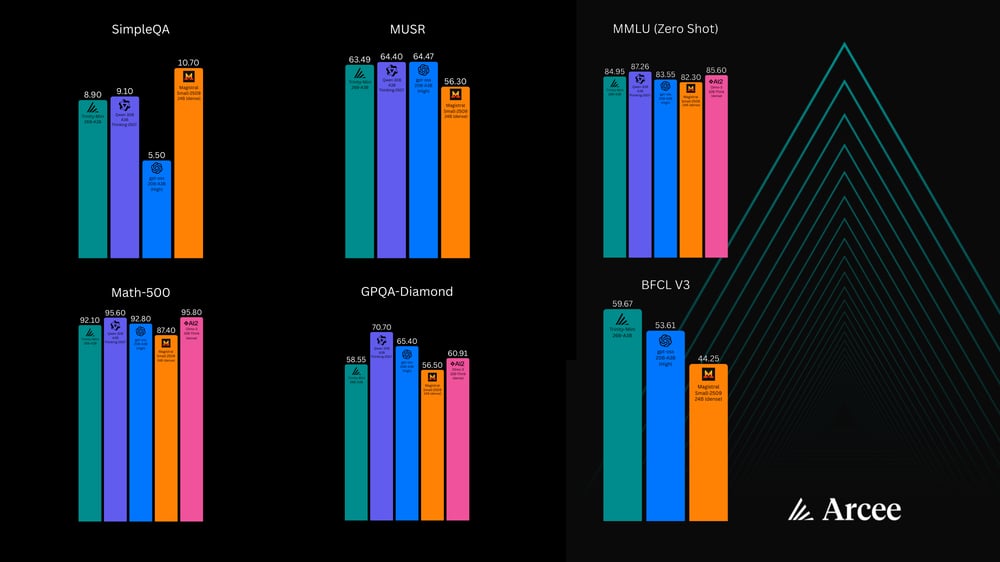

On benchmarks, Trinity Mini shows strong reasoning performance across multiple evaluation suites. It scores 8.90 on SimpleQA, 63.49 on MUSR, and 84.95 on MMLU (Zero Shot). For math-focused workloads, the model reaches 92.10 on Math-500. It also scores 58.55 on GPQA-Diamond, a graduate-level science benchmark, and 59.67 on BFCL V3, a function-calling benchmark. These results place Trinity Mini close to larger instruction-tuned models while retaining the efficiency benefits of its compact MoE design.

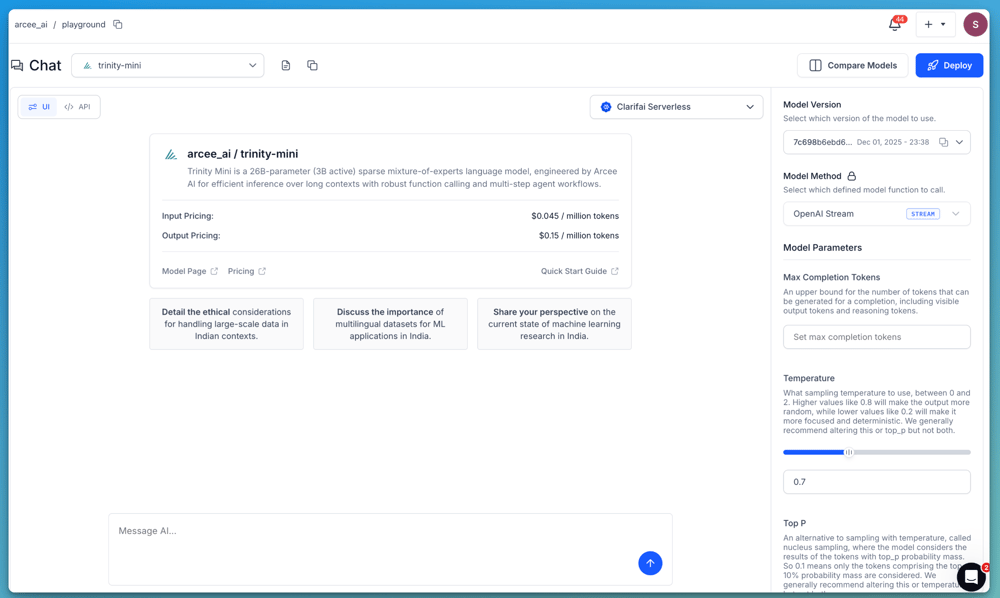

We have partnered with Arcee to make Trinity Mini fully accessible through Clarifai, giving you an easy way to explore the model, evaluate its outputs, compare it against other reasoning models available on the platform, and integrate it into your own applications.

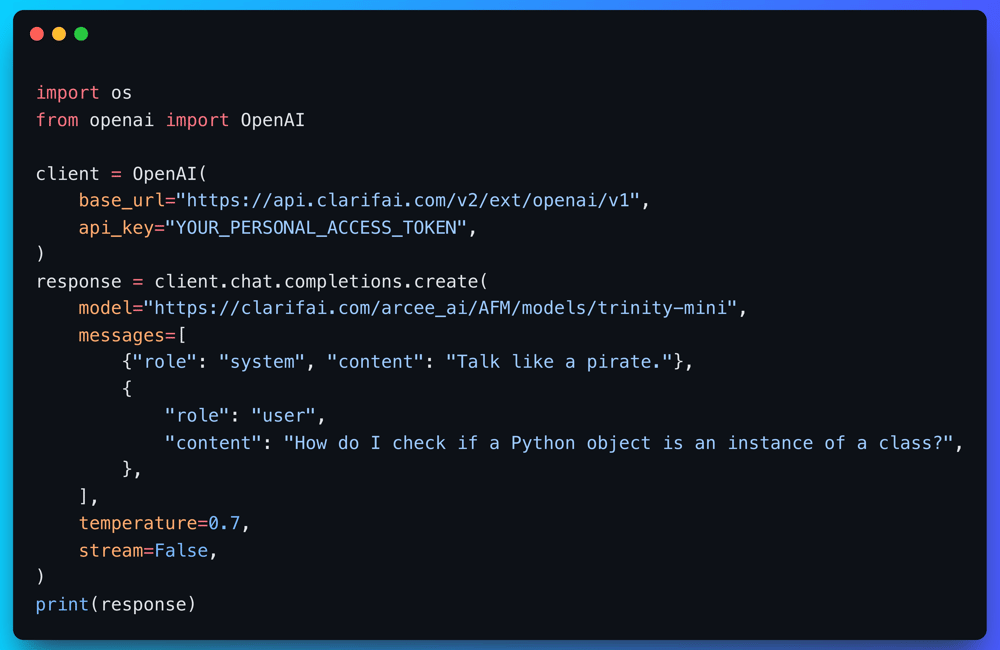

Developers can also start using Trinity Mini right away through Clarifai’s OpenAI-compatible API. Check out the guide here.
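As a rough illustration of what an OpenAI-style call might look like, the sketch below builds and sends a Chat Completions request using only the Python standard library. The base URL, the model identifier placeholder, and the `CLARIFAI_PAT` environment variable name are assumptions made for this example and do not come from this post; the linked guide is the place to confirm the exact values.

```python
import json
import os
import urllib.request

# Assumed values for illustration only; confirm them in the Clarifai guide.
BASE_URL = "https://api.clarifai.com/v2/ext/openai/v1"  # assumed endpoint
MODEL_ID = "<trinity-mini-model-id-or-url>"  # placeholder, not a real ID


def build_chat_request(prompt, model=MODEL_ID, max_tokens=512):
    """Return the JSON body for an OpenAI-style chat completion call."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(body, pat, base_url=BASE_URL):
    """POST the body to the chat completions route and return parsed JSON."""
    req = urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {pat}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    body = build_chat_request("Explain Mixture-of-Experts in two sentences.")
    pat = os.environ.get("CLARIFAI_PAT")  # assumed variable name
    if pat:
        reply = send_chat_request(body, pat)
        print(reply["choices"][0]["message"]["content"])
    else:
        # Without a token, just show the request that would be sent.
        print(json.dumps(body, indent=2))
```

Keeping the request builder separate from the network call makes it easy to inspect or log the payload before sending anything. If you already use an OpenAI client library, pointing its base URL at the compatible endpoint should serve the same purpose.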

You can also access Trinity Mini via Clarifai on OpenRouter. Check it out here.

We are upgrading our infrastructure, and several legacy models will be decommissioned on December 31, 2025. These older task-specific models are being retired as we transition to newer, more capable model families that now cover the same workflows with better performance and stability.

All affected functionality remains fully supported on the platform through current models. If you need help choosing replacements, we have prepared a detailed guide covering recommended alternatives for text classification, image recognition, image moderation, OCR and document workflows. Check out the decommissioning and alternatives guide.

Ministral-3-14B-Reasoning-2512 is now available on Clarifai. This is the largest model in the Ministral 3 family and is designed for math, coding, STEM workloads and multi-step reasoning. The model combines a ~13.5B-parameter language backbone with a ~0.4B-parameter vision encoder, allowing it to accept both text and image inputs.

It supports context lengths up to 256k tokens, making it suitable for long documents and extended reasoning pipelines. The model runs efficiently in BF16 on 32 GB of VRAM, and quantized variants require less memory, which makes it practical for private deployments and custom agent use cases.
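The 32 GB figure can be sanity-checked with a back-of-the-envelope estimate. The sketch below counts weight memory only, using the parameter counts quoted above. It ignores the KV cache, activations and runtime overhead, so real usage will be higher, especially at long context lengths. The 4-bit line is a hypothetical quantization level chosen for comparison, not a published variant.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Parameter counts quoted above: ~13.5B language backbone + ~0.4B vision encoder.
TOTAL_PARAMS = 13.5e9 + 0.4e9

bf16_gb = weight_memory_gb(TOTAL_PARAMS, 2)    # BF16 stores 2 bytes per parameter
int4_gb = weight_memory_gb(TOTAL_PARAMS, 0.5)  # hypothetical 4-bit quantization

print(f"BF16 weights:  ~{bf16_gb:.1f} GB")
print(f"4-bit weights: ~{int4_gb:.2f} GB")
```

At 2 bytes per parameter the weights come to roughly 27.8 GB, which is consistent with the model fitting on a 32 GB card with limited headroom left for the cache.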

Try Ministral-3-14B-Reasoning-2512

GLM-4.6 is now available on Clarifai. This model unifies reasoning, coding and agentic capabilities into a single system, making it suitable for multi-skill assistants, tool-using agents and complex automation workflows. It supports a 200k token context window, allowing it to handle long documents, extended plans and multi-step tasks in one sequence.

The model delivers strong performance across reasoning and coding evaluations, with improvements in real-world coding agents such as Claude Code, Cline, Roo Code and Kilo Code. GLM-4.6 is designed to interact cleanly with tools, generate structured outputs and operate reliably inside agent frameworks.

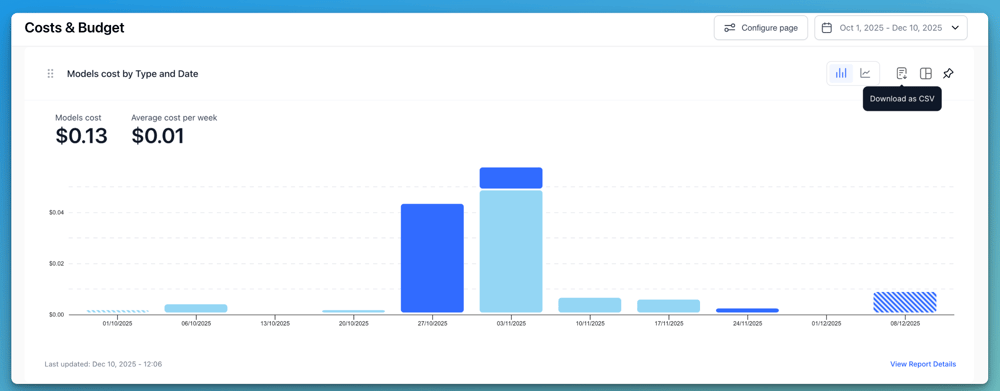

The Control Center gives users a unified view of usage, performance and activity across their models and deployments. It is designed to help teams track consumption patterns, monitor workloads and better understand how their applications are running on Clarifai.

In this release, we added a new option to export the data of each chart as a CSV file, making it easier to analyze metrics outside the dashboard or integrate them into custom reporting workflows.
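As one example of what analysis outside the dashboard could look like, the sketch below sums a numeric column per group from CSV text using the Python standard library. The column names (`date`, `model`, `requests`) and the sample rows are invented for illustration; the actual export format is not described in this post, so adjust the names to match your file.

```python
import csv
import io
from collections import defaultdict

# Made-up sample standing in for an exported chart CSV.
# The column names "date", "model", and "requests" are hypothetical.
SAMPLE_CSV = """date,model,requests
2025-12-01,model-a,120
2025-12-01,model-b,45
2025-12-02,model-a,98
"""


def total_by_column(csv_text, key_column, value_column):
    """Sum a numeric column, grouped by the values of another column."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row[key_column]] += float(row[value_column])
    return dict(totals)


totals = total_by_column(SAMPLE_CSV, "model", "requests")
for model, count in sorted(totals.items()):
    print(f"{model}: {count:.0f}")
```

For a real export, read the file contents with `open(path, newline="")` and pass the text to the same function.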

Updated account navigation with a cleaner layout and reordered menu items.

Added support for custom profile pictures across the platform.

PAT values in code snippets are now masked by default.

This release includes several more usability and account-management improvements. Check them out here.

Added platform specification support in config.yaml and the new --platform CLI option.

Improved model loading validation for more reliable initialization.

Refactored Dockerfile templates into a cleaner multi-stage build to simplify runner image creation.

Additional SDK enhancements include better dependency parsing, improved environment handling, OpenAI Responses API support and multiple runner reliability fixes. Learn more here.

You can start building with Trinity Mini on Clarifai today. Test the model in the Playground, and integrate it into your workflows through the OpenAI-compatible API. Trinity Mini is fully open-weight and production-ready, making it easy to build agents, reasoning pipelines or long-context applications without additional setup.

If you need help choosing the right configuration or want to deploy the model at scale, our team can guide you. Reach out to the team here.

© 2026 Clarifai, Inc.