Large Language Models (LLMs) have attracted a great deal of attention recently and achieved impressive results on a range of NLP tasks. Building on this momentum, it is worth looking closely at specific applications of LLMs, such as few-shot Named Entity Recognition (NER). That is the focus of our ongoing exploration: a comparative analysis of LLM performance in few-shot NER. We are trying to understand:

Check out our previous blog post on what NER is and current state-of-the-art (SOTA) few-shot NER methods.

In this blog post, we continue our discussion to find out whether LLMs reign supreme in few-shot NER. To do this, we’ll be looking at a few recently released papers that address each of the questions above. Recent research indicates that when there is a wealth of labeled examples for a certain entity type, LLMs still lag behind supervised methods for that particular entity type. Yet, for most entity types there’s a lack of annotated data. Novel entity types are continually springing up, and creating annotated examples is a costly and lengthy process, particularly in high-value fields like biomedicine where specialized knowledge is necessary for annotation. As such, few-shot NER remains a relevant and important task.

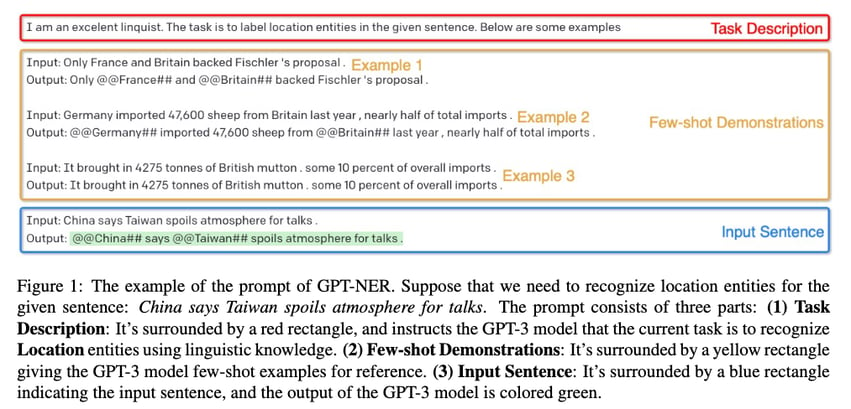

To find out, let’s take a look at GPT-NER by Shuhe Wang et al., which was published in April 2023. The authors propose transforming the NER sequence labeling task (assigning classes to tokens) into a generation task (producing text), which should make it easier for LLMs such as the GPT models to handle. The figure below shows how the prompts are constructed to obtain labels when the model is given an instruction along with a few examples.

GPT-NER Prompt construction example (Shuhe Wang et al.)

To transform the task into something more easily digestible for LLMs, the authors add special symbols marking the locations of the named entities: for example, France becomes @@France##. After seeing a few examples of this, the model then has to mark the entities in its answers in the same way. In this setting, only one type of entity (e.g. location or person) is detected using one prompt. If multiple entity types need to be detected, the model has to be queried several times.
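The marking scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ code: the function names and the prompt wording are assumptions made for this example, and only the `@@...##` markers come from the paper’s description.

```python
import re

# Matches text wrapped in the @@...## markers described above.
ENTITY_PATTERN = re.compile(r"@@(.+?)##")


def mark_entities(sentence, entities):
    """Wrap each given entity string in @@...## (naive string replacement).

    Assumes the entity strings do not overlap or contain one another.
    """
    marked = sentence
    for entity in sorted(set(entities), key=len, reverse=True):
        marked = marked.replace(entity, f"@@{entity}##")
    return marked


def extract_entities(marked_sentence):
    """Recover the entity strings from a marked sentence."""
    return ENTITY_PATTERN.findall(marked_sentence)


def build_prompt(entity_type, demonstrations, sentence):
    """Assemble a few-shot prompt for ONE entity type.

    The instruction wording is hypothetical, not the paper's exact prompt.
    `demonstrations` is a list of (sentence, [entity strings]) pairs.
    """
    lines = [
        f"Task: mark every {entity_type} entity in the input sentence "
        "with @@ and ##."
    ]
    for text, entities in demonstrations:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {mark_entities(text, entities)}")
    lines.append(f"Input: {sentence}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_prompt(
    "location",
    [("She flew to France", ["France"])],
    "He lives in Peru",
)
```

Because each prompt targets a single entity type, detecting both locations and persons would mean calling `build_prompt` once per type and merging the extracted entities afterwards.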

The authors used GPT-3 and conducted experiments on 4 different NER datasets. Unsurprisingly, supervised models continue to outperform GPT-NER in the fully supervised setting, as LLMs are usually seen as generalists. LLMs also suffer from hallucination, a phenomenon where LLMs generate text that is fabricated, incorrect, or nonsensical. The authors report that, in their case, the model tended to over-confidently mark non-entity words as named entities. To counteract hallucination, the authors propose a self-verification strategy: when the model says something is an entity, it is then asked a yes/no question to verify whether the extracted entity belongs to the specified type. This self-verification strategy further improves the model’s performance but does not yet close the performance gap with supervised methods.
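The self-verification step can be sketched as a second pass over the extracted candidates. The example below is a rough illustration under stated assumptions: the question wording is hypothetical, and `ask_llm` stands for any function that sends a prompt to a model and returns its text answer. A stub replaces the real model so the snippet runs on its own.

```python
from typing import Callable, List


def build_verification_prompt(sentence: str, candidate: str, entity_type: str) -> str:
    """Build a yes/no question about one extracted candidate.

    The wording is an assumption for illustration, not the paper's prompt.
    """
    return (
        f"Sentence: {sentence}\n"
        f'Is "{candidate}" in the sentence a {entity_type} entity? '
        "Answer yes or no."
    )


def self_verify(
    sentence: str,
    candidates: List[str],
    entity_type: str,
    ask_llm: Callable[[str], str],
) -> List[str]:
    """Keep only the candidates the model confirms with a 'yes'."""
    verified = []
    for candidate in candidates:
        answer = ask_llm(build_verification_prompt(sentence, candidate, entity_type))
        if answer.strip().lower().startswith("yes"):
            verified.append(candidate)
    return verified


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; it only confirms the candidate 'Paris'."""
    return "yes" if '"Paris"' in prompt else "no"


kept = self_verify("Hilton stayed in Paris", ["Hilton", "Paris"], "location", fake_llm)
```

In a real setup, `fake_llm` would be replaced by a call to the model used for extraction. Each verification is an extra query, so the cost grows with the number of candidates.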

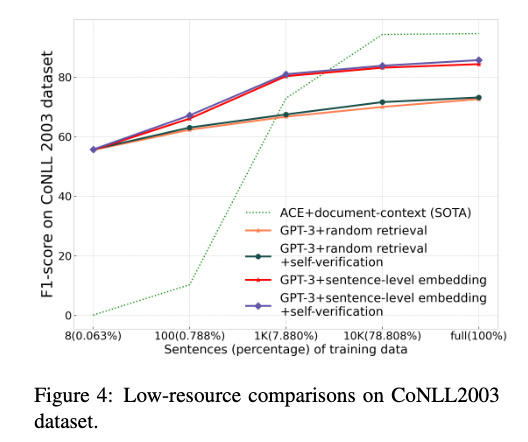

A fascinating point from this paper is that GPT-NER exhibits impressive proficiency in low-resource and few-shot NER setups. The figure below shows that the supervised model performs far worse than GPT-3 when the training set is very small.

GPT-NER vs supervised methods in a low-resource setting on a dataset (Shuhe Wang et al.)

That seems to be very promising. Does this mean the answer ends here? Not at all. Details in the paper reveal a few things about the GPT-NER methodology that might not seem obvious at first glance.

Much of the paper focuses on how to select the few examples from the training dataset to include in the LLM prompt (the authors call these “few-shot demonstration examples”). This differs from a true few-shot setting: GPT-NER selects its demonstrations from a large training set, whereas a true few-shot setting has only a few training examples available, so we aren’t spoiled for choice. In addition, the best demonstration retrieval method uses a fine-tuned NER model. All this suggests that an apples-to-apples comparison is needed but was not made in this paper. A benchmark should be created in which the best few-shot method and pure-LLM methods are compared on the same (few) training examples, using datasets like Few-NERD.
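To make the role of the demonstration pool concrete, here is a small sketch of similarity-based retrieval. It swaps in a plain bag-of-words cosine similarity as a stand-in: the paper’s retrieval relies on learned representations (including those of a fine-tuned NER model), which this example does not reproduce. All function names are hypothetical.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Lowercased whitespace tokens with their counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_demonstrations(query, pool, k=2):
    """Return the k pool sentences most similar to the query."""
    query_vec = bag_of_words(query)
    ranked = sorted(
        pool,
        key=lambda sentence: cosine(query_vec, bag_of_words(sentence)),
        reverse=True,
    )
    return ranked[:k]


pool = [
    "The bank raised interest rates",
    "She moved to Berlin last year",
    "He moved to Madrid last month",
]
top = retrieve_demonstrations("They moved to Lisbon last week", pool, k=2)
```

With a large labeled pool, a retrieval step like this can usually find close matches for each test sentence. With only a handful of labeled examples, as in a true few-shot setting, there is little to choose from, which is why the two settings are not directly comparable.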

That being said, it’s still fascinating that LLM-based methods like GPT-NER can achieve performance nearly comparable to SOTA NER methods.

Due to their popularity, OpenAI’s GPT series models, such as the GPT-3 series (davinci, text-davinci-001), have been the main focus of initial studies. In a paper titled “A Comprehensive Capability Analysis of GPT-3 and GPT-3.5 Series Models” that was first published in March 2023, Ye et al. claimed that while GPT-3 and ChatGPT achieve the best performance over 6 different NER datasets among the OpenAI GPT series models in the zero-shot setting, performance varies in the few-shot setting (1-shot and 3-shot), i.e. there is no clear winner.

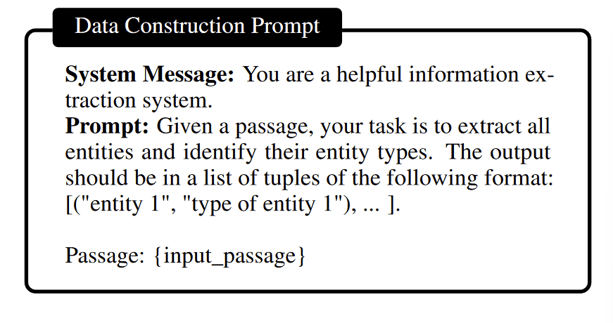

Previous studies have presented a variety of prompting methods. Zhou et al., however, take a different approach based on targeted distillation. Instead of simply applying an LLM as is to the NER task via prompting, they train a smaller model, called a student, that aims to replicate the capabilities of a generalist language model on a specific task (in this case, named entity recognition).

A student model is created in two main steps. First, they take samples of a large text dataset and use ChatGPT to find named entities in these samples and identify their types. Then these automatically annotated data are used as instructions to fine-tune a smaller, open-source LLM. The authors name this method “mission-focused instruction tuning”. This way, the smaller model learns to replicate the capabilities of the stronger model which has more parameters. The new model only needs to perform well on a specific class of tasks, so it can actually outperform the model it learned from.
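The second step, turning automatically annotated passages into instruction-tuning data, might look roughly like the sketch below. The record layout (`instruction` and `output` fields, one question per entity type) is an assumption chosen for illustration and is not claimed to be the authors’ exact format.

```python
import json


def to_instruction_examples(passage, annotations):
    """Convert teacher annotations into per-type instruction records.

    `annotations` is a list of (mention, entity_type) pairs, e.g. as produced
    by prompting a teacher LLM. The output schema is hypothetical.
    """
    mentions_by_type = {}
    for mention, entity_type in annotations:
        mentions_by_type.setdefault(entity_type, []).append(mention)

    examples = []
    for entity_type, mentions in mentions_by_type.items():
        examples.append(
            {
                "instruction": (
                    f"Text: {passage}\n"
                    f"What describes {entity_type} in the text?"
                ),
                # The student is trained to emit the mentions as a JSON list.
                "output": json.dumps(mentions),
            }
        )
    return examples


records = to_instruction_examples(
    "Marie Curie worked in Paris.",
    [("Marie Curie", "person"), ("Paris", "location")],
)
```

Records like these can then be fed to any standard supervised fine-tuning pipeline for the smaller open-source model. The quality of the student depends directly on the quality of the teacher’s annotations.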

Prompting an LLM to generate entity mentions and their types (Zhou et al.)

This methodology enabled Zhou et al. to significantly outperform ChatGPT and a few other LLMs in NER.

Instead of few-shot NER, the authors focused on open-domain NER, which is a sub-task of NER that works across a wide variety of domains. This direction of research has proven to be an interesting exploration of the applications of GPT models and instruction tuning. The paper’s experiments show promising results, suggesting that targeted distillation could change how we approach NER tasks and improve the efficiency and precision of NER systems.

At the same time, there have been efforts focused on using open-source LLMs, which offer more transparency and options for experimentation. For example, Li et al. have recently proposed to leverage the internal representations within a large language model (specifically, LLaMA-2) and supervised fine-tuning to create better NER and text classification models. The authors claim to achieve state-of-the-art results on the CoNLL-2003 and OntoNotes datasets. Such extensions and modifications are only possible with open-source models, and it is a promising sign that they have been getting more attention and may also be extended to few-shot NER in the future.

Few-shot NER using LLMs is still a relatively unexplored field, with several emerging trends and open questions. For instance, ChatGPT is still commonly used, but given the emergence of other proprietary and open-source LLMs, this could shift in the future. The answers to these questions might not just shape the future of NER, but also have a considerable impact on the broader field of machine learning.

Try out one of the LLMs on the Clarifai platform today. We also have a full blog post on how to Compare Top LLMs with LLM Battleground.

