
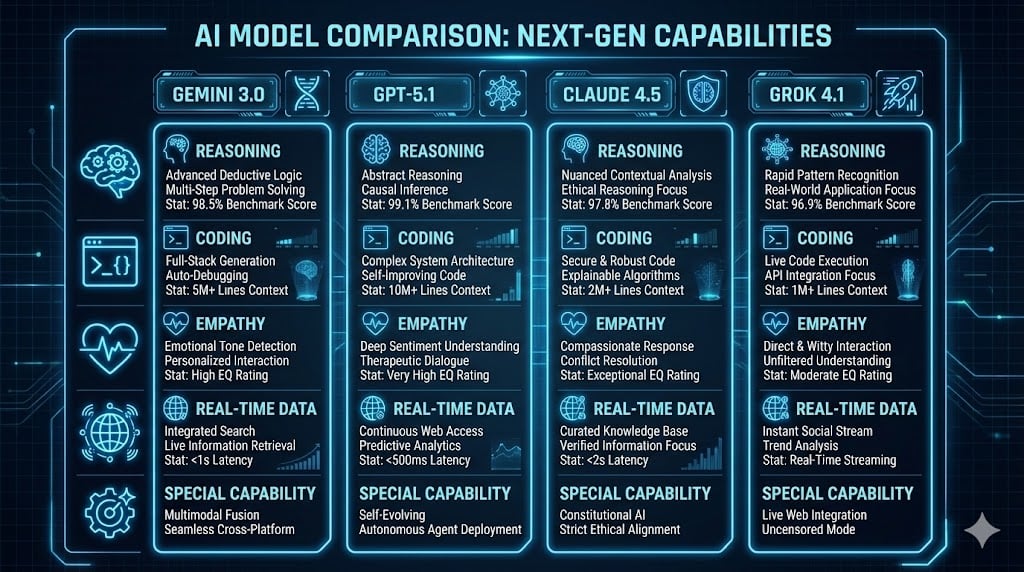

Artificial intelligence is changing faster than most people can keep up with. By late 2025, a new generation of large language models (LLMs) has appeared that pushes the boundaries of reasoning, context memory and emotional intelligence. Google’s Gemini 3.0 Pro, OpenAI’s GPT‑5.1, Anthropic’s Claude Sonnet 4.5 and xAI’s Grok 4.1 represent the cutting edge. Each model was designed to excel at a different kind of task: reasoning for Gemini, adaptability for GPT‑5.1, coding for Claude and empathy for Grok. The choice of model now profoundly shapes what you can build.

This article provides a clear, research‑backed comparison of these models, explains where Clarifai’s orchestration platform fits in and helps you pick the right AI companion. We draw on independent benchmarks, official announcements and expert commentary, and we incorporate practical examples and creative analogies to make complex ideas easy to grasp. The result is a human‑centred guide for developers, product managers and decision‑makers looking to harness AI safely and effectively.

| Question | Answer |
| --- | --- |
| Why is Gemini 3.0 in the spotlight? | It leads the field in reasoning and multimodal understanding. Gemini 3.0 broke the 1 500 Elo barrier on LMArena, scored record marks on Humanity’s Last Exam and ARC‑AGI‑2, and offers a 1 million‑token context window. |
| What sets GPT‑5.1 apart? | OpenAI introduced Instant and Thinking modes: Instant is fast and expressive; Thinking is slower but deeper, reaching up to 196 K tokens. It also adds safe automation tools like apply_patch and shell for controlled code execution. |
| Why is Claude 4.5 called the coding specialist? | Its 200 K token context plus memory and context‑editing tools enable long‑running coding or research tasks. Claude leads verified bug‑fixing benchmarks like SWE‑Bench with a 77.2 % score. |
| What makes Grok 4.1 unique? | Grok blends a 2 M token context with training on emotional intelligence, giving it high EQ Bench scores and the ability to respond empathetically. It also integrates real‑time retrieval for up‑to‑date information. |
| Where does Clarifai help? | Clarifai’s platform orchestrates these models. It routes queries based on complexity and cost, grounds answers using vector search and caches responses to reduce token usage. |

The latest generation of LLMs marks a paradigm shift. Prior models acted primarily as text predictors; the new ones serve as agents that can plan, reason and operate tools. The names may be catchy, but the technology behind them is serious. Let’s unpack what distinguishes each model.

Gemini 3.0 Pro is built for complex thinking. It uses native multimodality, meaning it processes text, images and video in a unified architecture. This cross‑modal integration lets it understand charts, photos and code simultaneously, which is invaluable for research and design. Gemini offers a Deep Think mode: by allocating more computation time per query, the model produces more nuanced answers. On Humanity’s Last Exam, a challenging test spanning philosophy, engineering and the humanities, Gemini scores 37.5 % in standard mode and 41 % with Deep Think. On ARC‑AGI‑2, which assesses abstract visual reasoning, its Deep Think score climbs to 45.1 %, more than double GPT‑5.1’s 17.6 %.

Gemini’s 1 M‑token context allows it to process huge documents or code bases without losing track of earlier sections. This is ideal for legal analysis, scientific research or summarizing multi‑chapter reports. Antigravity, Google’s agentic interface, hooks the model into an editor, terminal and browser, letting it search, write code and navigate files from within a single conversation. However, this tight integration with Google infrastructure may create vendor lock‑in for organizations using other cloud providers.


GPT‑5.1 is the latest iteration of ChatGPT. It introduces a dual‑mode system—Instant and Thinking. Instant mode is optimized for warm, personable answers and rapid brainstorming, while Thinking mode leans into deeper reasoning with context windows up to 196 K tokens. An Auto router can switch between these modes seamlessly, balancing speed and depth.

What makes GPT‑5.1 attractive to developers is its tool integration. The apply_patch function allows the model to generate unified diffs and apply them to code; shell runs commands in a sandbox, enabling safe unit tests or builds. Prompt caching saves state for up to a day, so long conversations don’t require re‑sending earlier context, reducing cost and latency.
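To make the idea of a unified diff concrete, here is a minimal sketch that builds one with Python's standard `difflib` module. It does not call OpenAI's API, and the request format of the real `apply_patch` tool is not shown in this article; the helper name `make_unified_diff` and the file name `calc.py` are assumptions made purely for illustration.

```python
import difflib


def make_unified_diff(old: str, new: str, path: str) -> str:
    """Return a unified diff that turns `old` into `new` for the file at `path`."""
    diff = difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)


# Hypothetical one-line bug fix: subtraction where addition was intended.
old_source = "def add(a, b):\n    return a - b\n"
new_source = "def add(a, b):\n    return a + b\n"

patch = make_unified_diff(old_source, new_source, "calc.py")
print(patch)
```

The printed patch marks the removed line with `-` and the added line with `+`, which is the kind of compact, reviewable change a patching tool can apply and a human can inspect before it lands.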

In benchmarks, GPT‑5.1 performs respectably across the board: it scores around 31.6 % on Humanity’s Last Exam and high 80s on GPQA Diamond (a PhD‑level science test). It achieves 100 % on AIME (math contest) when allowed to execute code but drops to about 71 % without tools. These numbers show strong reasoning when combined with tool execution.


Anthropic’s Claude Sonnet 4.5 positions itself as a coding and research powerhouse. Its 200 K token context means the model can ingest entire codebases or technical books. It supplements this with context editing and memory tools: Claude can automatically prune stale data when it approaches token limits and store information in external memory files for retrieval across sessions. These features allow Claude to run for hours on a single prompt, a capability that no other mainstream model matches.
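The pruning-plus-memory pattern described above can be sketched in a few lines. This is not Anthropic's implementation or API: the function names, the JSON memory file and the one-token-per-word estimate are all assumptions chosen to keep the example self-contained.

```python
import json
import tempfile
from pathlib import Path


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly one token per whitespace-separated word.
    return len(text.split())


def prune_context(messages, limit, memory_path):
    """Drop the oldest messages until the total fits `limit`.

    Dropped messages are appended to a JSON file so they can be looked up later.
    """
    kept = list(messages)
    archived = []
    while kept and sum(estimate_tokens(m) for m in kept) > limit:
        archived.append(kept.pop(0))  # the oldest message goes first
    if archived:
        existing = json.loads(memory_path.read_text()) if memory_path.exists() else []
        memory_path.write_text(json.dumps(existing + archived))
    return kept


with tempfile.TemporaryDirectory() as tmp:
    memory_file = Path(tmp) / "memory.json"
    history = [
        "old build log " * 3,          # 9 words
        "design notes for parser",     # 4 words
        "latest failing test output",  # 4 words
    ]
    kept = prune_context(history, limit=10, memory_path=memory_file)
    archived_count = len(json.loads(memory_file.read_text()))

print(len(kept), archived_count)
```

A production system would use the model's real tokenizer and would decide what is stale by relevance rather than age alone; the oldest-first rule here is only the simplest possible policy.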

Benchmarks support this specialization. Claude achieves 77.2 % on SWE‑Bench Verified, beating Gemini and GPT‑5 for real‑world bug fixes. On OSWorld, which measures how well a model completes real computer‑use tasks in a desktop environment, it scores 61.4 %, again leading the pack. However, Claude can occasionally produce superficial or buggy code when pushed beyond typical workloads; pairing it with unit tests and human review is wise.


xAI’s Grok 4.1 is the outlier in this group. Instead of pure logic, Grok focuses on emotional intelligence (EQ) and real‑time information. It trains on human preference data to deliver empathetic responses, achieving high EQ Bench scores (around 1 586 Elo). Grok’s 2 M‑token context window is the largest among these models, allowing it to track extended conversations or huge documents. It integrates real‑time browsing to fetch current events or social‑media trends.

Grok excels at creative writing and companionship tasks. However, it sometimes fails simple logic questions (e.g., comparing the weight of bricks and feathers). Its output should be double‑checked for factual accuracy, especially on technical topics.


Benchmarks help quantify each model’s strengths. The table below consolidates key metrics from independent evaluations and official releases (numbers rounded for clarity). Note: always consider your own testing; benchmarks are proxies.

| Category | Gemini 3.0 Pro | GPT‑5.1 | Claude 4.5 | Grok 4.1 | Key Takeaway |
| --- | --- | --- | --- | --- | --- |
| Reasoning (Humanity’s Last Exam, ARC‑AGI‑2) | 37.5 % standard / 41 % Deep Think on HLE; 31.1 % standard / 45.1 % Deep Think on ARC‑AGI‑2 | ~31.6 % on HLE; 17.6 % on ARC‑AGI‑2 | mid‑20 % (HLE) | ~30 % (HLE) | Gemini dominates high‑level reasoning; GPT‑5.1 is competitive but behind |
| Coding & Bug Fixing (LiveCodeBench, SWE) | 2 439 Elo on LiveCodeBench; 76.2 % on SWE‑Bench | 2 243 Elo; 74.9 % on SWE | ~2 300 Elo; 77.2 % on SWE | ~79 % tasks solved | Claude leads bug fixing; Gemini leads algorithmic coding |
| Empathy (EQ Bench) | ~1 460 Elo (Gemini 2.5) | ~1 570 Elo | N/A | 1 586 Elo | Grok excels at empathy; GPT‑5.1 improved |
| Context & Cost | 1–2 M tokens; approx $2 in/$12 out per M tokens | 16–196 K tokens; approx $1.25 in/$10 out | 200 K tokens; approx $3 in/$15 out | 2 M tokens; approx $3 in/$15 out | Longer contexts increase cost; GPT‑5.1 is cheapest |

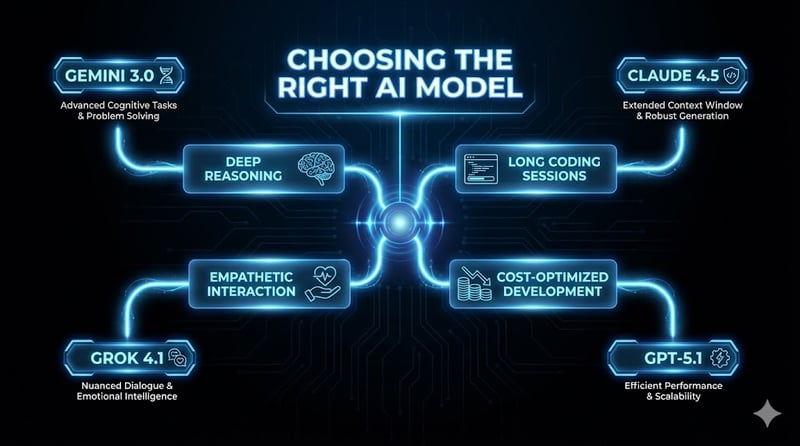

No single AI fits every job. Selecting the right model depends on task complexity, budget, safety and user experience. Let’s explore common scenarios and offer recommendations.

You don’t always need a full paragraph to decide which model to use. Here’s a condensed reference for common scenarios:

Agentic AI refers to models that can plan, execute and adapt to achieve goals. Here’s how each model supports agentic workflows.

Gemini’s Antigravity platform gives the model direct access to a development environment. It can open files, search the web, run commands and test code inside Google’s ecosystem. The Deep Think toggle instructs the model to allocate extra compute to complex tasks. Together, these features enable multi‑step research and software tasks with minimal human intervention.

GPT‑5.1’s apply_patch function lets it generate patch files, while shell executes commands in a sandbox. These tools are critical for building automated DevOps pipelines or letting the model compile and run code safely. Prompt caching further supports long conversations without repeated context.

Claude’s standout agentic features are context editing—it automatically removes irrelevant data to stay within token limits—and an external memory tool to store information persistently. Checkpoints allow you to roll back to earlier states if the model drifts. These capabilities let Claude run autonomously for hours, a game changer for research projects or large refactorings.

Grok doesn’t offer explicit agentic tools like patching or memory. Instead, it integrates real‑time browsing and a large context window, enabling it to fetch and synthesize current information in the middle of a conversation. For example, you could ask Grok to monitor social‑media trends over days and provide daily digests, something other models can only do with external tooling.

Clarifai’s platform wraps these capabilities into a single pipeline. It can route a user’s intent to the appropriate model, retrieve documents via vector search, cache results, and even run models on local hardware for compliance. For agentic workflows, this orchestration is critical: one pipeline might classify a query using a small GPT‑5 model, use Clarifai’s search to pull relevant data, send reasoning to Gemini, then use Claude for code generation and Grok for empathetic summarization.
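The routing and caching steps in such a pipeline can be sketched without any vendor SDK. Everything below is hypothetical: the keyword classifier stands in for a small intent model, the strings in `ROUTES` are illustrative labels rather than real API model identifiers, and the backends are stub functions, not Clarifai or provider calls.

```python
from typing import Callable, Dict

# Illustrative labels only; these are not real API model identifiers.
ROUTES = {
    "coding": "claude-sonnet-4.5",
    "empathy": "grok-4.1",
    "reasoning": "gemini-3.0-pro",
}


def classify_intent(query: str) -> str:
    """Toy keyword classifier; a real pipeline would use a small model here."""
    text = query.lower()
    if any(word in text for word in ("bug", "code", "refactor", "stack trace")):
        return "coding"
    if any(word in text for word in ("feel", "upset", "sorry", "worried")):
        return "empathy"
    return "reasoning"


class Router:
    """Route each query to one backend and cache replies by normalized query text."""

    def __init__(self, backends: Dict[str, Callable[[str], str]]):
        self.backends = backends
        self.cache: Dict[str, str] = {}
        self.calls = 0  # number of backend calls actually made

    def answer(self, query: str) -> str:
        key = query.strip().lower()
        if key in self.cache:
            return self.cache[key]  # cache hit: no tokens spent
        model = ROUTES[classify_intent(query)]
        self.calls += 1
        reply = self.backends[model](query)
        self.cache[key] = reply
        return reply


# Stub backends that echo the query with the chosen model's label.
backends = {name: (lambda q, n=name: f"[{n}] {q}") for name in ROUTES.values()}
router = Router(backends)

first = router.answer("Please fix this bug in my parser")
second = router.answer("please fix this bug in my parser")  # served from cache
print(first, router.calls)
```

Exact-match caching is the simplest policy; a real deployment might cache on semantic similarity instead, at the price of occasionally returning a stale or slightly mismatched answer.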

Cost influences model choice. GPT‑5.1 is the most affordable at around $1.25 per million input tokens and $10 for output. Gemini 3 Pro costs roughly $2 input/$12 output with search grounding available in a free tier. Claude 4.5 and Grok 4.1 are similar at $3 input/$15 output, reflecting their large contexts and specialized capabilities. Clarifai helps mitigate costs through caching, routing simple tasks to cheaper models and using local runners.
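To see what these prices mean per request, the short calculation below applies the approximate figures quoted above. The workload of 50,000 input tokens and 2,000 output tokens is an assumed example, and actual pricing may differ or change.

```python
# Approximate prices from this article, in USD per million tokens: (input, output).
PRICES = {
    "GPT-5.1": (1.25, 10.0),
    "Gemini 3 Pro": (2.0, 12.0),
    "Claude 4.5": (3.0, 15.0),
    "Grok 4.1": (3.0, 15.0),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request at the listed per-million-token prices."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


# Assumed workload: a long prompt (50,000 tokens) with a short answer (2,000 tokens).
for model in PRICES:
    print(f"{model}: ${request_cost(model, 50_000, 2_000):.4f}")
```

For this workload the request costs about $0.08 on GPT‑5.1, $0.12 on Gemini 3 Pro and $0.18 on Claude 4.5 or Grok 4.1. Input tokens dominate the bill here, which is why caching and trimming the prompt matter more than shortening the answer.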

Context windows matter because they define how much information a model can consider at once. Gemini and Grok lead with 1–2 M tokens. GPT‑5.1 offers a practical 16–196 K range. Claude sits at 200 K but extends via memory tools. Larger contexts allow long narratives, but they increase cost and risk data leakage. Use Clarifai to manage what goes into each model’s context through retrieval and summarization.
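One simple way to control what enters the context is to rank retrieved snippets by relevance and keep only those that fit a token budget. The sketch below assumes the relevance scores come from some upstream vector search and approximates tokens by word count; the function name `pack_snippets` and the sample snippets are hypothetical.

```python
def pack_snippets(snippets, budget):
    """Greedily keep the highest-scoring snippets whose combined size fits `budget`.

    `snippets` is a list of (relevance_score, text) pairs; size is estimated
    as the number of whitespace-separated words.
    """
    chosen, used = [], 0
    for score, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = len(text.split())  # crude token estimate
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen, used


# Made-up retrieval results for a contract question.
snippets = [
    (0.9, "clause 12 limits liability to direct damages"),
    (0.4, "the office is closed on public holidays"),
    (0.7, "clause 14 sets a thirty day notice period"),
]

chosen, used = pack_snippets(snippets, budget=16)
print(chosen, used)
```

With a budget of 16 the two contract clauses are kept and the low-relevance snippet is left out, so less text is billed and less unrelated data is exposed to the model.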

Hallucination, meaning confidently wrong answers, is a key challenge. Grok 4.1 cuts hallucinations from ~12 % to around 4 % after training improvements. GPT‑5.1 uses post‑training to reduce sycophancy and increase honesty. Gemini 3 demonstrates robust reasoning, which reduces pattern‑matching errors, though long contexts still pose privacy concerns. Claude 4.5 introduces safety filters across finance, law and medicine under Anthropic’s ASL‑3 (AI Safety Level 3) safeguards.

Clarifai provides evaluation dashboards to monitor hallucination rates, latency and cost. Retrieval‑augmented generation grounds outputs on trusted documents. A/B tests allow you to compare models on your actual workflows. Together, these tools help ensure safe and reliable deployment.
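The metrics behind such an A/B comparison are easy to compute once each response has been labeled. The sketch below is not Clarifai's dashboard API; the `Result` record, the model names and the sample data are invented for illustration, and the `hallucinated` label is assumed to come from human review or an automated check.

```python
from dataclasses import dataclass


@dataclass
class Result:
    model: str
    hallucinated: bool  # label from human review or an automated check
    latency_s: float


def summarize(results):
    """Return {model: (hallucination_rate, mean_latency_seconds)}."""
    grouped = {}
    for r in results:
        grouped.setdefault(r.model, []).append(r)
    return {
        model: (
            sum(r.hallucinated for r in rows) / len(rows),
            sum(r.latency_s for r in rows) / len(rows),
        )
        for model, rows in grouped.items()
    }


# Made-up evaluation records for two candidate models.
results = [
    Result("model-a", False, 1.0),
    Result("model-a", True, 3.0),
    Result("model-b", False, 2.0),
    Result("model-b", False, 4.0),
]

summary = summarize(results)
print(summary)
```

With only a handful of samples the rates are noisy, so a real comparison needs enough labeled responses per model from your own workload before the difference means anything.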

Modern applications often need more than one model. Clarifai advocates multi‑model orchestration. A typical pipeline combines several steps: intent classification (use a light GPT‑5 model to detect if a query is technical or emotional), retrieval and generation (pull relevant documents via Clarifai’s vector search and route responses to Gemini, Claude or Grok as appropriate), and monitoring (use Clarifai’s dashboards to track hallucination rates and user satisfaction).

The pace of AI innovation won’t slow down. Several trends are emerging:

Q1: Do I need to pick just one model?

A: Not anymore. The best results often come from combining models—use Clarifai to orchestrate them based on task type, cost and compliance needs.

Q2: Is GPT‑5.1 good enough for most tasks?

A: Yes, GPT‑5.1 strikes a good balance between cost, performance and availability. For everyday chat, coding or research, it may suffice. Use Gemini or Claude when deeper reasoning or longer context is required.

Q3: How do I handle privacy with huge context windows?

A: Avoid sending sensitive data directly. Use Clarifai’s retrieval to feed only relevant snippets to the model, and consider on‑prem or local runner deployments for regulated industries.

Q4: Can Grok be used for technical writing?

A: Grok excels at narrative and empathy but may produce factual errors. Combine it with a reasoning model or run retrieval checks before publishing.

Q5: Are these models available now?

A: Yes. Gemini 3.0, GPT‑5.1, Claude 4.5 and Grok 4.1 are available via APIs and platforms like Clarifai. Pricing and features may change, so always consult the latest documentation and tests.

There is no single “best” AI model. Each of the latest LLMs—Gemini 3.0, GPT‑5.1, Claude 4.5 and Grok 4.1—brings unique strengths. Gemini sets the standard for reasoning and multimodal understanding. GPT‑5.1 delivers versatile performance at a lower cost with developer‑friendly tools. Claude 4.5 excels at long‑horizon coding and research thanks to its 200 K context and memory systems. Grok brings empathy and real‑time data to the conversation.

The optimal strategy may involve mixing and matching these capabilities, often within the same workflow. Clarifai’s orchestration platform provides the glue that holds these diverse models together, letting you route requests, retrieve knowledge, and monitor performance. As you explore the possibilities, stay mindful of your budget, privacy constraints and the evolving ethics of AI. With the right combination of models and tools, you can build systems that are not only powerful but also responsible and human‑centric.

Developer advocate specializing in machine learning. Summanth works at Clarifai, where he helps developers get the most out of their ML efforts. He usually writes about compute orchestration, computer vision and new trends in AI and technology.


© 2026 Clarifai, Inc.