Horizontal vs. Vertical Scaling: Which Strategy Should You Choose?

Introduction

Scaling AI workloads is no longer optional—it's a necessity in a world where user expectations and data volumes are accelerating. Whether you are deploying a computer vision model at the edge or orchestrating large‑scale language models in the cloud, you must ensure your infrastructure can grow seamlessly. Vertical scaling (scale up) and horizontal scaling (scale out) are the two classic strategies for expansion, but many engineering teams struggle to decide which approach better suits their needs. As a market leader in AI, Clarifai often works with customers who ask, "How should we scale our AI models effectively without breaking the bank or sacrificing performance?"

This comprehensive guide explains the fundamental differences between vertical and horizontal scaling, highlights their advantages and limitations, and explores hybrid strategies to help you make an informed decision. We'll integrate insights from academic research, industry best practices and real‑world case studies, and we’ll highlight how Clarifai’s compute orchestration, model inference, and local runners can support your scaling journey.

Quick Digest

- Scalability is the ability of a system to handle increasing load while maintaining performance and availability. It’s vital for AI applications to support growth in data and users.

- Vertical scaling increases the resources (CPU, RAM, storage) of a single server, offering simplicity and immediate performance improvements but limited by hardware ceilings and single points of failure.

- Horizontal scaling adds more servers to distribute workload, improving fault tolerance and concurrency, though it introduces complexity and network overhead.

- Decision factors include workload type, growth projections, cost, architectural complexity and regulatory requirements.

- Hybrid (diagonal) scaling combines both approaches, scaling up until hardware limits are reached and then scaling out.

- Emerging trends: AI‑driven predictive autoscaling using hybrid models, Kubernetes Horizontal and Vertical Pod Autoscalers, serverless scaling, and green computing all shape the future of scalability.

Introduction to Scalability and Scaling Strategies

Quick Summary: What is scalability, and why does it matter?

Scalability refers to a system's capability to handle increasing load while maintaining performance, making it crucial for AI workloads that grow rapidly. Without scalability, your application may experience latency spikes or failures, eroding user trust and causing financial losses.

What Does Scalability Mean?

Scalability is the property of a system to adapt its resources in response to changing workload demands. In simple terms, if more users request predictions from your image classifier, the infrastructure should automatically handle the additional requests without slowing down. This is different from performance tuning, which optimises a system’s baseline efficiency but does not necessarily prepare it for surges in demand. Scalability is a continuous discipline, crucial for high‑availability AI services.

Key reasons for scaling include handling increased user load, maintaining performance and ensuring reliability. Research highlights that scaling helps support growing data and storage needs and ensures better user experiences. For instance, an AI model that processes millions of transactions per second demands infrastructure that can scale both in compute and storage to avoid bottlenecks and downtime.

Why Scaling Matters for AI Applications

AI applications often handle variable workloads, ranging from sporadic spikes in inference requests to continuous heavy training loads. Without proper scaling, these workloads may cause performance degradation or outages. According to a survey on hyperscale data centres, the combined use of vertical and horizontal scaling dramatically increases energy consumption. This means organisations must consider not only performance but also sustainability.

For Clarifai’s customers, scaling is particularly important because model inference and training workloads can be unpredictable, especially when models are integrated into third‑party systems or consumer apps. Clarifai’s compute orchestration features help users manage resources efficiently by leveraging auto‑scaling groups and container orchestration, ensuring models remain responsive even as demand fluctuates.

Expert Insights

- Infrastructure experts emphasise that scalability should be designed in from day one, not bolted on later. They warn that retrofitting scaling solutions often incurs significant technical debt.

- Research on green computing notes that combining vertical and horizontal scaling dramatically increases power consumption, highlighting the need for sustainability practices.

- Clarifai engineers recommend monitoring usage patterns and gradually introducing horizontal and vertical scaling based on application requirements, rather than choosing one approach by default.

Understanding Vertical Scaling (Scaling Up)

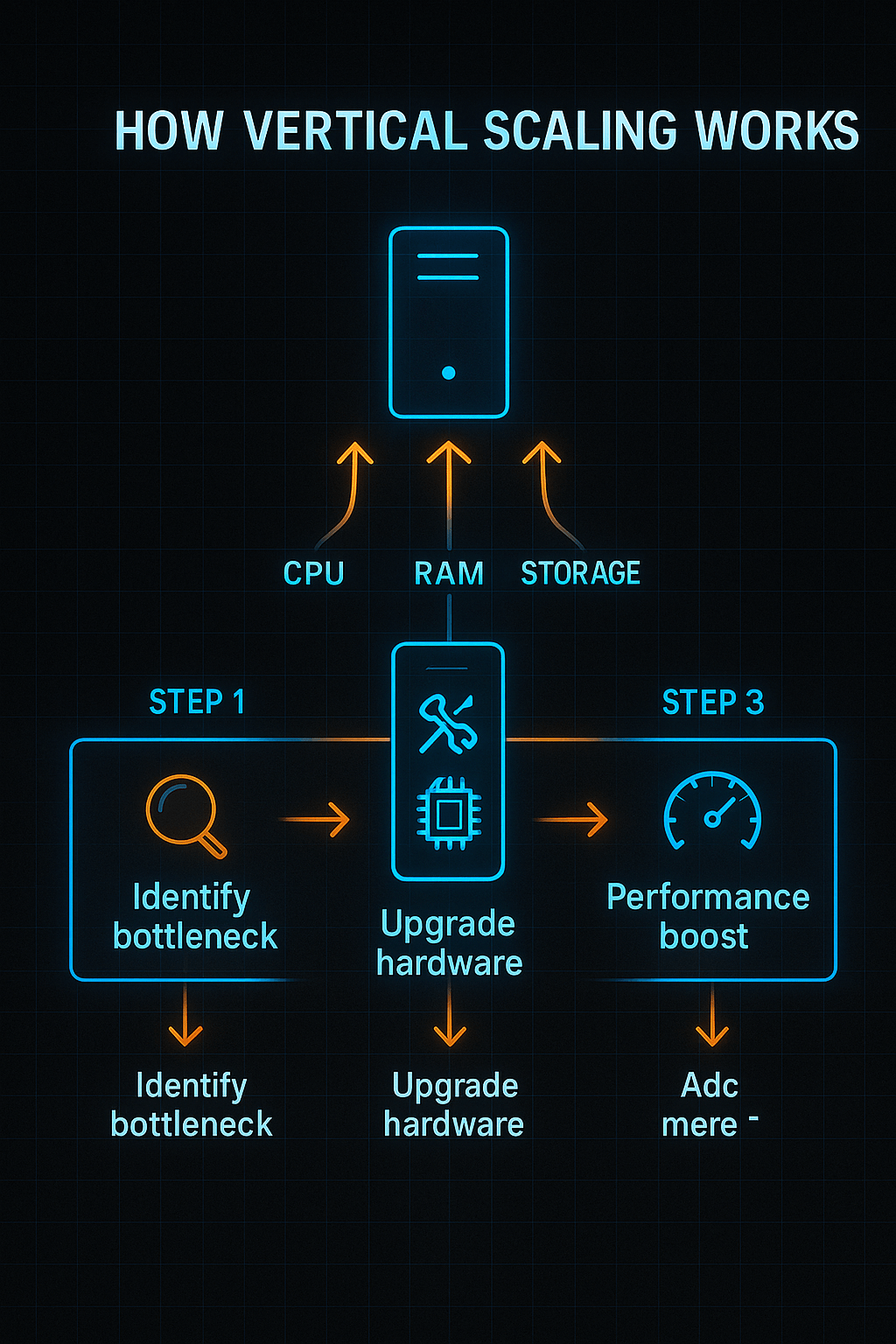

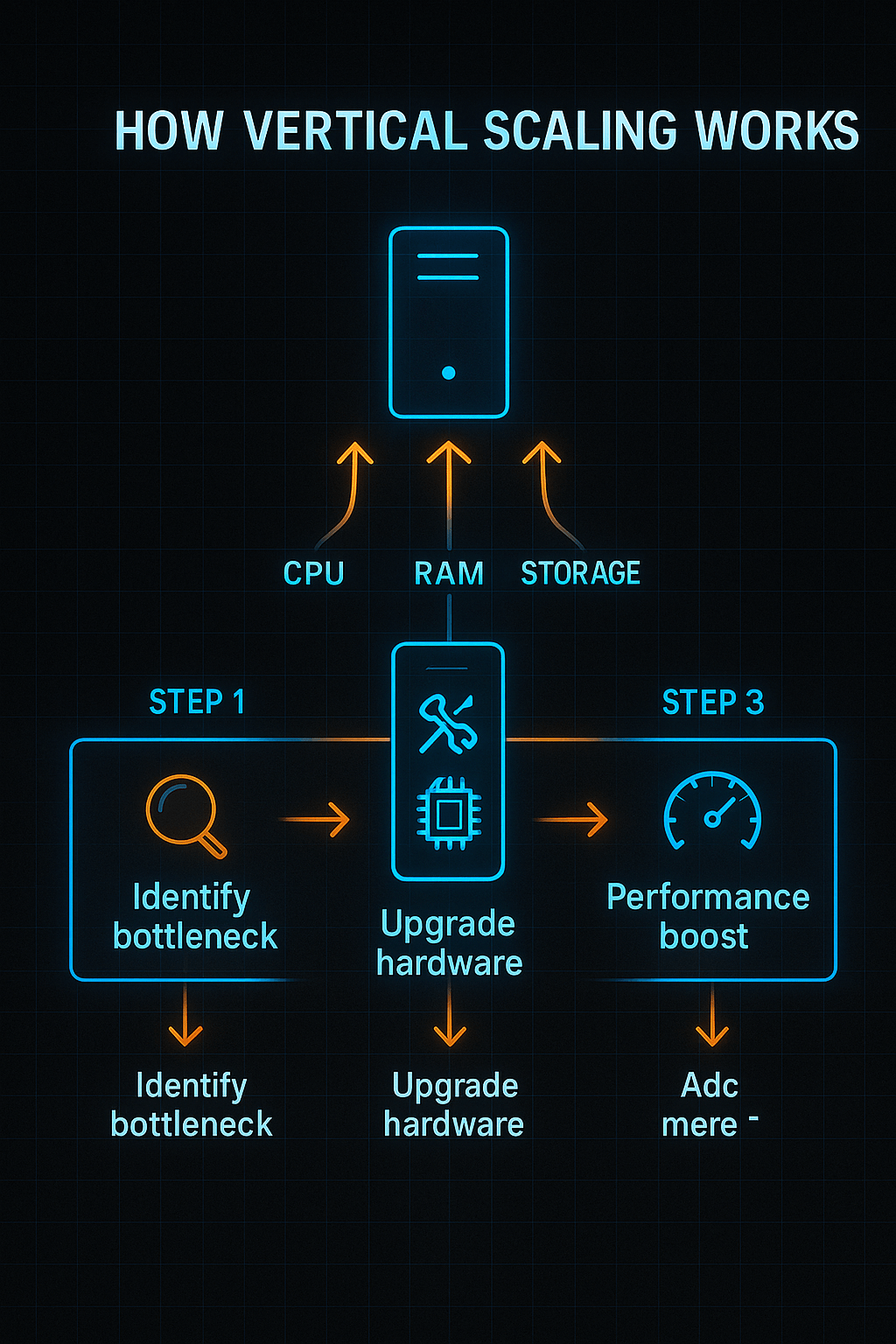

Quick Summary: What is vertical scaling?

Vertical scaling increases the resources (CPU, RAM, storage) of a single server or node, providing an immediate performance boost but eventually limited by hardware constraints and potential downtime.

What Is Vertical Scaling?

Vertical scaling, also known as scaling up, means augmenting the capacity of a single machine. You can add more CPU cores, increase memory, upgrade to faster storage, or move the workload to a more powerful server. For cloud workloads, this usually means resizing to a larger instance type, such as moving from a medium GPU instance to a high‑end multi‑GPU instance. (Spreading the work across a cluster of several GPU machines would instead be horizontal scaling.)

Vertical scaling is straightforward because it doesn’t require rewriting the application architecture. Database administrators often scale up database servers for quick performance gains; AI teams may move to GPUs with more memory when training large language models. Because you only upgrade one machine, vertical scaling preserves data locality and avoids network overhead, resulting in lower latency for certain workloads.

Advantages of Vertical Scaling

- Simplicity and ease of implementation: You don’t need to add new nodes or handle distributed systems complexity. Adding memory to the machine that hosts your local Clarifai model runner, for example, can yield immediate performance benefits.

- No need to modify application architecture: Vertical scaling keeps your single‑node design intact, which suits legacy systems or monolithic AI services.

- Faster interprocess communication: All components run on the same hardware, so there are no network hops; this can reduce latency for training and inference tasks.

- Better data consistency: Single‑node architectures avoid replication lag, making vertical scaling ideal for stateful workloads that require strong consistency.

Limitations of Vertical Scaling

- Hardware limitations: There’s a cap on the CPU, memory and storage you can add—known as the hardware ceiling. Once you reach the maximum supported resources, vertical scaling is no longer viable.

- Single point of failure: A vertically scaled system still runs on one machine; if the server goes down, your application goes offline.

- Downtime for upgrades: Hardware upgrades often require maintenance windows, leading to downtime or degraded performance during scaling operations.

- Cost escalation: High‑end hardware becomes disproportionately more expensive as you scale up; top‑tier GPUs or NVMe storage can strain budgets.

Real‑World Example

Imagine you’re training a large language model on Clarifai’s local runner. As the dataset grows, the training job runs out of memory and starts paging data to disk, so it becomes I/O bound. Vertical scaling might involve adding more RAM or upgrading to a GPU with more VRAM, so that more of the model’s parameters and training data fit in memory and training speeds up. However, once the hardware capacity is maxed out, you’ll need an alternative strategy, such as horizontal or hybrid scaling.
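Before paying for a larger GPU, it helps to check whether the model would fit at all. The sketch below is a rough, weights‑only estimate (parameters × bytes per parameter). It ignores activations, gradients and optimizer state, which make training need several times more memory, and the 20% headroom default is an arbitrary assumption.

```python
# Bytes per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weights_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed just to hold the model weights, in GB (1 GB = 1e9 bytes).

    Ignores activations, optimizer state and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def fits_on_gpu(num_params: float, gpu_memory_gb: float, dtype: str = "fp16",
                headroom: float = 0.2) -> bool:
    """True if the weights fit while leaving `headroom` (a fraction) of memory free.

    The 20% default headroom is an illustrative assumption.
    """
    return weights_memory_gb(num_params, dtype) <= gpu_memory_gb * (1 - headroom)

# A 7-billion-parameter model in fp16 needs about 14 GB for its weights alone.
print(weights_memory_gb(7e9, "fp16"))  # 14.0
print(fits_on_gpu(7e9, 16))            # False: 14 GB > 12.8 GB usable
print(fits_on_gpu(7e9, 24))            # True: 14 GB <= 19.2 GB usable
```

If the weights alone do not fit on the largest GPU you can buy, vertical scaling has hit its ceiling and the model has to be split across devices.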

Clarifai Product Integration

Clarifai’s local runners let you deploy models on‑premises or on edge devices. If you need more processing power for inference, you can upgrade your local hardware (vertical scaling) without changing the Clarifai API calls. Clarifai also provides high‑performance inference workers in the cloud; you can start with vertical scaling by choosing larger compute plans and then transition to horizontal scaling when your models require more throughput.

Expert Insights

- Engineers caution that vertical scaling provides diminishing returns: each successive hardware upgrade yields smaller performance improvements relative to cost. This is why vertical scaling is often a stepping stone rather than a long‑term solution.

- Database specialists emphasise that vertical scaling is ideal for transactional workloads requiring strong consistency, such as bank transactions.

- Clarifai recommends vertical scaling for low‑traffic or prototype models where simplicity and fast setup outweigh the need for redundancy.

Understanding Horizontal Scaling (Scaling Out)

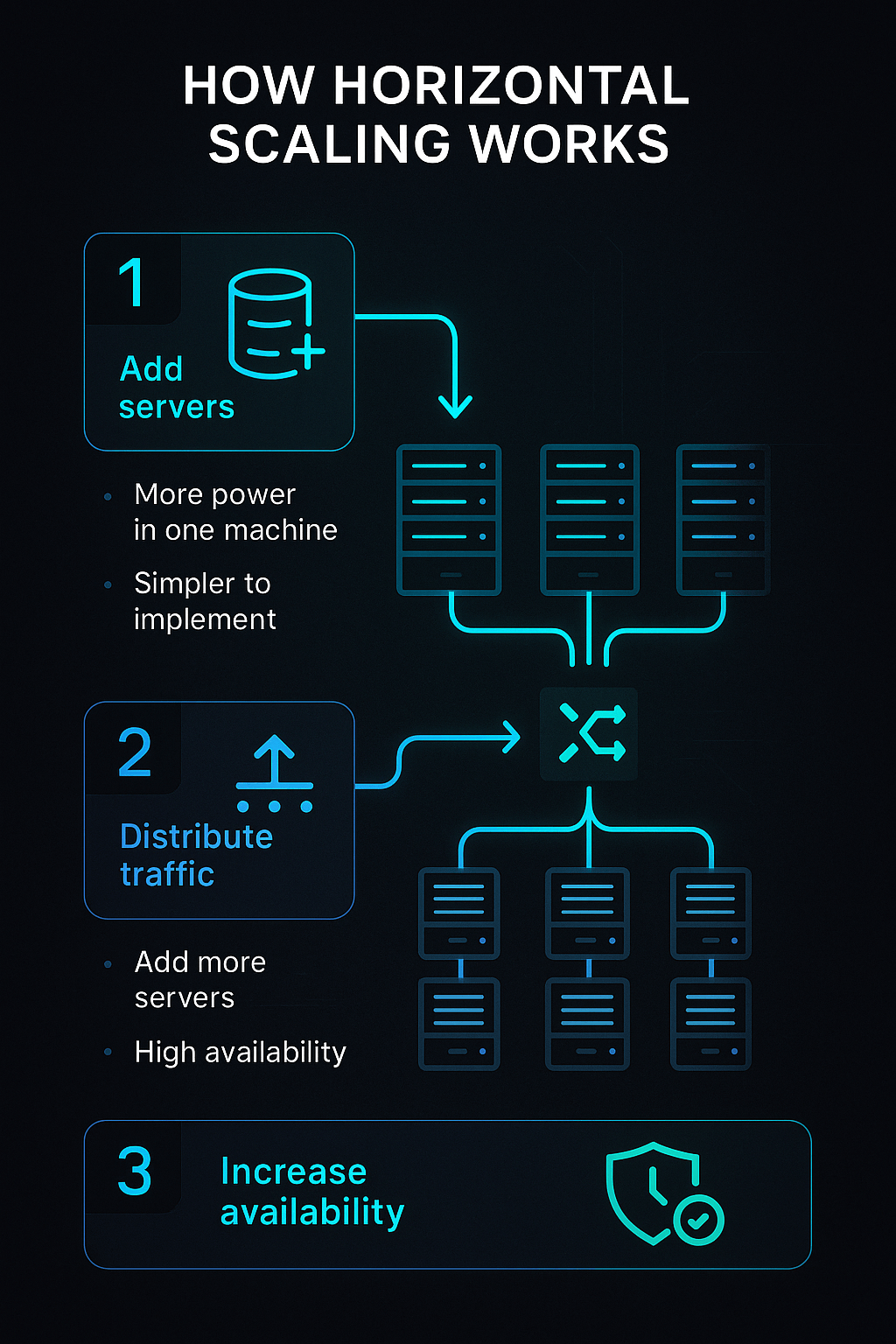

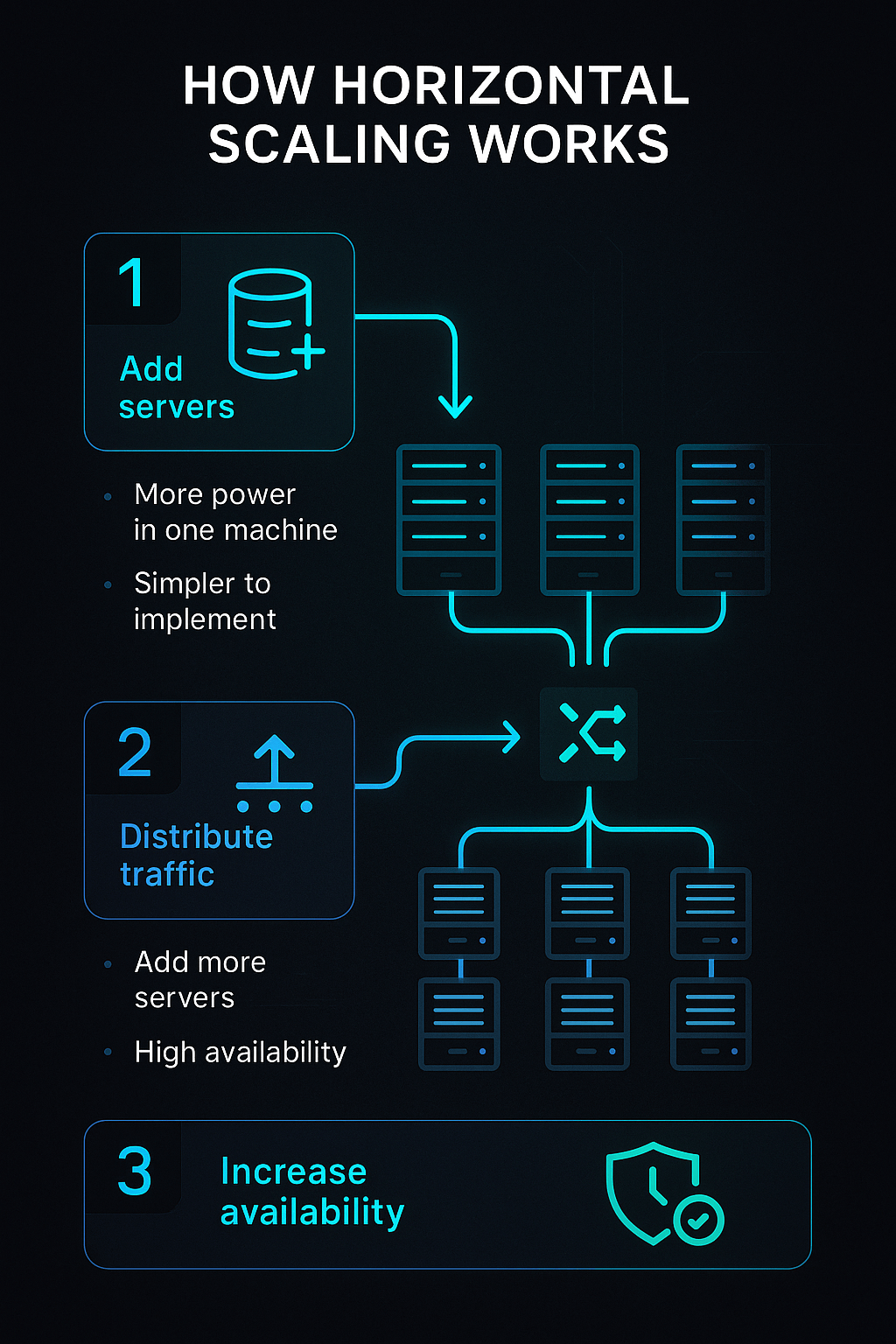

Quick Summary: What is horizontal scaling?

Horizontal scaling adds more servers or nodes to distribute workload, improving resilience and concurrency but increasing complexity.

What Is Horizontal Scaling?

Horizontal scaling, or scaling out, is the process of adding more machines to handle workload distribution. Instead of upgrading a single server, you replicate services across multiple nodes. For AI applications, this might mean deploying multiple inference servers behind a load balancer. Requests are distributed so that no single server becomes a bottleneck.
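To make the distribution step concrete, here is a minimal round‑robin balancer that also skips replicas marked unhealthy. It is an illustrative sketch, not how Clarifai or any particular load balancer is implemented, and the replica addresses are hypothetical.

```python
from itertools import cycle

class RoundRobinBalancer:
    """Minimal round-robin load balancer that skips unhealthy replicas.

    Replica addresses are hypothetical placeholders; production systems would
    use NGINX, HAProxy or a managed cloud load balancer instead.
    """

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self.healthy = set(self.replicas)
        self._ring = cycle(self.replicas)

    def mark_down(self, replica):
        self.healthy.discard(replica)

    def mark_up(self, replica):
        if replica in self.replicas:
            self.healthy.add(replica)

    def next_replica(self):
        # Try each replica at most once per call, skipping unhealthy ones.
        for _ in range(len(self.replicas)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy replicas available")

lb = RoundRobinBalancer(["inference-1:8080", "inference-2:8080", "inference-3:8080"])
lb.mark_down("inference-2:8080")  # simulate a failed node
print([lb.next_replica() for _ in range(4)])
# ['inference-1:8080', 'inference-3:8080', 'inference-1:8080', 'inference-3:8080']
```

Because the failed node is simply skipped, traffic keeps flowing to the remaining replicas, which is the fault‑tolerance property described below.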

When you scale out, you must manage tasks such as load balancing, sharding, data replication and service discovery, because your application components run across different machines. Horizontal scaling is fundamental to microservices architectures, container orchestration systems like Kubernetes and modern serverless platforms.

Benefits of Horizontal Scaling

- Near‑unlimited scalability: You can add more servers as needed, enabling your system to handle unpredictable spikes. Cloud providers make it easy to spin up instances and integrate them into auto‑scaling groups.

- Improved fault tolerance and redundancy: If one node fails, traffic is rerouted to others; the system continues running. This is crucial for AI services that must maintain high availability.

- Zero or minimal downtime: New nodes can be added without shutting down the system. This property allows continuous scaling during events like product launches or viral campaigns.

- Flexible cost management: You pay only for the instances you run, so compute costs track real demand more closely, but be mindful of network and management overhead.

Challenges of Horizontal Scaling

- Distributed system complexity: You must manage data consistency across nodes (often accepting eventual consistency), concurrent access and network latency. Orchestrating distributed components requires expertise.

- Higher initial complexity: Setting up load balancers, Kubernetes clusters or service meshes takes time. Observability tools and automation are essential to maintain reliability.

- Network overhead: Inter‑node communication introduces latency; you need to optimise data transfer and caching strategies.

- Cost management: Although horizontal scaling spreads costs, adding more servers can still be expensive if not managed properly.

Real‑World Example

Suppose you’ve deployed a computer vision API using Clarifai to classify millions of images per day. When a marketing campaign drives a sudden traffic spike, a single server cannot handle the load. Horizontal scaling involves deploying multiple inference servers behind a load balancer, allowing requests to be distributed across nodes. Clarifai’s compute orchestration can automatically start new containers when CPU or memory metrics exceed thresholds. When the load diminishes, unused nodes are gracefully removed, saving costs.
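The threshold‑driven behaviour described above can be sketched as a simple policy. The thresholds, the one‑replica step and the min/max bounds are hypothetical values chosen for illustration, not Clarifai's actual defaults. The gap between the two thresholds acts as a dead band, so small fluctuations do not trigger scaling.

```python
from dataclasses import dataclass

@dataclass
class ThresholdPolicy:
    scale_out_above: float = 0.75  # average CPU utilisation (0-1) that triggers scale-out
    scale_in_below: float = 0.30   # average CPU utilisation that triggers scale-in
    min_replicas: int = 1
    max_replicas: int = 20

    def desired_replicas(self, current: int, avg_cpu: float) -> int:
        """Return the replica count after one evaluation step."""
        if avg_cpu > self.scale_out_above:
            target = current + 1
        elif avg_cpu < self.scale_in_below:
            target = current - 1
        else:
            target = current  # inside the dead band: leave capacity alone
        return max(self.min_replicas, min(self.max_replicas, target))

policy = ThresholdPolicy()
print(policy.desired_replicas(current=4, avg_cpu=0.92))  # 5 (spike: scale out)
print(policy.desired_replicas(current=4, avg_cpu=0.50))  # 4 (steady)
print(policy.desired_replicas(current=1, avg_cpu=0.05))  # 1 (never below the minimum)
```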

Clarifai Product Integration

Clarifai’s multi‑node deployment capabilities integrate seamlessly with horizontal scaling strategies. You can run multiple inference workers across different availability zones, behind a managed load balancer. Clarifai’s orchestration monitors metrics and spins up or down containers automatically, enabling efficient scaling out. Developers can also integrate Clarifai inference into a Kubernetes cluster; using Clarifai’s APIs, the service can be distributed across nodes for higher throughput.

Expert Insights

- System architects highlight that horizontal scaling brings high availability: when one machine fails, the system remains operational.

- However, engineers warn that distributed data consistency is a major challenge; you may need to adopt eventual consistency models or consensus protocols to maintain data correctness.

- Clarifai advocates for a microservices approach, where AI inference is decoupled from business logic, making horizontal scaling easier to implement.

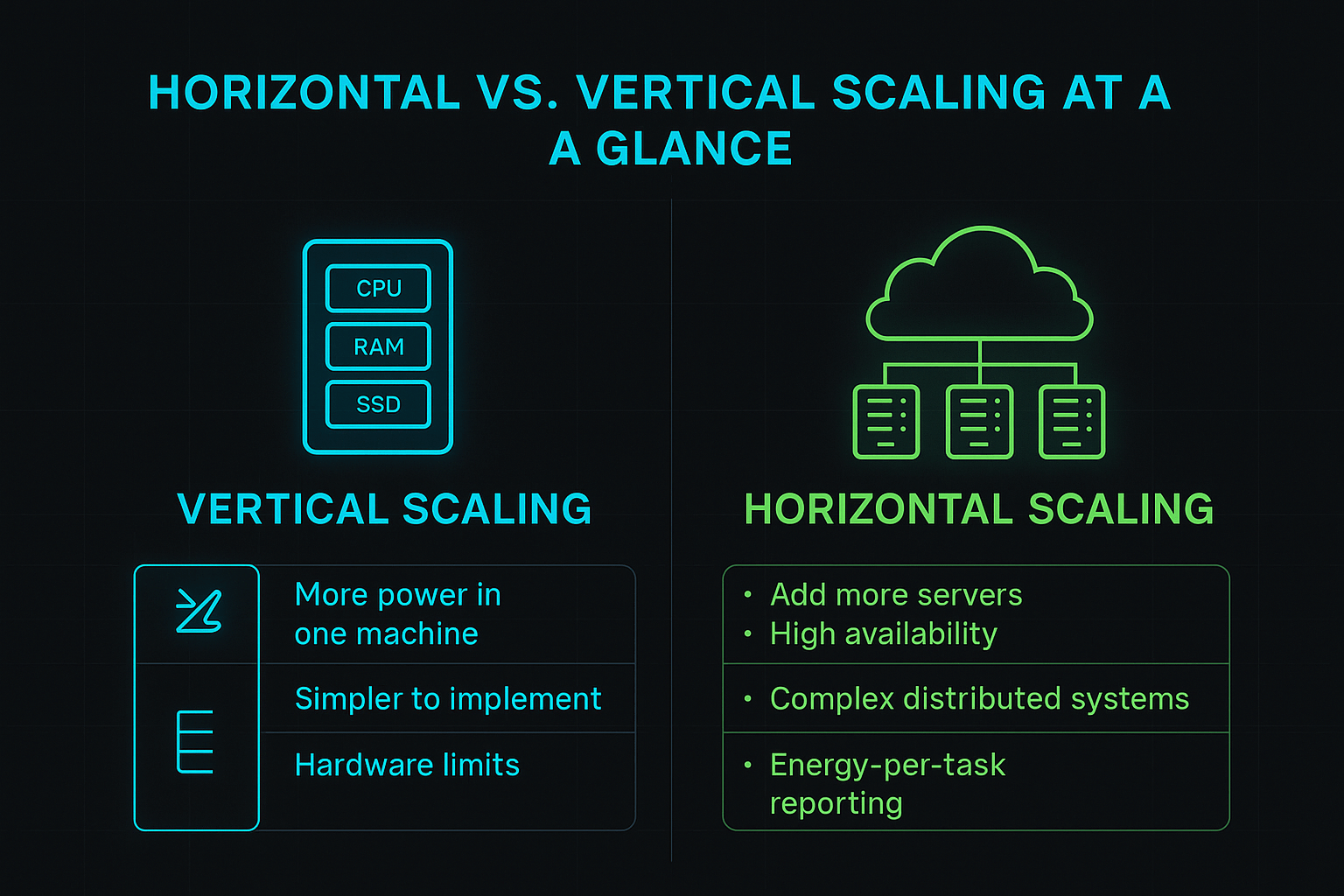

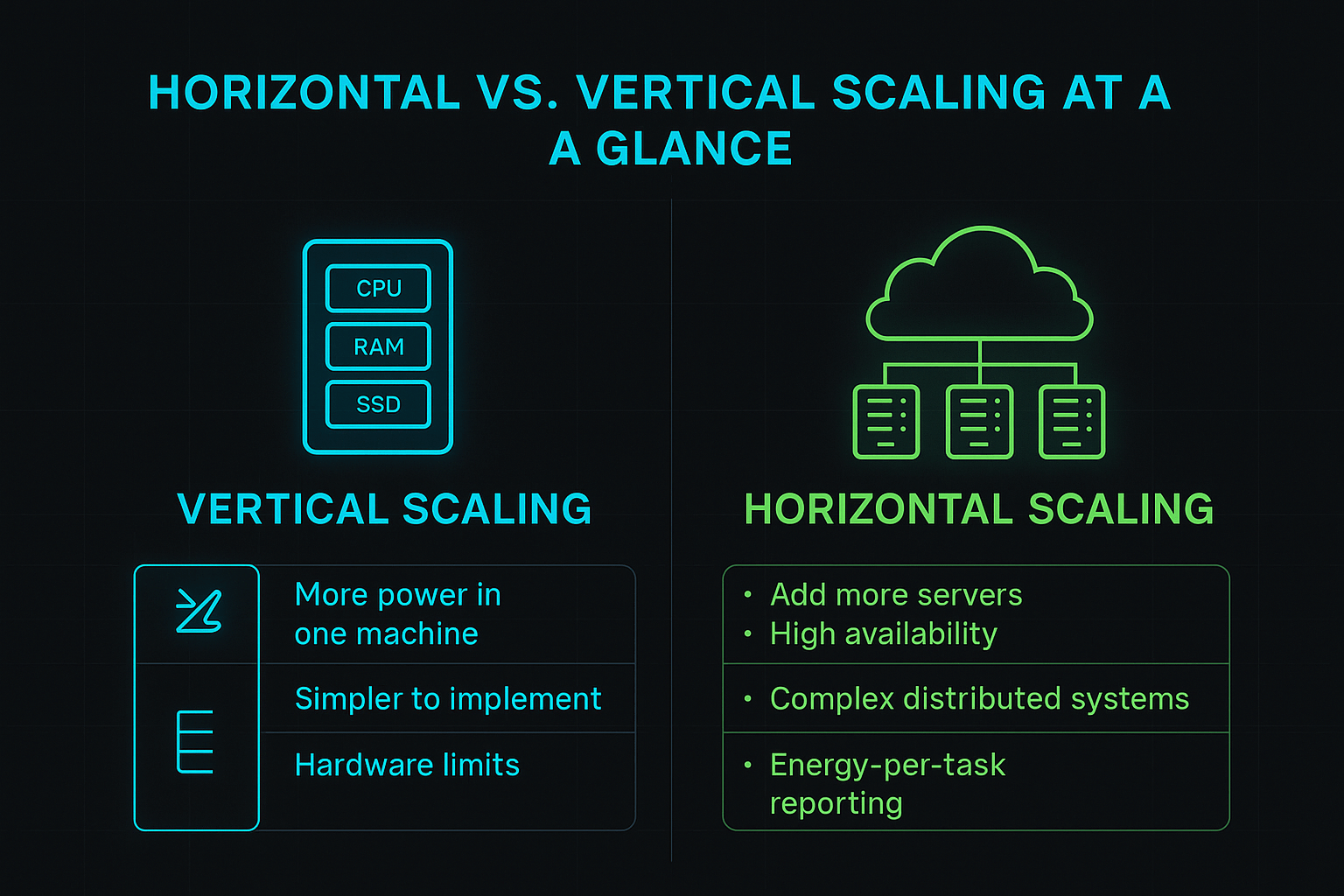

Comparing Horizontal vs Vertical Scaling: Pros, Cons & Key Differences

Quick Summary: How do horizontal and vertical scaling differ?

Vertical scaling increases resources of a single machine, while horizontal scaling distributes the workload across multiple machines. Vertical scaling is simpler but limited, whereas horizontal scaling offers better resilience and scalability at the cost of complexity.

Side‑by‑Side Comparison

To decide which approach suits your needs, consider the following key differences:

- Resource Addition: Vertical scaling upgrades an existing node (CPU, memory); horizontal scaling adds more nodes.

- Scalability: Vertical scaling is limited by hardware constraints; horizontal scaling offers near‑unlimited scalability by adding nodes.

- Complexity: Vertical scaling is straightforward; horizontal scaling introduces distributed system complexities.

- Fault Tolerance: Vertical scaling has a single point of failure; horizontal scaling improves resilience because failure of one node doesn’t bring down the system.

- Cost Dynamics: Vertical scaling might be cheaper initially but becomes expensive at high tiers; horizontal scaling spreads costs but requires orchestration tools and adds network overhead.

- Downtime: Vertical scaling often requires downtime for hardware upgrades; horizontal scaling typically allows on‑the‑fly addition or removal of nodes.

Pros and Cons

| Strategy | Pros | Cons |
|---|---|---|
| Vertical scaling | Simplicity, minimal architectural changes, strong consistency, lower latency | Hardware limits, single point of failure, downtime during upgrades, escalating costs |
| Horizontal scaling | High availability, elasticity, zero downtime, near‑unlimited scalability | Complexity, network latency, consistency challenges, management overhead |

Diagonal/Hybrid Scaling

Diagonal scaling combines both strategies. It involves scaling up a machine until further upgrades stop being economically efficient, then scaling out by adding more nodes. This approach allows you to balance cost and performance. For instance, you might scale up your database server to maximise performance and maintain strong consistency, then deploy additional stateless inference servers horizontally to handle surges in traffic. Startups in ridesharing and hospitality have adopted diagonal scaling, starting with vertical upgrades and then rolling out microservices to handle growth.

Clarifai Product Integration

Clarifai supports both vertical and horizontal scaling strategies, enabling hybrid scaling. You can choose larger inference instances (vertical) or spin up multiple smaller instances (horizontal) depending on your workload. Clarifai’s compute orchestration offers flexible scaling policies, including mixing on‑premises local runners with cloud‑based inference workers, enabling diagonal scaling.

Expert Insights

- Technical leads recommend starting with vertical scaling to simplify deployment, then gradually introducing horizontal scaling as demand grows and complexity becomes manageable.

- Hybrid scaling is particularly effective for AI services: you can maintain strong consistency for stateful components (e.g., model metadata) while horizontally scaling stateless inference endpoints.

- Clarifai’s experience shows that customers who adopt hybrid scaling enjoy improved reliability and cost efficiency, especially when using Clarifai’s orchestration to automatically manage horizontal and vertical resources.

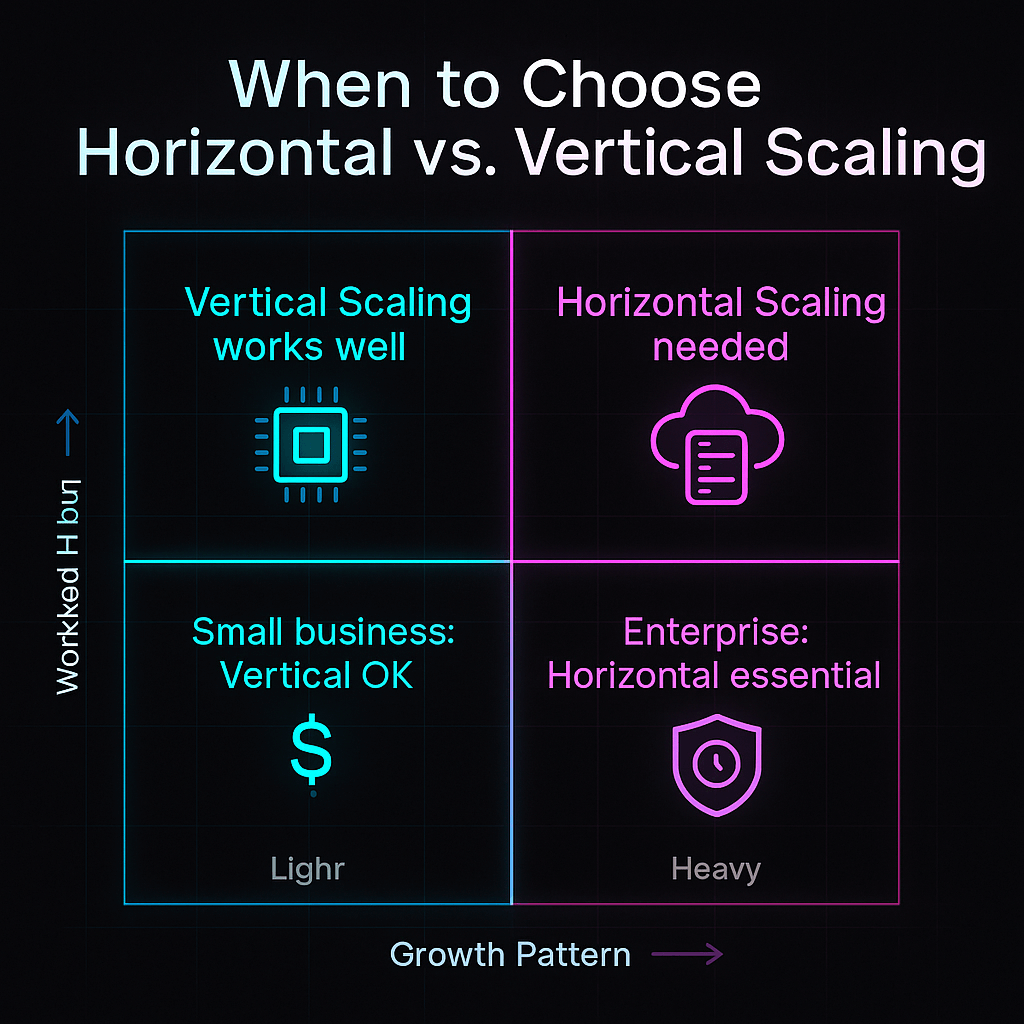

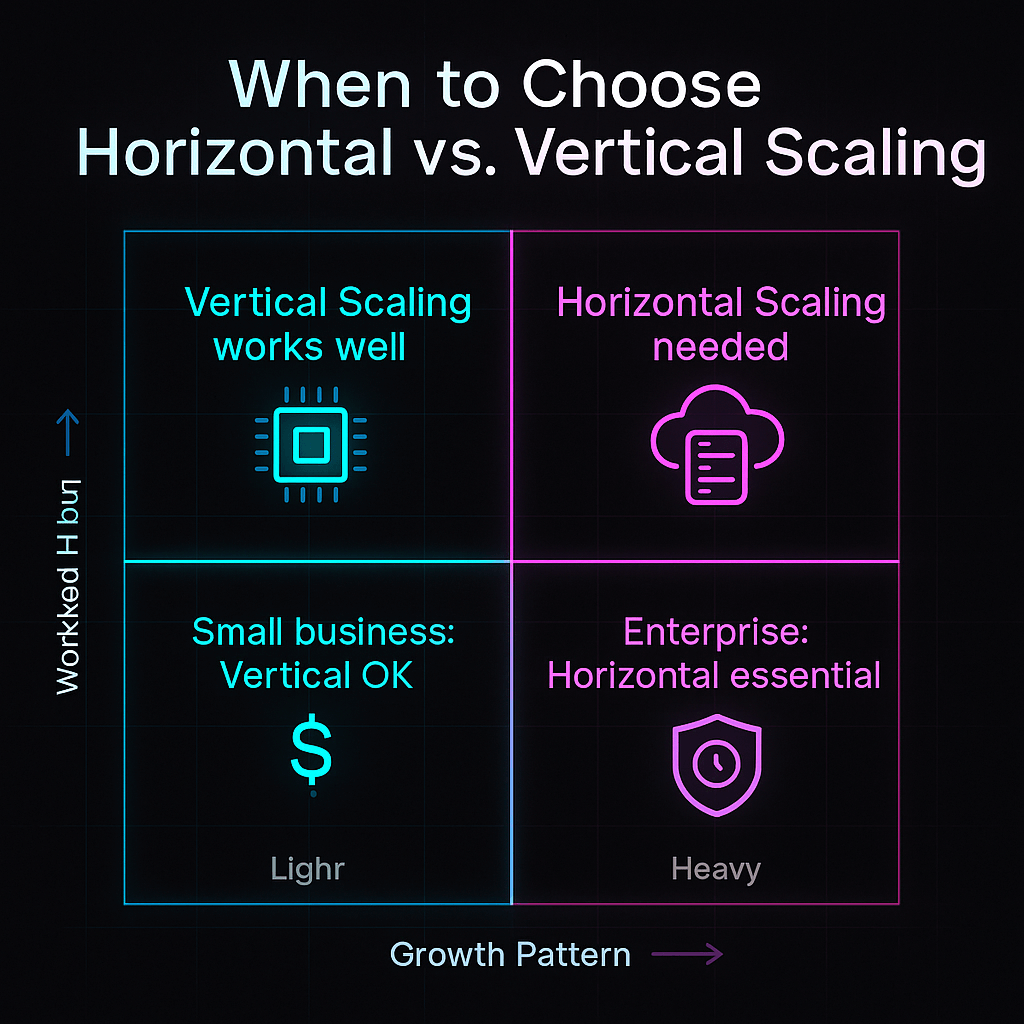

Decision Factors—How to Choose the Right Scaling Strategy

Quick Summary: How should you choose between horizontal and vertical scaling?

Choosing a scaling strategy depends on workload characteristics, growth projections, cost constraints, architectural complexity, and reliability requirements.

Key Decision Criteria

- Workload Type:

  - CPU‑bound or memory‑bound workloads (e.g., large model training) may benefit from vertical scaling initially, because more resources on a single machine reduce communication overhead.

  - Stateless or embarrassingly parallel workloads (e.g., image classification across many images) are suitable for horizontal scaling because requests can be distributed easily.

- Stateful vs. Stateless Components:

  - Stateful services (databases, model metadata stores) often require strong consistency, making vertical or hybrid scaling preferable.

  - Stateless services (API gateways, inference microservices) are ideal for horizontal scaling.

- Growth Projections:

  - If you anticipate exponential growth or unpredictable spikes, horizontal or diagonal scaling is essential.

  - For limited or steady growth, vertical scaling may suffice.

- Cost Considerations:

  - Compare capital expenditure (capex) for hardware upgrades vs. operational expenditure (opex) for running multiple instances.

  - Use cost optimisation tools to estimate the total cost of ownership over time.

- Availability Requirements:

  - Mission‑critical systems may require high redundancy and failover; horizontal scaling provides better fault tolerance.

  - Non‑critical prototypes may tolerate short downtime and can use vertical scaling for simplicity.

- Regulatory & Security Requirements:

  - Some industries require data to remain within specific geographies; vertical scaling on local servers may be necessary.

  - Horizontal scaling across regions must adhere to compliance frameworks.

Developing a Decision Framework

Create a decision matrix evaluating these factors for your application. Assign weights based on priorities—e.g., reliability may be more important than cost for a healthcare AI system. Clarifai’s customer success team often guides organisations through these decision matrices, factoring in model characteristics, user growth rates and regulatory constraints.
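A weighted decision matrix can be as small as a few dictionaries. The criteria, weights and 1‑to‑5 scores below are hypothetical placeholders for a reliability‑first system such as the healthcare example; replace them with your own assessment.

```python
# Hypothetical weights (must sum to 1) and 1-5 scores; replace with your own.
weights = {"reliability": 0.4, "cost": 0.2, "simplicity": 0.2, "growth_headroom": 0.2}
scores = {
    "vertical":   {"reliability": 2, "cost": 4, "simplicity": 5, "growth_headroom": 2},
    "horizontal": {"reliability": 5, "cost": 3, "simplicity": 2, "growth_headroom": 5},
    "hybrid":     {"reliability": 4, "cost": 4, "simplicity": 3, "growth_headroom": 4},
}

def weighted_scores(weights, scores):
    """Return each option's weighted score, rounded to two decimals."""
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"weights must sum to 1, got {total}")
    return {
        option: round(sum(w * option_scores[c] for c, w in weights.items()), 2)
        for option, option_scores in scores.items()
    }

result = weighted_scores(weights, scores)
best = max(result, key=result.get)
print(result)  # {'vertical': 3.0, 'horizontal': 4.0, 'hybrid': 3.8}
print(best)    # horizontal
```

Changing the weights can change the winner, which is why they should reflect the application's real priorities rather than defaults.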

Clarifai Product Integration

Clarifai’s management console provides insights into model usage, latency and throughput, enabling data‑driven scaling decisions. You can start with vertical scaling by selecting larger compute plans, then monitor metrics to decide when to scale horizontally using auto‑scaling groups. Clarifai also offers consulting services to help design scaling strategies tailored to your workloads.

Expert Insights

- Architects emphasise that a one‑size‑fits‑all strategy doesn’t exist; you should evaluate each component of your system separately and choose the appropriate scaling approach.

- Industry analysts recommend factoring in environmental impact—scaling strategies that reduce energy consumption while meeting performance goals can yield long‑term cost savings and align with corporate sustainability initiatives.

- Clarifai advises starting with thorough monitoring and profiling to understand bottlenecks before investing in scaling.

Implementation Strategies and Best Practices

Quick Summary: How do you implement vertical and horizontal scaling?

Vertical scaling requires upgrading hardware or selecting larger instances, while horizontal scaling entails deploying multiple nodes with load balancing and orchestration. Best practices include automation, monitoring and testing.

Implementing Vertical Scaling

- Hardware Upgrades: Add CPU cores, memory modules or faster storage. For cloud instances, resize to a larger tier. Because a resize or hardware swap usually requires a restart, schedule upgrades during maintenance windows so the downtime falls in low‑traffic periods.

- Software Optimisation: Adjust operating system parameters and allocate memory more efficiently. Fine‑tune frameworks (e.g., use larger GPU memory pools) to exploit the new resources.

- Virtualisation and Hypervisors: Ensure hypervisors allocate resources properly; consider using Clarifai’s local runner on an upgraded server to maintain performance locally.

Implementing Horizontal Scaling

- Load Balancing: Use reverse proxies or load balancers (e.g., NGINX, HAProxy) to distribute requests across multiple instances.

- Container Orchestration: Adopt Kubernetes or Docker Swarm to automate deployment and scaling. Use the Horizontal Pod Autoscaler (HPA) to adjust the number of pods based on CPU/memory metrics.

- Service Discovery: Use a service registry (e.g., Consul, etcd) or Kubernetes DNS to enable instances to locate each other.

- Data Sharding & Replication: For databases, shard or partition data across nodes; implement replication and consensus protocols to maintain data integrity.

- Monitoring & Observability: Use tools like Prometheus, Grafana or Clarifai’s built‑in dashboards to monitor metrics and trigger scaling events.

- Automation & Infrastructure as Code: Manage infrastructure with Terraform or CloudFormation to ensure reproducibility and consistency.
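For the sharding step, one widely used technique (swapped in here for illustration; the text does not prescribe a specific one) is consistent hashing. Unlike `hash(key) % N`, which remaps most keys when N changes, adding a shard to a consistent‑hash ring moves only about 1/N of the keys. The shard names are hypothetical, and MD5 is used only to spread keys evenly, not for security.

```python
import bisect
import hashlib

class ConsistentHashRing:
    """Maps keys to nodes so that adding a node moves only a fraction of keys."""

    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes   # virtual points per node smooth the distribution
        self._points = []      # sorted hash positions on the ring
        self._owner = {}       # hash position -> node name
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value: str) -> int:
        return int(hashlib.md5(value.encode()).hexdigest(), 16)

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            h = self._hash(f"{node}#{i}")
            bisect.insort(self._points, h)
            self._owner[h] = node

    def node_for(self, key: str) -> str:
        if not self._points:
            raise RuntimeError("ring is empty")
        # First point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect(self._points, self._hash(key)) % len(self._points)
        return self._owner[self._points[idx]]

ring = ConsistentHashRing(["shard-a", "shard-b", "shard-c"])
keys = [f"user-{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}
ring.add_node("shard-d")
moved = sum(before[k] != ring.node_for(k) for k in keys)
print(f"{moved} of {len(keys)} keys moved")  # typically around a quarter, all to shard-d
```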

Using Hybrid Approaches

Hybrid scaling often requires both vertical and horizontal techniques. For example, upgrade the base server (vertical) while also configuring auto‑scaling groups (horizontal). Kubernetes Vertical Pod Autoscaler (VPA) can recommend optimal resource sizes for pods, complementing HPA.
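The idea of sizing resource requests from observed usage can be illustrated with a percentile rule. This is a deliberately simplified stand‑in, not the VPA's actual recommender algorithm; the 90th percentile and 25% safety margin are assumptions.

```python
import math

def recommend_request(samples_mib, percentile=0.9, safety_margin=0.25):
    """Suggest a memory request (MiB) from observed usage samples.

    Takes the nearest-rank percentile of the samples and adds a safety margin,
    so rare spikes above the percentile do not inflate the request.
    """
    if not samples_mib:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples_mib)
    rank = max(1, math.ceil(percentile * len(ordered)))  # 1-based nearest rank
    return math.ceil(ordered[rank - 1] * (1 + safety_margin))

# Hypothetical memory samples (MiB) from one pod, including a single 900 MiB spike.
usage = [410, 430, 455, 470, 480, 500, 520, 540, 610, 900]
print(recommend_request(usage))  # 763: p90 is 610 MiB, plus 25% headroom
```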

Creative Example

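Imagine you’re deploying a text summarisation API. Initially, you run one server with 32 GB of RAM (vertical scaling). As traffic increases, you set up a Kubernetes cluster with an HPA to manage multiple replica pods. The HPA targets 70% average CPU utilisation, adding pods when usage runs above that target and removing them when it falls below, which keeps the service cost‑efficient. Meanwhile, a VPA monitors resource usage and adjusts pod memory requests to optimise utilisation. Because the VPA documentation cautions against pairing it with an HPA that scales on CPU or memory, in practice you would limit the VPA to memory (leaving the CPU requests the HPA's target depends on untouched) or run it in recommendation‑only mode. A cluster autoscaler adds or removes worker nodes, providing additional capacity when new pods cannot be scheduled on the existing nodes.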
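The HPA's core calculation is documented by Kubernetes as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The sketch below reproduces only that formula plus min/max clamping, using the 70% CPU target from the example; the min/max values are assumptions, and it omits the stabilisation windows and other behaviour of the real controller.

```python
import math

def hpa_desired_replicas(current_replicas: int, current_util: float,
                         target_util: float, tolerance: float = 0.1,
                         min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count per the formula documented for the Kubernetes HPA.

    desired = ceil(current_replicas * current_util / target_util)
    Ratios within `tolerance` of 1.0 leave the count unchanged.
    """
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

print(hpa_desired_replicas(4, current_util=90, target_util=70))  # 6
print(hpa_desired_replicas(4, current_util=74, target_util=70))  # 4 (within tolerance)
print(hpa_desired_replicas(6, current_util=20, target_util=70))  # 2
```

Because the formula scales proportionally rather than one pod at a time, a large spike is absorbed in a single step.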

Clarifai Product Integration

- Compute Orchestration: Clarifai’s platform supports containerised deployments, making it straightforward to integrate with Kubernetes or serverless frameworks. You can define auto‑scaling policies that spin up additional inference workers when metrics exceed thresholds, then spin them down when demand drops.

- Model Inference API: Clarifai’s API endpoints can be placed behind load balancers to distribute inference requests across multiple replicas. Because Clarifai uses stateless RESTful endpoints, horizontal scaling is seamless.

- Local Runners: If you prefer running models on‑premises, Clarifai’s local runners benefit from vertical scaling. You can upgrade your server and run multiple processes to handle more inference requests.

Expert Insights

- DevOps engineers caution that improper scaling policies can lead to thrashing, where instances are created and terminated too frequently; they recommend setting cool‑down periods and stable thresholds.

- Researchers highlight hybrid autoscaling frameworks using machine‑learning models: one study designed a proactive autoscaling mechanism combining Facebook Prophet and LSTM to predict workload and adjust pod counts. This approach outperformed traditional reactive scaling in accuracy and resource efficiency.

- Clarifai’s SRE team emphasises the importance of observability—without metrics and logs, it’s impossible to fine‑tune scaling policies.
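A cool‑down period, as the DevOps engineers above suggest, can be expressed as a guard around any scaling decision. This is a minimal sketch with an injectable clock so it can be tested; the 300‑second cool‑down is an illustrative assumption.

```python
import time

class CooldownScaler:
    """Applies a scaling decision only if the cool-down since the last change has elapsed."""

    def __init__(self, replicas: int, cooldown_seconds: float, clock=time.monotonic):
        self.replicas = replicas
        self.cooldown = cooldown_seconds
        self._clock = clock
        self._last_change = None  # time of the last applied change, if any

    def apply(self, desired: int) -> int:
        now = self._clock()
        in_cooldown = (self._last_change is not None
                       and now - self._last_change < self.cooldown)
        if desired != self.replicas and not in_cooldown:
            self.replicas = desired
            self._last_change = now
        return self.replicas

fake_now = [0.0]  # mutable stand-in for the clock
scaler = CooldownScaler(replicas=3, cooldown_seconds=300, clock=lambda: fake_now[0])
print(scaler.apply(5))  # 5: first change is applied
fake_now[0] = 60.0
print(scaler.apply(2))  # 5: ignored, still inside the 300 s cool-down
fake_now[0] = 400.0
print(scaler.apply(2))  # 2: cool-down elapsed
```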

Performance, Latency & Throughput Considerations

Quick Summary: How do scaling strategies affect performance and latency?

Vertical scaling reduces network overhead and latency but is limited by single‑machine concurrency. Horizontal scaling increases throughput through parallelism, though it introduces inter‑node latency and complexity.

Latency Effects

Vertical scaling keeps data and computation on a single machine, allowing processes to communicate via memory or shared bus. This leads to lower latency for tasks such as real‑time inference or high‑frequency trading. However, even large machines can handle only so many concurrent requests.

Horizontal scaling distributes workloads across multiple nodes, which means requests may traverse a network switch or even cross availability zones. Network hops introduce latency; you must design your system to keep latency within acceptable bounds. Techniques like locality‑aware load balancing, caching and edge computing mitigate latency impact.
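Locality‑aware routing can be as simple as sending each request to the healthy endpoint with the lowest measured round‑trip time. The region names and RTT values below are hypothetical, and a real router would refresh the measurements continuously.

```python
def pick_endpoint(rtt_ms, healthy, max_rtt_ms=None):
    """Choose the healthy endpoint with the lowest measured round-trip time.

    rtt_ms: mapping of endpoint name -> recent RTT in milliseconds.
    healthy: set of endpoints currently passing health checks.
    max_rtt_ms: optional latency budget; endpoints above it are excluded.
    """
    candidates = {
        ep: rtt for ep, rtt in rtt_ms.items()
        if ep in healthy and (max_rtt_ms is None or rtt <= max_rtt_ms)
    }
    if not candidates:
        raise RuntimeError("no healthy endpoint within the latency budget")
    return min(candidates, key=candidates.get)

rtt = {"us-east": 12.0, "eu-west": 85.0, "ap-south": 190.0}  # hypothetical measurements
print(pick_endpoint(rtt, healthy={"us-east", "eu-west", "ap-south"}))  # us-east
print(pick_endpoint(rtt, healthy={"eu-west", "ap-south"}))             # eu-west (nearest is down)
```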

Throughput Effects

Horizontal scaling shines when increasing throughput. By distributing requests across many nodes, you can process thousands of concurrent requests. This is critical for AI inference workloads with unpredictable demand. In contrast, vertical scaling increases throughput only up to the machine’s capacity; once maxed out, adding more threads or processes yields diminishing returns due to CPU contention.
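How many horizontally scaled nodes a given throughput needs can be estimated with Little's law: requests in flight = arrival rate × latency. The per‑node concurrency and the 70% target utilisation below are assumptions for illustration, and the law assumes a steady state.

```python
import math

def nodes_needed(requests_per_second: float, latency_seconds: float,
                 concurrency_per_node: int, target_utilisation: float = 0.7) -> int:
    """Estimate replicas using Little's law (in-flight = arrival rate x latency).

    target_utilisation keeps headroom so nodes are not run at 100% capacity.
    """
    in_flight = requests_per_second * latency_seconds
    usable_per_node = concurrency_per_node * target_utilisation
    return max(1, math.ceil(in_flight / usable_per_node))

# 2,000 req/s at 150 ms each means about 300 requests in flight at any moment.
print(nodes_needed(2000, 0.150, concurrency_per_node=32))  # 14
```

With 32 concurrent slots per node run at 70%, each node usefully handles 22.4 requests in flight, so 300 in‑flight requests round up to 14 nodes.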

CAP Theorem and Consistency Models

Distributed systems face the CAP theorem, which states that a system cannot simultaneously guarantee consistency, availability and partition tolerance: when a network partition occurs, it must choose between consistency and availability. Horizontal scaling often sacrifices strong consistency for eventual consistency. For AI applications that don’t require transactional consistency (e.g., recommendation engines), eventual consistency may be acceptable. Vertical scaling avoids this trade‑off but lacks redundancy.
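One common way replicated stores expose this trade‑off is quorum replication. With N replicas, writes wait for W acknowledgements and reads consult R replicas; under a simple strict‑quorum model, every read overlaps the latest acknowledged write only when R + W > N. Larger quorums buy consistency at the cost of availability, because more replicas must be reachable. The check below is a sketch of that rule, not any particular database's API.

```python
def quorum_is_strong(n: int, r: int, w: int) -> bool:
    """True if every read quorum overlaps every write quorum (R + W > N).

    With N replicas, a write acknowledged by W of them and a read that
    consults R of them share at least one replica only when R + W > N.
    """
    if not (1 <= r <= n and 1 <= w <= n):
        raise ValueError("r and w must be between 1 and n")
    return r + w > n

print(quorum_is_strong(n=3, r=2, w=2))  # True: overlapping quorums
print(quorum_is_strong(n=3, r=1, w=1))  # False: fast, but reads may be stale
```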

Creative Example

Consider a real‑time translation service built on Clarifai. For lower latency in high‑stakes meetings, you might run a powerful GPU instance with lots of memory (vertical scaling). This instance processes translation requests quickly but can only handle a limited number of users. For an online conference with thousands of attendees, you horizontally scale by adding more translation servers; throughput increases massively, but you must manage session consistency and handle network delays.

Clarifai Product Integration

- Clarifai offers globally distributed inference endpoints to reduce latency by bringing compute closer to users. Using Clarifai’s compute orchestration, you can route requests to the nearest node, balancing latency and throughput.

- Clarifai’s API supports batch processing for high‑throughput scenarios, enabling efficient handling of large datasets across horizontally scaled clusters.

Expert Insights

- Performance engineers note that vertical scaling is beneficial for latency‑sensitive workloads, such as fraud detection or autonomous vehicle perception, because data stays local.

- Distributed systems experts stress the need for caching and data locality when scaling horizontally; otherwise, network overhead can negate throughput gains.

- Clarifai’s performance team recommends combining vertical and horizontal scaling: allocate enough resources to individual nodes for baseline performance, then add nodes to handle peaks.

Cost Analysis & Total Cost of Ownership

Quick Summary: What are the cost implications of scaling?

Vertical scaling may have lower upfront cost but escalates rapidly at higher tiers; horizontal scaling distributes costs over many instances but requires orchestration and management overhead.

Cost Models

- Capital Expenditure (Capex): Vertical scaling often involves purchasing or leasing high‑end hardware. The cost per unit of performance increases as you approach top‑tier resources. For on‑premises deployments, capex can be significant because you must invest in servers, GPUs and cooling.

- Operational Expenditure (Opex): Horizontal scaling entails paying for many instances, usually on a pay‑as‑you‑go model. Opex can be easier to budget and track, but it increases with the number of nodes and their usage.

- Hidden Costs: Consider downtime (maintenance for vertical scaling), energy consumption (data centres consume massive power), licensing fees for software and added complexity (DevOps and SRE staffing).

Cost Dynamics

Vertical scaling may appear cheaper initially, especially when starting with small workloads. However, as you upgrade to higher‑capacity hardware, cost rises steeply. For example, upgrading from a 16 GB GPU to a 32 GB GPU may double or triple the price. Horizontal scaling spreads cost across multiple lower‑cost machines, which can be turned off when not needed, making it more cost effective at scale. However, orchestration and network costs add overhead.

Creative Example

Assume you need to handle 100,000 image classifications per minute. You can choose a vertical strategy by purchasing a top‑of‑the‑line server for $50,000 capable of handling the load. Alternatively, horizontal scaling involves leasing twenty smaller servers at $500 per month each. The second option costs $10,000 per month but allows you to shut down servers during off‑peak hours, potentially saving money. Hybrid scaling might involve buying a mid‑tier server and leasing additional capacity when needed.
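The arithmetic behind this comparison can be checked with a break‑even calculation. This sketch ignores power, maintenance and staffing for the purchased server, and the 60% active fraction is a hypothetical value that only reduces the bill if the provider charges by the hour.

```python
def break_even_months(capex: float, servers: int, monthly_price: float,
                      active_fraction: float = 1.0) -> float:
    """Months until cumulative leasing cost equals an up-front purchase.

    active_fraction is the share of the month the leased servers run; it only
    lowers the bill if usage is billed by the hour (an assumption).
    """
    monthly_cost = servers * monthly_price * active_fraction
    return capex / monthly_cost

print(break_even_months(50_000, 20, 500))                 # 5.0
print(round(break_even_months(50_000, 20, 500, 0.6), 1))  # 8.3
```

At full utilisation the leased fleet costs as much as the purchase after five months; running it 60% of the time stretches that to a little over eight months.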

Clarifai Product Integration

- Clarifai offers flexible pricing, allowing you to pay only for the compute you use. Starting with a smaller plan (vertical) and scaling horizontally with additional inference workers can balance cost and performance.

- Clarifai’s compute orchestration helps optimise costs by automatically turning off unused containers and scaling down resources during low demand periods.

Expert Insights

- Financial analysts suggest modelling costs over the expected lifetime of the service, including maintenance, energy and staffing. They warn against focusing only on hardware costs.

- Sustainability experts emphasise that the environmental cost of scaling should be factored into TCO; investing in green data centres and energy‑efficient hardware can reduce long‑term expenses.

- Clarifai’s customer success team encourages using cost monitoring tools to track usage and set budgets, preventing runaway expenses.

Hybrid/Diagonal Scaling Strategies

Quick Summary: What is hybrid or diagonal scaling?

Hybrid scaling combines vertical and horizontal strategies, scaling up until further upgrades are no longer cost‑efficient, then scaling out with additional nodes.

What Is Hybrid Scaling?

Hybrid (diagonal) scaling acknowledges that neither vertical nor horizontal scaling alone can accommodate all workloads efficiently. It involves scaling up a machine to its cost‑effective limit and then scaling out when additional capacity is needed. For example, you might upgrade your GPU server until the cost of further upgrades outweighs benefits, then deploy additional servers to handle more requests.
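The "scale up to the cost‑effective limit, then scale out" rule can be sketched as choosing the instance tier with the lowest cost per unit of throughput and then replicating it. The tier names, prices and throughput figures are hypothetical, and a real plan would also enforce a minimum replica count for redundancy.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    name: str
    hourly_price: float    # USD per hour (hypothetical)
    throughput_rps: float  # sustainable requests per second (hypothetical)

    @property
    def cost_per_rps(self) -> float:
        return self.hourly_price / self.throughput_rps

def plan_diagonal(tiers, demand_rps):
    """Scale up to the most cost-efficient tier, then scale out with copies of it.

    Returns (tier name, replica count, total hourly cost).
    """
    best = min(tiers, key=lambda t: t.cost_per_rps)
    replicas = max(1, math.ceil(demand_rps / best.throughput_rps))
    return best.name, replicas, round(replicas * best.hourly_price, 2)

catalogue = [
    Tier("small", 1.00, 100),   # $0.0100 per req/s
    Tier("large", 3.00, 400),   # $0.0075 per req/s: the sweet spot
    Tier("xlarge", 9.00, 900),  # $0.0100 per req/s: bigger but less efficient
]
print(plan_diagonal(catalogue, demand_rps=1500))  # ('large', 4, 12.0)
```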

Why Choose Hybrid Scaling?

- Cost Optimisation: Hybrid scaling helps balance capex and opex. You use vertical scaling to get the most out of your hardware, then add nodes horizontally when demand exceeds that capacity.

- Performance & Flexibility: You maintain low latency for key components through vertical scaling while scaling out stateless services to handle peaks.

- Risk Mitigation: Hybrid scaling reduces exposure to a single point of failure by adding redundancy, while still benefiting from strong consistency on scaled‑up nodes.

Real‑World Examples

Start‑ups often begin with a vertically scaled monolith; as traffic grows, they break services into microservices and scale out horizontally. Transportation and hospitality platforms used this approach, scaling up early on and gradually adopting microservices and auto‑scaling groups.

Clarifai Product Integration

- Clarifai’s platform allows you to run models on‑premises or in the cloud, making hybrid scaling straightforward. You can vertically scale an on‑premises server for sensitive data and horizontally scale cloud inference for public traffic.

- Clarifai’s compute orchestration can manage both types of scaling; policies can prioritise local resources and burst to the cloud when demand surges.
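The "prioritise local, burst to the cloud" policy can be sketched as a simple router. This is not Clarifai's API: `Pool`, `route` and `release` are hypothetical names, and concurrency limits stand in for whatever capacity signal your orchestrator actually exposes. Sensitive requests are pinned to the local pool, mirroring the on‑premises example above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pool:
    name: str
    max_in_flight: int  # concurrency limit for the pool (assumed)
    in_flight: int = 0


def route(local: Pool, cloud: Pool, sensitive: bool = False) -> Optional[str]:
    """Prefer local capacity; burst to the cloud only when local is saturated.

    Sensitive requests never leave the local pool.
    """
    pools = (local,) if sensitive else (local, cloud)
    for pool in pools:
        if pool.in_flight < pool.max_in_flight:
            pool.in_flight += 1
            return pool.name
    return None  # no capacity: the caller should queue, retry or shed load


def release(pool: Pool) -> None:
    """Call when a request finishes so the pool's slot can be reused."""
    pool.in_flight = max(0, pool.in_flight - 1)
```

Returning `None` rather than silently queueing keeps the back-pressure decision with the caller.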

Expert Insights

- Architects argue that hybrid scaling is the most practical option for many modern workloads, as it provides a balance of performance, cost and reliability.

- Research on predictive autoscaling suggests integrating hybrid models (e.g., Prophet + LSTM) with vertical scaling to further optimise resource allocation.

- Clarifai’s engineers highlight that hybrid scaling requires careful coordination between components; they recommend using orchestration tools to manage failover and ensure consistent routing of requests.
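One common way to keep request routing consistent as nodes come and go is consistent hashing: a given session or model key keeps landing on the same worker (useful for warm caches), and only a fraction of keys move when the cluster is resized. The sketch below is a generic illustration of that technique, not a description of Clarifai's router; the node names and the choice of 100 virtual nodes per worker are arbitrary.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    # First 8 bytes of MD5 as an integer: stable across processes, unlike hash().
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes for routing keys to workers."""

    def __init__(self, nodes, vnodes: int = 100):
        self.vnodes = vnodes
        self._positions = []  # sorted virtual-node positions on the ring
        self._owners = {}     # position -> physical node name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        for i in range(self.vnodes):
            pos = _hash(f"{node}#{i}")
            if pos not in self._owners:
                bisect.insort(self._positions, pos)
                self._owners[pos] = node

    def remove(self, node: str) -> None:
        self._positions = [p for p in self._positions if self._owners[p] != node]
        self._owners = {p: n for p, n in self._owners.items() if n != node}

    def lookup(self, key: str) -> str:
        if not self._positions:
            raise LookupError("no nodes in the ring")
        # First virtual node clockwise from the key's position, wrapping at the end.
        idx = bisect.bisect(self._positions, _hash(key)) % len(self._positions)
        return self._owners[self._positions[idx]]
```

When a node is added, only keys that now fall on the new node's ring positions move; every other key stays put, which is the property that makes scaling out less disruptive.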

Use Cases & Industry Examples

Quick Summary: Where are scaling strategies applied in the real world?

Scaling strategies vary by industry and workload; AI‑powered services in e‑commerce, media, finance, IoT and start‑ups each adopt different scaling approaches based on their specific needs.

E‑Commerce & Retail

Online marketplaces often experience unpredictable spikes during sales events. They horizontally scale stateless web services (product catalogues, recommendation engines) to handle surges. Databases may be scaled vertically to maintain transaction integrity. Clarifai’s visual recognition models can be deployed using hybrid scaling—vertical scaling ensures stable product image classification while horizontal scaling handles increased search queries.

Media & Streaming

Video streaming platforms require massive throughput. They employ horizontal scaling across distributed servers for streaming and content delivery networks (CDNs). Metadata stores and user preference engines may scale vertically to maintain consistency. Clarifai’s video analysis models can run on distributed clusters, analysing frames in parallel while metadata is stored on scaled‑up servers.

Financial Services

Banks and trading platforms prioritise consistency and reliability. They often vertically scale core transaction systems to guarantee ACID properties. However, front‑end risk analytics and fraud detection systems scale horizontally to process large volumes of transactions concurrently. Clarifai’s anomaly detection models are used in horizontal clusters to scan for fraudulent patterns in real time.

IoT & Edge Computing

Edge devices collect data and perform preliminary processing locally; because their hardware is constrained, they are typically scaled vertically. Cloud back‑ends scale horizontally to aggregate and analyse data. Clarifai’s edge runners enable on‑device inference, while data is sent to cloud clusters for further analysis. Hybrid scaling ensures immediate response at the edge while leveraging cloud capacity for deeper insights.

Start‑Ups & SMBs

Small companies typically start with vertical scaling because it’s simple and cost effective. As they grow, they adopt horizontal scaling for better resilience. Clarifai’s flexible pricing and compute orchestration allow start‑ups to begin small and scale easily when needed.

Case Studies

- An e‑commerce site adopted auto‑scaling groups to handle Black Friday traffic, using horizontal scaling for web servers and vertical scaling for the order management database.

- A financial institution improved resilience by migrating its risk analysis engine to a horizontally scaled microservices architecture while retaining a vertically scaled core banking system.

- A research lab used Clarifai’s models for wildlife monitoring, deploying local runners at remote sites (vertical scaling) and sending aggregated data to a central cloud cluster for analysis (horizontal scaling).

Expert Insights

- Industry experts note that selecting the appropriate scaling strategy depends heavily on domain requirements; there is no universal solution.

- Clarifai’s customer success team has witnessed improved user experiences and reduced latency when clients adopt hybrid scaling for AI inference workloads.

Emerging Trends & Future of Scaling

Quick Summary: What trends are shaping the future of scaling?

Kubernetes autoscaling, AI‑driven predictive autoscaling, serverless computing, edge computing and sustainability initiatives are reshaping how organisations scale their systems.

Kubernetes Auto‑Scaling

Kubernetes offers several auto‑scaling mechanisms: the built‑in Horizontal Pod Autoscaler (HPA) adjusts the number of pod replicas based on observed metrics such as CPU or memory utilisation (custom and external metrics are also supported); the Vertical Pod Autoscaler (VPA), installed as an add‑on, adjusts pods’ CPU and memory requests; and the Cluster Autoscaler adds or removes worker nodes when pods cannot be scheduled or nodes sit underused. These tools enable fine‑grained control over resource allocation, improving efficiency and reliability.
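The Kubernetes documentation describes the HPA's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no action taken while the ratio stays within a tolerance (0.1 by default). The function below models only that rule for a single metric; the real controller also applies stabilisation windows, handles unready pods and missing metrics, and takes the largest recommendation across multiple metrics. The `min_replicas` and `max_replicas` defaults are placeholders.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified model of the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the min/max bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the model recommends six replicas.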

AI‑Driven Predictive Autoscaling

Research shows that combining statistical models like Prophet with neural networks like LSTM can predict workload patterns and proactively scale resources. Predictive autoscaling aims to allocate capacity before spikes occur, reducing latency and avoiding overprovisioning. Machine‑learning‑driven autoscaling will likely become more prevalent as AI systems grow in complexity.
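Prophet and LSTM models need dedicated libraries and training data, so the sketch below swaps in a much simpler forecaster, Holt's linear exponential smoothing, purely to illustrate the predictive idea: forecast the next interval's demand, then provision replicas before the load arrives. The smoothing factors, the 20% headroom and the per-replica capacity are all assumptions, not recommended values.

```python
import math


def holt_forecast(series, alpha=0.5, beta=0.3, horizon=1):
    """Holt's linear (double) exponential smoothing forecast `horizon` steps ahead."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for value in series[1:]:
        prev_level = level
        level = alpha * value + (1 - alpha) * (level + trend)     # smoothed demand
        trend = beta * (level - prev_level) + (1 - beta) * trend  # smoothed slope
    return level + horizon * trend


def replicas_for(forecast_rps, capacity_per_replica, headroom=0.2, min_replicas=1):
    """Provision for the forecast plus a safety margin, before the load arrives."""
    return max(min_replicas, math.ceil(forecast_rps * (1 + headroom) / capacity_per_replica))
```

In practice you would forecast at the scaling interval (say, every few minutes) and let a reactive autoscaler such as the HPA correct any forecast error.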

Serverless & Function‑as‑a‑Service (FaaS)

Serverless platforms automatically scale functions based on demand, freeing developers from infrastructure management. They scale horizontally behind the scenes, enabling cost‑efficient handling of intermittent workloads. AWS introduced predictive scaling for container services, harnessing machine learning to anticipate demand and adjust scaling policies accordingly (as reported in industry news). Clarifai’s APIs can be integrated into serverless workflows to create event‑driven AI applications.

Edge Computing & Cloud‑Edge Hybrid

Edge computing brings computation closer to the user, reducing latency and bandwidth consumption. Vertical scaling on edge devices (e.g., upgrading memory or storage) can improve real‑time inference, while horizontal scaling in the cloud aggregates and analyses data streams. Clarifai’s edge solutions allow models to run on local hardware; combined with cloud resources, this hybrid approach ensures both fast response and deep analysis.

Sustainability and Green Computing

Hyperscale data centres consume enormous amounts of energy, and both vertical and horizontal scaling add to that demand by increasing resource utilisation. Future scaling strategies must integrate energy‑efficient hardware, carbon‑aware scheduling and renewable energy sources to reduce environmental impact. AI‑powered resource management can optimise workloads to run on servers with lower carbon footprints.
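Carbon-aware scheduling can be as simple as shifting deferrable batch work (re-indexing, offline embedding jobs, retraining) into the hours with the lowest forecast grid carbon intensity. This minimal sketch assumes you already have an hourly intensity forecast from your cloud provider or grid operator; the forecast values in the example are invented.

```python
def greenest_window(intensity, duration_hours, deadline_hour):
    """Return the start hour with the lowest average forecast carbon intensity.

    intensity[h] is the forecast for hour h (e.g. gCO2/kWh); the job runs for
    duration_hours consecutive hours and must finish by deadline_hour.
    """
    if duration_hours < 1 or deadline_hour > len(intensity) or duration_hours > deadline_hour:
        raise ValueError("no feasible window before the deadline")
    best_start, best_avg = None, float("inf")
    for start in range(deadline_hour - duration_hours + 1):
        avg = sum(intensity[start:start + duration_hours]) / duration_hours
        if avg < best_avg:  # strict '<' keeps the earliest start on ties
            best_start, best_avg = start, avg
    return best_start


# Invented hourly forecast, for illustration only.
FORECAST = [420, 400, 380, 250, 180, 190, 300, 410]
```

With that forecast, a two-hour job due by hour 8 is scheduled to start at hour 4, the lowest-carbon pair of hours.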

Clarifai Product Integration

- Clarifai is exploring AI‑driven predictive autoscaling, leveraging workload analytics to anticipate demand and adjust inference capacity in real time.

- Clarifai’s support for Kubernetes makes it easy to adopt HPA and VPA; models can automatically scale based on CPU/GPU usage.

- Clarifai is committed to sustainability, partnering with green cloud providers and offering efficient inference options to reduce power usage.

Expert Insights

- Industry analysts believe that intelligent autoscaling will become the norm, where machine learning models predict demand, allocate resources and consider carbon footprint simultaneously.

- Edge computing advocates argue that local processing will increase, necessitating vertical scaling on devices and horizontal scaling in the cloud.

- Clarifai’s research team is working on dynamic model compression and architecture search, enabling models to scale down gracefully for edge deployment while maintaining accuracy.


Step‑by‑Step Guide for Selecting and Implementing a Scaling Strategy

Quick Summary: How do you pick and implement a scaling strategy?

Follow a structured process: assess workloads, choose the right scaling pattern for each component, implement scaling mechanisms, monitor performance and adjust policies.

Step 1: Assess Workloads & Bottlenecks

- Profile your application: Use monitoring tools to understand CPU, memory, I/O and network usage. Identify hot spots and bottlenecks.

- Classify components: Determine which services are stateful or stateless, and whether they are CPU‑bound, memory‑bound or I/O‑bound.

Step 2: Choose Scaling Patterns for Each Component

- Stateful services (e.g., databases, model registries) may benefit from vertical scaling or hybrid scaling.

- Stateless services (e.g., inference APIs, feature extraction) are ideal for horizontal scaling.

- Consider diagonal scaling—scale vertically until cost‑efficient, then scale horizontally.
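The rules in Step 2 can be written down as a small decision function, which is handy when you have many components to review. The `Component` fields and the 80% "busy" threshold are assumptions for illustration; the inputs would come from the profiling in Step 1.

```python
from dataclasses import dataclass
from enum import Enum


class Pattern(Enum):
    VERTICAL = "scale up"
    HORIZONTAL = "scale out"
    DIAGONAL = "scale up, then out"


@dataclass
class Component:
    name: str
    stateful: bool           # from the Step 1 classification
    peak_utilisation: float  # peak use of its bottleneck resource, 0..1
    at_size_ceiling: bool    # already on the largest cost-efficient machine?


def choose_pattern(c: Component, busy: float = 0.8) -> Pattern:
    if not c.stateful:
        return Pattern.HORIZONTAL  # stateless: add replicas behind a load balancer
    if c.at_size_ceiling and c.peak_utilisation >= busy:
        return Pattern.DIAGONAL    # cannot grow further cost-efficiently: add nodes
    return Pattern.VERTICAL        # stateful with headroom left: resize the machine
```

Keeping the rules in code (or in a reviewed config) makes scaling decisions repeatable and easy to revisit as workloads change.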

Step 3: Implement Scaling Mechanisms

- Vertical Scaling: Resize servers; upgrade hardware; adjust memory and CPU allocations.

- Horizontal Scaling: Deploy load balancers, auto‑scaling groups, Kubernetes HPA/VPA; use service discovery.

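- Hybrid Scaling: Combine both; use VPA to right‑size pods and configure cluster autoscalers to add or remove nodes. Avoid letting HPA and VPA act on the same CPU or memory metric for one workload, as the two controllers will work against each other.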

Step 4: Test & Validate

- Perform load testing to simulate traffic spikes and measure latency, throughput and cost. Adjust scaling thresholds and rules.

- Conduct chaos testing to ensure the system tolerates node failures and network partitions.
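A load test does not need heavy tooling to start with. The sketch below uses Python's asyncio to fire requests with bounded concurrency and report p50/p95 latency and throughput. `fake_inference` is a hypothetical stand-in that just sleeps; swap in a real call to your endpoint. Chaos testing (killing nodes, partitioning the network) is outside its scope.

```python
import asyncio
import random
import statistics
import time


async def fake_inference(rng: random.Random) -> None:
    # Hypothetical stand-in for a real request; replace with your HTTP client call.
    await asyncio.sleep(rng.uniform(0.005, 0.02))


async def load_test(concurrency: int, total_requests: int, seed: int = 0) -> dict:
    rng = random.Random(seed)
    sem = asyncio.Semaphore(concurrency)  # caps the number of requests in flight
    latencies = []

    async def one_request() -> None:
        async with sem:
            start = time.perf_counter()
            await fake_inference(rng)
            latencies.append(time.perf_counter() - start)

    wall_start = time.perf_counter()
    await asyncio.gather(*(one_request() for _ in range(total_requests)))
    elapsed = time.perf_counter() - wall_start
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {"p50_s": cuts[49], "p95_s": cuts[94], "throughput_rps": total_requests / elapsed}


if __name__ == "__main__":
    print(asyncio.run(load_test(concurrency=20, total_requests=200)))
```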

Step 5: Monitor & Optimise

- Implement observability with metrics, logs and traces to monitor resource utilisation and costs.

- Refine scaling policies based on real‑world usage; adjust thresholds, cool‑down periods and predictive models.

- Review costs and optimise by turning off unused instances or resizing underutilised servers.
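The review step can be partly automated. This sketch assumes you can export per-instance peak CPU and request counts for a review window; the thresholds are illustrative. It sizes against peak rather than average utilisation so that downsizing does not create a new bottleneck during spikes.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    instance_id: str
    peak_cpu: float  # peak CPU utilisation over the review window, 0..1
    requests: int    # requests served over the same window


def review(usage, idle_peak=0.05, underused_peak=0.4):
    """Flag idle instances to switch off and underused ones to downsize."""
    actions = {}
    for u in usage:
        if u.requests == 0 and u.peak_cpu < idle_peak:
            actions[u.instance_id] = "turn off"
        elif u.peak_cpu < underused_peak:
            actions[u.instance_id] = "downsize"
        else:
            actions[u.instance_id] = "keep"
    return actions
```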

Step 6: Plan for Growth & Sustainability

- Evaluate future workloads and plan capacity accordingly. Consider emerging trends like predictive autoscaling, serverless and edge computing.

- Incorporate sustainability goals, selecting green data centres and energy‑efficient hardware.

Clarifai Product Integration

- Clarifai offers detailed usage dashboards to monitor API calls, latency and throughput; these metrics feed into scaling decisions.

- Clarifai’s orchestration tools allow you to configure auto‑scaling policies directly from the dashboard or via API; you can define thresholds, replica count and concurrency limits.

- Clarifai’s support team can assist in designing and implementing custom scaling strategies tailored to your models.

Expert Insights

- DevOps specialists emphasise automation: manual scaling doesn’t scale with the business; infrastructure as code and automated policies are essential.

- Researchers stress the importance of continuous testing and monitoring; scaling strategies should evolve as workloads change.

- Clarifai engineers remind users to consider data governance and compliance when scaling across regions and clouds.

Common Pitfalls and How to Avoid Them

Quick Summary: What common mistakes do teams make when scaling?

Common pitfalls include over‑provisioning or under‑provisioning resources, neglecting failure modes, ignoring data consistency, missing observability and disregarding energy consumption.

Over‑Scaling and Under‑Scaling

Over‑scaling leads to wasteful spending, especially if auto‑scaling policies are too aggressive. Under‑scaling causes performance degradation and potential outages. Avoid both by setting realistic thresholds, cool‑down periods and predictive rules.
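Thresholds and cool-down periods are easiest to reason about in code. The sketch below is a generic reactive autoscaler, not any particular product's algorithm; every threshold and the 300-second cool-down are assumptions. The gap between the scale-out and scale-in thresholds acts as a dead band, and the cool-down stops the scaler from reacting to every short spike.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ThresholdScaler:
    scale_out_at: float = 0.75  # utilisation above which capacity is added (assumed)
    scale_in_at: float = 0.30   # utilisation below which capacity is removed (assumed)
    cooldown_s: float = 300.0   # minimum seconds between scaling actions (assumed)
    min_replicas: int = 1
    max_replicas: int = 20
    _last_action: Optional[float] = field(default=None, init=False, repr=False)

    def decide(self, replicas: int, utilisation: float, now: float) -> int:
        """Return the new replica count given current utilisation (0..1)."""
        if self._last_action is not None and now - self._last_action < self.cooldown_s:
            return replicas  # still cooling down: ignore brief spikes and dips
        if utilisation > self.scale_out_at:
            # Scale out quickly (+50%, at least one replica) to protect latency.
            target = min(self.max_replicas, replicas + max(1, math.ceil(replicas * 0.5)))
        elif utilisation < self.scale_in_at:
            # Scale in slowly (one replica at a time) to avoid flapping.
            target = max(self.min_replicas, replicas - 1)
        else:
            target = replicas  # inside the dead band between the two thresholds
        if target != replicas:
            self._last_action = now
        return target
```

Scaling out aggressively and scaling in one replica at a time is a common asymmetric choice: under-provisioning hurts users immediately, while over-provisioning only costs money for a few extra minutes.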

Ignoring Single Points of Failure

Teams sometimes scale up a single server without redundancy. If that server fails, the entire service goes down. Always design for failover and redundancy.
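Redundancy only helps if clients can actually reach a surviving replica. In production this is usually handled by a load balancer with health checks; the sketch below shows the same idea on the client side. `send` and the replica names are hypothetical, and retrying on another replica is only safe for idempotent requests such as stateless inference calls.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(replicas: Sequence[str], send: Callable[[str], T]) -> T:
    """Try each redundant replica in order, moving on when one is unreachable."""
    failures = {}
    for replica in replicas:
        try:
            return send(replica)
        except ConnectionError as exc:  # only connectivity errors trigger failover
            failures[replica] = exc
    raise RuntimeError(f"all replicas failed: {', '.join(failures)}")
```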

Complexity Debt in Horizontal Scaling

Deploying multiple instances without proper automation leads to configuration drift, where different nodes run slightly different software versions or configurations. Use orchestration and infrastructure as code to maintain consistency.
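Drift is easy to detect if every node's effective configuration can be compared with the configuration declared in your infrastructure-as-code source. This sketch assumes you can collect each node's config as a JSON-serialisable dict (how you gather it is out of scope) and compares canonical hashes so that key order does not matter.

```python
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Hash a canonical JSON encoding so key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(desired: dict, observed: dict) -> list:
    """Return the nodes whose running config differs from the declared one."""
    target = fingerprint(desired)
    return sorted(node for node, cfg in observed.items() if fingerprint(cfg) != target)
```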

Data Consistency Challenges

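Horizontally scaled databases may suffer from replication lag, so replicas are only eventually consistent with the primary. Design your application to tolerate eventual consistency, or keep strongly consistent stateful components on vertically scaled nodes as part of a hybrid strategy.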
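A common way to tolerate replica lag without giving up on read replicas is read-your-writes routing: a session that has just written is sent to the primary until the replicas have caught up. The sketch assumes you can measure current replica lag in seconds; `ReadRouter` and its methods are hypothetical names, and the two return values stand in for real connection pools.

```python
from dataclasses import dataclass, field


@dataclass
class ReadRouter:
    """Route reads to a replica unless that could hide the caller's own write."""
    _last_write: dict = field(default_factory=dict)  # session id -> time of last write

    def record_write(self, session_id: str, now: float) -> None:
        self._last_write[session_id] = now

    def choose(self, session_id: str, replica_lag_s: float, now: float) -> str:
        wrote_at = self._last_write.get(session_id)
        # If the session wrote within the replica's current lag, the replica may not
        # have applied that write yet, so read from the primary instead.
        if wrote_at is not None and now - wrote_at <= replica_lag_s:
            return "primary"
        return "replica"
```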

Security & Compliance Risks

Scaling introduces new attack surfaces, such as poorly secured load balancers or misconfigured network policies. Apply zero‑trust principles and continuous compliance checks.

Neglecting Sustainability

Failing to consider the environmental impact of scaling increases energy consumption and carbon emissions. Choose energy‑efficient hardware and schedule non‑urgent tasks during low‑carbon periods.

Clarifai Product Integration

- Clarifai’s platform provides best practices for securing AI endpoints, including API key management and encryption.

- Clarifai’s monitoring tools help detect over‑scaling or under‑scaling, enabling you to adjust policies before costs spiral.

Expert Insights

- Incident response teams emphasise the importance of chaos engineering—deliberately injecting failures to discover weaknesses in scaling architecture.

- Security experts recommend continuous vulnerability scanning across all scaled resources.

- Clarifai encourages a proactive culture of observability and sustainability, embedding monitoring and green initiatives into scaling plans.

Conclusion & Recommendations

Quick Summary: Which scaling strategy should you choose?

There is no one‑size‑fits‑all answer—evaluate your application’s requirements and design accordingly. Start small with vertical scaling, plan for horizontal scaling, embrace hybrid strategies and adopt predictive autoscaling. Sustainability should be a core consideration.

Key Takeaways

- Vertical scaling is simple and effective for early‑stage or monolithic workloads, but it has hardware limits and introduces single points of failure.

- Horizontal scaling delivers elasticity and resilience, though it requires distributed systems expertise and careful orchestration.

- Hybrid (diagonal) scaling offers a balanced approach, leveraging the benefits of both strategies.

- Emerging trends like predictive autoscaling, serverless computing and edge computing will shape the future of scalability, making automation and AI integral to infrastructure management.

- Clarifai provides the tools and expertise to help you scale your AI workloads efficiently, whether on‑premise, in the cloud or across both.

Final Recommendations

- Start with vertical scaling for prototypes or small workloads, using Clarifai’s local runners or larger instance plans.

- Implement horizontal scaling when traffic increases, deploying multiple inference workers and load balancers; use Kubernetes HPA and Clarifai’s compute orchestration.

- Adopt hybrid scaling to balance cost, performance and reliability; use VPA to optimise pod sizes and cluster autoscaling to manage nodes.

- Monitor and optimise constantly, using Clarifai’s dashboards and third‑party observability tools. Adjust scaling policies as your workloads evolve.

- Plan for sustainability, selecting green cloud options and energy‑efficient hardware; incorporate carbon‑aware scheduling.

If you are unsure which approach to choose, reach out to Clarifai’s support team. We help you analyse workloads, design scaling architectures and implement auto‑scaling policies. With the right strategy, your AI applications will remain responsive, cost efficient and environmentally responsible.

Frequently Asked Questions (FAQ)

What is the main difference between vertical and horizontal scaling?

Vertical scaling adds resources (CPU, memory, storage) to a single machine, while horizontal scaling adds more machines to distribute workload, providing greater redundancy and scalability.

When should I choose vertical scaling?

Choose vertical scaling for small workloads, prototypes or legacy applications that require strong consistency and are easier to manage on a single server. It’s also suitable for stateful services and on‑premise deployments with compliance constraints.

When should I choose horizontal scaling?

Horizontal scaling is ideal for applications with unpredictable or rapidly growing demand. It offers elasticity and fault tolerance, making it well suited to stateless services, microservices architectures and AI inference workloads.

What is diagonal scaling?

Diagonal (hybrid) scaling combines vertical and horizontal strategies. You scale up a machine until it reaches a cost‑efficient threshold and then scale out by adding nodes. This approach balances performance, cost and reliability.

How does Kubernetes handle scaling?

Kubernetes provides the Horizontal Pod Autoscaler (HPA) for scaling the number of pods, the Vertical Pod Autoscaler (VPA) for adjusting resource requests, and a cluster autoscaler for adding or removing nodes. Together, these tools enable dynamic, fine‑grained scaling of containerised workloads.

What is predictive autoscaling?

Predictive autoscaling uses machine‑learning models to forecast workload demand and allocate resources proactively. This can reduce latency during spikes, limit over‑provisioning and improve cost efficiency.

How can Clarifai help with scaling?

Clarifai’s compute orchestration and model inference APIs support both vertical and horizontal scaling. Users can choose larger inference instances, run multiple inference workers across regions, or combine local runners with cloud services. Clarifai also offers consulting and support for designing scalable, sustainable AI deployments.

Why should I care about sustainability in scaling?

Hyperscale data centres consume substantial energy, and poor scaling strategies can exacerbate this. Choosing energy‑efficient hardware and leveraging predictive autoscaling reduces energy usage and carbon emissions, aligning with corporate sustainability goals.

What’s the best way to start implementing scaling?

Begin by monitoring your existing workloads to identify bottlenecks. Create a decision matrix based on workload characteristics, growth projections and cost constraints. Start with vertical scaling for immediate needs, then adopt horizontal or hybrid scaling as traffic increases. Use automation and observability tools, and consult experts like Clarifai’s engineering team for guidance.
