
DeepSeek models, including DeepSeek‑R1 and DeepSeek‑V3.1, are accessible directly through the Clarifai platform. You can get started without needing a separate DeepSeek API key or endpoint.

The OpenAI-compatible endpoint is:

https://api.clarifai.com/v2/ext/openai/v1

Authenticate with your Personal Access Token (PAT) and specify the model URL, such as DeepSeek‑R1 or DeepSeek‑V3.1.

Clarifai handles all hosting, scaling, and orchestration, letting you focus purely on building your application and using the model’s reasoning and chat capabilities.

DeepSeek encompasses a range of large language models (LLMs) designed with diverse architectural strategies to optimize performance across various tasks. While some models employ a Mixture-of-Experts (MoE) approach, others utilize dense architectures to balance efficiency and capability.

DeepSeek-R1 is a dense model that integrates reinforcement learning (RL) with knowledge distillation to enhance reasoning capabilities. It employs a standard transformer architecture augmented with Multi-Head Latent Attention (MLA) to improve context handling and reduce memory overhead. This design enables the model to achieve high performance in tasks requiring deep reasoning, such as mathematics and logic.

DeepSeek-V3 adopts a hybrid approach, combining both dense and MoE components. The dense part handles general conversational tasks, while the MoE component activates specialized experts for complex reasoning tasks. This architecture allows the model to efficiently switch between general and specialized modes, optimizing performance across a broad spectrum of applications.
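To make the idea of activating only a few experts per token concrete, here is a toy sketch of top-k expert routing in plain Python. It is illustrative only: the gate scores, the expert count, and the `route_token` helper are hypothetical and are not taken from DeepSeek's actual router.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalise their weights.

    Hypothetical helper: returns a list of (expert_index, weight) pairs.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


# Made-up gate scores for one token across 8 experts.
scores = [0.1, 2.3, -1.0, 0.7, 1.9, -0.4, 0.0, 0.5]
chosen = route_token(scores, k=2)
print(chosen)  # only 2 of the 8 experts would run for this token
```

The point of the sketch is the cost model: the total parameter count grows with the number of experts, while per-token compute grows only with `k`.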

To provide more accessible options, DeepSeek offers distilled versions of its models, such as DeepSeek-R1-Distill-Qwen-7B. These models are smaller in size but retain much of the reasoning and coding capabilities of their larger counterparts. For instance, DeepSeek-R1-Distill-Qwen-7B is based on the Qwen 2.5 architecture and has been fine-tuned with reasoning data generated by DeepSeek-R1, achieving strong performance in mathematical reasoning and general problem-solving tasks.

DeepSeek models were designed to balance reasoning power with efficiency:

| Architecture type | Characteristics | Pros | Cons |
| --- | --- | --- | --- |
| Dense (e.g., R1) | All parameters participate in every inference step. RL‑fine‑tuned for reasoning | Excellent logical consistency; good for mathematics, coding, algorithm design | Higher latency and cost; cannot scale beyond ~70 B parameters without huge infrastructure |
| Hybrid MoE (e.g., V3) | Combines dense core with MoE specialists; only a fraction of experts activate per token | Scales to hundreds of billions of parameters with manageable inference cost; long context windows | Slightly higher engineering complexity; slower to converge during training |
| Distilled | Smaller models distilled from R1 or V3; typically 7–13 B parameters | Low latency and cost; easy to deploy on edge devices | Slightly reduced reasoning depth; context window limited compared with V3 |

DeepSeek models can be accessed on Clarifai in three ways: through the Clarifai Playground UI, via the OpenAI-compatible API, or using the Clarifai SDK. Each method provides a different level of control and flexibility, allowing you to experiment, integrate, and deploy models according to your development workflow.

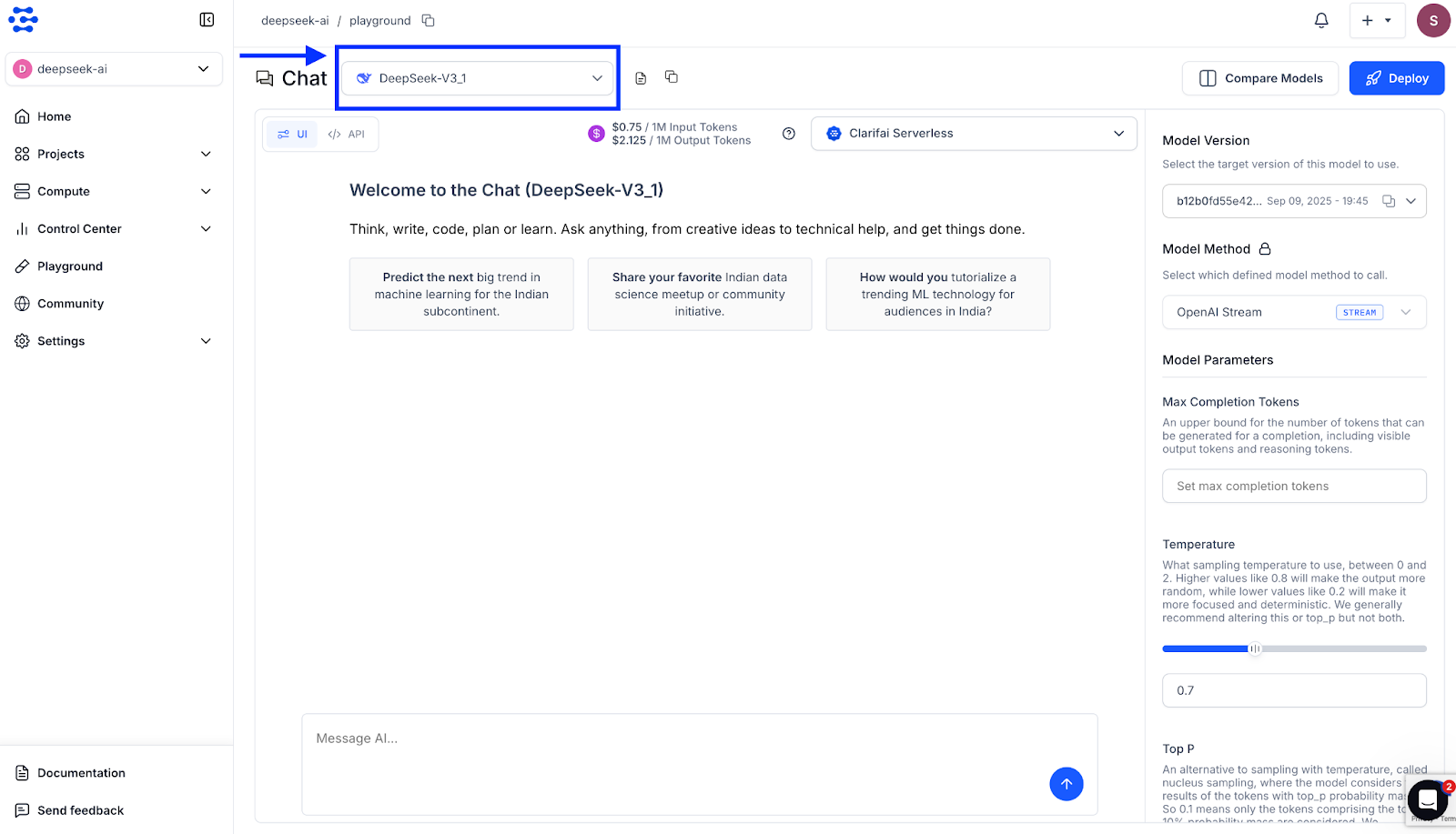

The Playground provides a fast, interactive environment to test prompts and explore model behavior.

You can select any DeepSeek model, including DeepSeek‑R1, DeepSeek‑V3.1, or the distilled versions available in the Clarifai Community. You can input prompts, adjust parameters such as temperature and streaming, and immediately see the model responses. The Playground also lets you compare multiple models side by side to evaluate their responses.

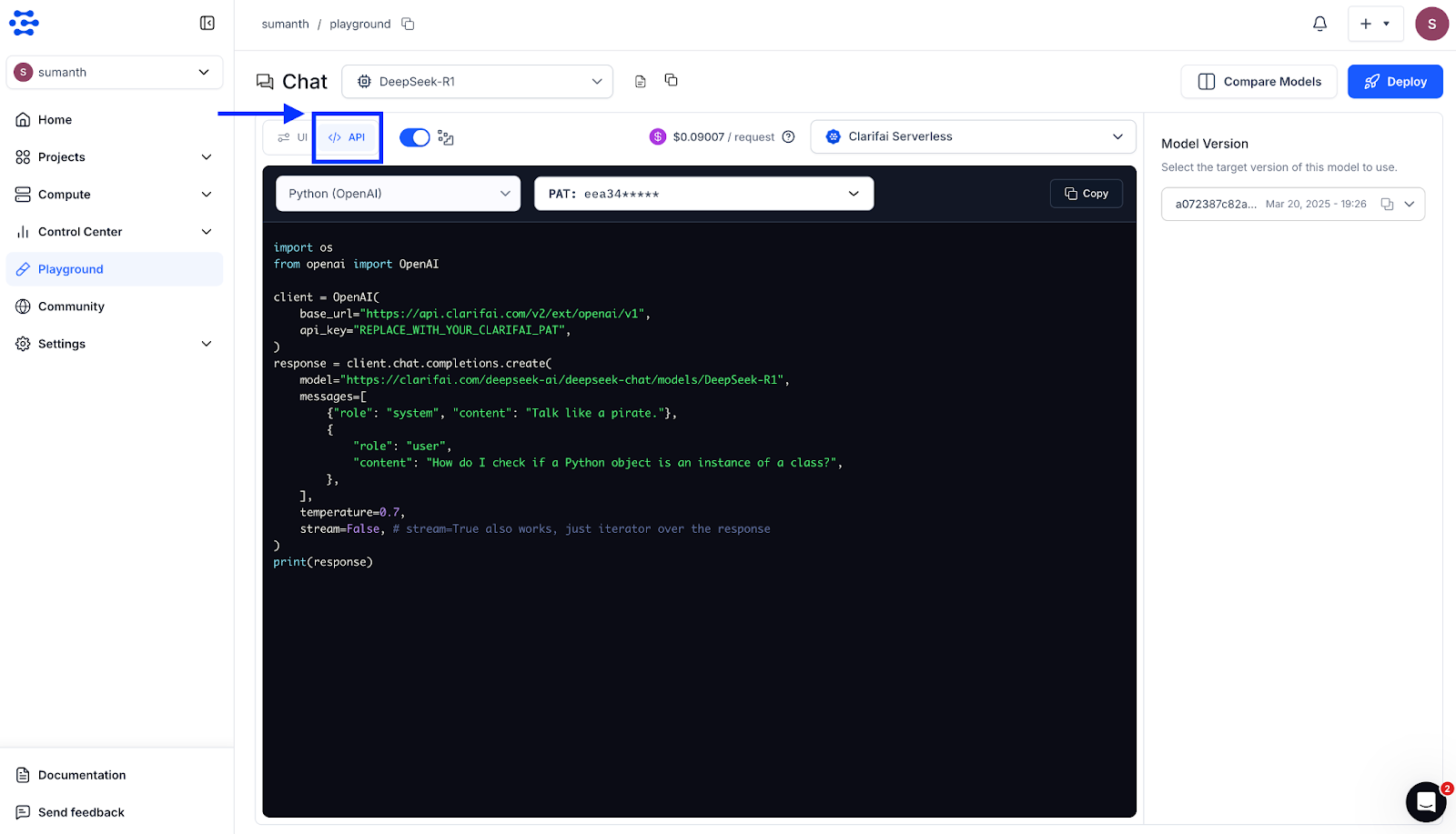

Within the Playground itself, you can open the API section to access code snippets for multiple languages and clients, including cURL, Java, JavaScript, Node.js, the OpenAI-compatible API, the Clarifai Python SDK, PHP, and more.

You can select the option you need, copy the snippet, and integrate it directly into your applications. For more details on testing models and using the Playground, see the Clarifai Playground Quickstart.

Try it: The Clarifai Playground is the fastest way to test prompts. Navigate to the model page and click “Test in Playground”.

Clarifai provides a drop-in replacement for the OpenAI API, allowing you to use the same Python or TypeScript client libraries you are familiar with while pointing to Clarifai’s OpenAI-compatible endpoint. Once you have your PAT set as an environment variable, you can call any Clarifai-hosted DeepSeek model by specifying the model URL.

Python Example

```python
import os

from openai import OpenAI

client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"]
)

response = client.chat.completions.create(
    model="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Tell me a three sentence bedtime story about a unicorn."}
    ],
    max_completion_tokens=100,
    temperature=0.7
)

print(response.choices[0].message.content)
```

TypeScript Example

```typescript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.clarifai.com/v2/ext/openai/v1",
  apiKey: process.env.CLARIFAI_PAT,
});

const response = await client.chat.completions.create({
  model: "https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1",
  messages: [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: "Who are you?" }
  ],
});

console.log(response.choices?.[0]?.message.content);
```

Clarifai’s Python SDK simplifies authentication and model calls, allowing you to interact with DeepSeek models using concise Python code. After setting your PAT, you can initialize a model client and make predictions with just a few lines.

```python
import os

from clarifai.client import Model

model = Model(
    url="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
    pat=os.environ["CLARIFAI_PAT"]
)

response = model.predict(
    prompt="What is the future of AI?",
    max_tokens=512,
    temperature=0.7,
    top_p=0.95,
    thinking="False"
)

print(response)
```

For modern web applications, the Vercel AI SDK provides a TypeScript toolkit to interact with Clarifai models. It supports the OpenAI-compatible provider, enabling seamless integration.

```typescript
import { createOpenAICompatible } from "@ai-sdk/openai-compatible";
import { generateText } from "ai";

const clarifai = createOpenAICompatible({
  baseURL: "https://api.clarifai.com/v2/ext/openai/v1",
  apiKey: process.env.CLARIFAI_PAT,
});

const model = clarifai("https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1");

const { text } = await generateText({
  model,
  messages: [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: "What is photosynthesis?" }
  ],
});

console.log(text);
```

This SDK also supports streaming responses, tool calling, and other advanced features.

In addition to the above, DeepSeek models can also be accessed via cURL, PHP, Java, and other languages. For a complete list of integration methods, supported providers, and advanced usage examples, refer to the documentation.

DeepSeek models on Clarifai support advanced inference features that make them suitable for production-grade workloads. You can enable streaming for low-latency responses, and tool calling to let the model interact dynamically with external systems or APIs. These capabilities work seamlessly through Clarifai’s OpenAI-compatible API.

Streaming returns model output token by token, improving responsiveness in real-time applications like chat interfaces or dashboards. The example below shows how to stream responses from a DeepSeek model hosted on Clarifai.

```python
import os

from openai import OpenAI

# Initialize the OpenAI-compatible client for Clarifai
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"]
)

# Create a chat completion request with streaming enabled
response = client.chat.completions.create(
    model="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain how transformers work in simple terms."}
    ],
    max_completion_tokens=150,
    temperature=0.7,
    stream=True
)

print("Assistant's Response:")

for chunk in response:
    if chunk.choices and chunk.choices[0].delta and chunk.choices[0].delta.content is not None:
        print(chunk.choices[0].delta.content, end="")

print("\n")
```

Streaming helps you render partial responses as they arrive instead of waiting for the entire output, reducing perceived latency.
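If you also need the complete text once the stream ends (for logging or storage, say), the deltas have to be joined by hand. The sketch below shows one way to do that. The `collect_stream` and `fake_chunk` helpers are hypothetical, and the stand-in chunks only mimic the `choices[0].delta.content` layout used in the streaming example so the snippet runs without a network call.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Concatenate the text deltas from an iterable of streamed chunks."""
    parts = []
    for chunk in chunks:
        # Some chunks may carry no choices at all; skip them.
        if not chunk.choices:
            continue
        delta = chunk.choices[0].delta
        if delta is not None and delta.content is not None:
            parts.append(delta.content)
    return "".join(parts)


def fake_chunk(text):
    """Build a stand-in object with the same attribute layout as a streamed chunk."""
    return SimpleNamespace(
        choices=[SimpleNamespace(delta=SimpleNamespace(content=text))]
    )


# Stand-in stream; in real use, pass the response of a stream=True request.
demo_stream = [
    fake_chunk("Trans"),
    fake_chunk("formers"),
    fake_chunk(None),
    SimpleNamespace(choices=[]),
]
full_text = collect_stream(demo_stream)
print(full_text)
```

In a UI you would typically render each delta as it arrives and keep the joined string for the conversation history.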

Streaming vs. non‑streaming:

In traditional requests, the server returns the entire completion once it is finished. For interactive applications (e.g., chatbots or dashboards), waiting for long responses can harm perceived latency. Streaming returns tokens as soon as they are generated, letting you start rendering partial outputs. Table 3 highlights differences:

| Response mode | Pros | Cons | Use cases |
| --- | --- | --- | --- |
| Non‑streaming | Simple to implement; no need to aggregate partial responses | User waits until entire response finishes; less responsive | Batch processing, offline summarisation |
| Streaming | Low perceived latency; allows progressive rendering; can interrupt early | Slightly more code complexity; may require asynchronous handling | Chatbots, dashboards, generative UIs |

Tool calling enables a model to invoke external functions during inference, which is especially useful for building AI agents that can interact with APIs, fetch live data, or perform dynamic reasoning. DeepSeek-V3.1 supports tool calling, allowing your agents to make context-aware decisions. Below is an example of defining and using a tool with a DeepSeek model.

```python
import os

from openai import OpenAI

# Initialize the OpenAI-compatible client for Clarifai
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"]
)

# Define a simple function the model can call
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Returns the current temperature for a given location.",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "City and country, for example 'New York, USA'"
                    }
                },
                "required": ["location"],
                "additionalProperties": False
            }
        }
    }
]

# Create a chat completion request with tool-calling enabled
response = client.chat.completions.create(
    model="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
    messages=[
        {"role": "user", "content": "What is the weather like in New York today?"}
    ],
    tools=tools,
    tool_choice='auto'
)

# Print the tool call proposed by the model
tool_calls = response.choices[0].message.tool_calls
print("Tool calls:", tool_calls)
```
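The example above stops once the model has proposed a tool call. A rough sketch of the next step, running the call locally and packaging the result as a `tool` message, might look like the following. The local `get_weather` implementation, the `TOOL_REGISTRY` mapping, and the `run_tool_calls` helper are all hypothetical, and the stand-in call object only mimics the `id`, `function.name`, and `function.arguments` fields so the snippet runs offline.

```python
import json
from types import SimpleNamespace


def get_weather(location):
    """Hypothetical local implementation; a real one would call a weather API."""
    return {"location": location, "temperature_c": 21}


# Map tool names from the schema to local Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_calls(tool_calls):
    """Execute each proposed tool call and build the follow-up 'tool' messages."""
    messages = []
    for call in tool_calls or []:
        func = TOOL_REGISTRY.get(call.function.name)
        if func is None:
            result = {"error": f"unknown tool: {call.function.name}"}
        else:
            # Arguments arrive as a JSON-encoded string.
            args = json.loads(call.function.arguments)
            result = func(**args)
        messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "content": json.dumps(result),
        })
    return messages


# Stand-in tool call shaped like the objects the OpenAI client returns.
demo_call = SimpleNamespace(
    id="call_1",
    function=SimpleNamespace(
        name="get_weather",
        arguments='{"location": "New York, USA"}',
    ),
)
tool_messages = run_tool_calls([demo_call])
print(tool_messages)
```

In a full round trip you would append the assistant message and these tool messages to the conversation and send a second chat completion request so the model can produce its final answer.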

For more advanced inference patterns, including multi-turn reasoning, structured output generation, and extended examples of streaming and tool calling, refer to the documentation.

Choosing the right access method. While the Playground is ideal for experimentation, the API offers fine‑grained control and the SDK abstracts away low‑level details. Table 2 summarizes considerations:

| Access method | Best for | Advantages | Limitations |
| --- | --- | --- | --- |
| Playground | Early exploration and prompt engineering | Interactive UI; easy parameter tuning; model comparison; shows code snippets | Not suitable for automated workflows; manual input |
| OpenAI‑compatible API | Production and server‑side integration | Drop‑in replacement for the OpenAI API; supports Python, TypeScript and cURL; Clarifai handles scaling | Requires writing boilerplate code and handling rate limits |
| Clarifai Python SDK | Python developers wanting convenience | Simplifies authentication and predictions; supports batching and streaming; handles error management | Less control over HTTP details; only Python |
| Vercel AI SDK | Modern web and edge applications | TypeScript wrapper; integrates with ai package; supports streaming & tool calling | Requires Node.js environment; adds dependency |

Clarifai hosts multiple DeepSeek variants. Choosing the right one depends on your task:

| Model | Architecture & parameters | Context window | Strengths | Recommended tasks | Considerations |
| --- | --- | --- | --- | --- | --- |
| DeepSeek‑R1 | Dense transformer, RL‑fine‑tuned; ~32 B parameters | 32 k tokens | Excellent reasoning and coding ability | Mathematical reasoning, algorithm design, debugging, complex problem solving | Higher latency and cost; smaller context window |
| DeepSeek‑V3.1 | Hybrid MoE + dense; total 671 B (37 B active) | 128 k tokens | Balances chat and reasoning; long context; strong performance on MMLU, DROP, code tasks | Summarisation, long‑document Q&A, general chat, reasoning tasks with long inputs | More complex architecture; might require careful prompt engineering |
| Distilled models (e.g., R1‑Distill‑Qwen‑7B) | Distillation of R1 or V3; ~7 B parameters | 8–16 k tokens | Fast and cost‑effective; easy to host on consumer hardware | Real‑time applications, edge devices, moderate reasoning | Lower reasoning depth; shorter context |
| DeepSeek‑OCR (planned) | OCR model for image‑to‑text | N/A (image input) | Extracts text from images; extends DeepSeek capabilities | Document scanning, receipt digitisation, ID verification | Not yet available on Clarifai (as of Oct 2025) |

Why should you choose DeepSeek via Clarifai over self‑hosting or other providers? Key benefits include:


Running DeepSeek models on Clarifai offers cost savings, scalability, open‑source flexibility, and leading reasoning performance. AI adoption is mainstream, investment is surging and generative‑AI projects deliver substantial ROI. Clarifai’s managed infrastructure lowers the barrier to entry while ensuring security and compliance.

DeepSeek’s reasoning abilities and long context make it suitable for various applications:

Additionally, upcoming models such as DeepSeek‑OCR (announced but not yet available at the time of writing) will enable text extraction from images and documents, further broadening use cases.

DeepSeek models can power chatbots, coding copilots, document analysers, tutors and moderation tools. Evaluate task complexity, context length, latency and integration requirements. Adoption is growing: 21 % of organisations have redesigned workflows, and generative AI delivers significant cost savings and revenue gains.

Q1: Do I need a DeepSeek API key?

No. When using Clarifai, you only need a Clarifai Personal Access Token. Do not use or expose the DeepSeek API key unless you are calling DeepSeek directly (which this guide does not cover).

Q2: How do I switch between models in code?

Change the model value to the Clarifai model URL, as in the code examples in this guide: https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1 for R1 or https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1 for V3.1.
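One way to keep model switching in a single place is a small lookup table. The sketch below uses the model URLs that appear in this guide's code examples; the short keys (`r1`, `v3.1`) and the `build_request` helper are hypothetical names chosen for illustration.

```python
# Model URLs as they appear in the code examples in this guide.
MODEL_URLS = {
    "r1": "https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1",
    "v3.1": "https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
}


def build_request(model_key, prompt):
    """Return keyword arguments for a chat completion call (hypothetical helper)."""
    if model_key not in MODEL_URLS:
        raise KeyError(f"unknown model key: {model_key!r}")
    return {
        "model": MODEL_URLS[model_key],
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_request("v3.1", "Summarise this paragraph.")
print(request["model"])
```

The resulting dictionary can be unpacked into the client call, for example `client.chat.completions.create(**request)`.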

Q3: What parameters can I tweak?

You can adjust temperature, top_p and max_tokens (max_completion_tokens in the OpenAI-compatible examples above) to control randomness, sampling breadth and output length. For streaming responses, set stream=True. Tool calling requires defining a tool schema.

Q4: Are there rate limits?

Clarifai enforces soft rate limits per PAT. Implement exponential backoff and avoid retrying 4XX errors. For high throughput, contact Clarifai to increase quotas.
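A minimal sketch of that retry policy is shown below, assuming errors expose an HTTP status code. `ApiError` and `call_with_backoff` are hypothetical names. With the OpenAI client you would catch its own exception types instead, and the delay values are illustrative defaults rather than Clarifai recommendations.

```python
import random
import time


class ApiError(Exception):
    """Hypothetical error type carrying an HTTP status code."""

    def __init__(self, status_code):
        super().__init__(f"HTTP {status_code}")
        self.status_code = status_code


def call_with_backoff(func, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying non-4XX failures with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return func()
        except ApiError as err:
            # 4XX errors are not retried, following the guidance above.
            if 400 <= err.status_code < 500:
                raise
            # Give up once the final attempt has failed.
            if attempt == max_attempts - 1:
                raise
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)


# Demo: fail twice with 503, then succeed. Sleeping is stubbed out to keep it fast.
attempts = {"count": 0}


def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ApiError(503)
    return "ok"


delays = []
result = call_with_backoff(flaky, sleep=delays.append)
print(result, attempts["count"])
```

The random jitter spreads retries out so that many clients recovering at once do not hit the API in lockstep.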

Q5: Is my data secure?

Clarifai processes requests in secure environments and complies with major data‑protection standards. Store your PAT securely and avoid including sensitive data in prompts unless necessary.

Q6: Can I fine‑tune DeepSeek models?

DeepSeek models are MIT‑licensed. Clarifai plans to offer private hosting and fine‑tuning for enterprise customers in the near future. Until then, you can download and fine‑tune the open‑source models on your own infrastructure.

You now have a fast, standard way to integrate DeepSeek models, including R1, V3.1, and distilled variants, into your applications. Clarifai handles all infrastructure, scaling, and orchestration. No separate DeepSeek key or complex setup is needed. Try the models today through the Clarifai Playground or API and integrate them into your applications.

Developer advocate specializing in machine learning. Summanth works at Clarifai, where he helps developers get the most out of their ML efforts. He usually writes about compute orchestration, computer vision, and new trends in AI and technology.

