Quick Summary: What is the difference between AI, machine learning, deep learning and large language models? AI is the broad pursuit of machines that can perform tasks requiring intelligence; machine learning (ML) is a subset where systems learn patterns from data; deep learning uses neural networks with many layers to learn complex features; large language models (LLMs) are deep learning models trained on huge text datasets to generate and understand language. This article uses these distinctions as a foundation to explore the most important ML concepts and algorithms and shows how platforms like Clarifai can help you build and deploy them.

Artificial intelligence has moved from science fiction to boardroom priority. To navigate this rapidly changing landscape, it helps to distinguish between the major categories of AI. Machine learning algorithms rely on data and statistical techniques to make predictions or decisions without explicit programming. Deep learning is a subfield of ML where neural networks with many layers learn hierarchical representations of data. Large language models like GPT and domain‑specific small language models (SLMs) are built using deep learning and specialize in understanding and generating human language. Understanding these layers of the AI stack is important because each comes with different capabilities, resource requirements and ethical considerations. A 2024 survey cited by MIT Sloan found that 64 % of senior data leaders considered generative AI the most transformative technology. Clarifai supports both traditional ML and modern LLM workflows through its model training, compute orchestration and inference services, making it easier to experiment across the AI spectrum.

Quick Summary: What does this article cover in one glance? It outlines the fundamental learning paradigms, deep learning architectures, representation and transfer learning, distributed and reinforcement learning extensions, probabilistic models, generative AI, optimization and AutoML, explainable AI, emerging trends, real‑world applications, key algorithms and a conclusion with FAQs. Each section provides expert insights, citations and practical examples, with guidance on how Clarifai’s platform can accelerate your work.

This article is divided into thematic sections so you can either skim or dive deep. We start by explaining the core learning paradigms—supervised, unsupervised, semi‑supervised, self‑supervised and reinforcement learning—and then explore how different neural network architectures work. We discuss representation and transfer learning, federated and distributed learning, and advanced topics like multi‑agent reinforcement learning and probabilistic models. We then pivot to generative AI, optimization and AutoML, and the growing field of explainable AI. A dedicated section on emerging trends highlights machine unlearning, small language models, agentic AI, IoT convergence and more. We also explore real‑world applications and how Clarifai’s compute orchestration, local runners and inference API can help you deploy models across cloud and edge environments. Finally, we walk through ten key machine learning algorithms with intuitive explanations and sample code.

According to the AI Index 2025 report, business adoption of AI jumped from 55 % in 2023 to 78 % in 2024, and private investment in AI in the U.S. reached $109.1 billion, with $33.9 billion directed toward generative AI. This rapid acceleration underscores the need for both foundational knowledge and awareness of cutting‑edge trends, which we provide throughout this guide.

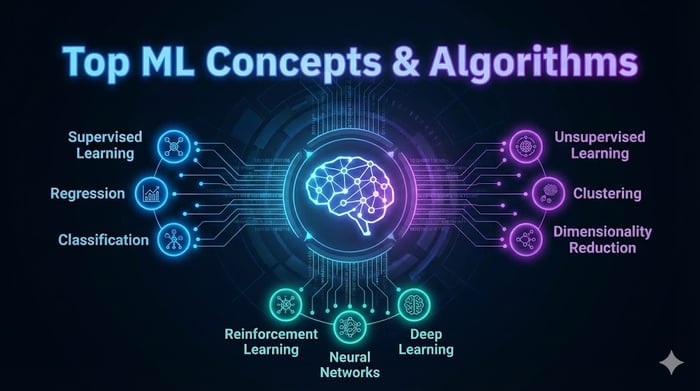

Quick Summary: What are the main ways machines learn? They learn through supervised learning (using labelled examples), unsupervised learning (finding patterns without labels), semi‑supervised learning (combining few labels with many unlabeled examples), self‑supervised learning (generating labels from data itself), and reinforcement learning (learning through trial and error to maximize rewards).

Supervised learning is the workhorse of machine learning. Models are trained on pairs of input features and corresponding output labels. Once trained, these models can predict the output for unseen inputs. Tasks include classification (predicting discrete categories such as spam/not spam emails) and regression (predicting continuous values like house prices). Success depends on the quality and quantity of labelled data and the choice of algorithm. If you want to build a supervised model quickly, Clarifai’s AutoML tools can ingest your labelled dataset, train multiple candidate models and recommend the best performer.

Unlike supervised learning, unsupervised learning deals with unlabeled data. Algorithms like K‑means clustering group similar data points by minimizing within‑cluster variance. Dimensionality reduction techniques such as PCA convert correlated features into uncorrelated principal components, allowing you to visualize high‑dimensional data. Unsupervised learning is widely used for discovering customer segments, anomaly detection, or compressing data before feeding into other algorithms. Clarifai’s vector‑search capabilities can embed high‑dimensional inputs into a searchable space for clustering and similarity search.
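To make the clustering idea concrete, here is a minimal sketch of K‑means (Lloyd's algorithm) in plain Python. The toy points and the hand-picked starting centroids are assumptions for illustration; real implementations usually pick starting centroids randomly or with k-means++ and run several restarts.

```python
def kmeans(points, init_centroids, iters=10):
    """Lloyd's algorithm on 2-D points; returns (centroids, assignments)."""
    centroids = list(init_centroids)
    k = len(centroids)
    assignments = []
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(
                range(k),
                key=lambda c: (p[0] - centroids[c][0]) ** 2 + (p[1] - centroids[c][1]) ** 2,
            )
            for p in points
        ]
        # Update step: move each centroid to the mean of its members,
        # which is what reduces the within-cluster variance.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, assignments


# Two visibly separate blobs (illustrative data).
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
centroids, assignments = kmeans(points, init_centroids=[(0.0, 0.0), (10.0, 10.0)])
print(assignments)  # the first three points share one cluster, the last three the other
```

No labels are used anywhere: the grouping comes only from distances between points.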

Labelled data can be expensive to obtain; imagine hand‑labelling thousands of medical images. Semi‑supervised learning offers a compromise: a small set of labelled examples guides the learning from a much larger set of unlabeled data, often using techniques like self‑training or graph‑based propagation. This paradigm is crucial for domains where labels are rare. Many modern LLMs follow a related recipe, combining pre‑training on large unlabeled corpora with fine‑tuning on a much smaller labelled set. If you have a large unlabeled dataset but only a handful of annotated samples, Clarifai’s annotation workflow and human‑in‑the‑loop tools can help you bootstrap a semi‑supervised pipeline.

Self‑supervised learning (SSL) goes a step further by creating surrogate tasks that generate labels from the data itself. For example, in natural language, the model is trained to predict the next word given previous words, or to fill in masked tokens. In computer vision, the model might predict whether two patches belong to the same image. By solving these pretext tasks, the model learns useful representations that can later be fine‑tuned for specific tasks. This approach has allowed LLMs to scale to trillions of parameters using unlabelled data. You can experiment with self‑supervised pre‑training using Clarifai’s open model hub and fine‑tune on your downstream tasks without starting from scratch.
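The two language pretext tasks described above can be sketched in a few lines. This is only an illustration of how labels fall out of raw text; the helper names, the whitespace tokenizer and the `[MASK]` placeholder are assumptions, not any particular library's API.

```python
def next_word_pairs(tokens):
    """Turn a token sequence into (context, target) pairs for next-word prediction."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def mask_tokens(tokens, positions, mask="[MASK]"):
    """Hide the tokens at `positions`; the hidden tokens become the labels."""
    masked = [mask if i in positions else t for i, t in enumerate(tokens)]
    labels = {i: tokens[i] for i in positions}
    return masked, labels


tokens = "the cat sat on the mat".split()

pairs = next_word_pairs(tokens)
print(pairs[0])   # (['the'], 'cat')

masked, labels = mask_tokens(tokens, positions={1, 5})
print(masked)     # ['the', '[MASK]', 'sat', 'on', 'the', '[MASK]']
print(labels)     # {1: 'cat', 5: 'mat'}
```

Every training target here was already present in the sentence, which is why no human annotation is needed.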

In reinforcement learning (RL), agents learn optimal behaviours by interacting with an environment. They take actions and receive rewards or penalties, learning strategies that maximize cumulative reward over time. RL has powered breakthroughs in robotics, game playing (e.g., AlphaGo), and real‑time decision systems. Components of RL include the agent, environment, state, actions and a reward signal. Clarifai’s compute orchestration can spin up GPU clusters to train RL agents efficiently and scale across multiple simulations.
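As a rough illustration of the agent, environment, state, action and reward loop, the sketch below runs tabular Q‑learning on a made-up five-state corridor where only the right-most state pays a reward. The environment, the hyperparameters and the purely random exploration policy are all assumptions chosen to keep the example short; Q‑learning is one RL algorithm among many.

```python
import random

N_STATES, GOAL = 5, 4          # states 0..4 on a line; state 4 is terminal
ACTIONS = (-1, +1)             # move left or move right


def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                    # cap the episode length
            a = rng.randrange(2)                # explore uniformly at random
            nxt, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q = q_learning()
# Greedy policy read off the learned values for the non-terminal states.
policy = [ACTIONS[max((0, 1), key=lambda a: q[s][a])] for s in range(GOAL)]
print(policy)  # each entry is the preferred move (+1 = right) in that state
```

The agent is never told that "right" is correct; it infers this from the reward signal alone.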

Expert Insights: Self‑supervised pre‑training has emerged as a critical stepping stone for LLMs, enabling them to harness vast amounts of unlabeled data and achieve human‑level performance in language tasks. Semi‑supervised learning, once a niche academic topic, is now essential for domains where labelling is prohibitively expensive—medical imaging, legal documents and biological data. Reinforcement learning’s ability to tackle sequential decision‑making problems makes it an important foundation for autonomous vehicles and robotics.

Quick Summary: How do neural networks and deep learning architectures work? Neural networks are computational graphs of interconnected neurons that transform inputs through layers of learned weights. Convolutional neural networks (CNNs) excel at vision tasks, recurrent neural networks (RNNs) handle sequences, and graph neural networks (GNNs) operate on graph‑structured data. Deep learning models learn hierarchical features by stacking many layers.

At their core, neural networks approximate complex functions by composing many simple operations. Each neuron takes weighted inputs, applies an activation function and passes output to the next layer. By stacking layers, networks can model highly non‑linear relationships. Training involves adjusting weights using optimization algorithms like gradient descent to minimize a loss function. Deep networks can overfit, so techniques such as dropout, batch normalization and early stopping are used to generalize better. Clarifai’s platform lets you deploy both pretrained and custom neural networks to power vision, language and audio applications.
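A tiny example shows why stacking layers matters. The network below has two hidden ReLU neurons and one output neuron, and it computes XOR, which no single linear layer can represent. The weights are hand-picked for illustration rather than learned by gradient descent.

```python
def relu(z):
    """Activation function: pass positive values, clamp negatives to zero."""
    return max(0.0, z)


def tiny_network(x1, x2):
    """Two hidden ReLU neurons followed by one linear output neuron."""
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)   # weighted sum plus bias, then activation
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    return 1.0 * h1 - 2.0 * h2             # output layer combines the hidden features


outputs = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)  # {(0, 0): 0.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 0.0}
```

Training would replace the hand-picked numbers with values found by minimizing a loss, but the forward computation stays the same.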

CNNs are tailored for grid‑structured data like images. They use convolutional layers to learn spatial hierarchies—early layers detect edges and textures, while deeper layers recognize higher‑level features like faces or objects. The weight sharing and local connectivity of CNNs make them parameter‑efficient and translation‑invariant. CNNs power everything from image classification to object detection and segmentation. Clarifai offers pretrained vision models built with CNNs that you can fine‑tune for your domain and deploy via its inference API.
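The sketch below shows the core operation of a convolutional layer: one small kernel slid across the whole image, so the same weights are reused at every position. The 4×4 image and the vertical-edge kernel are illustrative assumptions; in a trained CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-written kernel that responds where brightness rises from left to right.
kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The response is non-zero only in the column where the edge sits, and it would move with the edge if the image were shifted.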

When inputs form a sequence—sentences, time series, audio—recurrent neural networks (RNNs) and their variants like LSTMs and GRUs capture temporal dependencies by maintaining hidden state across time steps. They have been largely supplanted by transformers, which rely on self‑attention to model long‑range dependencies more efficiently. However, RNNs remain instructive for understanding sequence modelling and are still used in resource‑constrained environments.
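To see what "maintaining hidden state across time steps" means, consider this scalar recurrent cell. It is a deliberately minimal sketch: the weights `w_x` and `w_h` are arbitrary illustrative values, and real RNNs, LSTMs and GRUs use vectors, matrices and gating.

```python
import math


def run_rnn(inputs, w_x=1.0, w_h=0.5):
    """Scalar RNN: the hidden state h summarises everything seen so far."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)   # the same weights are reused at every step
        states.append(h)
    return states


early = run_rnn([1.0, 0.0, 0.0])   # signal at the first time step
late = run_rnn([0.0, 0.0, 1.0])    # same signal at the last time step

print(early)
print(late)
```

Both sequences contain the same values, yet the final hidden states differ because order matters. The early signal also fades with each step, which hints at why long-range dependencies are hard for plain RNNs.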

Standard neural networks assume input data lies on a Euclidean grid. Many problems—social networks, molecular structures, knowledge graphs—are inherently graph‑structured. Graph neural networks generalize deep learning to graphs, allowing nodes to aggregate information from their neighbours. DataCamp notes that GNNs can predict properties of nodes, edges and entire graphs, and they were inspired by convolutional networks and embedding techniques. They excel at tasks like link prediction and recommendation systems but face challenges: networks are often shallow, scaling is hard and memory requirements are high. If you need to deploy a GNN for a custom application, Clarifai’s local runners let you run your model on‑prem or edge hardware and expose it securely via a public endpoint.
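Neighbour aggregation can be sketched without any framework. The function below performs one round of mean aggregation over an undirected graph, including each node's own features. It is a simplification: the graph and features are made up, and a real GNN layer would also apply a learned weight matrix and a non-linearity after aggregating.

```python
def mean_aggregate(features, edges):
    """One round of message passing: average a node's own and neighbours' features."""
    neighbours = {node: [node] for node in features}   # start with a self-loop
    for u, v in edges:                                 # edges are undirected
        neighbours[u].append(v)
        neighbours[v].append(u)
    dim = len(next(iter(features.values())))
    return {
        node: [sum(features[n][d] for n in nbrs) / len(nbrs) for d in range(dim)]
        for node, nbrs in neighbours.items()
    }


# A three-node path graph: a - b - c
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
edges = [("a", "b"), ("b", "c")]

updated = mean_aggregate(features, edges)
print(updated["a"])  # [0.5, 0.5]
print(updated["c"])  # [0.5, 1.0]
```

Stacking several such rounds lets information travel further across the graph, one hop per layer.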

Expert Insights: The deep learning revolution has been driven not just by algorithms but also by parallel compute. GPUs, TPUs and specialized accelerators have enabled networks with billions of parameters to train in days. For practitioners, understanding the right architecture for the job—CNNs for images, sequence models or transformers for text, GNNs for graph data—is essential. Clarifai abstracts much of the deployment complexity via compute orchestration, letting you choose the appropriate hardware and scaling strategy.

Quick Summary: Why are representations and transfer learning important? Representation learning discovers meaningful patterns and features from raw data, enabling better performance and interpretability. Transfer learning reuses knowledge from a source task to improve a related target task. Together, they allow you to leverage existing models and small datasets effectively.

Modern ML models are only as good as the features they learn. Representation learning aims to transform raw inputs (pixels, words, signals) into informative patterns that capture underlying factors of variation. Learned representations should be compact, disentangled and generalizable—for example, a vision model might learn edges, shapes and textures that transfer across tasks. Representation learning underlies the success of autoencoders, self‑supervised LLMs and deep generative models. When using Clarifai, you can access pretrained embeddings for images and text or train your own models and index vectors for efficient similarity search.

Building a model from scratch requires lots of data and compute. Transfer learning addresses this by reusing a model trained on a large dataset as a starting point for a different but related problem. For example, a CNN trained on ImageNet can be repurposed to classify medical images by fine‑tuning its later layers. This not only speeds up training but often results in better performance due to rich feature representations. Transfer learning is central to language models: GPT‑style architectures are pretrained on web‑scale corpora and fine‑tuned for chat, summarization or domain‑specific tasks. Clarifai’s platform allows you to upload your own models, fine‑tune them on your data and deploy them with minimal overhead.

Expert Insights: Representation learning is moving toward unsupervised and self‑supervised paradigms to avoid manual feature engineering. Transfer learning has democratized deep learning by enabling individuals and small teams to build state‑of‑the‑art models without huge datasets. However, be mindful of domain shift: a model trained on photographs may not transfer well to medical scans. Fine‑tuning requires careful hyperparameter choices. Clarifai provides resources and community examples to help you apply transfer learning effectively.

Quick Summary: How can we train models without moving data? Federated learning trains models across decentralized devices where data stays local; model updates are aggregated centrally to build a global model. Distributed learning divides computation across multiple machines to speed up training. Both approaches help address privacy and scalability challenges.

In traditional centralized learning, data from all users is collected in a single location. This raises privacy concerns and may conflict with data sovereignty laws. Federated learning offers an alternative: each client (e.g., smartphone, hospital) trains a local model on its data; only the model updates (gradients or weights) are sent to a central server, where they are averaged to update a global model. This preserves user privacy and reduces the need to transfer large datasets. However, challenges include handling heterogeneity in client hardware and data distribution. Federated learning is especially useful for applications like keyboard prediction, personalized recommendations and healthcare. Clarifai’s local runners can complement federated scenarios by allowing developers to run models locally and integrate them with cloud workflows.
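The server-side aggregation step can be sketched as a weighted average of client weights. Weighting each client by its local dataset size follows the common federated averaging (FedAvg) recipe and is an assumption here, as are the toy weight vectors; the source only says the updates are averaged.

```python
def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighting each by its local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[d] * n for w, n in zip(client_weights, client_sizes)) / total
        for d in range(dim)
    ]


# Each client trains locally and reports only its weights and sample count.
client_weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
client_sizes = [10, 30, 60]

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)  # [4.0, 5.0]
```

Note that the raw training examples never appear in this function; only model parameters cross the network.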

Large datasets and models require splitting computation across multiple GPUs or machines. Distributed training techniques like data parallelism and model parallelism break up training tasks to accelerate convergence. Frameworks such as Horovod, DeepSpeed and PyTorch Distributed automate this process. For RL and simulation workloads that generate vast amounts of experience, distributed training is indispensable. With Clarifai’s compute orchestration, you can launch clusters in shared SaaS, dedicated SaaS, or self‑managed VPC environments. Autoscaling ensures that resources match your workload demands, and integrated monitoring provides visibility into performance and cost.
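Data parallelism rests on a simple identity: if each worker computes the gradient on an equal-sized shard of the batch, the average of those gradients equals the gradient on the full batch. The sketch below checks this for a one-parameter model; the data, the model and the two in-process "workers" are illustrative assumptions standing in for real GPUs and an all-reduce call.

```python
def gradient(w, xs, ys):
    """Gradient of mean squared error for the model y = w * x."""
    return 2 / len(xs) * sum((w * x - y) * x for x, y in zip(xs, ys))


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.5

# Split the batch into two equal shards, one per simulated worker.
shards = [(xs[:2], ys[:2]), (xs[2:], ys[2:])]
worker_grads = [gradient(w, sx, sy) for sx, sy in shards]

averaged = sum(worker_grads) / len(worker_grads)   # stands in for an all-reduce
full = gradient(w, xs, ys)

print(worker_grads)     # [-7.5, -37.5]
print(averaged, full)   # -22.5 -22.5
```

With unequal shard sizes the average would need to be weighted by shard size to keep this equality.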

Expert Insights: Federated learning is poised to expand as privacy regulations tighten and organizations seek to keep data on‑premise. However, model quality can suffer due to non‑IID data distributions and limited compute on edge devices. Hybrid approaches combine federated and centralized training to balance privacy and performance. Distributed training remains essential for cutting‑edge research; getting it right requires understanding bottlenecks in networking and hardware. Clarifai’s platform offers flexible deployment options—from serverless endpoints to dedicated clusters—to support both paradigms.

Quick Summary: What happens when multiple agents learn together? Multi‑agent reinforcement learning (MARL) extends RL to environments with multiple interacting agents. Each agent must adapt not only to the environment but also to other agents’ behaviours.

In MARL, multiple agents simultaneously interact with a shared environment. They may cooperate, compete or both. A simple example is traffic control: autonomous cars must coordinate at intersections without collisions. Non‑stationarity is a major challenge—because agents are learning and adapting, the environment is constantly changing. A common framework for MARL is the Markov game, a multi‑player generalization of Markov decision processes. Techniques like independent Q‑learning train agents separately, while centralized training with decentralized execution uses a joint critic during training and independent policies at deployment. Clarifai’s compute orchestration can run large‑scale MARL simulations on GPU clusters, while its inference API allows real‑time deployment of trained policies.

Multi‑agent systems underpin swarm robotics, automated negotiation, supply‑chain optimization and multi‑player games. They are also increasingly used in generative design, where multiple generative agents collaborate to explore a design space. Key challenges include scalability, credit assignment and the stability of joint learning. Clarifai’s platform helps by providing observability into training metrics and enabling reproducible experiments across distributed environments.

Expert Insights: MARL research is moving toward emergent communication protocols, enabling agents to share information efficiently. It also intersects with agentic AI, where digital agents autonomously plan and execute tasks. The Splunk article on AI trends notes that agentic and autonomous systems are already driving innovation and that this market could reach $62 billion by 2026. As these technologies mature, platforms like Clarifai that can orchestrate complex multi‑agent workloads will become increasingly valuable.

Quick Summary: Why model uncertainty? Probabilistic and Bayesian methods treat model parameters and predictions as random variables, allowing you to quantify uncertainty and update beliefs based on new evidence.

At the heart of Bayesian learning lies Bayes’ theorem:

$$P(\theta \mid D) = \frac{P(D \mid \theta)\, P(\theta)}{P(D)}$$

where $P(\theta \mid D)$ is the posterior distribution of parameters $\theta$ given data $D$, $P(D \mid \theta)$ is the likelihood, $P(\theta)$ is the prior belief about $\theta$, and $P(D)$ is the marginal likelihood. This framework allows you to update your prior beliefs after observing data. Bayesian methods provide a principled way to incorporate prior knowledge and measure uncertainty, which is especially important in medical diagnosis, finance and any domain where the cost of wrong decisions is high.
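A worked example helps here. Suppose the unknown is binary (a patient either has a condition or not) and the data is a positive test result. The prevalence, sensitivity and false-positive rate below are made-up numbers for illustration only.

```python
def posterior(prior, likelihood, likelihood_if_not):
    """Bayes' theorem for a binary hypothesis.

    prior             -- P(hypothesis)
    likelihood        -- P(data | hypothesis)
    likelihood_if_not -- P(data | not hypothesis)
    """
    # Marginal likelihood P(data): sum over both possibilities.
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence


# Assumed numbers: 1% prevalence, 95% sensitivity, 5% false-positive rate.
p = posterior(prior=0.01, likelihood=0.95, likelihood_if_not=0.05)
print(round(p, 3))  # 0.161
```

Even after a positive result from a fairly accurate test, the posterior is only about 16 % because the prior was so low. This is the kind of calibrated uncertainty that matters in high-stakes decisions.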

Naive Bayes classifiers assume that features are conditionally independent given the class label. Despite this simplification, they are surprisingly effective and computationally efficient for text classification, spam filtering and sentiment analysis. More advanced Bayesian models include Bayesian linear regression, Gaussian processes and Bayesian neural networks, which place probability distributions over weights. Probabilistic programming frameworks like PyMC and Stan make it easier to specify and sample from complex models.

Quantifying uncertainty helps inform decisions, allocate resources and detect outliers. For example, in autonomous driving, a model might estimate the probability of an obstacle’s position; the car can then choose a safer trajectory if uncertainty is high. Bayesian models also enable active learning, where the algorithm selects the most informative data points to label. Clarifai supports custom Python models through its local runners, so practitioners can deploy Bayesian inference models and integrate them into production pipelines while retaining control over data and compute.

Expert Insights: Bayesian thinking encourages us to view predictions as distributions rather than point estimates. This is increasingly important when building AI systems that must be trustworthy and robust. By integrating probabilistic reasoning into our models, we acknowledge what we don’t know and can plan accordingly. Tools like Clarifai enable secure deployment of custom Bayesian models, bridging the gap between experimentation and production.

Quick Summary: How do generative models create new content? Generative AI learns the underlying distribution of data to produce new samples—text, images, music or code—that resemble the training data. Major families include generative adversarial networks (GANs), variational autoencoders (VAEs) and diffusion models. Smaller models known as small language models (SLMs) are emerging to address the limitations of large language models.

Traditional ML models are discriminative: they model the decision boundary between classes. Generative models instead learn to model the entire data distribution, allowing them to generate new samples. By learning the joint probability $P(x, y)$, generative models can both generate synthetic data and perform inference. This makes them useful for data augmentation, simulation and creative applications.

Introduced by Ian Goodfellow, GANs pit two neural networks against each other: a generator that produces fake samples and a discriminator that tries to distinguish real from fake. Through this adversarial game, the generator learns to produce realistic data. GANs have revolutionized image synthesis, style transfer and super‑resolution. Variants such as conditional GANs allow you to control attributes of the generated output, while cycle‑GANs translate between domains (e.g., photographs to paintings). Clarifai’s generative capabilities allow you to experiment with GANs and run inference on your own content generation models.

Variational Autoencoders (VAEs) encode inputs into a latent space and decode them back, learning a probability distribution over latent variables. They are useful for unsupervised representation learning and generative modelling, though they often produce blurrier images than GANs. Diffusion models, such as those behind state‑of‑the‑art image generators, start from noise and iteratively denoise to produce images. They offer better mode coverage and image quality at the cost of slower inference.

Large language models are powerful but resource‑hungry. Small language models aim to offer similar capabilities with fewer parameters. Forbes notes that SLMs are 5–10× smaller than LLMs, making them cheaper to run, easier to customize and less prone to hallucinations. SLMs like Qwen deliver enterprise‑grade performance while running on commodity hardware, which is especially useful for privacy‑sensitive environments. Clarifai’s platform can host custom SLMs, enabling domain‑specific text and code generation with lower latency and cost.

Generating high‑quality outputs requires careful prompt engineering: crafting the right instructions, constraints and examples. Good prompts reduce hallucination and bias. Equally important is alignment: ensuring generative models behave according to human values and legal constraints. Safety techniques include reinforcement learning from human feedback (RLHF), adversarial training and post‑training filters. Clarifai offers moderation and bias‑detection tools to help you build responsible generative applications.

Expert Insights: Generative AI is revolutionizing creative industries, product design and software development. However, it raises questions about originality, copyright and misinformation. Models like DALL·E, Stable Diffusion and ChatGPT demonstrate both potential and risk. SLMs and domain‑specific generative models may strike a better balance between capability and control. The Splunk report highlights agentic and autonomous AI systems as one of the top trends for 2026; generative models will be key components of these agents, enabling them to produce content and decisions autonomously.

Quick Summary: How do models learn and how can we automate their training? Optimization algorithms like gradient descent adjust model parameters to minimize error, while AutoML automates model selection, hyperparameter tuning and deployment.

Training an ML model involves minimizing a loss function that measures the difference between predictions and true labels. The most common optimization algorithm is gradient descent, which iteratively updates parameters in the direction of the steepest descent. Variants include stochastic gradient descent (SGD), which uses mini‑batches for efficiency, and advanced optimizers like Adam, RMSProp and LAMB that adapt learning rates during training. Regularization techniques like L1/L2 penalties and dropout help prevent overfitting.
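Here is a minimal sketch of full-batch gradient descent fitting a line under mean squared error. The data (generated from y = 2x + 1), the learning rate and the step count are assumptions chosen so the loop converges; SGD would differ only in computing the gradient on a random mini‑batch at each step.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the loss with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient, i.e. in the direction of steepest descent.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]     # exactly y = 2x + 1

w, b = fit_line(xs, ys)
print(round(w, 4), round(b, 4))    # 2.0 1.0
```

A learning rate that is too large makes the updates overshoot and diverge, which is one reason adaptive optimizers such as Adam are popular.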

Choosing hyperparameters—learning rate, depth of a tree, number of neighbors—is often more impactful than the choice of algorithm. Methods include grid search, random search, Bayesian optimization and evolutionary strategies. Monitoring metrics such as cross‑validation accuracy or AUC helps identify optimal settings. Clarifai’s platform integrates with hyperparameter tuning frameworks and logs experiment metadata so you can reproduce and compare results.
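Grid search is the simplest of these methods: try every combination and keep the one with the best validation score. The sketch below tunes the learning rate and step count of a small gradient-descent fitter; the model, the data split and the grid values are illustrative assumptions.

```python
from itertools import product


def fit_line(xs, ys, lr, steps):
    """Full-batch gradient descent for y = w * x + b under mean squared error."""
    w, b, n = 0.0, 0.0, len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= lr * 2 / n * sum(e * x for e, x in zip(errors, xs))
        b -= lr * 2 / n * sum(errors)
    return w, b


def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Train on one slice of the data, score on a held-out validation slice.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
val_x, val_y = [4.0, 5.0], [9.0, 11.0]

grid = {"lr": [0.001, 0.01, 0.05], "steps": [10, 100]}
results = {}
for lr, steps in product(grid["lr"], grid["steps"]):
    w, b = fit_line(train_x, train_y, lr, steps)
    results[(lr, steps)] = mse(w, b, val_x, val_y)

best = min(results, key=results.get)   # configuration with the lowest validation error
print(best, results[best])
```

Random search and Bayesian optimization replace the exhaustive `product` loop with smarter sampling, which matters once the grid has more than a few dimensions.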

AutoML platforms aim to democratize ML by automating data preprocessing, feature engineering, model selection and tuning. They typically build ensembles of models and choose the best performing one based on a validation metric. GeeksforGeeks notes that AutoML democratizes ML by reducing expertise requirements. This is especially relevant as the field faces a talent shortage and high deployment failure rates. Clarifai’s AutoML capabilities allow users to upload data, specify an objective and automatically train and evaluate models. Under the hood, it uses techniques like neural architecture search and meta‑learning to find strong performers.

Expert Insights: While optimization remains a fundamental skill, AutoML is becoming indispensable for non‑experts and organizations that need quick results. However, expert oversight is still necessary to interpret results, ensure fairness and address domain‑specific nuances. AutoML systems can sometimes overfit or select overly complex models; using them in combination with human judgement yields the best outcomes. Clarifai’s compute orchestration ensures AutoML runs efficiently on the right hardware and scales to meet enterprise workloads.

Quick Summary: How can we trust AI models? Explainable AI (XAI) provides insights into how models make decisions, while ethical considerations address fairness, bias, privacy and regulatory compliance.

Explainable AI encompasses techniques that help humans understand, trust and debug machine learning models. Qlik explains that XAI seeks to shed light on why a model produced a particular output, boosting trust and enabling regulatory compliance. Benefits include improved decision‑making, faster model optimization, increased adoption and meeting legal requirements. Approaches range from global explanations, which summarize how features contribute to overall model behaviour, to local explanations, which explain individual predictions. There is also a distinction between directly interpretable models (e.g., linear models, decision trees) and post‑hoc explanations that approximate complex models (e.g., SHAP values, LIME).

AI systems can perpetuate or amplify biases present in training data. Ensuring fairness requires evaluating models across different demographic groups, using fairness metrics (e.g., demographic parity, equalized odds) and applying debiasing techniques (reweighting, adversarial debiasing). Transparency in data collection and model design is critical. Clarifai’s monitoring tools can track model performance across subsets of data and alert you to potential bias.
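One of the metrics named above, demographic parity, can be checked in a few lines: compare the rate of positive predictions across groups. The predictions and group labels below are made up for illustration, and the function name is hypothetical rather than taken from a fairness library.

```python
def demographic_parity_difference(predictions, groups):
    """Return (largest gap in positive-prediction rate, per-group rates)."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: sum(p) / len(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# 1 = model approved, 0 = model rejected (illustrative data).
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

A gap of zero means every group receives positive predictions at the same rate. Equalized odds goes further by comparing error rates conditioned on the true label.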

Regulations like the GDPR and upcoming AI Act in Europe emphasize data protection, transparency and accountability. Privacy‑enhancing technologies such as differential privacy and federated learning help protect user data. XAI practices and documentation will be crucial to meet compliance requirements. Clarifai’s data governance features allow you to control who can access your models, manage consent and audit usage.

Expert Insights: There is a trade‑off between model complexity and interpretability: more accurate models (e.g., deep neural networks) are often harder to explain. Emerging research aims to build inherently interpretable models or to develop more faithful explanation methods. Ethical AI is not just about technical solutions but also about inclusive teams—women represent only 12 % of AI researchers—and clear governance structures. Integrating XAI, fairness and privacy considerations into development and deployment is essential for long‑term success.

Quick Summary: What new developments are shaping the future of ML? Small language models, machine unlearning, agentic AI, interoperability and MLOps, IoT convergence and AI‑optimized hardware are key trends. Adoption is surging and inference costs are falling.

Small language models (SLMs) are increasingly attractive for businesses that need privacy, lower costs and domain specificity. Forbes notes that SLMs are 5–10× smaller than LLMs yet still powerful, reducing hallucinations and running efficiently on commodity hardware. Models like Qwen demonstrate that size is not everything. Additionally, vertical AI—models trained on industry‑specific data—offers better performance for healthcare, finance and retail. The Splunk article highlights the rise of vertical AI and projects a 21 % CAGR in its market through 2034. Clarifai’s platform enables you to deploy SLMs or vertical models and manage their lifecycle.

As privacy regulations tighten, machine unlearning techniques allow models to “forget” specific training data, reducing privacy risks and storage costs. This is crucial for compliance with “right to be forgotten” laws. Implementing machine unlearning requires efficient removal of the influence of particular samples without retraining from scratch. Tools like Clarifai’s model versioning and dataset management can help keep track of data provenance and enable unlearning workflows.

Agentic AI refers to AI systems that can autonomously plan and execute tasks, interacting with tools and environments. Splunk notes that agentic and autonomous systems are leading innovation and will create a market valued at $62 billion by 2026. This growth underscores the need for frameworks that coordinate multiple AI modules. Machine Intelligence Quotient (MIQ) measurements are emerging to standardize how we assess AI capabilities. Clarifai’s compute orchestration and reasoning engine enable agentic pipelines where different models coordinate to accomplish complex tasks.

With the proliferation of models and frameworks, interoperability is critical. The Open Neural Network Exchange (ONNX) provides a common format for deploying models across platforms. MLOps practices ensure models make it from prototype to production. Without them, 80 % of ML models never reach deployment. AutoML, monitoring, CI/CD and versioning are essential components of MLOps. Clarifai’s platform offers built‑in MLOps capabilities, including model registry, deployment management and monitoring.

AI at the edge brings intelligence to sensors, cameras and IoT devices. The convergence of IoT and machine learning enhances predictive maintenance, smart cities and real‑time decision making. GeeksforGeeks highlights the growth of IoT+ML systems for predictive analytics. Clarifai’s local runners provide a bridge between cloud and edge by exposing locally hosted models via secure public endpoints; they support edge devices that need to perform inference close to where data is generated.

As models grow, specialized chips like TPUs and custom accelerators are replacing general‑purpose GPUs. Splunk notes that AI‑optimized hardware is reshaping data center infrastructure and driving energy use. Sustainability is becoming a critical concern: U.S. data centers could consume 8 % of the country’s power by 2030. Companies are investing in renewable energy and efficient cooling. Clarifai’s compute orchestration lets users choose environmentally friendly deployment options and scale only when necessary to conserve resources.

As AI adoption grows, a talent shortage in AI infrastructure and ethics is emerging. GMI Cloud predicts a “talent war” for experts who can manage distributed GPU environments and ensure ethical compliance. Women currently represent only 12 % of AI researchers, underscoring the need for greater diversity. Ethical challenges include bias, stolen data and deepfakes. Clarifai offers training resources and community forums to support aspiring engineers and emphasizes responsible AI practices.

Expert Insights: The pace of AI innovation means trends quickly become mainstream. Adopting SLMs, practicing machine unlearning and investing in MLOps will help organizations stay competitive. The AI Index report shows that inference costs have dropped 280× since November 2022, enabling widespread deployment of complex models. The Splunk report emphasizes agentic systems, multimodal AI and vertical models as key growth areas. Preparing for AI’s future requires continuous learning, ethical vigilance and the right platform support.

Quick Summary: How are ML concepts used in the real world? ML powers applications in computer vision, natural language processing, generative content creation and more. Clarifai provides tools for model deployment, scaling and monitoring across these domains.

In retail, vision models detect objects on shelves to optimize inventory; in manufacturing they identify defects; in healthcare they assist with diagnostics. These tasks rely on CNNs and object detection algorithms. Clarifai offers ready‑to‑use vision models that can be integrated via API or fine‑tuned with your data. Its compute orchestration ensures that inference requests are routed to the right hardware for fast, cost‑efficient processing. Deployments can be in shared SaaS, dedicated SaaS, self‑managed VPC or on‑premises environments.

NLP applications include sentiment analysis, chatbots, document summarization and information extraction. Classifiers trained using logistic regression, SVMs or neural networks categorize text; language models generate responses. Clarifai’s platform supports the deployment of LLMs and SLMs, enabling conversational interfaces and classification tasks. Its local runners allow organizations to keep sensitive text data on‑prem while still leveraging cloud‑based orchestration. Pre‑built models such as Named Entity Recognition can accelerate development.

Generative models are transforming creative industries—from generating marketing copy to creating art, music and video. For example, a GAN can produce realistic fashion photos for e‑commerce, while a diffusion model can render high‑quality concept art. Clarifai’s generative tools make it possible to experiment with these models and deploy them in production. Compute orchestration scales resources dynamically, ensuring that generative inference tasks run smoothly even when demand spikes.

Modern applications increasingly involve multimodal inputs. In autonomous vehicles, cameras, lidar and radar data must be fused. In smart homes, voice commands and sensor data trigger actions. Splunk’s report highlights the rise of multimodal AI, where models process images, audio and text simultaneously. Clarifai supports multimodal inference via its APIs and allows you to orchestrate flows where different models handle specific modalities and then combine their outputs.

Expert Insights: Deploying ML in the real world requires not only algorithms but also robust infrastructure. Clarifai’s compute orchestration abstracts away the complexity of scaling and managing compute. Local runners provide privacy‑preserving deployment on edge devices. Inference routing ensures requests hit the best available resources, balancing latency and cost. Whether you are building a vision system for manufacturing or a text classifier for legal documents, Clarifai’s platform reduces operational burden and accelerates time to market.

Quick Summary: Which algorithms should every practitioner know? This section covers the intuition, use cases and simple code examples for ten essential algorithms. For each algorithm, we explain when to use it and highlight its strengths and limitations.

Below is a table listing the algorithms and primary tasks they solve. Long explanations follow after the table.

| Algorithm | Primary Task | Example Use Case |
| --- | --- | --- |
| Linear Regression | Regression | Predict house prices from features like size and location |
| Logistic Regression | Classification | Predict whether an email is spam or not |
| Decision Trees | Classification/Regression | Classify loan applicants based on credit history |
| Random Forest | Ensemble learning | Predict customer churn using multiple decision trees |
| Gradient Boosting (e.g., XGBoost) | Ensemble learning | Forecast demand using an ensemble of weak learners |
| Support Vector Machines (SVM) | Classification/Regression | Identify handwritten digits |
| K‑Nearest Neighbors (KNN) | Classification/Regression | Recommender systems based on customer similarity |
| Naive Bayes | Probabilistic classification | Text sentiment analysis |
| K‑Means Clustering | Clustering | Group shoppers into segments based on behavior |
| Principal Component Analysis (PCA) | Dimensionality reduction | Compress genomic data for visualization |
| Neural Networks/Deep Learning | Universal approximation | Recognize faces or translate languages |

Linear regression assumes a linear relationship between input features and a continuous output. The model fits a line (or hyperplane) that minimizes the squared error between predicted and actual values. GeeksforGeeks describes linear regression as mapping data points to an optimized linear function, useful for predictions like exam scores from study hours. It is interpretable but struggles with non‑linear relationships. Here is a simple Scikit‑learn example:

```python
from sklearn.linear_model import LinearRegression
import numpy as np

# Data: hours studied vs exam score
X = np.array([[2], [4], [6], [8]])
y = np.array([65, 75, 78, 90])

model = LinearRegression().fit(X, y)
print("Predicted score after 5 hours:", model.predict([[5]])[0])
```
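To make the "minimizes the squared error" step concrete, here is a minimal sketch that solves the same toy problem with NumPy's least‑squares solver instead of scikit‑learn. The variable names (`hours`, `scores`, `predicted_5h`) are illustrative and not part of the original example.

```python
import numpy as np

# Same toy data as the example above: hours studied vs exam score
hours = np.array([2.0, 4.0, 6.0, 8.0])
scores = np.array([65.0, 75.0, 78.0, 90.0])

# Design matrix with a column of ones so the intercept is fitted too
A = np.column_stack([hours, np.ones_like(hours)])

# Least-squares solution of A @ [slope, intercept] ~= scores
(slope, intercept), *_ = np.linalg.lstsq(A, scores, rcond=None)

predicted_5h = slope * 5 + intercept
print(f"slope={slope:.2f}, intercept={intercept:.2f}, prediction at 5 h={predicted_5h:.2f}")
```

For this data the fitted line is roughly score = 3.9 × hours + 57.5, so five hours of study maps to about 77.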

Despite its name, logistic regression is a classification algorithm. It models the probability that an input belongs to a particular class using a sigmoid function. It is widely used for binary and multiclass classification. To interpret logistic regression, examine the weights: positive weights increase the log‑odds of belonging to the positive class. Multinomial logistic regression generalizes the technique to more than two classes. An example using Scikit‑learn:

```python
from sklearn.linear_model import LogisticRegression

# Four samples with two features each, and their binary labels
X = [[0.5, 1.5], [1.0, 1.5], [1.5, 1.0], [3.0, 2.5]]
y = [0, 0, 1, 1]

model = LogisticRegression().fit(X, y)
print("Probability of class 1:", model.predict_proba([[1.2, 1.4]])[0][1])
```
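The link between weights, log‑odds and the sigmoid can be checked by hand. The sketch below refits the same toy data and recomputes the class‑1 probability from `coef_` and `intercept_`; the helper `sigmoid` and the variable names are illustrative, and the comparison assumes scikit‑learn's default binary setup.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

X = np.array([[0.5, 1.5], [1.0, 1.5], [1.5, 1.0], [3.0, 2.5]])
y = np.array([0, 0, 1, 1])
model = LogisticRegression().fit(X, y)

def sigmoid(z):
    """Map a log-odds value to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

x_new = np.array([1.2, 1.4])

# Log-odds are a weighted sum of the features plus the intercept
log_odds = model.coef_[0] @ x_new + model.intercept_[0]
manual_prob = sigmoid(log_odds)

# Should agree with scikit-learn's own probability for class 1
sklearn_prob = model.predict_proba([x_new])[0][1]
print("manual:", manual_prob, "scikit-learn:", sklearn_prob)
```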

Decision trees partition the feature space into regions based on a series of questions. At each node, the algorithm chooses a feature and a threshold to split the data to maximize information gain (for classification) or minimize variance (for regression). Scikit‑learn notes that decision trees are non‑parametric supervised methods that learn simple decision rules from data. They are intuitive and interpretable but prone to overfitting. To construct a tree in Scikit‑learn:

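```python
from sklearn.tree import DecisionTreeClassifier

# Four samples with two features each, and their binary labels
X = [[2, 3], [3, 4], [6, 5], [7, 8]]
y = [0, 0, 1, 1]

# Limit the depth to keep the tree small and less prone to overfitting
tree = DecisionTreeClassifier(max_depth=2).fit(X, y)
print("Prediction:", tree.predict([[4, 4]]))
```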
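As a rough illustration of how a split is scored, the sketch below computes the information gain of two candidate thresholds on the first feature of the same toy data. The helpers `entropy` and `information_gain` are written for this example and are not scikit‑learn functions; scikit‑learn's default criterion is Gini impurity rather than entropy.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (in bits) of a 1-D array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def information_gain(feature, labels, threshold):
    """Entropy reduction obtained by splitting on feature <= threshold."""
    left = labels[feature <= threshold]
    right = labels[feature > threshold]
    if len(left) == 0 or len(right) == 0:
        return 0.0
    weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(labels)
    return entropy(labels) - weighted

X = np.array([[2, 3], [3, 4], [6, 5], [7, 8]])
y = np.array([0, 0, 1, 1])

gain_good = information_gain(X[:, 0], y, threshold=4.5)  # separates the classes cleanly
gain_poor = information_gain(X[:, 0], y, threshold=2.5)  # leaves a mixed right branch
print(f"gain at 4.5: {gain_good:.3f}, gain at 2.5: {gain_poor:.3f}")
```

A tree builder would evaluate many such thresholds across all features and keep the one with the highest gain.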

A random forest builds an ensemble of decision trees, each trained on a random subset of data and features. Predictions are aggregated via majority vote (classification) or averaging (regression). This randomness reduces overfitting and improves generalization. GeeksforGeeks explains that random forests handle missing values, show feature importance and perform well with complex data. A simple implementation:

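```python
from sklearn.ensemble import RandomForestClassifier

# Same toy data as the decision tree example
X = [[2, 3], [3, 4], [6, 5], [7, 8]]
y = [0, 0, 1, 1]

rf = RandomForestClassifier(n_estimators=100, max_depth=3, random_state=42)
rf.fit(X, y)
print("Random forest prediction:", rf.predict([[4, 4]]))
```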
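To see the ensemble at work, the following sketch trains a forest on synthetic data and inspects the individual trees and the feature importances. The dataset comes from `make_classification` with assumed settings (two informative features followed by three noise features), so the numbers are illustrative only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data: with shuffle=False the 2 informative features come first,
# followed by 3 pure-noise features
X_demo, y_demo = make_classification(
    n_samples=300, n_features=5, n_informative=2, n_redundant=0,
    n_repeated=0, shuffle=False, random_state=0,
)
forest = RandomForestClassifier(n_estimators=50, max_depth=3, random_state=0)
forest.fit(X_demo, y_demo)

# Ask every tree for its prediction on the first sample
tree_votes = np.array([t.predict(X_demo[:1])[0] for t in forest.estimators_])
print("Share of trees voting class 1:", tree_votes.mean())
print("Forest prediction:", forest.predict(X_demo[:1])[0])

# Importances sum to 1; informative features should receive most of the weight
importances = forest.feature_importances_
print("Feature importances:", importances.round(3))
```

Note that scikit‑learn averages the trees' class probabilities rather than counting hard votes, which usually gives the same answer as a majority vote.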

Boosting techniques train models sequentially, where each new model corrects the errors of the combined ensemble. Gradient boosting minimizes an arbitrary differentiable loss function by gradient descent in function space: each new tree is fit to the negative gradient of the loss with respect to the current predictions (for squared error, simply the residuals). XGBoost is a popular implementation that adds regularization and tree‑pruning techniques. Boosting models often outperform random forests but may require more tuning and can overfit if the learning rate is too high or too many trees are added.

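```python
from sklearn.ensemble import GradientBoostingClassifier

# Same toy data as the decision tree example
X = [[2, 3], [3, 4], [6, 5], [7, 8]]
y = [0, 0, 1, 1]

gb = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, max_depth=3)
gb.fit(X, y)
print("Gradient boosting prediction:", gb.predict([[4, 4]]))
```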
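The sequential error‑correcting idea can be sketched by hand for squared‑error regression, where each new tree is simply fit to the current residuals. This is a simplified illustration on synthetic data, not how `GradientBoostingClassifier` or XGBoost are implemented internally; the data and variable names are assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D regression problem: a noisy sine wave
rng = np.random.default_rng(0)
X_demo = np.linspace(0, 6, 80).reshape(-1, 1)
y_demo = np.sin(X_demo).ravel() + rng.normal(scale=0.1, size=80)

learning_rate = 0.1
prediction = np.full_like(y_demo, y_demo.mean())  # stage 0: a constant model
initial_mse = np.mean((y_demo - prediction) ** 2)

for _ in range(100):
    # For squared error, the negative gradient is just the residual
    residuals = y_demo - prediction
    stump = DecisionTreeRegressor(max_depth=1).fit(X_demo, residuals)
    # Take a small step in the direction suggested by the new weak learner
    prediction += learning_rate * stump.predict(X_demo)

final_mse = np.mean((y_demo - prediction) ** 2)
print(f"Training MSE before boosting: {initial_mse:.4f}, after: {final_mse:.4f}")
```

A smaller `learning_rate` needs more trees but usually generalizes better, which is the tuning trade‑off mentioned above.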

SVMs find the hyperplane that separates classes with the largest possible margin in feature space. They work well in high‑dimensional spaces and can use different kernel functions (linear, polynomial, radial basis) to model non‑linear boundaries. With a soft margin (controlled by the C parameter) they tolerate some mislabeled or outlying points, and they remain effective even when the number of features exceeds the number of samples. They are commonly used in text classification, image recognition and bioinformatics.

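```python
from sklearn.svm import SVC

# Same toy data as the decision tree example
X = [[2, 3], [3, 4], [6, 5], [7, 8]]
y = [0, 0, 1, 1]

svm = SVC(kernel='rbf', C=1.0, gamma='scale').fit(X, y)
print("SVM prediction:", svm.predict([[4, 4]]))
```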
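The effect of the kernel choice is easiest to see on data that no straight line can separate. The sketch below compares a linear and an RBF kernel on scikit‑learn's synthetic concentric‑circles dataset; the dataset parameters are arbitrary choices for illustration, and accuracy is measured on the training data only.

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Two concentric rings: not linearly separable in the original 2-D space
X_demo, y_demo = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)

linear_acc = SVC(kernel="linear", C=1.0).fit(X_demo, y_demo).score(X_demo, y_demo)
rbf_acc = SVC(kernel="rbf", C=1.0, gamma="scale").fit(X_demo, y_demo).score(X_demo, y_demo)

print(f"linear kernel accuracy: {linear_acc:.2f}")
print(f"RBF kernel accuracy:    {rbf_acc:.2f}")
```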

KNN is a lazy learner: it stores the training data and delays computation until prediction time. To classify a new sample, KNN finds the k closest neighbors (using a distance metric like Euclidean distance) and assigns the majority class. The algorithm is simple and non‑parametric but can be slow for large datasets. It also works for regression by averaging neighbors’ values. Example:

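```python
from sklearn.neighbors import KNeighborsClassifier

# Same toy data as the decision tree example
X = [[2, 3], [3, 4], [6, 5], [7, 8]]
y = [0, 0, 1, 1]

knn = KNeighborsClassifier(n_neighbors=3).fit(X, y)
print("KNN prediction:", knn.predict([[4, 4]]))
```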
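Because KNN has no training step, the whole algorithm fits in a few lines. The following from‑scratch sketch uses Euclidean distance and a majority vote; `knn_predict` is a hypothetical helper written for this example, not a scikit‑learn function.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x_new, k=3):
    """Classify x_new by majority vote among its k nearest training points."""
    distances = np.linalg.norm(X_train - x_new, axis=1)  # Euclidean distance to each point
    nearest = np.argsort(distances)[:k]                  # indices of the k closest points
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]

X_train = np.array([[2, 3], [3, 4], [6, 5], [7, 8]])
y_train = np.array([0, 0, 1, 1])

pred = knn_predict(X_train, y_train, np.array([4, 4]), k=3)
print("From-scratch KNN prediction:", pred)
```

Every prediction scans the full training set, which is why KNN slows down on large datasets unless an index structure such as a KD‑tree is used.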

Naive Bayes applies Bayes’ theorem assuming feature independence. It calculates the probability of each class given the input and chooses the class with the highest posterior probability. Despite the naive assumption, it performs surprisingly well on text data, even though word occurrences are rarely truly independent. Variants include Gaussian (continuous data), Multinomial (discrete counts) and Bernoulli (binary features). Example:

```python
from sklearn.naive_bayes import MultinomialNB

X = [[3, 1, 0], [2, 0, 1], [0, 2, 3], [0, 3, 4]]  # counts of words
labels = [0, 0, 1, 1]

nb = MultinomialNB().fit(X, labels)
print("Naive Bayes prediction:", nb.predict([[1, 1, 2]]))
```
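The posterior that Naive Bayes maximizes can be reproduced by hand. This sketch recomputes it for the same word counts using Laplace smoothing with `alpha = 1.0` (the `MultinomialNB` default) and compares the result with scikit‑learn; the variable names are illustrative.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

X_counts = np.array([[3, 1, 0], [2, 0, 1], [0, 2, 3], [0, 3, 4]])
labels = np.array([0, 0, 1, 1])
x_new = np.array([1, 1, 2])
alpha = 1.0  # Laplace smoothing

log_scores = []
for c in np.unique(labels):
    rows = X_counts[labels == c]
    log_prior = np.log(len(rows) / len(X_counts))          # log P(class)
    word_totals = rows.sum(axis=0) + alpha                 # smoothed word counts
    log_likelihood = np.log(word_totals / word_totals.sum())  # log P(word | class)
    # Independence assumption: per-word log-probabilities simply add up
    log_scores.append(log_prior + x_new @ log_likelihood)

log_scores = np.array(log_scores)
posterior = np.exp(log_scores - log_scores.max())
posterior /= posterior.sum()                                # normalize to probabilities

sk_posterior = MultinomialNB(alpha=alpha).fit(X_counts, labels).predict_proba([x_new])[0]
print("manual posterior:      ", posterior.round(4))
print("scikit-learn posterior:", sk_posterior.round(4))
```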

K‑means is an unsupervised algorithm that partitions data into k clusters. It iteratively assigns points to the nearest centroid and updates centroids to the mean of assigned points. K‑means is scalable and simple but assumes spherical clusters and can converge to local minima. Choosing the right number of clusters is often done via the elbow method or silhouette score.

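```python
from sklearn.cluster import KMeans

# Two-feature points, so the two-feature query below has a matching shape
X = [[2, 3], [3, 4], [6, 5], [7, 8]]

kmeans = KMeans(n_clusters=2, n_init=10, random_state=42).fit(X)
print("Cluster assignment for [4, 4]:", kmeans.predict([[4, 4]])[0])
```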
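The assign‑then‑update loop described above can be written directly in NumPy. The sketch below is a bare‑bones version of that loop on two synthetic, well‑separated blobs; the function `kmeans`, the random initialization and the data are assumptions for illustration, and production code would normally use scikit‑learn's `KMeans` with k‑means++ initialization.

```python
import numpy as np

def kmeans(points, k, n_iters=20, seed=0):
    """Plain k-means loop: returns (centroids, assignments)."""
    rng = np.random.default_rng(seed)
    # Initialize centroids at k distinct randomly chosen data points
    centroids = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(n_iters):
        # Assignment step: each point goes to its nearest centroid
        distances = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        assignments = distances.argmin(axis=1)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = np.array([
            points[assignments == j].mean(axis=0) if np.any(assignments == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # converged
        centroids = new_centroids
    return centroids, assignments

# Two synthetic blobs centred near (0, 0) and (10, 10)
rng = np.random.default_rng(1)
blob_a = rng.normal(loc=0.0, scale=0.5, size=(30, 2))
blob_b = rng.normal(loc=10.0, scale=0.5, size=(30, 2))
points = np.vstack([blob_a, blob_b])

centroids, assignments = kmeans(points, k=2)
print("Centroids:\n", centroids.round(2))
```

On less cleanly separated data the result depends on the initial centroids, which is why libraries rerun the algorithm from several starting points.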

PCA reduces the dimensionality of data by projecting it onto a set of orthogonal components that capture the maximum variance. It helps visualize high‑dimensional data and speeds up training of other models. PCA is commonly used in image compression, genomics and exploratory data analysis.

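```python
from sklearn.decomposition import PCA

# Two-feature points, so the two-feature query below has a matching shape
X = [[2, 3], [3, 4], [6, 5], [7, 8]]

pca = PCA(n_components=2).fit(X)
print("Transformed point:", pca.transform([[4, 4]]))
```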
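To show where the components come from, this sketch computes PCA through a singular value decomposition of the centered data and checks the explained variance against scikit‑learn. The three‑dimensional synthetic data, which varies mostly along one direction, is an assumption made for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

# 100 samples in 3-D that vary mostly along a single direction, plus small noise
rng = np.random.default_rng(0)
latent = rng.normal(size=(100, 1))
data = latent @ np.array([[2.0, 1.0, 0.5]]) + rng.normal(scale=0.1, size=(100, 3))

# PCA = SVD of the mean-centered data; rows of `components` are the principal axes
centered = data - data.mean(axis=0)
_, singular_values, components = np.linalg.svd(centered, full_matrices=False)

# Share of total variance captured by each component
explained_variance_ratio = singular_values**2 / np.sum(singular_values**2)

# Project onto the first two components to reduce 3-D to 2-D
projected = centered @ components[:2].T

sk = PCA(n_components=2).fit(data)
print("manual ratio:      ", explained_variance_ratio[:2].round(4))
print("scikit-learn ratio:", sk.explained_variance_ratio_.round(4))
```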

Neural networks can be considered a general algorithm family rather than a single algorithm. Feed‑forward networks can approximate any continuous function on a bounded domain to arbitrary accuracy given enough hidden units (the universal approximation theorem), although finding good weights still depends on data and training; convolutional networks handle images; recurrent and transformer models handle sequences. Deep learning models require careful tuning and significant compute, but they deliver state‑of‑the‑art performance across many domains. Clarifai makes it easy to deploy and scale neural networks without managing infrastructure.
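As a small illustration of why hidden layers matter, the sketch below runs a forward pass through a two‑unit ReLU network that computes XOR, a function no purely linear model can represent. The weights are hand‑picked for this example rather than learned, and the names `W1`, `b1`, `W2` and `forward` are illustrative.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: max(z, 0) applied element-wise."""
    return np.maximum(z, 0.0)

# Hand-picked weights (no training involved)
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])      # shape (inputs, hidden units)
b1 = np.array([0.0, -1.0])       # second hidden unit only fires when both inputs are 1
W2 = np.array([1.0, -2.0])       # output = h1 - 2 * h2

def forward(x):
    """One hidden ReLU layer followed by a linear output."""
    hidden = relu(x @ W1 + b1)
    return hidden @ W2

inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
outputs = forward(inputs)
print("XOR outputs:", outputs)
```

In practice the weights are found by gradient‑based training in a framework such as PyTorch or TensorFlow rather than set by hand.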

Expert Insights: Choosing the right algorithm depends on your problem. Linear and logistic regression offer interpretability and speed; decision trees and random forests capture non‑linear relationships; gradient boosting delivers accuracy at the cost of complexity; SVMs shine in high‑dimensional spaces; KNN is simple but scales poorly; Naive Bayes is strong for text classification; K‑means and PCA are foundational tools for unsupervised learning; neural networks provide unmatched flexibility but require more resources. Clarifai supports all these algorithms either through built‑in models or by allowing you to upload custom ones.

Machine learning is a broad field encompassing diverse paradigms, architectures and algorithms. To master ML in 2025 and beyond, practitioners should: (1) understand core learning paradigms and when to apply them; (2) grasp neural network architectures and their strengths; (3) leverage representation learning and transfer learning to make the most of limited data; (4) explore federated, distributed and multi‑agent systems to tackle privacy and scalability; (5) incorporate probabilistic thinking to measure uncertainty; (6) experiment with generative models responsibly; (7) automate and optimize workflows through AutoML and modern optimization techniques; (8) prioritize explainability, fairness and ethics; (9) stay abreast of emerging trends such as SLMs, agentic AI, machine unlearning, IoT convergence and AI‑optimized hardware; and (10) build proficiency with foundational algorithms.

Clarifai’s platform offers a unified solution that spans these areas: compute orchestration to deploy any model on any infrastructure; local runners for secure on‑prem inference; inference API with autoscaling; and AutoML capabilities that democratize model building. By leveraging Clarifai’s tools, organizations can accelerate ML development, deploy models across cloud and edge environments and ensure responsible AI practices. As AI adoption continues to grow and trends like agentic AI and machine unlearning take hold, having a robust platform and a solid understanding of ML fundamentals will be the difference between leading the pack and being left behind.

AI is the broader field focused on creating machines that can perform tasks requiring intelligence. ML is a subset of AI where algorithms learn patterns from data rather than being explicitly programmed. Deep learning is a subfield of ML, and large language models are deep learning models trained on large text datasets.

Training time depends on the algorithm, dataset size and hardware. Simple models like linear regression train in seconds on a laptop; deep neural networks can take hours or days on GPUs. Clarifai’s compute orchestration lets you scale training across clusters to reduce time.

Python dominates due to its libraries (Scikit‑learn, TensorFlow, PyTorch). R is popular for statistical modelling, and Julia offers high performance. Clarifai’s APIs are accessible via Python, JavaScript and REST.

Upload your model to the Clarifai platform, create a deployment and configure compute resources. You can choose shared SaaS for convenience, dedicated SaaS for isolation, self‑managed VPC for control or on‑premises for data sovereignty. Clarifai’s documentation walks you through the steps.

SLMs are smaller, cheaper to run and easier to customize than large language models. They can also reduce hallucinations and privacy risks. They are ideal for enterprise applications where domain specificity and cost efficiency matter.

In federated learning, data never leaves the device; only model updates are shared, protecting user privacy. It is especially useful for sensitive data such as medical records or personal text messages.

XAI builds trust by allowing users and regulators to understand model decisions. It helps detect biases, debug models and comply with regulations. Tools like SHAP and LIME provide local and global explanations.

Agentic AI refers to AI systems that can plan and execute tasks autonomously, often interacting with tools and APIs. The market for agentic AI could reach $62 billion by 2026. Clarifai’s reasoning engine can be part of such agentic pipelines.

Yes. Transfer learning allows you to adapt a pretrained model to a new task with relatively little data. Clarifai’s platform supports uploading models, fine‑tuning and deploying them via its AutoML and training pipelines.

Challenges include data quality, scalability, privacy, interpretability and reliability. Without MLOps, 80 % of models fail to reach production. Platforms like Clarifai help by offering end‑to‑end tools for training, deployment, monitoring and governance.

Developer advocate specializing in machine learning. Summanth works at Clarifai, where he helps developers get the most out of their ML efforts. He usually writes about compute orchestration, computer vision and new trends in AI and technology.


© 2026 Clarifai, Inc.