MCP (Model Context Protocol) vs A2A (Agent-to-Agent) Clearly Explained

AI is getting incredibly smart. We're moving past single, giant AI models towards teams of specialized AI agents working together. Think of them like expert helpers, each tackling a specific task – from automating business processes to being your personal assistant. These agent teams are popping up everywhere.

But there's a catch. Right now, getting these different agents to actually talk to each other smoothly is a big challenge. Imagine trying to run a global company where every department speaks a different language and uses incompatible tools. That's kind of where we are with AI agents. They're often built differently, by different companies, and live on different platforms. Without standard ways to communicate, teamwork gets messy and inefficient.

This feels a lot like the early days of the internet. Before universal rules like HTTP came along, connecting different computer networks was a nightmare. We face a similar problem now with AI. As more agent systems appear, we desperately need a universal communication layer. Otherwise, we'll end up tangled in a web of custom integrations, which just isn't sustainable.

Two protocols are starting to address this: Google’s Agent-to-Agent (A2A) protocol and Anthropic’s Model Context Protocol (MCP).

Google's A2A is an open effort (backed by over 50 companies!) focused on letting different AI agents talk directly to each other. The goal is a universal language so agents can find each other, share info securely, and coordinate tasks, no matter who built them or where they run.

Anthropic's MCP, on the other hand, tackles a different piece of the puzzle. It helps individual language model agents (like chatbots) access real-time information, use external tools, and follow specific instructions while they're working. Think of it as giving an agent superpowers by connecting it to outside resources.

These two protocols solve different parts of the communication problem: A2A focuses on how agents communicate with each other (horizontally), while MCP focuses on how a single agent connects to tools or memory (vertically).

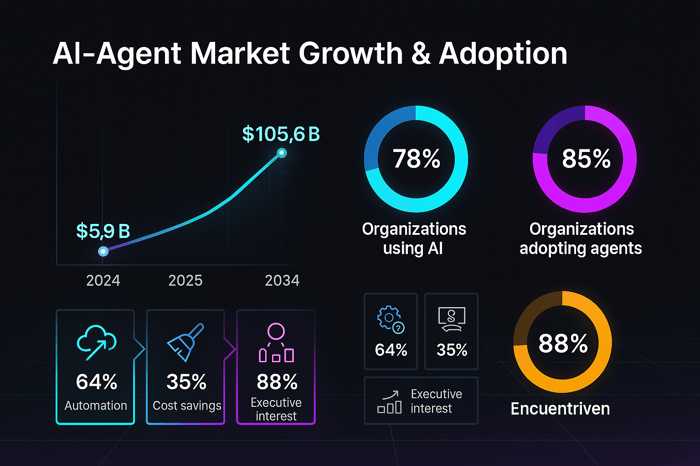

According to a 2025 index of AI agent usage, 78 % of global organizations already use AI tools in their daily operations and 85 % have integrated agents into at least one workflow. The market is expanding rapidly: research from Global Market Insights valued the global AI‑agents market at USD 5.9 billion in 2024 and expects it to grow to USD 7.7 billion in 2025 and USD 105.6 billion by 2034, a 38.5 % compound annual growth rate. As AI agents become ubiquitous in customer service, supply chains and knowledge work, the cost of integrating them through point‑to‑point APIs balloons.
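A quick count shows why point‑to‑point integration cost grows so fast. The Python sketch below rests on a simplifying assumption of ours, not on the research cited above: every pair of agents that must work together needs one bespoke adapter. Under that assumption, n agents need n(n-1)/2 adapters, while with a shared protocol each agent implements the protocol once.

```python
# Back-of-the-envelope integration count for n agents.
# Assumption (a simplification, not a figure from the research cited above):
# without a shared protocol, every pair of agents that must interoperate
# needs one bespoke adapter; with a shared protocol, each agent implements
# the protocol once.


def point_to_point_adapters(n: int) -> int:
    """Adapters needed when every pair of n agents is wired together directly."""
    return n * (n - 1) // 2


def shared_protocol_adapters(n: int) -> int:
    """Protocol implementations needed when all n agents speak one protocol."""
    return n


if __name__ == "__main__":
    for n in (3, 10, 50):
        print(f"{n} agents: {point_to_point_adapters(n)} adapters vs "
              f"{shared_protocol_adapters(n)} protocol implementations")
```

With three agents both approaches need three pieces of integration work, but at 10 agents the count is 45 vs 10 and at 50 agents it is 1,225 vs 50. Real costs also depend on how often each interface changes, which this count ignores.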

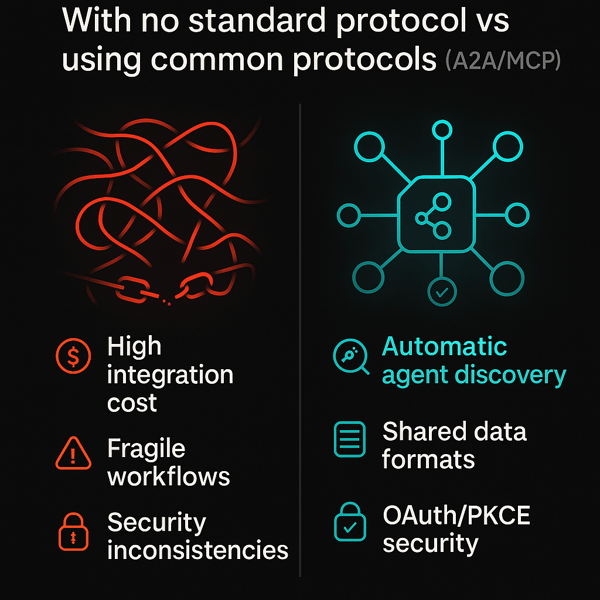

| Scenario | Pros | Cons |
|---|---|---|
| No common protocol | • Flexibility to build custom integrations. • Ability to optimize for specific environments. | • High integration cost: every new agent requires bespoke adapters. • Fragile workflows when one vendor changes its API. • Security and compliance inconsistencies across agents. |
| Common protocol (e.g., A2A, MCP) | • Agents can discover each other automatically through standardized metadata. • Shared formats reduce integration time and testing overhead. • Security schemes like OAuth 2.0 and PKCE offer consistent authentication. • Easier to build multi‑agent workflows across vendors. | • Requires alignment on standards and versions. • May restrict access to vendor‑specific features. • Early specifications may evolve rapidly, requiring updates. |

Imagine a travel company uses three independent agents: one to search flights, another to book hotels, and a third to send confirmation emails. Without a common protocol, engineers would have to write custom wrappers so that the hotel‑booking agent understands the flight‑search agent’s JSON response. With a shared protocol, each agent exposes a well‑known agent card describing its capabilities and accepted data formats. The orchestration logic can automatically route tasks between agents without bespoke glue code.

Valuable Stats & Data: One industry survey reports that 64 % of current AI‑agent use cases involve business‑process automation, and 35 % of organizations using agents have already reported cost savings. At the same time, 88 % of executives say they are either piloting or scaling autonomous agents. These figures underline that the agent paradigm is not a futuristic concept but a mainstream, enterprise‑scale trend.

Analyst Commentary: Google’s agent‑to‑agent team notes that “universal interoperability is essential for fully realizing the potential of collaborative AI agents”. This echoes industry analysts who argue that multi‑agent systems could become the next layer of IT infrastructure. According to the same survey, 51 % of companies already use multiple governance methods (human approval, access controls, monitoring) to manage agents, a sign that ad‑hoc approaches are straining governance models. Therefore, standards like A2A and MCP are emerging as the “HTTP of agents,” providing common ground for discovery, messaging and security.

A common language for AI agents refers to a set of open protocols and data formats that allow independent agents to discover each other, exchange information, and collaborate securely. As multi‑agent adoption accelerates—78% of organizations already use AI tools, and 85% have integrated agents into workflows—such standards reduce integration cost, ensure consistent security, and simplify governance.

Google's Agent-to-Agent (A2A) protocol is a big step towards making AI agents communicate and coordinate more effectively. The main idea is simple: create a standard way for independent AI agents to interact, no matter who built them, where they live online, or what software framework they use.

A2A aims to do three key things:

Create a universal language all agents understand.

Ensure information is exchanged securely and efficiently.

Make it easy to build complex workflows where different agents team up to reach a common goal.

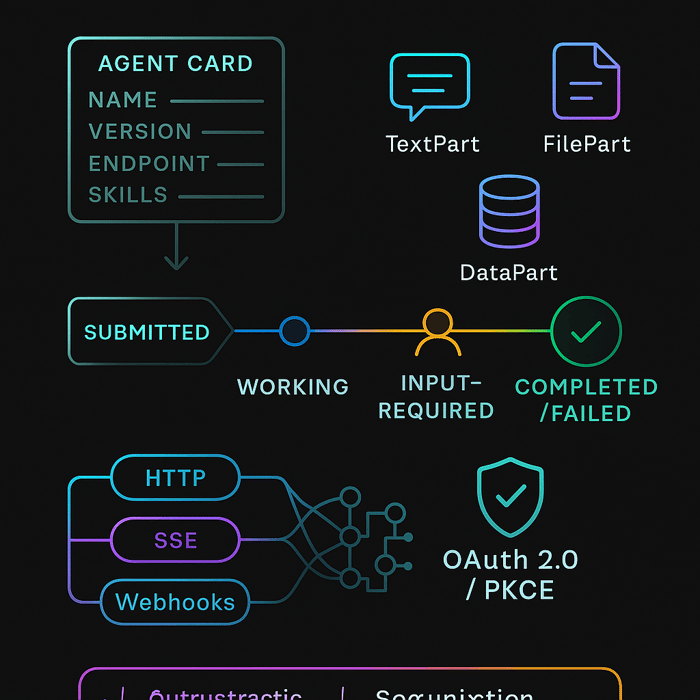

Let's peek at the main components that make A2A work:

How does one AI agent learn what another can do? Through an Agent Card. Think of it like a digital business card. It's a public file (usually found at a standard web address like /.well-known/agent.json) written in JSON format.

This card tells other agents crucial details:

Where the agent lives online (its address).

Its version (to make sure they're compatible).

A list of its skills and what it can do.

What security methods it requires to talk.

The data formats it understands (input and output).

Agent Cards enable capability discovery: agents advertise what they can do in a standardized way, so a client agent can identify the most suitable agent for a given task and initiate A2A communication automatically, without any prior manual setup. The idea is similar to how web crawlers check a site's robots.txt file to learn its crawling rules: a well-known location tells visitors what they need to know before they interact.
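To make the card concrete, here is a hypothetical Agent Card for the flight-search agent from the travel example, plus a helper that picks an agent by skill. Field names are modeled loosely on the A2A specification, but they (especially the security section) have changed between spec versions, so treat them as assumptions; the URLs, skill IDs and helper functions are made up for illustration. A real client would fetch the card over HTTPS from the well-known path rather than hold it in memory.

```python
import json
from typing import List, Optional

# Hypothetical Agent Cards for two agents from the travel example.
# Field names loosely follow the A2A specification, but exact names (notably
# the security section) differ between spec versions; treat them as
# illustrative assumptions. All URLs are placeholders.
flight_card = {
    "name": "Flight Search Agent",
    "description": "Finds flights between two airports on a given date.",
    "url": "https://flights.example.com/a2a",
    "version": "1.0.0",
    "capabilities": {"streaming": True, "pushNotifications": False},
    "authentication": {"schemes": ["bearer"]},  # assumed shape
    "defaultInputModes": ["text", "data"],
    "defaultOutputModes": ["data"],
    "skills": [
        {"id": "search_flights", "name": "Search flights",
         "description": "Search one-way or return flights."},
    ],
}

hotel_card = {
    "name": "Hotel Booking Agent",
    "url": "https://hotels.example.com/a2a",
    "version": "1.0.0",
    "skills": [{"id": "book_hotel", "name": "Book a hotel room"}],
}


def well_known_card_url(base_url: str) -> str:
    """Standard location of an agent's card, using the path given in the text."""
    return base_url.rstrip("/") + "/.well-known/agent.json"


def find_agent_for_skill(cards: List[dict], skill_id: str) -> Optional[dict]:
    """Return the first card that advertises the requested skill, or None."""
    for card in cards:
        if any(skill.get("id") == skill_id for skill in card.get("skills", [])):
            return card
    return None


if __name__ == "__main__":
    print(well_known_card_url("https://hotels.example.com"))
    match = find_agent_for_skill([flight_card, hotel_card], "book_hotel")
    print(match["name"] if match else "no agent found")
    print(json.dumps(flight_card, indent=2))
```

The matching rule here (first card with an exact skill ID) is deliberately naive; a real orchestrator might also check versions, input/output modes and supported security schemes before choosing an agent.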

A2A organizes interactions around Tasks. A Task is simply a specific piece of work that needs doing, and it gets a unique ID so everyone can track it.

Each Task goes through a clear lifecycle:

Submitted: The request is sent.

Working: The agent is actively processing the task.

Input-Required: The agent needs more information to continue, typically prompting a notification for the user to intervene and provide the necessary details.

Completed / Failed / Canceled: The final outcome.

This structured process brings order to complex jobs spread across multiple agents. A "client" agent kicks off a task by sending a Task description to a "remote" agent capable of handling it. This clear lifecycle ensures everyone knows the status of the work and holds agents accountable, making complex collaborations manageable and predictable.
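The lifecycle can be modeled as a small state machine. The sketch below uses the states listed above; the `Task` class and, in particular, the table of allowed transitions are our own assumptions for illustration, not rules copied from the A2A specification.

```python
from enum import Enum


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Once a task reaches one of these states it cannot change again.
TERMINAL = {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED}

# Assumed transition rules for illustration only; the states come from the
# text, but real implementations may permit other moves.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, *TERMINAL},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
}


class Task:
    """Tracks one unit of work and the states it has passed through."""

    def __init__(self, task_id: str) -> None:
        self.id = task_id
        self.state = TaskState.SUBMITTED
        self.history = [self.state]

    def transition(self, new_state: TaskState) -> None:
        if self.state in TERMINAL:
            raise ValueError(f"task {self.id} is already {self.state.value}")
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"illegal move: {self.state.value} -> {new_state.value}")
        self.state = new_state
        self.history.append(new_state)


if __name__ == "__main__":
    task = Task("task-123")
    task.transition(TaskState.WORKING)
    task.transition(TaskState.INPUT_REQUIRED)  # e.g. the agent needs a travel date
    task.transition(TaskState.WORKING)         # the user supplied it; work resumes
    task.transition(TaskState.COMPLETED)
    print([state.value for state in task.history])
```

Rejecting moves out of a terminal state is what lets every participant trust that a "completed" or "failed" status is final.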

How do agents actually exchange information? Conceptually, they communicate through messages, which are carried under the hood by standard mechanisms such as JSON-RPC, webhooks, or server-sent events (SSE), depending on the context. A2A messages are flexible and can contain multiple parts with different types of content:

TextPart: Plain old text.

FilePart: Binary data like images or documents (sent directly or linked via a web address).

DataPart: Structured information (using JSON).

This allows agents to communicate in rich ways, going beyond just text to share files, data, and more.

When a task is finished, the result is packaged as an Artifact. Like messages, Artifacts can also contain multiple parts, letting the remote agent send back complex results with various data types. This flexibility in sharing information is vital for sophisticated teamwork.
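The part types and artifacts described above can be sketched as plain JSON-style dictionaries. The builders below are hypothetical helpers; key names such as `type`, `mimeType` and `bytes` loosely follow early A2A drafts and may differ in the version you target, and the flight details are invented sample data.

```python
import base64
import json
from typing import Optional

# Builders for the three part types described above. Key names loosely follow
# early A2A drafts ("type": "text" | "file" | "data"); later revisions may
# rename them, so treat the exact shapes as assumptions.


def text_part(text: str) -> dict:
    return {"type": "text", "text": text}


def file_part(name: str, mime_type: str, *, data: Optional[bytes] = None,
              uri: Optional[str] = None) -> dict:
    """A file sent inline (base64-encoded bytes) or linked by URI, not both."""
    if (data is None) == (uri is None):
        raise ValueError("provide exactly one of data or uri")
    file_info = {"name": name, "mimeType": mime_type}
    if data is not None:
        file_info["bytes"] = base64.b64encode(data).decode("ascii")
    else:
        file_info["uri"] = uri
    return {"type": "file", "file": file_info}


def data_part(payload: dict) -> dict:
    return {"type": "data", "data": payload}


# A client message that mixes free text with structured JSON.
message = {
    "role": "user",
    "parts": [
        text_part("Find a flight and attach the itinerary."),
        data_part({"from": "SFO", "to": "JFK", "date": "2025-06-01"}),
    ],
}

# The remote agent's result, returned as a multi-part artifact.
artifact = {
    "name": "itinerary",
    "parts": [
        text_part("Found one matching flight."),
        data_part({"flight": "XY123", "price_usd": 249}),
        file_part("itinerary.pdf", "application/pdf",
                  uri="https://files.example.com/itinerary.pdf"),
    ],
}

if __name__ == "__main__":
    print(json.dumps(message, indent=2))
    print([part["type"] for part in artifact["parts"]])
```

Because every part declares its own type, a receiving agent can process the structured `data` part directly instead of parsing it out of free text.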

A2A uses common web technologies to make connections easy:

Standard Requests (JSON-RPC over HTTP/S): For typical, quick request-and-response interactions, A2A sends JSON-RPC 2.0 messages over standard web connections (HTTP or secure HTTPS).

Streaming Updates (Server-Sent Events - SSE): For tasks that take longer, A2A can use SSE. This lets the remote agent "stream" updates back to the client over a persistent connection, useful for progress reports or partial results.

Push Notifications (Webhooks): If the remote agent needs to send an update later (asynchronously), it can use webhooks. This means it sends a notification to a specific web address provided by the client agent.

Developers can choose the best communication method for each task. For quick, one-time requests, tasks/send can be used, while for long-running tasks that require real-time updates, tasks/sendSubscribe is ideal. By leveraging familiar web technologies, A2A makes it easier for developers to integrate and ensures better compatibility with existing systems.
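Here is a sketch of what a client might put on the wire for the two methods named above, plus a minimal reader for an SSE stream of status updates. The method names come from the text; the `params` layout and the event fields are assumptions modeled on early A2A drafts (newer revisions of the spec may use different method names and shapes), and the helper functions are hypothetical. Sending the request with an HTTP client is left out so the sketch runs offline.

```python
import json
import uuid
from typing import Iterator, Optional

SUPPORTED_METHODS = ("tasks/send", "tasks/sendSubscribe")


def build_task_request(method: str, task_id: str, text: str,
                       request_id: Optional[str] = None) -> dict:
    """Build a JSON-RPC 2.0 request body that starts (or continues) a task."""
    if method not in SUPPORTED_METHODS:
        raise ValueError(f"unsupported method: {method}")
    return {
        "jsonrpc": "2.0",
        "id": request_id or str(uuid.uuid4()),
        "method": method,
        # Assumed params shape: task id plus a single text message.
        "params": {
            "id": task_id,
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


def parse_sse(body: str) -> Iterator[dict]:
    """Yield the JSON payload of each event in a Server-Sent Events body.

    Events are separated by a blank line; the payload is carried on `data:`
    lines. Other SSE fields (event:, id:, retry:) are ignored in this sketch.
    """
    for block in body.replace("\r\n", "\n").split("\n\n"):
        data_lines = [line[len("data:"):].strip()
                      for line in block.split("\n") if line.startswith("data:")]
        if data_lines:
            yield json.loads("\n".join(data_lines))


if __name__ == "__main__":
    request = build_task_request("tasks/sendSubscribe", "task-42",
                                 "Summarize the attached report", request_id="1")
    print(json.dumps(request))
    # Example stream body; the event fields are assumptions for illustration.
    stream = (
        'data: {"id": "task-42", "status": {"state": "working"}}\n\n'
        'data: {"id": "task-42", "status": {"state": "completed"}, "final": true}\n\n'
    )
    for event in parse_sse(stream):
        print(event["status"]["state"])
```

With `tasks/send` the client would read a single JSON-RPC response; with `tasks/sendSubscribe` it would keep the connection open and process events until one marks the task as final.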

| Aspect | Strengths | Considerations |
|---|---|---|
| Interoperability | Standardizes how agents publish capabilities and accept tasks, making cross‑vendor collaboration possible. | Adoption depends on a critical mass of vendors implementing the spec. |
| Task lifecycle | Provides transparency and accountability in multi‑step workflows; tasks are trackable and resumable. | Adds overhead compared with simple API calls; may be overkill for lightweight interactions. |
| Security | Supports proven authentication methods (OAuth 2.0, PKCE) and mandates explicit security declarations in agent cards. | Requires developers to implement security flows correctly; misconfiguration may expose agents. |
| Extensible channels | Offers multiple communication methods (HTTP, SSE, webhooks) suited to different tasks. | Developers must choose appropriate channels and handle failover between them. |

Security is a core part of A2A. The protocol includes robust methods for verifying agent identities (authentication) and controlling access (authorization).

The Agent Card plays a crucial role, outlining the specific security methods required by an agent. A2A supports widely trusted security protocols, including:

OAuth 2.0 methods (a standard for delegated access)

Standard HTTP authentication (e.g., Basic or Bearer tokens)

API Keys

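A key security feature is support for PKCE (Proof Key for Code Exchange, RFC 7636), an OAuth 2.0 extension that protects the authorization-code flow: the client sends a hash of a one-time secret when it requests authorization and must present the secret itself when redeeming the code, so an intercepted authorization code is useless on its own. These strong, standard security measures are essential for businesses to protect sensitive data and ensure secure communication between agents.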
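PKCE itself is simple to compute. The sketch below generates a code verifier and its S256 code challenge as defined in RFC 7636; it is generic OAuth 2.0 client code, not part of any A2A library, and the surrounding authorization and token requests are omitted.

```python
import base64
import hashlib
import secrets


def make_code_verifier(num_bytes: int = 32) -> str:
    """High-entropy random verifier: 32 random bytes -> 43 base64url chars."""
    return base64.urlsafe_b64encode(secrets.token_bytes(num_bytes)).rstrip(b"=").decode("ascii")


def s256_code_challenge(verifier: str) -> str:
    """BASE64URL(SHA256(verifier)) without padding (the RFC 7636 S256 method)."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


if __name__ == "__main__":
    verifier = make_code_verifier()
    challenge = s256_code_challenge(verifier)
    # The client sends `challenge` with the authorization request and keeps
    # `verifier` secret until it exchanges the authorization code for a token.
    print(len(verifier), challenge)
```

The challenge travels with the authorization request (with `code_challenge_method=S256`); at token time the authorization server re-hashes the submitted verifier and compares it with the stored challenge.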

A2A is perfect for situations where multiple AI agents need to collaborate across different platforms or tools. Here are some potential applications:

Software Engineering: AI agents could assist with automated code review, bug detection, and code generation across different development environments and tools. For example, one agent could analyze code for syntax errors, another could check for security vulnerabilities, and a third could propose optimizations, all working together to streamline the development process.

Smarter Supply Chains: AI agents could monitor inventory, predict disruptions, automatically adjust shipping routes, and provide advanced analytics by collaborating across different logistics systems.

Collaborative Healthcare: Specialized AI agents could analyze different types of patient data (such as scans, medical history, and genetics) and work together via A2A to suggest diagnoses or treatment plans.

Research Workflows: AI agents could automate key steps in research. One agent finds relevant data, another analyzes it, a third runs experiments, and another drafts results. Together, they streamline the entire process through collaboration.

Cross-Platform Fraud Detection: AI agents could simultaneously analyze transaction patterns across different banks or payment processors, sharing insights through A2A to detect fraud more quickly.

These examples show A2A's power to automate complex, end-to-end processes that rely on the combined smarts of multiple specialized AI systems, boosting efficiency everywhere.

| Scenario | A2A Suitability |
|---|---|
| Collaborating across vendors or clouds | A2A excels when agents from different providers need to work together. The protocol’s partner list includes over 50 launch partners ranging from Atlassian and Salesforce to McKinsey and Deloitte, indicating broad industry support. |
| Long‑running or conversational tasks | Use A2A for workflows that require streaming updates, intermediate checkpoints or user prompts (via SSE or webhooks). |
| Internal microservices | If all agents belong to the same organization and share a common backend, simpler RPC or gRPC might suffice; A2A adds value primarily when interoperability is needed. |
| High‑security environments | A2A’s built‑in support for OAuth 2.0 and PKCE makes it suitable for regulated industries. |

Valuable Stats & Data: Adoption of agentic workflows is no longer theoretical. A survey of enterprise leaders found that 64 % of agent deployments focus on workflow automation, and 35 % of organizations using AI agents report cost savings through automation. Furthermore, 230,000 organizations use GitHub Copilot for Business and over 15 million developers rely on Copilot overall, highlighting the scale at which agent‑driven developer tools are used.

Expert Commentary: In its April 2025 announcement, Google explained that A2A was designed to embrace agentic capabilities, build on existing standards, and be secure by default. It emphasised long‑running tasks and modality‑agnostic communication. Analysts note that such design choices align with observed enterprise needs: 88 % of executives are either piloting or scaling autonomous agents and 46 % fear falling behind if they don’t adopt agent‑led technologies quickly. In other words, A2A is not just a technical specification—it’s a strategic response to growing demand for interoperable, multi‑agent systems.

Google’s Agent‑to‑Agent protocol defines how AI agents discover each other, communicate and manage tasks. It uses agent cards for capability discovery, tasks with clear lifecycles, multipart messages and artifacts for rich data exchange, and flexible channels (HTTP, SSE, webhooks). Security is baked in through OAuth 2.0, PKCE and API keys. A2A excels in cross‑vendor, long‑running or regulated environments, providing a universal language for agents.

Unpacking Anthropic's MCP: Giving Models Tools & Context

Anthropic's Model Context Protocol (MCP) tackles a different but equally important challenge: helping LLM-based AI systems connect to the outside world while they're working, rather than enabling communication between multiple agents. The core idea is to provide language models with relevant information and access to external tools (such as APIs or functions). This allows models to go beyond their training data and interact with current or task-specific information.

Without a shared protocol like MCP, each AI vendor is forced to define its own way of integrating external tools. For example, if a developer wants to call a function like "generate image" from Clarifai, they must write vendor-specific code to interact with Clarifai’s API. The same is true for every other tool they might use, resulting in a fragmented system where teams must create and maintain separate logic for each provider. In some cases, models are even given direct access to systems or APIs — for example, calling terminal commands or sending HTTP requests without proper control or security measures.

MCP solves this problem by standardizing how AI systems interact with external resources. Rather than building new integrations for every tool, developers can use a shared protocol, making it easier to extend AI capabilities with new tools and data sources.

Here's how MCP enables this connection:

MCP uses a clear client-server structure:

MCP Host: This is the application where the AI model lives (e.g., Anthropic's Claude Desktop app, a coding assistant in your IDE, or a custom AI app).

MCP Client: Embedded within the Host, the Client manages the connection to a server.

MCP Server: This is a separate component that can run locally or in the cloud. It provides the tools, data (called Resources), or predefined instructions (called Prompts) that the AI model might need.

The Host's Client makes a dedicated, one-to-one connection to a Server. The Server then exposes its capabilities (tools, data) for the Client to use on behalf of the AI model. This setup keeps things modular and scalable – the AI app asks for help, and specialized servers provide it.
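The host/client/server split can be illustrated with a toy, in-process imitation. It mimics MCP's JSON-RPC `tools/list` and `tools/call` methods, but the classes are hypothetical, it does not use the official MCP SDK, and it skips the initialization handshake and real transports. The `generate_image` tool is a stub that returns a string rather than calling any model.

```python
import json
from typing import Any, Callable, Dict, Optional

# A toy, in-process imitation of an MCP server and client. Real MCP traffic is
# JSON-RPC 2.0 using methods such as "tools/list" and "tools/call"; this sketch
# mimics just those two methods and skips the official SDK, the initialize
# handshake, and any real transport.


class ToyMcpServer:
    """Exposes tools; a real MCP server could also expose resources and prompts."""

    def __init__(self) -> None:
        self._tools: Dict[str, dict] = {}
        self._handlers: Dict[str, Callable[..., str]] = {}

    def add_tool(self, name: str, description: str, input_schema: dict,
                 handler: Callable[..., str]) -> None:
        self._tools[name] = {"name": name, "description": description,
                             "inputSchema": input_schema}
        self._handlers[name] = handler

    def handle(self, request: dict) -> dict:
        method = request["method"]
        params = request.get("params", {})
        if method == "tools/list":
            result: Any = {"tools": list(self._tools.values())}
        elif method == "tools/call" and params.get("name") in self._handlers:
            text = self._handlers[params["name"]](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        elif method == "tools/call":
            return self._error(request, -32602, f"Unknown tool: {params.get('name')}")
        else:
            return self._error(request, -32601, f"Method not found: {method}")
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}

    @staticmethod
    def _error(request: dict, code: int, message: str) -> dict:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": code, "message": message}}


class ToyMcpClient:
    """Lives inside the host and holds a one-to-one connection to one server."""

    def __init__(self, server: ToyMcpServer) -> None:
        self._server = server
        self._next_id = 0

    def request(self, method: str, params: Optional[dict] = None) -> dict:
        self._next_id += 1
        message: Dict[str, Any] = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            message["params"] = params
        # Round-trip through JSON text to mimic sending the message over a wire.
        wire_in = json.loads(json.dumps(message))
        return json.loads(json.dumps(self._server.handle(wire_in)))


if __name__ == "__main__":
    server = ToyMcpServer()
    # Hypothetical stub; it does not call Clarifai or any real image model.
    server.add_tool(
        "generate_image", "Generate an image from a text prompt.",
        {"type": "object", "properties": {"prompt": {"type": "string"}},
         "required": ["prompt"]},
        lambda prompt: f"(stub) image for: {prompt}",
    )
    client = ToyMcpClient(server)
    tools = client.request("tools/list")["result"]["tools"]
    print([tool["name"] for tool in tools])
    reply = client.request("tools/call",
                           {"name": "generate_image", "arguments": {"prompt": "a red fox"}})
    print(reply["result"]["content"][0]["text"])
```

The host application (and its model) only ever talks to the client object; swapping in a different server changes which tools appear in `tools/list` without touching the host code.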

MCP offers flexibility in how clients and servers talk:

Local Connection (stdio): If the client and server are running on the same computer, they can use standard input/output (stdio) for very fast, low-latency communication. An added benefit is that locally hosted MCP servers can directly read from and write to the file system, avoiding the need to serialize file contents into the LLM context.

Network Connection (HTTP with SSE): For connections over a network (different machines or the internet), MCP uses standard HTTP with Server-Sent Events (SSE). The client sends its requests to the server with HTTP POST, and the server pushes responses and notifications back over an SSE stream. Together these give two-way communication even though SSE itself only flows from server to client, which is useful for longer tasks or notifications.

Developers choose the transport based on where the components are running and what the application needs, optimizing for speed or network reach.
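For the local case, MCP's stdio transport frames each JSON-RPC message as a single line of JSON. The sketch below is a hypothetical server loop that reads requests line by line and writes one response line per request; `echo_handler` is a placeholder for real tool logic, and in-memory streams stand in for the process's standard input and output so it runs standalone. The HTTP/SSE transport is not shown.

```python
import io
import json
from typing import Callable, TextIO


def serve_stdio(handle: Callable[[dict], dict], stdin: TextIO, stdout: TextIO) -> None:
    """Read newline-delimited JSON-RPC messages and write one response line each.

    Assumes one JSON object per line, the framing MCP's stdio transport uses.
    Notifications (messages without an "id") get no response.
    """
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        message = json.loads(line)
        if "id" not in message:
            continue  # notification: no reply expected
        response = handle(message)
        # json.dumps escapes newlines inside strings, so each message stays on one line.
        stdout.write(json.dumps(response) + "\n")
        stdout.flush()


def echo_handler(message: dict) -> dict:
    """Hypothetical handler that just echoes the method name back."""
    return {"jsonrpc": "2.0", "id": message["id"], "result": {"method": message["method"]}}


if __name__ == "__main__":
    # In a real deployment the host spawns the server process and these would
    # be sys.stdin / sys.stdout; StringIO lets the sketch run on its own.
    fake_stdin = io.StringIO(
        '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}\n'
        '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
    )
    fake_stdout = io.StringIO()
    serve_stdio(echo_handler, fake_stdin, fake_stdout)
    print(fake_stdout.getvalue(), end="")
```

In practice the host launches the server as a subprocess and writes to its stdin, which is why no ports, TLS or network configuration are involved in the local setup.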

MCP Servers provide their capabilities through three core building blocks: Tools, Resources, and Prompts. Each one is controlled by a different part of the system.

Example: Clarifai provides an MCP Server that enables direct interaction with tools, models, and data resources on the Platform. For example, given a prompt to generate an image, the MCP Client can call the generate_image Tool. The Clarifai MCP Server runs a text-to-image model from the community and returns the result. This is an unofficial early preview and will be live soon.

These primitives provide a standard way for AI models to interact with the external world predictably.

Key Building Blocks. MCP exposes capabilities via three primitives:

| Primitive | Controlled by | Description | Example |
|---|---|---|---|
| Tools (Model‑Controlled) | AI model | Executable operations the model can autonomously invoke. Each tool has a name, description and JSON input schema. | A generate_image tool that produces an image from text. |
| Resources (Application‑Controlled) | Application/engineer | Structured data (files, database records, documents) provided by the host. The model cannot independently choose resources; they are surfaced by the app. | A vector database of previous user interactions to give the model memory. |
| Prompts (User‑Controlled) | End user | Reusable templates that shape how the model communicates and operates. Prompts may include placeholders and references to resources. | A prompt that instructs the model to write an empathetic customer‑support email, with placeholders for customer name and issue. |
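To make the three primitives concrete, here are hypothetical definitions shaped like the entries an MCP server returns when listing tools, resources and prompts (`inputSchema`, `uri`, `mimeType` and `arguments` follow the MCP specification's listing formats). The `template` field, the custom `memory://` URI and both helper functions are our own assumptions; real servers build prompt messages in their own code, and full JSON Schema validation would need a proper validator.

```python
from typing import Dict, List

# Tool (model-controlled): the model decides when to call it.
GENERATE_IMAGE_TOOL = {
    "name": "generate_image",
    "description": "Produce an image from a text prompt.",
    "inputSchema": {
        "type": "object",
        "properties": {"prompt": {"type": "string"}},
        "required": ["prompt"],
    },
}

# Resource (application-controlled): the host decides whether to surface it.
PAST_INTERACTIONS_RESOURCE = {
    "uri": "memory://support/past-interactions",  # hypothetical custom URI scheme
    "name": "Past user interactions",
    "mimeType": "application/json",
}

# Prompt (user-controlled): the user picks it and supplies the arguments.
EMPATHETIC_EMAIL_PROMPT = {
    "name": "empathetic_support_email",
    "description": "Draft an empathetic customer-support email.",
    "arguments": [
        {"name": "customer_name", "description": "Customer's name", "required": True},
        {"name": "issue", "description": "What went wrong", "required": True},
    ],
    # Assumption: a server-side template; MCP itself does not define this field.
    "template": "Write an empathetic support email to {customer_name} about {issue}.",
}


def missing_required(schema: dict, arguments: dict) -> List[str]:
    """Check only the JSON Schema 'required' keyword (not full validation)."""
    return [key for key in schema.get("required", []) if key not in arguments]


def render_prompt(prompt: dict, arguments: Dict[str, str]) -> List[dict]:
    """Fill the template and return messages shaped like an MCP prompts/get result."""
    for arg in prompt["arguments"]:
        if arg.get("required") and arg["name"] not in arguments:
            raise ValueError(f"missing prompt argument: {arg['name']}")
    text = prompt["template"].format(**arguments)
    return [{"role": "user", "content": {"type": "text", "text": text}}]


if __name__ == "__main__":
    print(missing_required(GENERATE_IMAGE_TOOL["inputSchema"], {}))
    messages = render_prompt(EMPATHETIC_EMAIL_PROMPT,
                             {"customer_name": "Ana", "issue": "a late delivery"})
    print(messages[0]["content"]["text"])
```

Note who acts on each object: the model fills in the tool's arguments, the application decides whether the resource is exposed at all, and the user supplies the prompt's arguments.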

| Aspect | Advantages | Considerations |
|---|---|---|
| Tool integration | Models can call external functions without bespoke code; developers define tool schemas and descriptions. | The model must decide when and how to use tools correctly; requires robust prompting and guardrails. |
| Contextual resources | Resources provide up‑to‑date data (files, documents, database entries) to enhance model outputs. | Models cannot autonomously discover new resources; application developers must curate them. |
| Prompt templates | Prompts enable reuse of best‑practice instructions and user control. | Poorly designed prompts can lead to incorrect or unsafe actions; there is a learning curve to craft effective templates. |
| Flexible transport | Offers stdio for local, low‑latency connections and HTTP/SSE for networked deployments. | SSE requires persistent connections; network latency may affect responsiveness. |
| Security | Because the client maintains a dedicated channel to the server, access control can be tightly managed; resources and tools can be scoped per user. | Centralized servers may become a single point of failure or bottleneck; additional measures may be needed for fault tolerance. |

| Scenario | MCP Suitability |
|---|---|
| Enhancing a single agent’s capabilities | MCP is ideal when a language model needs to fetch real‑time information, execute functions or access memory. For example, a customer‑support bot that retrieves order status from a database or calls a shipping API benefits from MCP. |
| Running offline or on‑device | MCP’s stdio transport allows local servers to interact with models without internet connectivity. |
| Variable toolset | When the set of tools/resources may change per user (e.g., different clients with different databases), MCP’s declarative interface simplifies updates. |
| Security and compliance | MCP gives developers fine‑grained control over which tools and data the model can access; sensitive resources can be withheld entirely. |
| Multi‑agent collaboration | If agents need to communicate with each other, MCP alone is insufficient; A2A (or a similar agent‑to‑agent protocol) should be layered on top. |

MCP opens up many possibilities by letting AI models tap into external tools and data:

Smarter Enterprise Assistants: Create AI helpers that can securely access company databases, documents, and internal APIs to answer employee questions or automate internal tasks.

Powerful Coding Assistants: AI coding tools can use MCP to access your entire codebase, documentation, and build systems, providing much more accurate suggestions and analysis.

Easier Data Analysis: Connect AI models directly to databases via MCP, allowing users to query data and generate reports using natural language.

Tool Integration: MCP makes it easier to connect AI to various developer platforms and services, enabling things like:

Automated data scraping from websites.

Real-time data processing (e.g., using MCP with Confluent to manage Kafka data streams via chat).

Giving AI persistent memory (e.g., using MCP with vector databases to let AI search past conversations or documents).

These examples show how MCP can dramatically boost the intelligence and usefulness of AI systems in many different areas.

Valuable Stats & Data: The momentum behind MCP is notable. Virtualization Review described how the protocol “has gone viral” since its launch in November 2024, noting that MCP has been integrated into Microsoft’s Visual Studio Code and embraced by AWS for agentic AI development. These early adoptions demonstrate that enterprises are eager for standardized ways to connect models to tools.

Analyst Commentary: Developers have traditionally written vendor‑specific code to integrate models with APIs, and MCP’s declarative approach reduces that burden. Analysts note that 71 % of employees prefer having a human review AI outputs, emphasising the need for a protocol that supports human‑in‑the‑loop workflows. MCP facilitates this by allowing an application to pause model execution when human confirmation is required and to provide context or prompts accordingly. Furthermore, 31 % of organizations block agents from accessing sensitive data; MCP’s explicit resource management helps enforce these policies.
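The human-in-the-loop and data-blocking points above can be sketched as guardrails in the host application. `GuardedHost` is hypothetical: it asks for confirmation before tools flagged as sensitive are called and filters resource URIs through an allowlist before the model sees them. The tool names and URIs are invented, and a real host would route `call_tool` through its MCP client.

```python
from typing import Callable, List, Set

# Hypothetical host-side guardrails around MCP calls: tools marked sensitive
# need a human "yes" before the request is sent, and only allow-listed
# resource URIs are ever shown to the model. All names, URIs, and policies
# are assumptions for illustration.


class GuardedHost:
    def __init__(self, call_tool: Callable[[str, dict], str],
                 confirm: Callable[[str, dict], bool],
                 sensitive_tools: Set[str], allowed_resources: Set[str]) -> None:
        self._call_tool = call_tool    # would forward to the MCP client in a real host
        self._confirm = confirm        # asks a human; returns True to proceed
        self._sensitive = sensitive_tools
        self._allowed = allowed_resources

    def call_tool(self, name: str, arguments: dict) -> str:
        # Pause here for human approval before any sensitive action runs.
        if name in self._sensitive and not self._confirm(name, arguments):
            return f"Call to {name} was declined by the user."
        return self._call_tool(name, arguments)

    def visible_resources(self, server_uris: List[str]) -> List[str]:
        """Drop anything not explicitly allowed before the model sees the list."""
        return [uri for uri in server_uris if uri in self._allowed]


if __name__ == "__main__":
    host = GuardedHost(
        call_tool=lambda name, args: f"{name} ran with {args}",
        # A real host would show a confirmation dialog; this stub always declines.
        confirm=lambda name, args: False,
        sensitive_tools={"issue_refund"},
        allowed_resources={"db://orders"},
    )
    print(host.call_tool("get_order_status", {"order_id": "A1"}))
    print(host.call_tool("issue_refund", {"order_id": "A1"}))
    print(host.visible_resources(["db://orders", "db://salaries"]))
```

Keeping these checks in the host rather than in the model's instructions means a model that misbehaves still cannot reach a blocked resource or skip the approval step.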

Anthropic’s Model Context Protocol connects a single AI model to external tools, data and prompts. It defines a client–server architecture in which a client embedded in the host application connects, on the model's behalf, to a server that exposes tools (functions the model can invoke), resources (structured data) and prompts (user‑selected templates). MCP supports local and network communication and lets models extend their capabilities beyond their training data. It’s best suited to enhancing individual agents, with A2A used for inter‑agent communication.


So, are A2A and MCP competitors? Not really. Google has even stated they see A2A as complementing MCP, suggesting that advanced AI applications will likely need both. They recommend using MCP for tool access and A2A for agent-to-agent communication.

A useful way to think about it:

MCP provides vertical integration: Connecting an application (and its AI model) deeply with the specific tools and data it needs.

A2A provides horizontal integration: Connecting different, independent agents across various systems.

Imagine MCP gives an individual agent the knowledge and tools it needs to do its job well. Then, A2A provides the way for these well-equipped agents to collaborate as a team.

This suggests powerful ways they could be used together:

Let’s understand this with an example: an HR onboarding workflow.

An "Orchestrator" agent is in charge of onboarding a new employee.

It uses A2A to delegate tasks to specialized agents:

Tells the "HR Agent" to create the employee record.

Tells the "IT Agent" to provision necessary accounts (email, software access).

Tells the "Facilities Agent" to prepare a desk and equipment.

The "IT Agent," when provisioning accounts, might internally use MCP to:

Interact with the company's identity management system (using an MCP Tool).

Access information about standard software packages (using an MCP Resource).

In this scenario, A2A handles the high-level coordination between agents, while MCP handles the specific, low-level interactions with tools and data needed by individual agents. This layered approach allows for building more modular, scalable, and secure AI systems.
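The onboarding example can be compressed into a runnable toy. `send_task` stands in for A2A delegation, and the IT agent's stubs stand in for an MCP tool call and an MCP resource read; every agent, tool, URI and field name is hypothetical, and nothing is sent over a network. The point is the layering: the orchestrator only sees tasks and artifacts, while tool and data access stays inside the IT agent.

```python
from typing import Callable, Dict, List

# Toy version of the onboarding flow. send_task() stands in for an A2A
# request to a remote agent; call_mcp_tool() and read_mcp_resource() stand in
# for the IT agent's MCP tool call and resource read. Every agent, tool, URI,
# and field name is hypothetical, and nothing touches a network.

STANDARD_SOFTWARE_URI = "resource://it/standard-packages"
_RESOURCES: Dict[str, List[str]] = {STANDARD_SOFTWARE_URI: ["email", "chat", "vpn"]}


def call_mcp_tool(name: str, arguments: dict) -> dict:
    """Stub for an identity-management tool on the IT agent's MCP server."""
    if name != "create_account":
        raise ValueError(f"unknown tool: {name}")
    return {"account": f"{arguments['employee'].lower()}@example.com"}


def read_mcp_resource(uri: str) -> List[str]:
    """Stub for reading the standard-software resource."""
    return _RESOURCES[uri]


def hr_agent(task: dict) -> dict:
    return {"state": "completed", "artifact": {"record_id": f"EMP-{task['employee']}"}}


def it_agent(task: dict) -> dict:
    # Inside the IT agent, MCP handles the low-level tool and data access.
    account = call_mcp_tool("create_account", {"employee": task["employee"]})
    software = read_mcp_resource(STANDARD_SOFTWARE_URI)
    return {"state": "completed", "artifact": {**account, "software": software}}


def facilities_agent(task: dict) -> dict:
    return {"state": "completed", "artifact": {"desk": "reserved", "equipment": ["laptop"]}}


REMOTE_AGENTS: Dict[str, Callable[[dict], dict]] = {
    "hr": hr_agent, "it": it_agent, "facilities": facilities_agent,
}


def send_task(agent: str, task: dict) -> dict:
    """Stand-in for an A2A task request to a remote agent."""
    return REMOTE_AGENTS[agent](task)


def onboard(employee: str) -> Dict[str, dict]:
    """Orchestrator: delegate one task per specialist and collect the artifacts."""
    results: Dict[str, dict] = {}
    for agent in ("hr", "it", "facilities"):
        reply = send_task(agent, {"employee": employee})
        if reply["state"] != "completed":
            raise RuntimeError(f"{agent} task ended as {reply['state']}")
        results[agent] = reply["artifact"]
    return results


if __name__ == "__main__":
    print(onboard("Ana"))
```

Replacing the IT agent's identity system would only change its MCP stubs; the orchestrator and the other agents would not notice.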

While these protocols are currently seen as complementary, it’s possible that, as they evolve, their functionalities may start to overlap in some areas. But for now, the clearest path forward seems to be using them together to tackle different parts of the AI communication puzzle.

| Question | Consideration |
|---|---|
| Do you need multi‑agent workflows? | If you orchestrate tasks across multiple specialized agents or vendors, A2A is essential. MCP alone cannot coordinate independent agents. |
| Does each agent need access to tools and data? | Use MCP to provide a consistent interface for tools, resources and prompts, ensuring each agent operates with the right context. |
| Are there regulatory requirements? | Combine A2A’s secure authentication schemes with MCP’s fine‑grained resource control to meet compliance standards. |
| How will humans interact? | If human‑in‑the‑loop validation is required, design your orchestration layer to handle input‑required states and to present prompts and resources to the user. |
| How will the system grow? | The layered approach (MCP for vertical integration, A2A for horizontal) supports modular growth: you can add new tools without affecting inter‑agent communication, and vice versa. |

Valuable Stats & Data: Multi‑agent adoption is accelerating: 64 % of agent deployments are for workflow automation, and 88 % of executives are piloting or scaling autonomous agents. Additionally, over 50 launch partners, including major technology vendors (Atlassian, Salesforce, SAP) and consulting firms (Accenture, McKinsey, Deloitte), have committed to Google’s A2A protocol. These numbers suggest that the industry is aligning around layered agent architectures.

Analyst Commentary: Google’s announcement described A2A as complementing MCP, not competing with it. Industry observers see A2A and MCP as analogous to the transport and application layers of the internet: A2A handles routing and delivery between agents, while MCP provides the application logic and services. The Index.dev survey found that 51 % of companies employ multiple control mechanisms—a sign that layering protocols helps manage complexity. Experts caution that integrating both protocols requires thoughtful orchestration, robust governance and a focus on user trust.

A2A and MCP are complementary protocols. MCP equips individual agents with tools, data and prompts via a client–server model, while A2A connects those agents through standardized tasks and messages. Using both allows organizations to build modular, scalable AI systems where agents can act autonomously yet collaborate to achieve common goals.


Protocols like A2A and MCP are shaping how AI agents work. A2A helps agents talk to each other and coordinate tasks. MCP helps individual agents use tools, memory, and other external information to be more useful. When used together, they can make AI systems more powerful and flexible.

The next step is adoption. These protocols will only matter if developers start using them in real systems. There may be some competition between different approaches, but most experts think the best systems will use both A2A and MCP together.

As these protocols grow, they may take on new roles. The AI community will play a big part in deciding what comes next.

We'll be sharing more about MCP and A2A in the coming weeks. Follow us on X and LinkedIn, and join our Discord channel to stay updated!

© 2026 Clarifai, Inc.