The European Conference on Information Retrieval (ECIR) attracts some of Europe’s hottest research talent in information retrieval. This year it was held in Stavanger, Norway. The timing was interesting: Norway dropped all COVID restrictions in April, so the conference was mainly in person, with support for live remote presentations. It was also one of the first IR conferences Clarifai has attended in the past few years, and we used it to present our own advances in the field.

Here are three takeaways from the conference that we found the most thought-provoking.

Hiking in Stavanger, Norway

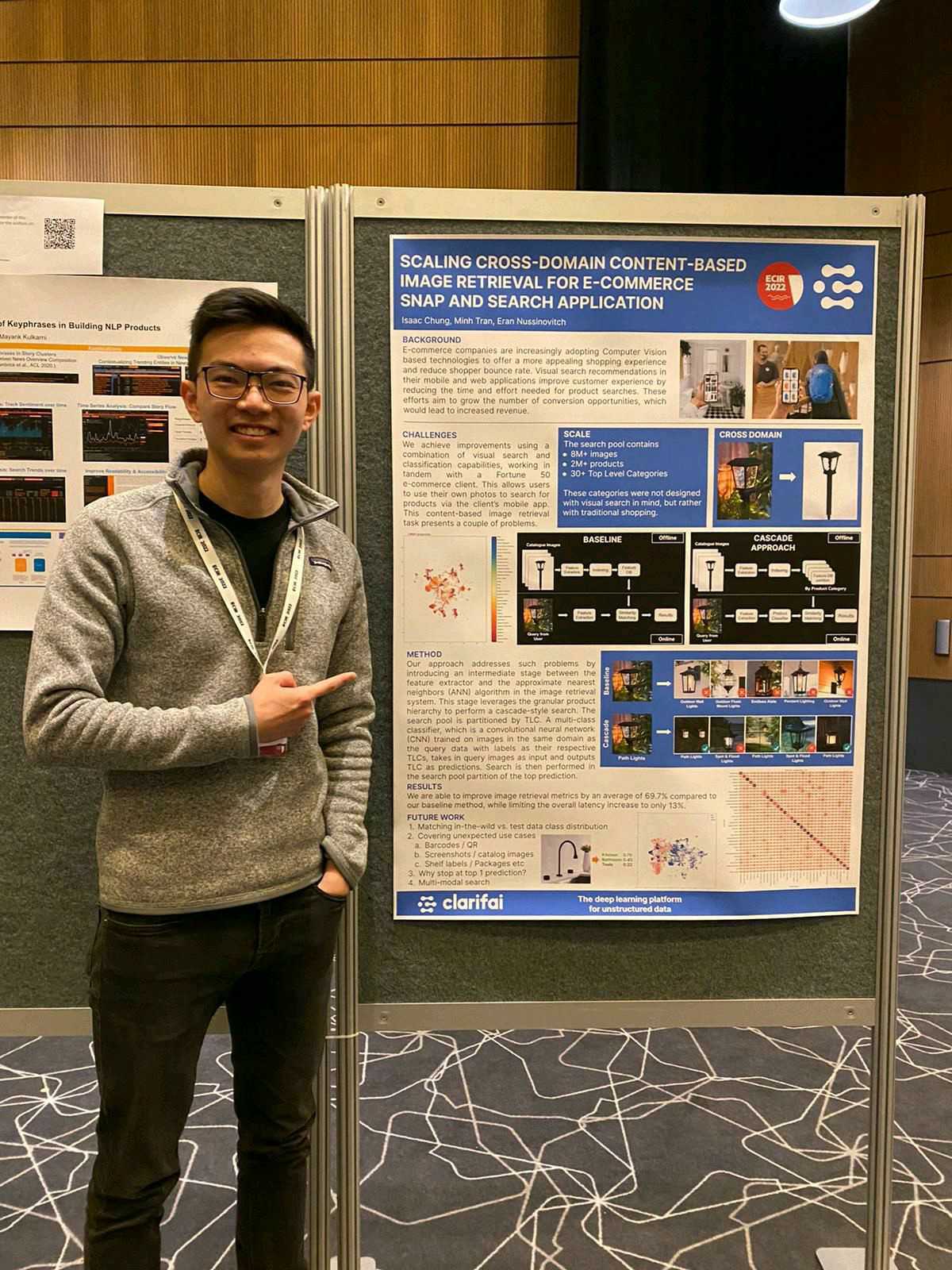

The vast majority of product discovery is done by navigating the product tree, i.e. clicking through categories and subcategories, then browsing through all the products to find new styles and designs that tickle your fancy. This method, though widely used, is very time-consuming. You may have read our previous blog post on visual search for product discovery. In case you missed it, visual search uses an image as the search query (e.g. a photo taken with a mobile phone) and finds the most similar images in a collection (e.g. all images in a catalog). This lets users search with images and bypass complex product trees, which is why visual search is well positioned to disrupt the e-commerce industry. We presented on this very topic at Industry Day: Scaling Cross-Domain Content-Based Image Retrieval for E-commerce Snap and Search Application.

Our presentation on “Scaling Cross-Domain Content-Based Image Retrieval for E-commerce Snap and Search Application,” or the oh-so-catchy “SC-DC-BIRFE-CSASA”.
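To make the retrieval step of visual search concrete, here is a minimal sketch. It assumes every catalog image has already been turned into an embedding vector by some image encoder (not shown), and that the query photo is embedded the same way; catalog items are then ranked by cosine similarity to the query. The product IDs and three-dimensional vectors are hypothetical toy values. Real embeddings have hundreds of dimensions, and a production system would typically use an approximate nearest-neighbor index rather than this brute-force scan, so treat this as an illustration, not the system from our talk.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def visual_search(query_embedding, catalog, top_k=3):
    """Rank catalog items by similarity to the query image's embedding.

    `catalog` maps a (hypothetical) product ID to a precomputed image embedding.
    Returns (product_id, score) pairs, best match first.
    """
    scored = [(pid, cosine_similarity(query_embedding, emb)) for pid, emb in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]

# Hypothetical toy embeddings standing in for encoder outputs.
catalog = {
    "red-dress": [0.9, 0.1, 0.0],
    "blue-jeans": [0.0, 0.2, 0.9],
    "red-skirt": [0.6, 0.5, 0.2],
}
query = [0.85, 0.2, 0.05]  # embedding of the shopper's photo

for product_id, score in visual_search(query, catalog, top_k=2):
    print(product_id, round(score, 3))
```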


Even though a picture may be worth a thousand words, products often come with useful textual information, such as short titles, descriptions, or other attribute metadata, that we can leverage to enrich a product’s representation. We refer to combining text and image information in search as “multimodal search”. An interesting paper at the conference, Extending CLIP for Category-to-image Retrieval in E-commerce, explores how well a product can be represented by combinations of its image, attributes, and title. The authors compare the performance of cross-modal search with text search using a best-match algorithm. Significant improvements were observed when using all modalities (image + title + attributes) compared to using the image alone or the image plus attributes. This work shows how promising product search can be when we leverage more than what meets the eye.
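One simple way to combine modalities is sketched below. It assumes the image, title, and attribute encoders share one embedding space (as CLIP’s image and text encoders do), L2-normalizes each embedding, and averages them into a single product vector. This averaging is our own illustrative choice of late fusion, not necessarily how the paper combines modalities, and all vectors are hypothetical toy values.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (an all-zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def fuse_modalities(embeddings):
    """Average unit-normalized embeddings from several modalities, then renormalize."""
    normalized = [l2_normalize(e) for e in embeddings]
    dim = len(normalized[0])
    mean = [sum(e[i] for e in normalized) / len(normalized) for i in range(dim)]
    return l2_normalize(mean)

# Hypothetical toy embeddings for a single product.
image_emb = [0.7, 0.7, 0.0]
title_emb = [0.0, 1.0, 0.0]
attributes_emb = [0.0, 0.6, 0.8]

image_only = l2_normalize(image_emb)
all_modalities = fuse_modalities([image_emb, title_emb, attributes_emb])

# Hypothetical embedding of a text category query, e.g. "running shoes".
category_query = l2_normalize([0.0, 1.0, 0.0])
print("image only:    ", round(dot(category_query, image_only), 3))
print("all modalities:", round(dot(category_query, all_modalities), 3))
```

With these made-up numbers the fused vector scores higher for the text query simply because two of its three inputs are text; the example shows the mechanics of fusion, not the paper’s results.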

Basic search systems rely on keyword matching (also referred to as lexical search), which often leads to irrelevant matches. Instead of looking for literal matches of the query words, semantic search takes the intent and contextual meaning of the query into account, which should give better results. Spotify presented a fascinating Industry Day talk titled ‘Finding the Right Audio Content for You’, in which one of the authors showed the impact of semantic search on retrieving more relevant results when searching for podcasts. To encourage research on semantic representations of audio and on natural language processing, Spotify also released the 100K English Language Podcast Dataset, which contains about 50,000 hours of audio and over 600 million transcribed words.
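The weakness of pure keyword matching is easy to see in a toy example. The sketch below scores each (hypothetical) podcast episode title by how many distinct query words it contains. An episode about NASA’s Mars missions is clearly relevant to the query “space exploration”, yet it scores zero because it shares no literal words with the query; even “exploring” fails to match “exploration”. Real lexical engines add stemming and term weighting (e.g. BM25), which fixes some, but not all, of these misses.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def keyword_match_scores(query, documents):
    """Score each document by the number of distinct query words it contains."""
    query_terms = tokenize(query)
    return {doc_id: len(query_terms & tokenize(text)) for doc_id, text in documents.items()}

# Hypothetical podcast episode titles.
episodes = {
    "ep1": "NASA missions to Mars and beyond",
    "ep2": "Exploring space: a beginner's guide",
    "ep3": "Cooking pasta at home",
}

print(keyword_match_scores("space exploration", episodes))
# {'ep1': 0, 'ep2': 1, 'ep3': 0}
```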

However, the gains that deep retrieval models (e.g. BERT-based models) bring to semantic search have been shown to be largely domain-dependent, as discussed in another paper titled ‘Out-of-Domain Semantics to the Rescue! Zero-Shot Hybrid Retrieval Models’. In practice, this means that if you want good semantic search performance on podcast titles, you need to train your deep retrieval models on podcast-related data. To generalize to other domains (e.g. applying a model trained on one dataset to a different dataset), the authors proposed a hybrid approach that combines deep and lexical retrieval and outperforms either deep retrieval models or lexical models on their own.
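A hybrid retriever can be sketched as two independent rankers whose outputs are merged. Below, the lexical side is a small Okapi BM25 implementation, while the dense side is represented by hypothetical precomputed similarity scores standing in for a deep retrieval model. The two rankings are merged with reciprocal rank fusion (RRF), a common and simple fusion method that we chose for illustration; the paper’s own way of combining the models may differ. The titles, query, and dense scores are all made up.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Okapi BM25 score of every document for the query (the lexical retriever)."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    avg_len = sum(len(toks) for toks in tokenized.values()) / n_docs
    doc_freq = Counter(term for toks in tokenized.values() for term in set(toks))
    scores = {}
    for doc_id, toks in tokenized.items():
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = 1 - b + b * len(toks) / avg_len
            score += idf * tf[term] * (k1 + 1) / (tf[term] + k1 * length_norm)
        scores[doc_id] = score
    return scores

def rank(scores):
    """Return document IDs ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)

def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each document earns 1 / (k + position) from each list."""
    fused = {}
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + position)
    return rank(fused)

# Hypothetical podcast titles and query.
episodes = {
    "ep1": "NASA missions to Mars and beyond",
    "ep2": "Space news roundup: rockets, space stations and space tourism",
    "ep3": "Cooking pasta at home",
}
query = "space missions"

# Stand-in for a deep retrieval model: made-up query-document similarities.
dense_scores = {"ep1": 0.82, "ep2": 0.74, "ep3": 0.05}

lexical_ranking = rank(bm25_scores(query, episodes))
dense_ranking = rank(dense_scores)
print(reciprocal_rank_fusion([lexical_ranking, dense_ranking]))
```

RRF only looks at rank positions, which sidesteps the fact that BM25 scores and embedding similarities live on different scales; `k = 60` is the constant commonly used with RRF.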

An estimated 7,000+ languages are in use around the world. Supporting more languages means being able to communicate with more people, users, and customers. However, this is a massive challenge because training language models is resource-heavy, especially if we target only one language or task at a time. As Natural Language Processing (NLP) advances, more attention has been drawn to generalizing models across multiple tasks or languages. In his keynote ‘Towards Language Technology for a Truly Multilingual World?’, Ivan Vulić discussed several efficient approaches to multilingual and cross-lingual NLP. In particular, he talked about leveraging modular components called ‘adapters’ to drastically reduce the number of trainable parameters needed for chosen tasks and languages. This method essentially uses ‘high-resource’ languages to perform zero-shot transfer to ‘low-resource’ languages via a language-agnostic task adapter. While these methods seem promising in nudging the field towards better multilingual NLP, many challenges remain: many languages are still left behind and are difficult to work with.
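To give a feel for why adapters are so parameter-efficient, here is a minimal sketch of a bottleneck adapter in the style of Houlsby et al. (2019): a small down-projection, a nonlinearity, an up-projection, and a residual connection, inserted into an otherwise frozen pretrained model so that only the adapter weights are trained. The configurations discussed in the keynote (e.g. separate language and task adapters) may differ in the details. The bottleneck size of 48 is a hypothetical choice, and we assume one adapter per Transformer layer.

```python
import random

def relu(x):
    return max(0.0, x)

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

class BottleneckAdapter:
    """Computes h + W_up @ relu(W_down @ h + b_down) + b_up for a hidden state h."""

    def __init__(self, hidden_dim, bottleneck_dim, seed=0):
        rng = random.Random(seed)
        # Down-projection: hidden_dim -> bottleneck_dim, small random init.
        self.w_down = [[rng.gauss(0.0, 0.02) for _ in range(hidden_dim)]
                       for _ in range(bottleneck_dim)]
        self.b_down = [0.0] * bottleneck_dim
        # Up-projection: bottleneck_dim -> hidden_dim, zero init so the adapter
        # starts out as the identity function.
        self.w_up = [[0.0] * bottleneck_dim for _ in range(hidden_dim)]
        self.b_up = [0.0] * hidden_dim

    def __call__(self, hidden):
        down = [relu(z + b) for z, b in zip(matvec(self.w_down, hidden), self.b_down)]
        up = [z + b for z, b in zip(matvec(self.w_up, down), self.b_up)]
        return [h + u for h, u in zip(hidden, up)]  # residual connection

def adapter_param_count(hidden_dim, bottleneck_dim):
    """Trainable parameters: both weight matrices plus both bias vectors."""
    return 2 * hidden_dim * bottleneck_dim + bottleneck_dim + hidden_dim

adapter = BottleneckAdapter(hidden_dim=8, bottleneck_dim=2)
print(adapter([1.0] * 8))
print(adapter_param_count(768, 48), 12 * adapter_param_count(768, 48))
# 74544 894528
```

For a BERT-base-sized model (hidden size 768, 12 layers, roughly 110M parameters), that is 74,544 trainable parameters per adapter and 894,528 across all layers, under 1% of the frozen model. Because the up-projection starts at zero, a freshly initialized adapter leaves its input unchanged, so adding it does not disturb the pretrained model before training.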

ECIR 2023 will be held in Dublin, Ireland, where we attended ACL 2022 last month and totally behaved ourselves. We look forward to seeing more advancements in the field of IR. We hope to see you there!

Taking a break in Dublin during ACL 2022

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy
