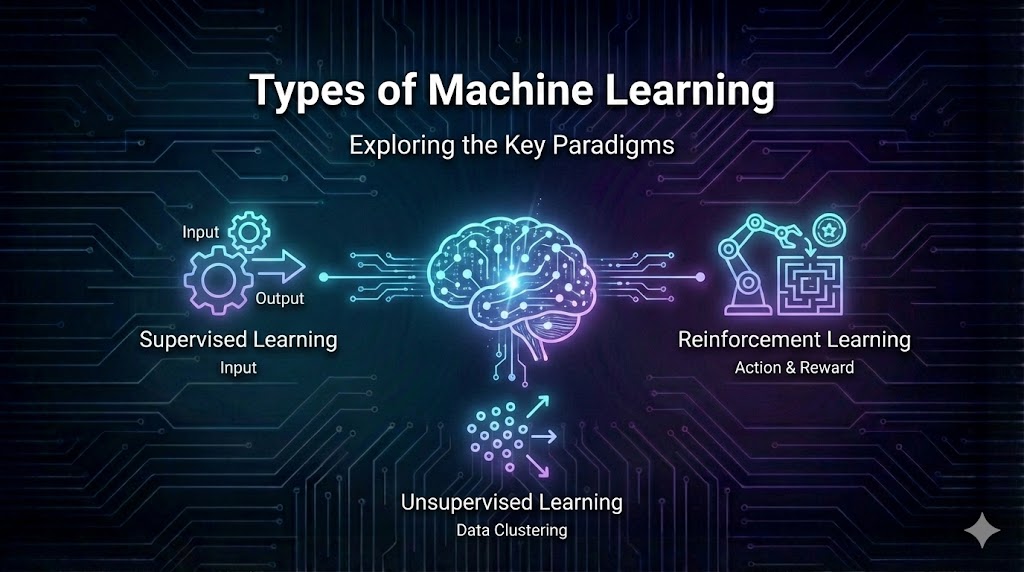

Machine learning (ML) has become the beating heart of modern artificial intelligence, powering everything from recommendation engines to self‑driving cars. Yet not all ML is created equal. Different learning paradigms tackle different problems, and choosing the right type of learning can make or break a project. As a leading AI platform, Clarifai offers tools across the spectrum of ML types, from supervised classification models to cutting‑edge generative agents. This article dives deep into the types of machine learning, summarizes key concepts, highlights emerging trends, and offers expert insights to help you navigate the evolving ML landscape in 2026.

| ML Type | High‑Level Purpose | Typical Use Cases | Clarifai Integration |
|---|---|---|---|
| Supervised Learning | Learn from labeled examples to map inputs to outputs | Spam filtering, fraud detection, image classification | Pre‑trained image and text classifiers; custom model training |
| Unsupervised Learning | Discover patterns or groups in unlabeled data | Customer segmentation, anomaly detection, dimensionality reduction | Embedding visualizations; feature learning |
| Semi‑Supervised Learning | Leverage small labeled sets with large unlabeled sets | Speech recognition, medical imaging | Bootstrapping models with unlabeled data |
| Reinforcement Learning | Learn through interaction with an environment using rewards | Robotics, games, dynamic pricing | Agentic workflows for optimization |
| Deep Learning | Use multi‑layer neural networks to learn hierarchical representations | Computer vision, NLP, speech recognition | Convolutional backbones, transformer‑based models |
| Self‑Supervised & Foundation Models | Pre‑train on unlabeled data; fine‑tune on downstream tasks | Language models (GPT, BERT), vision foundation models | Mesh AI model hub, retrieval‑augmented generation |
| Transfer Learning | Adapt knowledge from one task to another | Medical imaging, domain adaptation | Model Builder for fine‑tuning and fairness audits |
| Federated & Edge Learning | Train and infer on decentralized devices | Mobile keyboards, wearables, smart cameras | On‑device SDK, edge inference |
| Generative AI & Agents | Create new content or orchestrate multi‑step tasks | Text, images, music, code; conversational agents | Generative models, vector store and agent orchestration |
| Explainable & Ethical AI | Interpret model decisions and ensure fairness | High‑impact decisions, regulated industries | Monitoring tools, fairness assessments |
| AutoML & Meta‑Learning | Automate model selection and hyper‑parameter tuning | Rapid prototyping, few‑shot learning | Low‑code Model Builder |
| Active & Continual Learning | Select informative examples; learn from streaming data | Real‑time personalization, fraud detection | Continuous training pipelines |
| Emerging Topics | Novel trends like world models and small language models | Digital twins, edge intelligence | Research partnerships |

The rest of this article expands on each of these categories. Under each heading you’ll find a quick summary, an in‑depth explanation, creative examples, expert insights, and subtle integration points for Clarifai’s products.

Answer: Supervised learning is an ML paradigm in which a model learns a mapping from inputs to outputs using labeled examples. It’s akin to learning with a teacher: the algorithm is shown the correct answer for each input during training and gradually adjusts its parameters to minimize the difference between its predictions and the ground truth. Supervised methods power classification (predicting discrete labels) and regression (predicting continuous values), underpinning many of the AI services we interact with daily.

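At its core, supervised learning treats data as a set of labeled pairs $(x, y)$, where $x$ denotes the input (features) and $y$ denotes the desired output. The goal is to learn a function $f: X \to Y$ that generalizes well to unseen inputs. Two major subclasses dominate:

- Classification: predicting discrete labels, such as spam vs. not spam or the category of an image.
- Regression: predicting continuous values, such as a numeric quantity.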

Supervised learning’s strength lies in its predictability and interpretability. Because the model sees correct answers during training, it often achieves high accuracy on well‑defined tasks. However, this performance comes at a cost: labeled data are expensive to obtain, and models can overfit when the dataset does not represent real‑world diversity. Label bias—where annotators unintentionally embed their own assumptions—can also skew model outcomes.
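The sketch below makes the "learning with a teacher" loop concrete with plain-Python regression. It assumes a one-feature linear model `f(x) = w * x + b` and a made-up, noise-free dataset. The name `fit_linear_regression` and the learning-rate and epoch values are illustrative choices, not part of any library.

```python
def fit_linear_regression(pairs, learning_rate=0.05, epochs=2000):
    """Learn f(x) = w * x + b from labeled (x, y) pairs by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        # Nudge the parameters to shrink the gap between predictions and ground truth
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical labeled training set generated from y = 2x + 1 (no noise)
training_pairs = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]]
w, b = fit_linear_regression(training_pairs)
print(f"learned f(x) = {w:.2f} * x + {b:.2f}")            # learned f(x) = 2.00 * x + 1.00
print(f"prediction for unseen input x = 4: {w * 4 + b:.2f}")  # prediction for unseen input x = 4: 9.00
```

A real project would normally use a library model and check generalization on a held-out validation set. This version only shows the parameter-update mechanics.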

Imagine you’re training an AI system to classify types of clouds—cumulus, cirrus, stratus—from satellite imagery. You assemble a dataset of 10,000 images labeled by meteorologists. A convolutional neural network extracts features like texture, brightness, and shape, mapping them to one of the three classes. With enough data, the model correctly identifies clouds in new weather satellite images, enabling better forecasting. But if the training set contains mostly daytime imagery, the model may struggle with night‑time conditions—a reminder of how crucial diverse labeling is.

Answer: Unsupervised learning discovers hidden patterns in unlabeled data. Instead of receiving ground truth labels, the algorithm looks for clusters, correlations, or lower‑dimensional representations. It’s like exploring a new city without a map—you wander around and discover neighborhoods based on their character. Algorithms like K‑means clustering, hierarchical clustering, and principal component analysis (PCA) help detect structure, reduce dimensionality, and identify anomalies in data streams.

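Unsupervised algorithms operate without teacher guidance. The most common families are:

- Clustering: grouping similar data points, for example with K‑means or hierarchical clustering.
- Dimensionality reduction: compressing many features into fewer dimensions, for example with principal component analysis (PCA).
- Anomaly detection: flagging data points that do not fit the structure found in the rest of the data.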

Because unsupervised learning doesn’t rely on labels, it excels at exploratory analysis and feature learning. However, evaluating unsupervised models is tricky: without ground truth, metrics like silhouette score or within‑cluster sum of squares become proxies for quality. Additionally, models can amplify existing biases if the data distribution is skewed.
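K‑means and the within-cluster sum of squares used to judge it fit in a few lines of plain Python. This is an illustrative implementation, not Clarifai's or any library's. It assumes small in-memory 2‑D points and uses a deterministic farthest-point initialization in place of the usual k-means++ seeding.

```python
def squared_distance(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def mean_point(cluster):
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))


def kmeans(points, k, max_iterations=100):
    # Farthest-point initialization: a simple, deterministic stand-in for k-means++
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(squared_distance(p, c) for c in centroids)))

    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid
        labels = [min(range(k), key=lambda i: squared_distance(p, centroids[i])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            new_centroids.append(mean_point(members) if members else centroids[i])
        if new_centroids == centroids:
            break  # assignments are stable, so the algorithm has converged
        centroids = new_centroids

    # Within-cluster sum of squares (WCSS): a label-free proxy for cluster quality
    wcss = sum(squared_distance(p, centroids[label]) for p, label in zip(points, labels))
    return labels, centroids, wcss


# Hypothetical 2-D listener features; no labels are provided
listeners = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.2), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
labels, centroids, wcss = kmeans(listeners, k=2)
print(labels)          # [0, 0, 0, 1, 1, 1]
print(round(wcss, 2))  # 2.12
```

Comparing WCSS across several values of `k` (the "elbow" heuristic) is one common way to choose the number of clusters when no ground truth exists.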

Consider a streaming service with millions of songs and listening histories. By applying K‑means clustering to users’ play counts and song characteristics (tempo, mood, genre), the service discovers clusters of listeners: indie enthusiasts, classical purists, or hip‑hop fans. Without any labels, the system can automatically create personalized playlists and recommend new tracks that match each listener’s taste. Unsupervised learning becomes the backbone of the service’s recommendation engine.

Answer: Semi‑supervised learning bridges supervised and unsupervised paradigms. It uses a small set of labeled examples alongside a large pool of unlabeled data to train a model more efficiently than purely supervised methods. By combining the strengths of both worlds, semi‑supervised techniques reduce labeling costs while improving accuracy. They are particularly useful in domains like speech recognition or medical imaging, where obtaining labels is expensive or requires expert annotation.

Imagine you have 1,000 labeled images of handwritten digits and 50,000 unlabeled images. Semi‑supervised algorithms can use the labeled set to initialize a model and then iteratively assign pseudo‑labels to the unlabeled examples, gradually improving the model’s confidence. Key techniques include:

The appeal of semi‑supervised learning lies in its cost efficiency: researchers have shown that semi‑supervised models can achieve near‑supervised performance with far fewer labels. However, pseudo‑labels can propagate errors; therefore, careful confidence thresholds and active learning strategies are often employed to select the most informative unlabeled samples.
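Below is a minimal self-training (pseudo-labeling) loop with a confidence threshold. To stay self-contained, it assumes a single numeric feature and a hypothetical nearest-centroid classifier with a distance-based confidence score. A real system would use a proper model and calibrated probabilities.

```python
def fit_centroids(labeled):
    """Nearest-centroid 'model': the mean input value for each label."""
    sums, counts = {}, {}
    for x, label in labeled:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict_with_confidence(centroids, x):
    distances = {label: abs(x - c) for label, c in centroids.items()}
    best = min(distances, key=distances.get)
    total = sum(distances.values())
    # Confidence is high when x is much closer to one centroid than to the others
    confidence = 1.0 if total == 0 else 1.0 - distances[best] / total
    return best, confidence


def self_train(labeled, unlabeled, threshold=0.8, max_rounds=10):
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled)
        confident, remaining = [], []
        for x in pool:
            label, confidence = predict_with_confidence(centroids, x)
            if confidence >= threshold:
                confident.append((x, label))  # accept as a pseudo-label
            else:
                remaining.append(x)           # too ambiguous; leave unlabeled for now
        if not confident:
            break
        labeled.extend(confident)
        pool = remaining
    return fit_centroids(labeled), labeled, pool


labeled = [(0.0, "a"), (10.0, "b")]  # only two human-labeled examples
unlabeled = [1.0, 1.5, 2.2, 8.5, 9.0, 4.5, 5.5]
centroids, labeled_after, still_unlabeled = self_train(labeled, unlabeled)
print(still_unlabeled)  # [4.5, 5.5]
```

The point 2.2 is accepted only in the second round, after the first batch of pseudo-labels has shifted the centroids. Points near the boundary (4.5 and 5.5) never pass the threshold, which is the safeguard against propagating wrong pseudo-labels.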

Developing a speech recognition system for a new language is difficult because transcribed audio is scarce. Semi‑supervised learning tackles this by first training a model on a small set of human‑labeled recordings. The model then transcribes thousands of hours of unlabeled audio, and its most confident transcriptions are used as pseudo‑labels for further training. Over time, the system’s accuracy rivals that of fully supervised models while using only a fraction of the labeled data.

Answer: Reinforcement learning (RL) is a paradigm where an agent interacts with an environment by taking actions and receiving rewards or penalties. Over time, the agent learns a policy that maximizes cumulative reward. RL underpins breakthroughs in game playing, robotics, and operations research. It is unique in that the model learns not from labeled examples but by exploring and exploiting its environment.

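RL formalizes problems as Markov Decision Processes (MDPs) with states, actions, transition probabilities and reward functions. Key components include:

- Agent: the learner that chooses actions.
- Environment: the system the agent interacts with, which responds with new states and rewards.
- Policy: the agent's strategy for choosing an action in each state.
- Reward: the feedback signal whose cumulative sum the agent tries to maximize.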

Popular algorithms include Q‑learning, Deep Q‑Networks (DQN), policy gradient methods and actor–critic architectures. For example, in the famous AlphaGo system, RL combined with Monte Carlo tree search learned to play Go at superhuman levels. RL also powers robotics control systems, recommendation engines, and dynamic pricing strategies.

However, RL faces challenges: sample inefficiency (requiring many interactions to learn), exploration vs. exploitation trade‑offs, and ensuring safety in real‑world applications. Current research introduces techniques like curiosity‑driven exploration and world models—internal simulators that predict environmental dynamics—to tackle these issues.

Consider the classic Taxi Drop‑Off Problem: an agent controlling a taxi must pick up passengers and drop them at designated locations in a grid world. With RL, the agent starts off wandering randomly, collecting rewards for successful drop‑offs and penalties for wrong moves. Over time, it learns the optimal routes. This toy problem illustrates how RL agents learn through trial and error. In real logistics, RL can optimize delivery drones, warehouse robots, or even traffic light scheduling to reduce congestion.
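A toy version of the taxi idea can be written with tabular Q‑learning. This is a simplification, not the actual Taxi environment. It assumes a one-dimensional corridor of five cells with the drop-off point at the right end, a reward of -1 per move and +10 for a drop-off, and illustrative values for the learning rate `alpha`, discount `gamma` and exploration rate `epsilon`.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the drop-off point
ACTIONS = (-1, +1)    # move left or move right


def step(state, action):
    """Toy environment: -1 per move, +10 for reaching the drop-off (episode ends)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 10.0, True
    return next_state, -1.0, False


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the best known action, sometimes explore
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Terminal states have no future value
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update toward reward + discounted value of the best next action
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}
```

Early episodes wander because the agent has no information yet. The +10 reward then propagates backward through the discounted update until "move right" has the highest value in every cell.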

Answer: Deep learning uses multi‑layer neural networks to extract hierarchical features from data. By stacking layers of neurons, deep models learn complex patterns that shallow models cannot capture. This paradigm has revolutionized fields like computer vision, speech recognition, and natural language processing (NLP), enabling breakthroughs such as human‑level image classification and AI language assistants.
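The classic illustration of why stacked layers matter is XOR, which no single linear threshold unit can compute. The sketch below uses hand-set weights rather than training, so it demonstrates representational power rather than learning. A hidden layer builds intermediate features (OR and NAND) that an output neuron combines, and a brute-force check over a grid of single-neuron weights finds no solution.

```python
from itertools import product


def step_activation(z):
    return 1 if z > 0 else 0


def dense_layer(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron followed by a step activation."""
    return [step_activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hand-set (not learned) weights: hidden neurons compute OR and NAND,
# and the output neuron combines them with AND, which yields XOR.
HIDDEN_WEIGHTS, HIDDEN_BIASES = [[1, 1], [-1, -1]], [-0.5, 1.5]
OUTPUT_WEIGHTS, OUTPUT_BIASES = [[1, 1]], [-1.5]


def two_layer_xor(x1, x2):
    hidden = dense_layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return dense_layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


inputs = list(product([0, 1], repeat=2))
xor_targets = [a ^ b for a, b in inputs]

# A single neuron (no hidden layer) cannot reproduce XOR; check a grid of weights and biases
grid = [i / 2 for i in range(-4, 5)]
single_layer_solutions = [
    (w1, w2, b) for w1, w2, b in product(grid, repeat=3)
    if [step_activation(w1 * x1 + w2 * x2 + b) for x1, x2 in inputs] == xor_targets
]

print([two_layer_xor(x1, x2) for x1, x2 in inputs])  # [0, 1, 1, 0]
print(len(single_layer_solutions))                    # 0
```

Deep networks learn such intermediate features from data with backpropagation, using smooth activations instead of hard steps.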

Deep learning extends traditional neural networks by adding numerous layers, enabling the model to learn from raw data. Key architectures include:

Despite their power, deep models demand large datasets and significant compute, raising concerns about sustainability. Researchers note that training compute requirements for state‑of‑the‑art models are doubling every five months, leading to skyrocketing energy consumption. Techniques like batch normalization, residual connections and transfer learning help mitigate training challenges. Clarifai’s platform offers pre‑trained vision models and allows users to fine‑tune them on their own datasets, reducing compute needs.

Suppose you want to build a dog‑breed identification app. Training a CNN from scratch on more than a hundred breeds would be data‑intensive. Instead, you start with a ResNet pre‑trained on millions of images, replace its final layer with one for 120 dog breeds, and fine‑tune it using a few thousand labeled examples. Because the network already encodes generic visual features, a comparatively short training run can reach high accuracy. This is transfer learning at work. Clarifai’s Model Builder provides this workflow via a user‑friendly interface.

Answer: Self‑supervised learning (SSL) is a training paradigm where models learn from unlabeled data by solving proxy tasks—predicting missing words in a sentence or the next frame in a video. Foundation models build on SSL, training large networks on diverse unlabeled corpora to create general-purpose representations. They are then fine‑tuned or instruct‑tuned for specific tasks. Think of them as universal translators: once trained, they adapt quickly to new languages or domains.
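The core trick of SSL is turning unlabeled text into labeled training pairs by hiding words. Everything in this sketch is a toy assumption: whitespace tokenization, a `[MASK]` placeholder, and a count-based "model" that guesses the hidden word from its immediate neighbours. Real foundation models such as BERT learn the same kind of task with deep transformers over large corpora.

```python
from collections import Counter, defaultdict

MASK = "[MASK]"


def make_masked_examples(sentences):
    """Turn unlabeled sentences into (masked_tokens, target_word) training pairs."""
    examples = []
    for sentence in sentences:
        tokens = sentence.split()
        for i, token in enumerate(tokens):
            masked = tokens[:i] + [MASK] + tokens[i + 1:]
            examples.append((masked, token))  # the hidden word becomes the label
    return examples


def context_of(masked):
    i = masked.index(MASK)
    left = masked[i - 1] if i > 0 else "<s>"
    right = masked[i + 1] if i + 1 < len(masked) else "</s>"
    return left, right


def train_fill_in_model(examples):
    # Count which word fills the blank for each (left neighbour, right neighbour) context
    counts = defaultdict(Counter)
    for masked, target in examples:
        counts[context_of(masked)][target] += 1
    return counts


def fill_mask(model, masked):
    candidates = model.get(context_of(masked))
    return candidates.most_common(1)[0][0] if candidates else None


corpus = ["the cat sat on the mat", "the dog sat on the rug", "a cat sat on a chair"]
examples = make_masked_examples(corpus)
model = train_fill_in_model(examples)
print(len(examples))                                          # 18
print(fill_mask(model, "the cat [MASK] on the mat".split()))  # sat
```

No human wrote any of the 18 labels. They all come from the raw text itself, which is what lets SSL scale to very large unlabeled corpora.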

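In SSL, the model creates its own labels by masking parts of the input. Examples include:

- Masked language modeling: predicting missing words in a sentence, as in BERT.
- Next‑frame prediction: predicting the next frame in a video.
- Masked image modeling: masking image patches and predicting their pixel values.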

Foundation models, often with billions of parameters, unify these techniques. They are pre‑trained on mixed data (text, images, code) and then adapted via fine‑tuning or instruction tuning. Advantages include:

However, foundation models raise issues like bias, hallucination, and massive compute demands. In 2023, Clarifai highlighted a scaling law indicating that training compute doubles every five months, challenging the sustainability of large models. Furthermore, adopting generative AI requires caution around data privacy and domain specificity: MIT Sloan notes that 64 % of senior data leaders view generative AI as transformative yet stress that traditional ML remains essential for domain‑specific tasks.

Imagine training a Vision Transformer (ViT) on millions of unlabeled chest X‑rays. By masking random patches and predicting pixel values, the model learns rich representations of lung structures. Once pre‑trained, the foundation model is fine‑tuned to detect pneumonia, lung nodules, or COVID‑19 with only a few thousand labeled scans. The resulting system offers high accuracy, reduces labeling costs and accelerates deployment. Clarifai’s Mesh AI would allow healthcare providers to harness such models securely, with built‑in privacy protections.

Answer: Transfer learning leverages knowledge gained from one task to boost performance on a related task. Instead of training a model from scratch, you start with a pre‑trained network and fine‑tune it on your target data. This approach reduces data requirements, accelerates training, and improves accuracy, particularly when labeled data are scarce. Transfer learning is a backbone of modern deep learning workflows.

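There are two main strategies:

- Feature extraction: keep the pre‑trained network frozen and train only a new classifier on the features it produces.
- Fine‑tuning: continue training some or all of the pre‑trained layers on the target data so they adjust to the new domain.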

Transfer learning is powerful because it cuts training time and data needs. Researchers estimate that it reduces labeled data requirements by 80–90 %. It’s been successful in cross‑domain settings: applying a language model trained on general text to legal documents, or using a vision model trained on natural images for satellite imagery. However, domain shift can cause negative transfer when source and target distributions differ significantly.
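A deliberately tiny example shows the effect of starting from pre-trained parameters. The sketch assumes a one-feature linear model, a hypothetical data-rich source task (`y = 3x + 2`) and a related target task with only three labels (`y = 3x + 2.5`). It compares fine-tuning from the source weights against training from scratch under the same small step budget.

```python
def train_linear(pairs, w=0.0, b=0.0, learning_rate=0.05, steps=100):
    """Gradient descent on mean squared error for f(x) = w * x + b, from a given starting point."""
    n = len(pairs)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        w, b = w - learning_rate * grad_w, b - learning_rate * grad_b
    return w, b


def mse(pairs, w, b):
    return sum((w * x + b - y) ** 2 for x, y in pairs) / len(pairs)


# Hypothetical source task with plenty of labels: y = 3x + 2
source = [(i / 10, 3 * (i / 10) + 2) for i in range(31)]
# Hypothetical related target task with only three labels: y = 3x + 2.5
target = [(x, 3 * x + 2.5) for x in (0.5, 1.5, 2.5)]

pretrained = train_linear(source, steps=2000)
# Same small training budget on the target task, with and without the pre-trained starting point
fine_tuned = train_linear(target, *pretrained, steps=10)
from_scratch = train_linear(target, steps=10)

print(f"fine-tuned MSE:   {mse(target, *fine_tuned):.3f}")
print(f"from-scratch MSE: {mse(target, *from_scratch):.3f}")
```

Under the same budget, the warm-started model ends with a lower target-task error because it starts close to a good solution. If the source and target relationships were unrelated, that head start could vanish, which is the negative-transfer risk described above.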

A manufacturer wants to detect defects in machine parts. Instead of labeling tens of thousands of new images, engineers use a pre‑trained ResNet as a feature extractor and train a classifier on a few hundred labeled photos of defective and non‑defective parts. They then fine‑tune the network to adjust to the specific textures and lighting in their factory. The solution reaches production faster and with lower annotation costs. Clarifai’s Model Builder makes this process straightforward through a graphical interface.

Answer: Federated learning trains models across decentralized devices while keeping raw data on the device. Instead of sending data to a central server, each device trains a local model and shares only model updates (such as gradients or updated weights). The central server aggregates these updates to form a global model. This approach preserves privacy, reduces latency, and enables personalization at the edge. Edge AI extends this concept by running inference locally, enabling smart keyboards, wearable devices and autonomous vehicles.
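The aggregation step can be sketched in plain Python. This toy follows the federated averaging (FedAvg) pattern under simplifying assumptions: a one-feature linear model, two hypothetical clients with noise-free data from different input ranges, a few local gradient steps per round, and a server that averages client weights in proportion to their example counts. Only parameters cross the client boundary. Secure aggregation, client sampling and real communication are omitted.

```python
def local_training(weights, data, learning_rate=0.05, epochs=5):
    """Runs on a client: a few gradient steps on its own data, starting from the global model."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - learning_rate * grad_w, b - learning_rate * grad_b
    return w, b


def federated_averaging(client_datasets, rounds=500):
    global_model = (0.0, 0.0)
    total_examples = sum(len(data) for data in client_datasets)
    for _ in range(rounds):
        # Each client trains locally; only (w, b) and a sample count leave the device
        updates = [(local_training(global_model, data), len(data)) for data in client_datasets]
        # Server: average the client models, weighted by each client's number of examples
        w = sum(model[0] * n for model, n in updates) / total_examples
        b = sum(model[1] * n for model, n in updates) / total_examples
        global_model = (w, b)
    return global_model


# Two hypothetical clients whose private data cover different input ranges of y = 2x + 1
client_a = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0)]
client_b = [(x, 2 * x + 1) for x in (2.0, 2.5, 3.0, 3.5)]
w, b = federated_averaging([client_a, client_b])
print(f"global model: f(x) = {w:.2f} * x + {b:.2f}")  # global model: f(x) = 2.00 * x + 1.00
```

With real, noisy and heterogeneous client data, the averaged model would not match each client exactly. That gap is one reason non-identical data distributions are a known difficulty for federated learning.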

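Federated learning typically works through the federated averaging (FedAvg) algorithm: each client trains the model locally, and the server computes an average of the client updates, weighted by the number of training examples each client holds. Key benefits include:

- Privacy: raw data stay on the device; only model updates are shared.
- Lower latency: predictions can be computed locally rather than waiting on a round trip to a server.
- Personalization: local training lets the model adapt to each user or site.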

However, federated learning faces obstacles:

Edge AI leverages these principles for on‑device inference. Small language models (SLMs) and quantized neural networks allow sophisticated models to run on phones or tablets, as highlighted by researchers. European initiatives promote small and sustainable models to reduce energy consumption.

Imagine a consortium of hospitals wanting to build a predictive model for early sepsis detection. Due to privacy laws, patient data cannot be centralized. Federated learning enables each hospital to train a model locally on their patient records. Model updates are aggregated to improve the global model. No hospital shares raw data, yet the collaborative model benefits all participants. On the inference side, doctors use a tablet with an SLM that runs offline, delivering predictions during patient rounds. Clarifai’s mobile SDK facilitates such on‑device inference.

Answer: Generative AI models create new content—text, images, audio, video or code—by learning patterns from existing data. Agentic systems build on generative models to automate complex tasks: they plan, reason, use tools and maintain memory. Together, they represent the next frontier of AI, enabling everything from digital art and personalized marketing to autonomous assistants that coordinate multi‑step workflows.

Generative models include:

Retrieval‑Augmented Generation (RAG) enhances generative models by integrating vector databases. When the model needs factual grounding, it retrieves relevant documents and conditions its generation on those passages. According to research, 28 % of organizations currently use vector databases and 32 % plan to adopt them. Clarifai’s Vector Store module supports RAG pipelines, enabling clients to build knowledge‑driven chatbots.
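The retrieve-then-generate flow can be sketched without any external services. A bag-of-words `Counter` stands in for a learned embedding model, and an in-memory list stands in for a vector database such as Clarifai's Vector Store. `ToyVectorStore` and `build_grounded_prompt` are hypothetical names, and the call to a generative model is left out.

```python
import math
from collections import Counter


def embed(text):
    # Toy "embedding": bag-of-words counts. A real pipeline would call a learned embedding model.
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database: stores (vector, document) pairs."""

    def __init__(self):
        self.entries = []

    def add(self, document):
        self.entries.append((embed(document), document))

    def search(self, query, k=2):
        query_vector = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine_similarity(query_vector, e[0]), reverse=True)
        return [document for _, document in ranked[:k]]


def build_grounded_prompt(store, question, k=2):
    # Retrieval step: condition the generator on the most relevant passages
    passages = store.search(question, k)
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
for doc in ["the museum opens at 9 am on weekdays",
            "the hotel pool closes at 10 pm",
            "airport shuttles leave every 30 minutes"]:
    store.add(doc)

prompt = build_grounded_prompt(store, "when does the museum open", k=1)
print(prompt)
```

The returned prompt would then be sent to a generative model. Swapping `embed` for a real embedding model and the list for a vector database keeps the same structure.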

Agentic systems orchestrate generative models, memory and external tools. They plan tasks, call APIs, update context and iterate until they reach a goal. Use cases include code assistants, customer support agents, and automated marketing campaigns. Agentic systems demand guardrails to prevent hallucinations, maintain privacy and respect intellectual property.

Generative AI adoption is accelerating: by 2026, up to 70 % of organizations are expected to employ generative AI, with cost reductions of around 57 %. Yet experts caution that generative AI should complement rather than replace traditional ML, especially for domain‑specific or sensitive tasks.

Imagine an online travel platform that uses an agentic system to plan user itineraries. The system uses a language model to chat with the user about preferences (destinations, budget, activities), a retrieval component to access reviews and travel tips from a vector store, and a booking API to reserve flights and hotels. The agent tracks user feedback, updates its knowledge base and offers real‑time recommendations. Clarifai’s Mesh AI and Vector Store provide the backbone for such an assistant, while built‑in guardrails enforce ethical responses and data compliance.

Answer: As ML systems impact high‑stakes decisions—loan approvals, medical diagnoses, hiring—the need for transparency, fairness and accountability grows. Explainable AI (XAI) methods shed light on how models make predictions, while ethical frameworks ensure that ML aligns with human values and regulatory standards. Without them, AI risks perpetuating biases or making decisions that harm individuals or society.

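Explainable AI encompasses methods that make model decisions understandable to humans. Techniques include:

- SHAP (SHapley Additive exPlanations): attributes an individual prediction to each input feature using Shapley values from cooperative game theory.
- LIME (Local Interpretable Model‑agnostic Explanations): fits a simple, interpretable surrogate model around a single prediction to approximate the model's local behavior.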

On the ethical front, concerns include bias, fairness, privacy, accountability and transparency. The EU AI Act mandates risk assessments, data provenance, and human oversight for high‑risk systems, while the U.S. Blueprint for an AI Bill of Rights, a non‑binding framework, recommends similar safeguards. Ethical guidelines emphasize diversity in training data, fairness audits, and ongoing monitoring.

Clarifai supports ethical AI through features like model monitoring, fairness dashboards and data drift detection. Users can log inference requests, inspect performance across demographic groups and adjust thresholds or re‑train as necessary. The platform also offers safe content filters for generative models.
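One simple check behind "inspect performance across demographic groups" is to compare selection rates. This sketch is a generic illustration, not Clarifai's dashboard logic. It assumes logged binary decisions with a group attribute and computes the ratio of the lowest to the highest selection rate. The 0.8 "four-fifths" rule of thumb from U.S. employment guidance serves as an example threshold.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (e.g., 'shortlisted') per demographic group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    # Lowest group rate divided by the highest; 1.0 means equal selection rates
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical logged model decisions (1 = selected) and group membership
decisions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2))  # 0.33
if ratio < 0.8:         # four-fifths rule of thumb; choose thresholds to suit your context
    print("Flag for review: selection rates differ substantially across groups")
```

Selection rate is only one fairness lens. Depending on the use case, comparing error rates (false positives and false negatives) per group may matter more.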

Imagine an HR department uses an ML model to shortlist job applicants. To ensure fairness, they implement SHAP analysis to identify which features (education, years of experience, etc.) impact predictions. They notice that graduates from certain universities receive consistently higher scores. After a fairness audit, they adjust the model and include additional demographic data to counteract bias. They also deploy a monitoring system that flags potential drift over time, ensuring the model remains fair. Clarifai’s monitoring tools make such audits accessible without deep technical expertise.

Answer: AutoML (Automated Machine Learning) aims to automate the selection of algorithms, architectures and hyper‑parameters. Meta‑learning (“learning to learn”) takes this a step further, enabling models to adapt rapidly to new tasks with minimal data. These technologies democratize AI by reducing the need for deep expertise and accelerating experimentation.

AutoML tools search across model architectures and hyper‑parameters to find high‑performing combinations. Strategies include grid search, random search, Bayesian optimization, and evolutionary algorithms. Neural architecture search (NAS) automatically designs network structures tailored to the problem.
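Random search, one of the strategies listed above, is straightforward to sketch. The objective here is a made-up function standing in for "train with these settings and return validation loss". The parameter names `learning_rate` and `max_depth` and their ranges are illustrative assumptions.

```python
import math
import random


def validation_loss(learning_rate, max_depth):
    # Hypothetical stand-in for "train a model with these settings and score it on held-out data";
    # this made-up surface is lowest near learning_rate = 0.01 and max_depth = 6.
    return (math.log10(learning_rate) + 2) ** 2 + 0.1 * (max_depth - 6) ** 2


SEARCH_SPACE = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, 0),  # log-uniform between 1e-4 and 1
    "max_depth": lambda rng: rng.randint(2, 10),           # integer, inclusive bounds
}


def random_search(objective, space, trials=50, seed=0):
    rng = random.Random(seed)
    best_loss, best_params = math.inf, None
    for _ in range(trials):
        # Draw one candidate configuration independently from each parameter's distribution
        params = {name: sample(rng) for name, sample in space.items()}
        loss = objective(**params)
        if loss < best_loss:
            best_loss, best_params = loss, params
    return best_loss, best_params


best_loss, best_params = random_search(validation_loss, SEARCH_SPACE)
print(best_params, round(best_loss, 3))
```

Sampling the learning rate on a log scale is a common choice because useful values often span several orders of magnitude. Bayesian optimization would replace the independent draws with a model of which regions look promising.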

Meta‑learning techniques train models on a distribution of tasks so they can quickly adapt to a new task with few examples. Methods such as Model‑Agnostic Meta‑Learning (MAML) and Reptile optimize for rapid adaptation, while contextual bandits integrate reinforcement learning with few‑shot learning.

Benefits of AutoML and meta‑learning include accelerated prototyping, reduced human bias in model selection, and greater accessibility for non‑experts. However, these systems require significant compute and may produce less interpretable models. Clarifai’s low‑code Model Builder offers AutoML features, enabling users to build and deploy models with minimal configuration.

A telecom company wants to predict customer churn but lacks ML expertise. By leveraging an AutoML tool, they upload their dataset and let the system explore various models and hyper‑parameters. The AutoML engine surfaces the top three models, including a gradient boosting machine with tuned hyper‑parameters. They deploy the model with Clarifai’s Model Builder, which monitors performance and retrains as necessary. Without deep ML knowledge, the company quickly implements a robust churn predictor.

Answer: Active learning selects the most informative samples for labeling, minimizing annotation costs. Online and continual learning allow models to learn incrementally from streaming data without retraining from scratch. These approaches are vital when data evolves over time or labeling resources are limited.

Active learning involves a model querying an oracle (e.g., a human annotator) for labels on data points with high uncertainty. By focusing on uncertain or diverse samples, active learning reduces the number of labeled examples needed to reach a desired accuracy.
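Uncertainty sampling is a common way to choose which points go to the oracle. In this sketch, the "current model" is a hypothetical logistic curve standing in for a trained classifier, and uncertainty is a simple margin-based score: one minus the gap between the two class probabilities.

```python
import math


def current_model(x):
    # Hypothetical current classifier: P(fraud | score x), a logistic curve centred at x = 5
    return 1 / (1 + math.exp(-(x - 5.0)))


def margin_uncertainty(p):
    # 1 minus the margin between the two class probabilities: 1.0 at p = 0.5, 0.0 at p = 0 or 1
    return 1 - abs(p - (1 - p))


def select_for_labeling(pool, model, budget):
    """Uncertainty sampling: send the `budget` least certain examples to the human oracle."""
    return sorted(pool, key=lambda x: margin_uncertainty(model(x)), reverse=True)[:budget]


unlabeled_pool = [0.5, 2.0, 4.8, 5.1, 7.5, 9.0]
queries = select_for_labeling(unlabeled_pool, current_model, budget=2)
print(queries)  # [5.1, 4.8]
```

The two points nearest the decision boundary are queried. Confidently classified points such as 0.5 and 9.0 would add little new information per label.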

Online learning updates model parameters on a per‑sample basis as new data arrives, making it suitable for streaming scenarios such as financial markets or IoT sensors.
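A per-sample update loop can be sketched with stochastic gradient descent on a linear model. The stream, its two regimes (the relationship changes halfway through) and the learning rate are assumptions for illustration. Each example is used once, as it arrives, and the model follows the change without retraining from scratch.

```python
import random


class OnlineLinearRegressor:
    """Updates its parameters one example at a time (stochastic gradient descent on squared error)."""

    def __init__(self, n_features, learning_rate=0.05):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def update(self, x, y):
        error = self.predict(x) - y
        # Gradient of (prediction - y)^2 for this single example
        self.w = [wi - self.learning_rate * 2 * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.learning_rate * 2 * error


def data_stream(rng, n, true_w, true_b):
    """Hypothetical sensor stream: yields (features, target) pairs one at a time."""
    for _ in range(n):
        x = [rng.random(), rng.random()]
        yield x, sum(w * xi for w, xi in zip(true_w, x)) + true_b


rng = random.Random(0)
model = OnlineLinearRegressor(n_features=2)
# The relationship drifts halfway through the stream; the model keeps adapting
for x, y in data_stream(rng, 10_000, true_w=[3.0, -2.0], true_b=0.5):
    model.update(x, y)
print("after first regime:", [round(w, 2) for w in model.w], round(model.b, 2))
for x, y in data_stream(rng, 10_000, true_w=[-1.0, 2.0], true_b=1.0):
    model.update(x, y)
print("after drift:", [round(w, 2) for w in model.w], round(model.b, 2))
```

The same adaptivity causes the problem continual learning addresses. After the drift, this model has no memory of the first regime.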

Continual learning (or lifelong learning) trains models sequentially on tasks without forgetting previous knowledge. Techniques like Elastic Weight Consolidation (EWC) and memory replay mitigate catastrophic forgetting, where the model loses performance on earlier tasks when trained on new ones.
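For reference, EWC is usually written (Kirkpatrick et al., 2017) as the new task's loss plus a quadratic penalty that anchors the parameters that mattered for an earlier task:

$$
\mathcal{L}(\theta) = \mathcal{L}_B(\theta) + \sum_i \frac{\lambda}{2} F_i \left(\theta_i - \theta^{*}_{A,i}\right)^2
$$

Here $\mathcal{L}_B$ is the loss on the new task B and $\theta^{*}_{A}$ are the parameters learned on the earlier task A. $F_i$ is the diagonal of the Fisher information, estimating how important parameter $i$ was for task A. $\lambda$ sets how strongly old knowledge is protected relative to learning the new task.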

Applications include real‑time fraud detection, personalized recommendation systems that adapt to user behavior, and robotics where agents must operate in dynamic environments.

Imagine a credit card fraud detection model that must adapt to new scam patterns. Using active learning, the model highlights suspicious transactions with low confidence and asks fraud analysts to label them. These new labels are incorporated via online learning, updating the model in near real time. To ensure the system doesn’t forget past patterns, a continual learning mechanism retains knowledge of previous fraud schemes. Clarifai’s pipeline tools support such continuous training, integrating new data streams and re‑training models on the fly.

Answer: The ML landscape continues to evolve rapidly. Emerging topics like world models, small language models (SLMs), multimodal creativity, autonomous agents, edge intelligence, and AI for social good will shape the next decade. Staying informed about these trends helps organizations future‑proof their strategies.

World models and digital twins: Inspired by reinforcement learning research, world models allow agents to learn environment dynamics from video and simulation data, enabling more efficient planning and better safety. Digital twins create virtual replicas of physical systems for optimization and testing.

Small language models (SLMs): These compact models are optimized for efficiency and deployment on consumer devices. They consume fewer resources while maintaining strong performance.

Multimodal and generative creativity: Models that process text, images, audio and video simultaneously enable richer content generation. Diffusion models and multimodal transformers continue to push boundaries.

Autonomous agents: Beyond simple chatbots, agents with planning, memory and tool use capabilities are emerging. They integrate RL, generative models and vector databases to execute complex tasks.

Edge & federated advancements: The intersection of edge computing and AI continues to evolve, with SLMs and federated learning enabling smarter devices.

Explainable and ethical AI: Regulatory pressure and public concern drive investment in transparency, fairness and accountability.

AI for social good: Research highlights the importance of applying AI to health, environmental conservation, and humanitarian efforts.

Envision a smart city that maintains a digital twin: a virtual model of its infrastructure, traffic and energy use. World models simulate pedestrian and vehicle flows, optimizing traffic lights and reducing congestion. Edge devices like smart cameras run SLMs to process video locally, while federated learning ensures privacy for residents. Agents coordinate emergency responses and infrastructure maintenance. Clarifai collaborates with city planners to provide AI models and monitoring tools that underpin this digital ecosystem.

Answer: Selecting the right ML type depends on your data, problem formulation and constraints. Use supervised learning when you have labeled data and need straightforward predictions. Unsupervised and semi‑supervised learning help when labels are scarce or costly. Reinforcement learning is suited for sequential decision making. Deep learning excels in high‑dimensional tasks like vision and language. Transfer learning reduces data requirements, while federated learning preserves privacy. Generative AI and agents create content and orchestrate tasks, but require careful guardrails. The decision guide below helps map problems to paradigms.

Answer: Machine learning permeates industries—from healthcare and finance to manufacturing and marketing. Each ML type powers distinct solutions: supervised models detect disease from X‑rays; unsupervised algorithms segment customers; semi‑supervised methods tackle speech recognition; reinforcement learning optimizes supply chains; generative AI creates personalized content. Real‑world case studies illuminate how organizations leverage the right ML paradigm to solve their unique problems.

Answer: The field of machine learning evolves quickly. In recent years, research news has covered clarifications about ML model types, the rise of small language models, ethical and regulatory developments, and new training paradigms. Staying informed ensures that practitioners and business leaders make decisions based on the latest evidence.

Q1: Which type of machine learning should I start with as a beginner?

Start with supervised learning. It’s intuitive, has abundant educational resources, and is applicable to a wide range of problems with labeled data. Once comfortable, explore unsupervised and semi‑supervised methods to handle unlabeled datasets.

Q2: Is deep learning always better than traditional ML algorithms?

No. Deep learning excels in complex tasks like image and speech recognition but requires large datasets and compute. For smaller datasets or tabular data, simpler algorithms (e.g., decision trees, linear models) may perform better and offer greater interpretability.

Q3: How do I ensure my ML models are fair and unbiased?

Implement fairness audits during model development. Use techniques like SHAP or LIME to understand feature contributions, monitor performance across demographic groups, and retrain or adjust thresholds if biases appear. Clarifai provides tools for monitoring and fairness assessment.

Q4: Can I use generative AI safely in my business?

Yes, but adopt a responsible approach. Use retrieval‑augmented generation to ground outputs in factual sources, implement guardrails to prevent inappropriate content, and maintain human oversight. Follow domain regulations and privacy requirements.

Q5: What’s the difference between AutoML and transfer learning?

AutoML automates the process of selecting algorithms and hyper‑parameters for a given dataset. Transfer learning reuses a pre‑trained model’s knowledge for a new task. You can combine both by using AutoML to fine‑tune a pre‑trained model.

Q6: How will emerging trends like world models and SLMs impact AI development?

World models will enhance planning and simulation capabilities, particularly in robotics and autonomous systems. SLMs will enable more efficient deployment of AI on edge devices, expanding access to AI in resource‑constrained environments.

Machine learning encompasses a diverse ecosystem of paradigms, each suited to different problems and constraints. From the predictive precision of supervised learning to the creative power of generative models and the privacy protections of federated learning, understanding these types empowers practitioners to choose the right tool for the job. As the field advances, explainability, ethics and sustainability become paramount, and emerging trends like world models and small language models promise new capabilities and challenges.

To explore these methods hands‑on, consider experimenting with Clarifai’s platform. The company offers pre‑trained models, low‑code tools, vector stores, and agent orchestration frameworks to help you build AI solutions responsibly and efficiently. Continue learning by subscribing to research newsletters, attending conferences and staying curious. The ML journey is just beginning—and with the right knowledge and tools, you can harness AI to create meaningful impact.

© 2026 Clarifai, Inc.