Data is the fuel powering modern AI, but raw data alone isn’t enough. To build intelligent systems, you need high-quality labeled data—the cornerstone of machine learning success. In this guide, we’ll explore what data labeling is, why it matters, and how Clarifai’s Platform simplifies every step of the process, empowering businesses to create AI that works.

Data labeling is the process of tagging raw data — such as images, text, audio, or video — with meaningful labels to help AI models understand and interpret information. It serves as the backbone of machine learning, especially in supervised learning, where labeled data acts as the essential "teacher" guiding algorithms to recognize patterns and make accurate predictions.

Think of data labeling like teaching a child to recognize objects: you show them a picture of a dog, say “dog,” and repeat the process until they learn. Similarly, by tagging data with concepts like “dog,” “apple,” or “tumor,” AI models learn to identify patterns and make decisions based on that context.

Without high-quality labeled data, even the most advanced AI models struggle to produce reliable predictions. The accuracy, consistency, and richness of labeled data directly impact how quickly a model learns and how well it performs. Poor labels lead to poor models — making data labeling a mission-critical step in AI development.

Real-world applications of data labeling include:

- Medical imaging models trained to identify tumors and healthy tissues in diagnostic scans.

- Customer service chatbots that detect frustrated sentiment and prioritize urgent requests.

- Autonomous vehicles that rely on labeled data to recognize pedestrians, traffic signs, and road lanes for safe navigation.

No matter the industry, data labeling bridges the gap between raw data and actionable intelligence — turning information into insights that power AI-driven solutions.

The data labeling process follows a structured lifecycle to transform raw data into high-quality training datasets. Each step plays a crucial role in preparing data that enables machine learning models to learn and make accurate predictions.

The first step in the data labeling process is collecting raw data from various sources. This data can come from internal databases, APIs, IoT sensors, cameras, or third-party applications. The type of data collected depends on the specific AI project — for example, images for computer vision models, audio recordings for speech recognition, or text documents for natural language processing.

Once collected, the data is ingested into a centralized system where it can be organized and prepared for labeling. The ingestion process often involves cleaning the data, standardizing formats, and filtering out irrelevant or duplicate entries.
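As a rough illustration of the cleaning and de-duplication described above, the sketch below normalizes text records and drops empty or repeated entries. The function name `clean_records` and the choice to lowercase text are assumptions made for this example; they are not part of any Clarifai API, and real pipelines may need gentler normalization.

```python
import hashlib


def clean_records(records):
    """Normalize whitespace and case, then drop empty and duplicate records."""
    seen = set()
    cleaned = []
    for text in records:
        # Collapse runs of whitespace and lowercase (an illustrative choice).
        normalized = " ".join(text.split()).lower()
        if not normalized:
            continue  # filter out empty entries
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # filter out duplicates
        seen.add(digest)
        cleaned.append(normalized)
    return cleaned


raw = ["Great product!", "  great   product! ", "", "Slow delivery"]
print(clean_records(raw))
```

Hashing the normalized text keeps the duplicate check cheap in memory even when individual records are large.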

After ingestion, the raw data is ready for annotation and labeling. This step involves assigning descriptive tags or annotations that help machine learning models understand the content of the data. The labeling method used depends on the type of data and the complexity of the task.

Common annotation techniques include:

The annotation process can be performed manually by human annotators or accelerated using automated tools that generate initial labels for human review.

Quality control is a critical phase to ensure the accuracy and consistency of labeled data. Even small errors in labeling can significantly impact model performance. Quality control typically involves human review, where multiple annotators validate the labels assigned to data points. In some workflows, consensus-based methods are used, where a label is only accepted if several annotators agree on the outcome. Automated validation tools can also flag discrepancies and outliers for further inspection.
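A consensus rule of the kind mentioned above can be sketched as a simple majority vote. The helper `consensus_label` and its `min_agreement` parameter are hypothetical names for this example, and the tie-handling policy (reject ties) is an assumption rather than a prescribed method.

```python
from collections import Counter


def consensus_label(votes, min_agreement=2):
    """Return the top label if enough annotators chose it; otherwise None."""
    if not votes:
        return None
    ranked = Counter(votes).most_common()
    label, count = ranked[0]
    # Treat a tie between the two most common labels as "no consensus".
    if len(ranked) > 1 and ranked[1][1] == count:
        return None
    return label if count >= min_agreement else None


print(consensus_label(["dog", "dog", "cat"]))
print(consensus_label(["dog", "cat"]))
```

Items that come back as `None` would typically be routed to an additional reviewer instead of being accepted.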

Establishing clear guidelines and quality metrics helps maintain labeling consistency across large datasets. Iterative feedback loops between annotators and quality reviewers further improve accuracy.

Once the labeled data passes quality checks, it is used to train machine learning models. The labeled dataset serves as the foundation for supervised learning algorithms, enabling models to learn patterns and make predictions. During model training, performance metrics such as accuracy, precision, and recall are evaluated against validation data.
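To make the metrics above concrete, here is a minimal sketch that computes accuracy, precision, and recall for one positive class from lists of true and predicted labels. The function name `binary_metrics` and the sample labels are illustrative assumptions.

```python
def binary_metrics(y_true, y_pred, positive):
    """Compute accuracy, precision, and recall for a single positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs) if pairs else 0.0,
        # Precision: of everything predicted positive, how much was right.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Recall: of everything truly positive, how much was found.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


y_true = ["tumor", "tumor", "healthy", "healthy"]
y_pred = ["tumor", "healthy", "healthy", "tumor"]
print(binary_metrics(y_true, y_pred, positive="tumor"))
```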

If the model’s performance falls short, the labeling process may need to be refined. This iterative process involves relabeling certain data points, expanding the dataset, or adjusting labeling guidelines. Continuous iteration between data labeling and model training helps improve model accuracy over time.
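One possible way to pick data points for relabeling, shown here purely as an assumption and not as a method described in this guide, is to look for examples where the trained model confidently disagrees with the existing label. The record fields (`id`, `label`, `predicted`, `confidence`) and the helper `relabel_candidates` are hypothetical.

```python
def relabel_candidates(examples, max_items=2):
    """Return ids of examples whose label disagrees with a confident prediction."""
    disagreements = [e for e in examples if e["label"] != e["predicted"]]
    # Most confident disagreements first: these are the most suspicious labels.
    disagreements.sort(key=lambda e: e["confidence"], reverse=True)
    return [e["id"] for e in disagreements[:max_items]]


examples = [
    {"id": "a", "label": "dog", "predicted": "dog", "confidence": 0.90},
    {"id": "b", "label": "cat", "predicted": "dog", "confidence": 0.95},
    {"id": "c", "label": "dog", "predicted": "cat", "confidence": 0.55},
    {"id": "d", "label": "cat", "predicted": "dog", "confidence": 0.80},
]
print(relabel_candidates(examples))
```

A confident disagreement does not prove the label is wrong; it only marks the item as worth a second human look.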

Selecting the right data labeling method is crucial for building high-quality machine learning models. Different projects require different strategies based on factors like dataset size, complexity, and budget.

Here are the most common data labeling methods and when to use them:

Many AI projects use a hybrid approach, combining automated labeling for speed with human review to maintain quality—striking the right balance between efficiency and accuracy. Choosing the best data labeling method depends on your project’s complexity, scale, and quality requirements.

Data labeling platforms support high-performing AI models by providing accurate annotations for training data. Clarifai's data labeling platform combines automation with human review to deliver high-quality annotations faster and at greater scale. The platform supports various data types such as images, videos, text, and audio, making it versatile for different AI use cases.

Clarifai optimizes the entire data labeling lifecycle with built-in AI assistance, collaborative workflows, and continuous feedback loops — all within a unified platform.

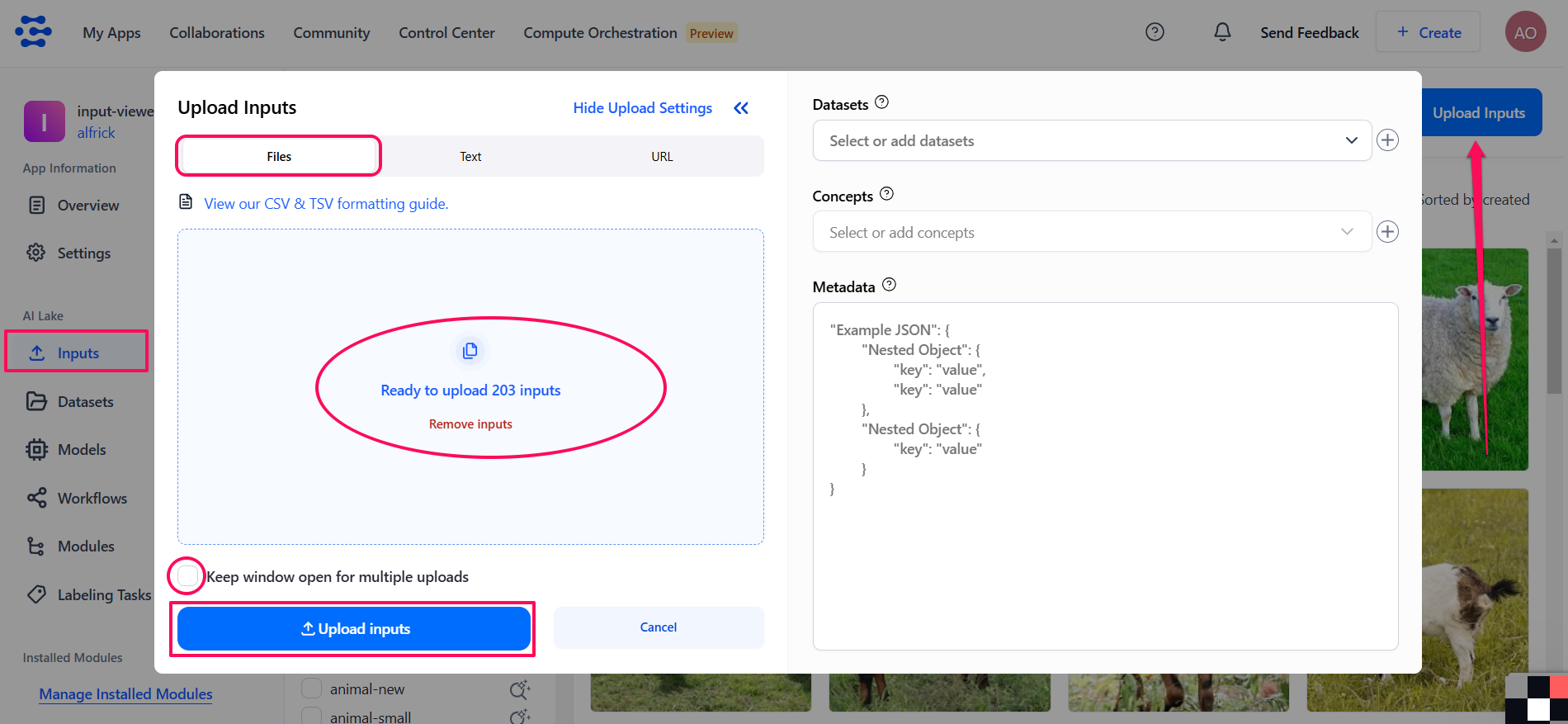

Import and organize your data on the Clarifai Platform to kickstart your AI development journey. Whether you're working with images, videos, text, or audio, the platform provides multiple ways to upload and structure your datasets. You can upload data directly through the UI by dragging and dropping files, or automate the process via API integrations. For more advanced workflows, Clarifai offers SDKs in Python and TypeScript to programmatically upload data from folders, URLs, or CSV files. You can also connect cloud storage platforms like AWS S3 and Google Cloud to sync your data automatically.

The platform supports various data types including images, videos, text, and audio, allowing you to work with diverse datasets in a unified environment. With batch upload capabilities, you can customize the number of files uploaded simultaneously and split large folders into smaller chunks to ensure efficient and reliable data import. The default batch size is 32, but it can be adjusted up to 128 based on your needs.
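The batching behavior described above can be pictured with a small, library-independent sketch. This is not the Clarifai SDK; `make_batches` is a hypothetical helper, and only the default of 32 and the ceiling of 128 come from the text above.

```python
def make_batches(items, batch_size=32, max_batch_size=128):
    """Split a list of files into consecutive chunks of at most batch_size."""
    if not 1 <= batch_size <= max_batch_size:
        raise ValueError(f"batch_size must be between 1 and {max_batch_size}")
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


files = [f"image_{n}.png" for n in range(70)]
print([len(batch) for batch in make_batches(files)])
```

Smaller batches mean a failed request affects fewer files, while larger batches reduce the number of round trips.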

Clarifai enables you to enrich your data with annotations such as bounding boxes, masks, and text labels to provide more context for your AI models. You can also monitor the status of your uploads in real time and automatically retry failed uploads from log files to prevent data loss. Explore the full potential of data upload and annotation here.

Learn more about how you can upload data via the SDK here.

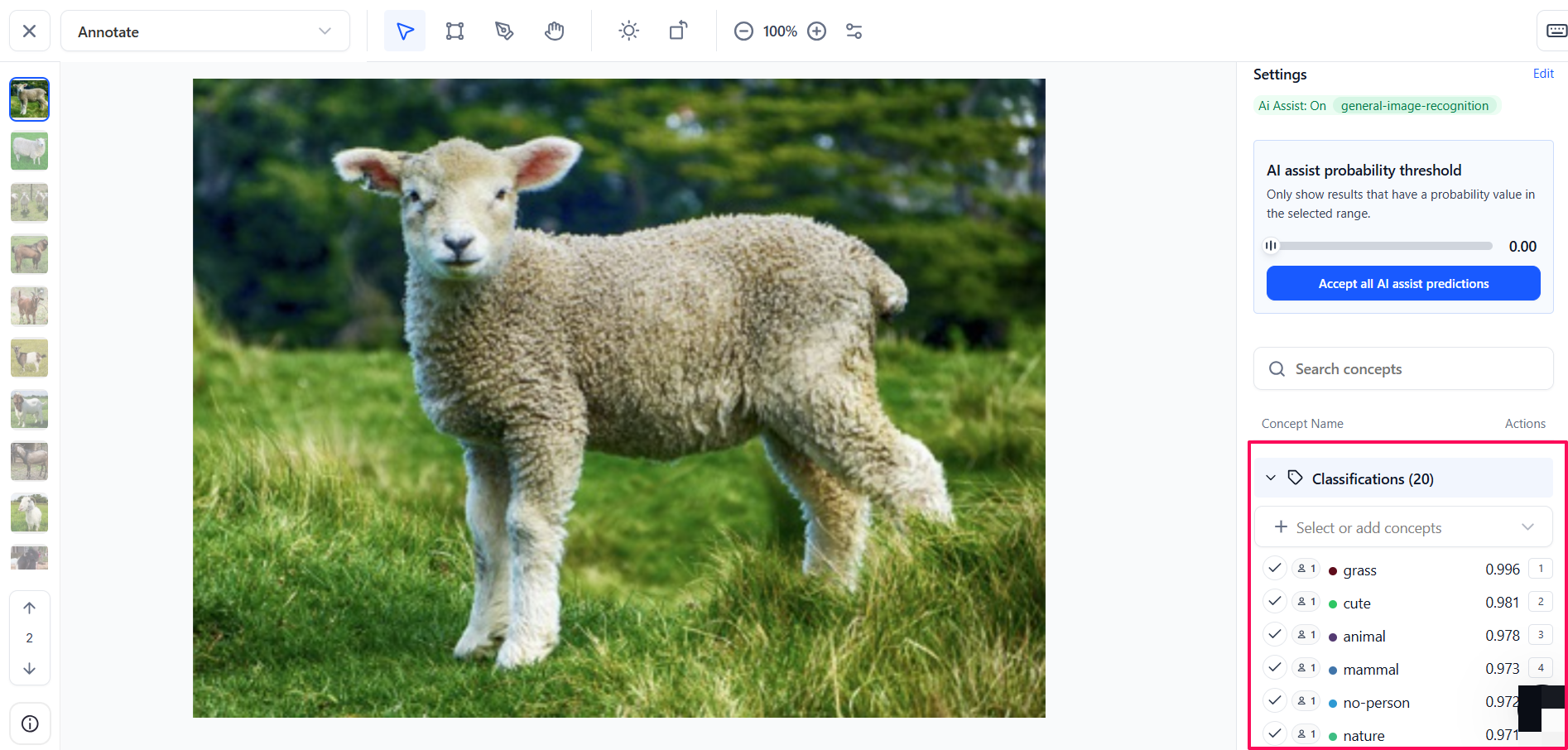

AI Assist accelerates manual labeling tasks by using model predictions to pre-label data automatically. Users can select any pre-trained model or custom model from Clarifai's model library to generate annotation suggestions, which can then be reviewed, corrected, and accepted.

The AI Assist workflow follows these steps:

AI Assist helps reduce annotation time significantly while ensuring consistent labeling across large datasets. The probability threshold feature gives users control over the balance between automation and accuracy.
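The effect of a probability threshold can be sketched without any platform code: a higher threshold shows fewer, more confident suggestions. The function `suggestions_above` and the sample predictions are illustrative assumptions, not Clarifai's implementation.

```python
def suggestions_above(predictions, threshold):
    """Keep only (concept, probability) pairs at or above the threshold."""
    return [(concept, prob) for concept, prob in predictions if prob >= threshold]


predictions = [("dog", 0.97), ("cat", 0.62), ("fox", 0.31)]
for threshold in (0.5, 0.9):
    print(threshold, suggestions_above(predictions, threshold))
```

Lowering the threshold surfaces more suggestions for the annotator to accept or reject; raising it leaves more labeling to be done by hand.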

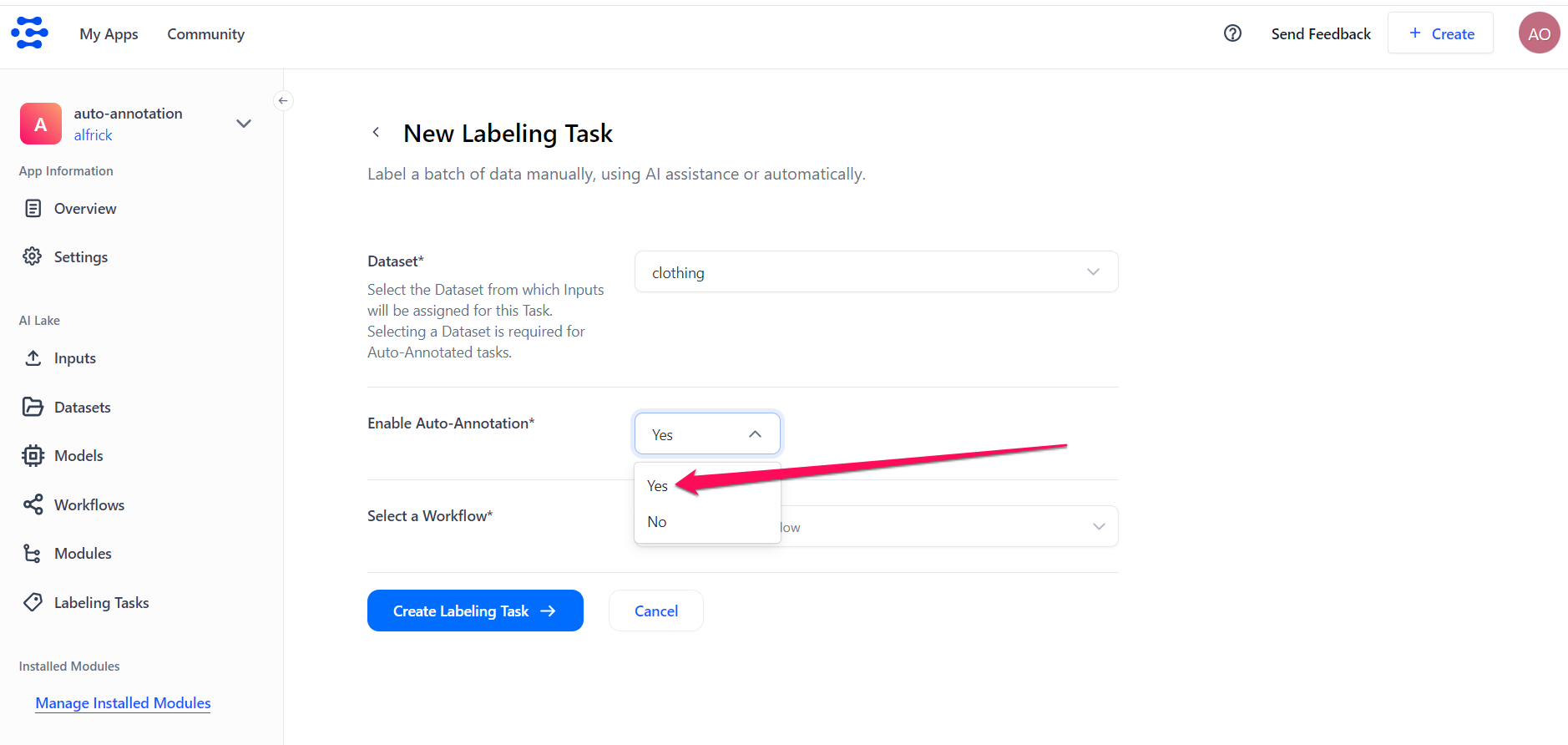

Auto Annotation enables fully automated data labeling by integrating model predictions into custom workflows. It automatically applies labels to inputs when model confidence scores meet a predefined threshold. If confidence scores fall below the threshold, the inputs are flagged for human review.

Auto Annotation workflows can be configured with:

For example, in an object detection workflow, Clarifai can automatically label detected objects with ANNOTATION_SUCCESS status if the confidence score is greater than 95%. If the score is lower, the annotation is marked as ANNOTATION_AWAITING_REVIEW for further validation.
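The routing rule in this example reduces to a single comparison. The sketch below uses the two status names and the 95% threshold from the paragraph above; the function `annotation_status` itself is a hypothetical stand-in for a configured workflow, not a Clarifai API call.

```python
def annotation_status(confidence, threshold=0.95):
    """Auto-accept predictions strictly above the threshold; queue the rest."""
    if confidence > threshold:
        return "ANNOTATION_SUCCESS"
    return "ANNOTATION_AWAITING_REVIEW"


for score in (0.97, 0.95, 0.60):
    print(score, annotation_status(score))
```

Note that a score exactly equal to the threshold is sent to review here, matching the "greater than 95%" wording.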

Auto Annotation helps scale labeling projects while maintaining quality control through built-in review pipelines.

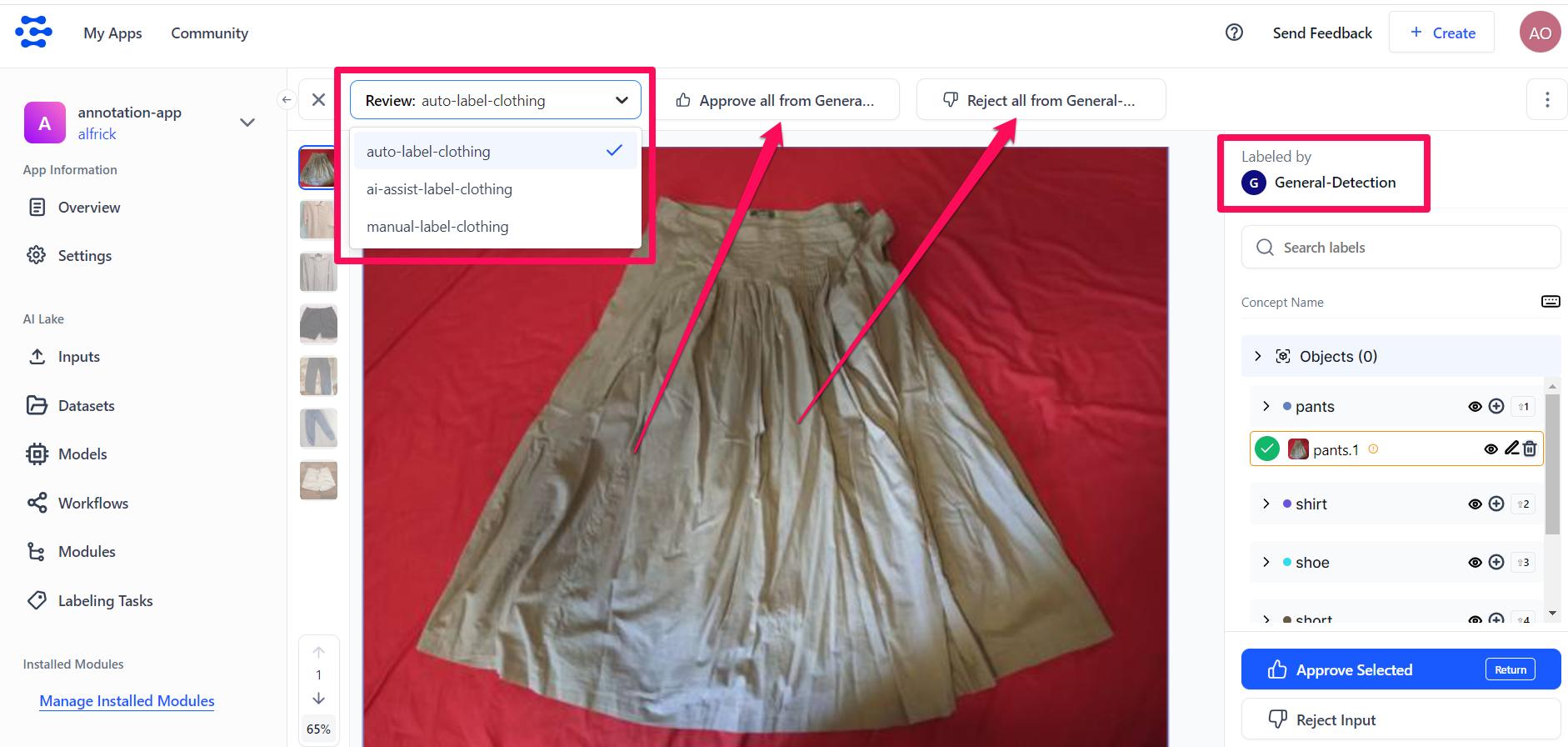

Clarifai's platform ensures data quality through multi-stage review pipelines that combine human validation with AI-based scoring. Users can configure custom workflows where annotations go through multiple reviewers before being finalized.

The AI automatically compares annotations from different reviewers to detect inconsistencies and assign quality scores. If annotations don't meet predefined quality thresholds, they are flagged for re-review.
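How reviewer comparisons might translate into a score can be illustrated with a simple pairwise agreement rate. This is an assumed stand-in, not Clarifai's actual scoring method; `agreement_score`, `flag_for_rereview`, and the `min_score` value are hypothetical.

```python
from itertools import combinations


def agreement_score(labels):
    """Fraction of reviewer pairs that assigned the same label."""
    pairs = list(combinations(labels, 2))
    if not pairs:
        return 1.0  # a single reviewer cannot disagree with anyone
    return sum(a == b for a, b in pairs) / len(pairs)


def flag_for_rereview(annotations, min_score=0.6):
    """Return ids of inputs whose agreement score falls below min_score."""
    return [
        item for item, labels in annotations.items()
        if agreement_score(labels) < min_score
    ]


annotations = {
    "img_1": ["dog", "dog", "dog"],
    "img_2": ["dog", "cat", "dog"],
    "img_3": ["dog", "cat", "fox"],
}
print(flag_for_rereview(annotations))
```

For bounding boxes or masks, an overlap measure would replace the exact-match comparison used here.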

Review pipelines can be customized to:

Once data is labeled, users can directly train models within the Clarifai platform using the annotated datasets.

The feedback loop works as follows:

This iterative approach helps models become more accurate over time while reducing the need for manual annotation.

Data labeling is the foundation of creating accurate and intelligent AI models. Clarifai's platform makes the entire AI workflow seamless, from importing data to labeling, model training, and deployment. Whether you're working with images, videos, text, or audio, Clarifai helps you transform raw data into high-quality datasets faster and more efficiently.

Sign up for free today to get started and unlock the full potential of your data with Clarifai. Join our Discord channel to connect with the community, share ideas, and get your questions answered!

© 2026 Clarifai, Inc.