The company is a dedicated photo and video sharing service with over 100 million registered members. Their users upload over four million images and videos per day from the web, smartphones, and connected digital cameras.

Content moderation

Stock photography

Photography and Video Marketplace

While user-generated content is the company's bread and butter, it also posed a trust and safety risk from users who uploaded illegal or unwanted content. With a continual stream of content flowing in, it was impossible for a team of five human moderators to catch every image that violated the terms of service.

These moderators manually reviewed a queue of randomly selected images drawn from just 1% of the two million images uploaded each day. Not only were they potentially missing 99% of the unwanted content uploaded to the site, but they also suffered from an unrewarding workflow that resulted in low productivity.
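To put the sampling figures above in concrete terms, here is a small back-of-the-envelope calculation. The upload volume, sample rate, and team size come from the text; the even split of the queue across moderators is an assumption made only for illustration.

```python
# Figures stated in the case study.
DAILY_IMAGE_UPLOADS = 2_000_000
SAMPLE_PERCENT = 1
MODERATORS = 5

# Images that reached the manual review queue each day.
sampled = DAILY_IMAGE_UPLOADS * SAMPLE_PERCENT // 100

# Images that no moderator ever looked at.
unreviewed = DAILY_IMAGE_UPLOADS - sampled

# Assumption: the queue was split evenly across the team.
per_moderator = sampled // MODERATORS

print(f"Reviewed per day:      {sampled:,}")
print(f"Never reviewed:        {unreviewed:,}")
print(f"Per moderator per day: {per_moderator:,}")
```

Even at a 1% sample, each moderator would face thousands of images a day under this assumption, while the vast majority of uploads went unchecked.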

Their lead developer looked for a quick and easy way to add machine learning-based image recognition to his tech stack. After ruling out building machine learning in-house because it was too costly and inefficient in the long run, he decided that using a computer vision API would be the best way to validate his idea and go to market quickly. He tested half a dozen computer vision APIs, including Google Cloud Vision and Amazon Rekognition, before deciding that Clarifai offered the best possible solution for their business.

He selected Clarifai based on its superior accuracy, ease of use, completeness of documentation, and the enthusiasm and professionalism of the Clarifai team. He was also excited about the wide range of pre-trained computer vision models Clarifai offered. These included the General Model that recognizes over 11,000 concepts and the Moderation Model that currently recognizes different levels of nudity along with gore and drugs.

With a product team of four, the company implemented a new content moderation workflow using Clarifai in 12 weeks, from concept to internal rollout. Because the new workflow increased productivity, 80% of the human moderation team was able to transition to full-time customer support.

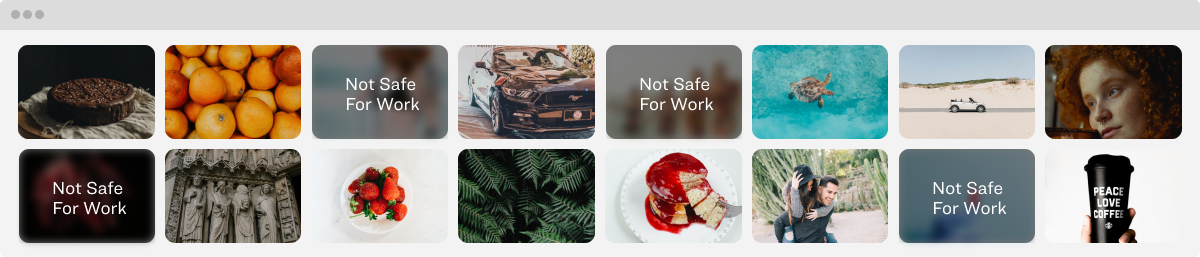

Currently, 100% of the images uploaded to the company's platform each day pass through Clarifai’s NSFW filter in real time. Images flagged as "not safe for work" are routed to the moderation queue, where only one human moderator is required to review the content. Where’s the rest of the moderation team? They’re now doing customer support and making their customers' user experience even better.
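The routing step described above could look roughly like the following sketch. It is not the company's implementation and does not call the real Clarifai API: `ModerationRouter`, `fake_score_nsfw`, and the `NSFW_THRESHOLD` value are all hypothetical names and numbers chosen for illustration. In practice, the scoring function would wrap a call to an NSFW or moderation model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical cutoff; the case study does not state a threshold.
NSFW_THRESHOLD = 0.85


@dataclass
class ModerationRouter:
    """Scores every upload and sends only flagged images to human review."""

    # Any callable that maps an image URL to an NSFW probability in [0, 1].
    score_nsfw: Callable[[str], float]
    threshold: float = NSFW_THRESHOLD
    review_queue: List[str] = field(default_factory=list)
    published: List[str] = field(default_factory=list)

    def handle_upload(self, image_url: str) -> str:
        score = self.score_nsfw(image_url)
        if score >= self.threshold:
            # Flagged: a human moderator makes the final decision.
            self.review_queue.append(image_url)
            return "review"
        # Not flagged: the image goes live without manual review.
        self.published.append(image_url)
        return "published"


def fake_score_nsfw(image_url: str) -> float:
    """Stand-in for a real model call (assumption, for demonstration only)."""
    return 0.97 if "flagged" in image_url else 0.02


router = ModerationRouter(score_nsfw=fake_score_nsfw)
for url in ["https://example.com/cat.jpg", "https://example.com/flagged.jpg"]:
    router.handle_upload(url)

print("Needs review:", router.review_queue)
print("Published:", router.published)
```

Passing the scorer in as a callable keeps the routing logic independent of any particular vision API, so the same queueing code can be tested with a stub and then pointed at a production model.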

© 2026 Clarifai, Inc.