Congratulations! You’ve decided to train a computer vision model for your program. How exciting! But now you have to make some decisions. How are you going to break down all that raw imagery into easily searchable, properly organized content? Where do you even begin?

Before visual recognition can begin, you’re going to need to select the concepts that will make up your taxonomy. Concepts are the labels applied to images to categorize them, so your clients and audiences can easily search for them. They also help filter out unwanted imagery. But when you’re faced with literally everything on the Internet, it can be hard to know where to start.

Don’t worry. It’s not that hard when you remember a few basic ideas:

1) Consider Your Client — And Their Audience

The first thing to assess when deciding which concepts to include in your model is who will be using the program and why. The audience’s demographics and their intent on the site will form the foundation of your concept library and help you come up with the core concepts. Demographics (say, if the program is going to be used by children) will also help you determine whether you need to weed out certain types of imagery.

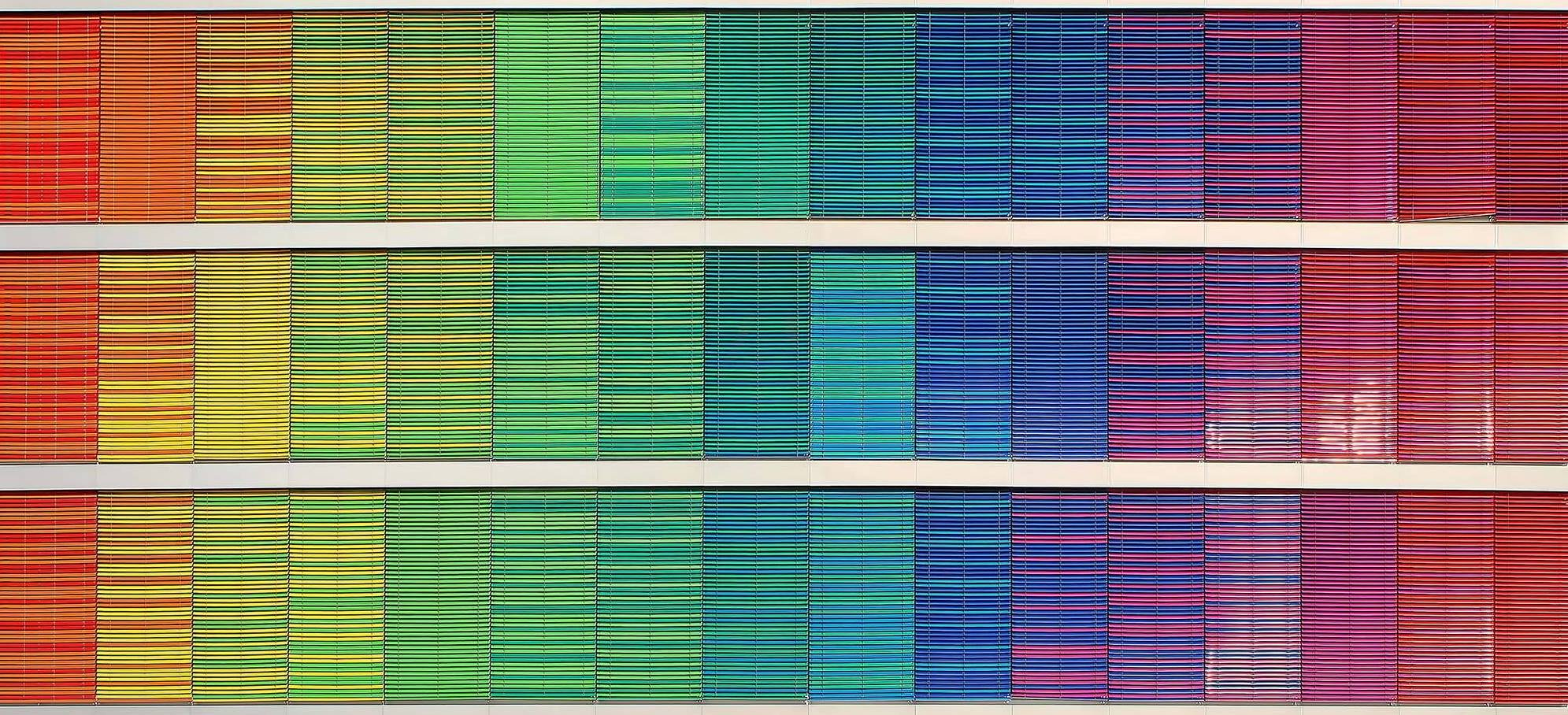

For example, let’s say your program is going to help people find images based on color. If color is your foundational idea, then you can start creating concepts for red, yellow, green, blue, and more. If you’re running a site for birdwatchers, you’ll probably want to start with types of birds.

2) Consider How Specific You Need To Be

Think about how detailed your list of concepts needs to be, and how finely you need to break down your information. Is it going to be enough to label images as simply “red,” or do you need to go deeper? Do you need to divide “red” into “maroon” or “scarlet” or “fuchsia”? Can you get away with just using the concept “finch,” or do you need to get into the different types of finches?

Sometimes it can be tempting to break things down as far as they’ll go to be as precise as possible. Keep in mind, though, that the more concepts the model has, the harder it will be to train, and the more time it will take to get the AI to catch on to what you mean. Keeping a balance of precision and simplicity is important.
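One way to keep your options open is to label finely but train on coarser concepts until you know you need the detail. The sketch below assumes a hypothetical `PARENT` mapping from fine-grained concepts to broader ones; the concept names and the `coarsen` helper are illustrative, not part of any particular platform.

```python
# Hypothetical fine-to-coarse mapping; adjust it to your own taxonomy.
PARENT = {
    "maroon": "red",
    "scarlet": "red",
    "navy": "blue",
}

def coarsen(labels):
    """Replace each fine-grained label with its parent concept, if it has one."""
    return sorted({PARENT.get(label, label) for label in labels})

print(coarsen(["maroon", "scarlet", "green"]))  # ['green', 'red']
```

If you later decide that “maroon” deserves its own concept, you remove it from the mapping and the fine-grained labels are still there in your data.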

3) An “All” Or “None” Concept Can Help A Lot

In your batches of images, your program will likely come across images that have nothing to do with what you’re looking for. If you’re looking for images in color, what can you do with a black-and-white image? If you’re sorting images of birds, what do you do with an image of a bear?

Well, that’s where a concept like “None Of These” can come in handy. It creates a catch-all for images that don’t fit into any of the concepts you’re looking to work with, and makes it easy to exclude irrelevant material.
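As a rough sketch of how a catch-all might be applied when preparing training labels, the snippet below keeps only the tags that belong to your taxonomy and falls back to the catch-all when nothing matches. The `TAXONOMY` set, the `none_of_these` name, and the `label_for_training` helper are all assumptions made for illustration.

```python
TAXONOMY = {"red", "yellow", "green", "blue"}  # hypothetical concept list
NONE_LABEL = "none_of_these"                   # hypothetical catch-all concept

def label_for_training(tags):
    """Keep tags that are in the taxonomy; use the catch-all if none are."""
    kept = sorted(set(tags) & TAXONOMY)
    return kept or [NONE_LABEL]

print(label_for_training(["blue", "sky"]))   # ['blue']
print(label_for_training(["grayscale"]))     # ['none_of_these']
```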

Images can also have more than one label: for example, an image with blue and green can be labeled as both “blue” and “green,” but if an image includes all your colors, it might start getting too complicated and confusing to add all your concepts. In this case, you might want to consider a concept like “multicolor” for images with many colors.
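Here is a minimal sketch of that rule, assuming you pick a cutoff for how many color labels an image can carry before it is treated as “multicolor.” The `max_labels` cutoff of 3 and the `summarize_colors` helper are hypothetical; choose whatever limit suits your data.

```python
def summarize_colors(colors, max_labels=3):
    """Return the image's color labels, or 'multicolor' if there are too many."""
    unique = sorted(set(colors))
    if len(unique) > max_labels:
        return ["multicolor"]
    return unique

print(summarize_colors(["blue", "green"]))                   # ['blue', 'green']
print(summarize_colors(["red", "yellow", "green", "blue"]))  # ['multicolor']
```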

4) You Might Need A Concept For Inappropriate Imagery

It’s not nice to think about, but this is the Internet, and not everything you’ll find out there is appropriate for your needs (or sometimes, for anyone’s!). To avoid a potentially unpleasant encounter for your clients and audiences, consider creating a concept that can help filter out undesirable images and help your program learn what to avoid in the future.

You can use your “none” concept for this purpose, too, but depending on your program, you may want to keep the two separate. If your client wants the option of seeing “none” images but still doesn’t want to see sensitive ones, a dedicated concept is useful. Using our color example, an innocuous but grayscale image could go into your “none” concept, while an inappropriate or troubling image could be placed in a concept with a label like “NSFW.”
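To see why the separation matters, consider a simple display filter. In this sketch, sensitive images are always hidden, while catch-all images are hidden only by default. The label names, the image dictionaries, and the `visible_images` helper are assumptions for illustration.

```python
NONE_LABEL = "none_of_these"  # hypothetical catch-all concept name
NSFW_LABEL = "nsfw"           # hypothetical sensitive-content concept name

def visible_images(images, show_none=False):
    """Return the images a viewer should see.

    Sensitive images are always hidden; catch-all images are hidden
    unless the viewer opts in with show_none=True.
    """
    visible = []
    for image in images:
        labels = set(image["labels"])
        if NSFW_LABEL in labels:
            continue
        if NONE_LABEL in labels and not show_none:
            continue
        visible.append(image)
    return visible

images = [
    {"id": "a", "labels": ["red"]},
    {"id": "b", "labels": [NONE_LABEL]},
    {"id": "c", "labels": [NSFW_LABEL]},
]
print([img["id"] for img in visible_images(images)])                  # ['a']
print([img["id"] for img in visible_images(images, show_none=True)])  # ['a', 'b']
```

If both kinds of image shared a single concept, the opt-in would have to show or hide them together.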

Keep in mind that because visual learning is doing just that — learning — it may require you to keep a human eye on it to better finesse the filtering process.

While it may seem time-consuming, developing the right taxonomy for your model is critical for its success. Get it right, and you’ll reap the benefits of visual recognition and build better, smarter products for your customers.