This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the release notes.

We are introducing the Clarifai Reasoning Engine — a full-stack performance framework built to deliver record-setting inference speed and efficiency for reasoning and agentic AI workloads.

Unlike traditional inference systems that plateau after deployment, the Clarifai Reasoning Engine continuously learns from workload behavior, dynamically optimizing kernels, batching, and memory utilization. This adaptive approach means the system gets faster and more efficient over time, especially for repetitive or structured agentic tasks, without any trade-off in accuracy.

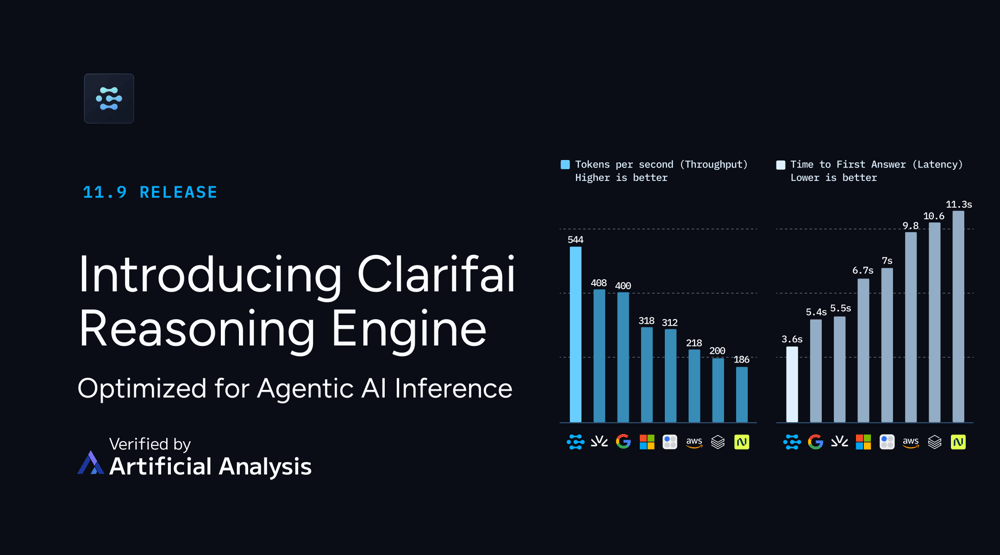

In recent benchmarks by Artificial Analysis on GPT-OSS-120B, the Clarifai Reasoning Engine set new industry records for GPU inference performance:

- 544 tokens/sec throughput: the fastest GPU-based inference measured
- 0.36s time-to-first-token: near-instant responsiveness
- $0.16 per million tokens: the lowest blended cost
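To make those figures concrete, here is a back-of-the-envelope estimate in Python. It is a rough sketch with three simplifying assumptions:

- Decode speed stays constant at the measured throughput after the first token.
- The blended price applies uniformly to every token, input and output alike.
- Nothing else affects timing. In practice, real latency also depends on prompt length, network time, and load.

```python
# Back-of-the-envelope estimate from the benchmark figures quoted above.
# Assumptions: constant decode throughput after the first token, and one
# blended price applied to every token (input and output alike).

TTFT_SECONDS = 0.36             # time to first token
THROUGHPUT_TOK_PER_S = 544.0    # output tokens per second
BLENDED_USD_PER_MILLION = 0.16  # blended price per million tokens


def estimate_response_seconds(output_tokens: int) -> float:
    """Approximate wall-clock time for one response: TTFT plus decode time."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return TTFT_SECONDS + output_tokens / THROUGHPUT_TOK_PER_S


def estimate_cost_usd(total_tokens: int) -> float:
    """Approximate cost at the blended per-million-token price."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * BLENDED_USD_PER_MILLION


if __name__ == "__main__":
    # A 1,000-token answer: about 0.36 s + 1.84 s, roughly 2.2 s end to end.
    print(f"{estimate_response_seconds(1_000):.2f} s")
    print(f"${estimate_cost_usd(1_000_000):.2f} per million tokens")
```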

These results put the Clarifai Reasoning Engine ahead of every other GPU-based inference provider measured and on par with specialized ASIC accelerators. They show that modern GPUs, paired with optimized kernels, can achieve comparable or even superior reasoning performance.

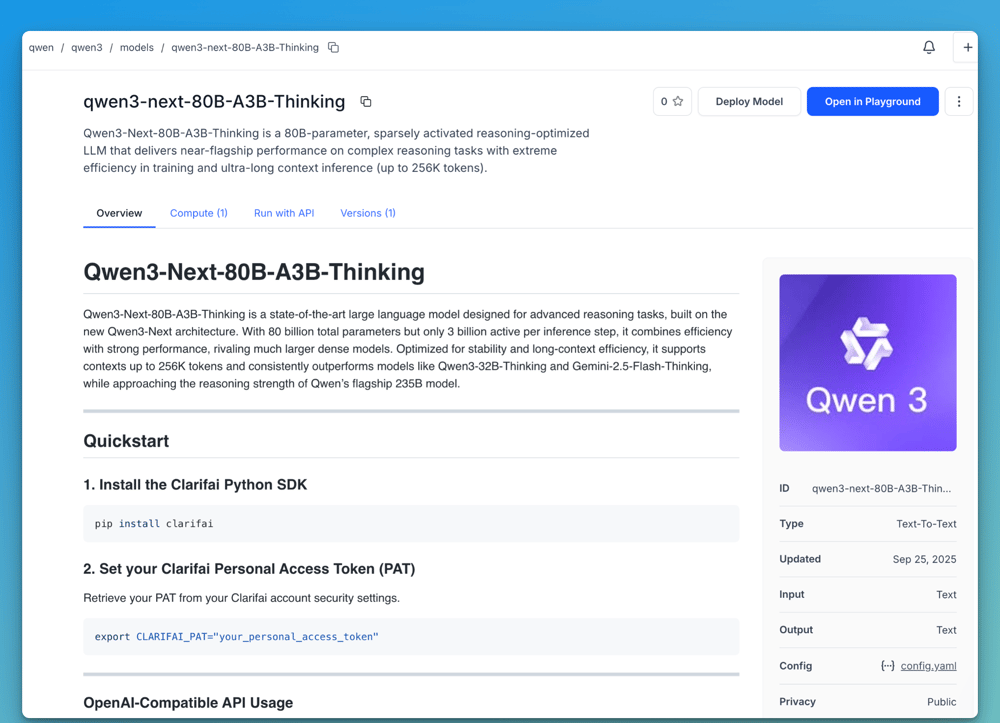

The Reasoning Engine’s design is model-agnostic. While GPT-OSS-120B served as the benchmark reference, the same optimizations have been extended to other large reasoning models like Qwen3-30B-A3B-Thinking-2507, where we observed a 60% improvement in throughput compared to the base implementation. Developers can also bring their own reasoning models and experience similar performance gains using Clarifai’s compute orchestration and kernel optimization stack.

At its core, the Clarifai Reasoning Engine represents a new standard for running reasoning and agentic AI workloads — faster, cheaper, adaptive, and open to any model.

Try the GPT-OSS-120B model directly on Clarifai and experience the performance of the Clarifai Reasoning Engine. You can also bring your own models or talk to our AI experts to apply these adaptive optimizations and see how they improve throughput and latency in real workloads.

Added support for initializing models with the vLLM, LM Studio, and Hugging Face toolkits for Local Runners.

We’ve added a Hugging Face Toolkit to the Clarifai CLI, making it easy to initialize, customize, and serve Hugging Face models through Local Runners.

You can now download and run supported Hugging Face models directly on your own hardware — laptops, workstations, or edge boxes — while exposing them securely via Clarifai’s public API. Your model runs locally, your data stays private, and the Clarifai platform handles routing, authentication, and governance.

- Use local compute: run open-weight models on your own GPUs or CPUs while keeping them accessible through the Clarifai API.
- Preserve privacy: all inference happens on your machine; only metadata flows through Clarifai’s secure control plane.
- Skip manual setup: initialize a model directory with one CLI command; dependencies and configs are scaffolded automatically.

1. Install the Clarifai CLI

Make sure you have Python 3.11+ and the latest Clarifai CLI, which is installed with the `clarifai` Python package (`pip install --upgrade clarifai`).
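Before installing, you can confirm both prerequisites from Python itself. The sketch below assumes only that the CLI is distributed as the `clarifai` package on PyPI. It reports the installed version but cannot tell whether that version is the latest.

```python
# Pre-flight check for step 1.
# Assumption: the Clarifai CLI is provided by the "clarifai" distribution on PyPI.
import sys
from importlib import metadata

MIN_PYTHON = (3, 11)


def python_is_supported(version_info=sys.version_info) -> bool:
    """True if the interpreter is Python 3.11 or newer."""
    return tuple(version_info[:2]) >= MIN_PYTHON


def installed_version(distribution: str = "clarifai"):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    if python_is_supported():
        print("Python version OK")
    else:
        print(f"Need Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+")
    version = installed_version()
    print(f"clarifai {version}" if version else "clarifai is not installed")
```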

2. Authenticate with Clarifai

Log in with `clarifai login`, which creates a configuration context for your Local Runner.

You’ll be prompted for your User ID, App ID, and Personal Access Token (PAT). You can also set the PAT as the `CLARIFAI_PAT` environment variable.

3. Get your Hugging Face access token

If you’re using models from private repos, create a token at huggingface.co/settings/tokens and export it as the `HF_TOKEN` environment variable.
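A small environment check can confirm that the credentials from steps 2 and 3 are visible to the process that will run your model. It is a sketch with two limits:

- It only inspects the `CLARIFAI_PAT` and `HF_TOKEN` environment variables, not any context saved by the CLI login.
- The `read_credentials` helper is hypothetical.

```python
# Hypothetical helper: check that the tokens from steps 2 and 3 are set in the
# environment. CLARIFAI_PAT is treated as required; HF_TOKEN is only required
# when the model lives in a private repo. Never print the token values.
import os
from typing import Mapping, Optional


def read_credentials(env: Optional[Mapping[str, str]] = None,
                     needs_hf_token: bool = False) -> dict:
    """Return the tokens found, raising RuntimeError if a required one is missing."""
    env = os.environ if env is None else env
    pat = env.get("CLARIFAI_PAT", "").strip()
    hf_token = env.get("HF_TOKEN", "").strip()
    if not pat:
        raise RuntimeError("CLARIFAI_PAT is not set in this environment")
    if needs_hf_token and not hf_token:
        raise RuntimeError("HF_TOKEN is not set, but this model's repo requires it")
    return {"CLARIFAI_PAT": pat, "HF_TOKEN": hf_token or None}


if __name__ == "__main__":
    try:
        creds = read_credentials()
        print("CLARIFAI_PAT found; HF_TOKEN", "found" if creds["HF_TOKEN"] else "not set")
    except RuntimeError as exc:
        print(exc)
```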

4. Initialize a model with the Hugging Face Toolkit

Pass the new `--toolkit huggingface` flag to `clarifai model init` to scaffold a model directory.

This command generates a ready-to-run folder containing `model.py`, `config.yaml`, and `requirements.txt`, pre-wired for Local Runners. You can edit `model.py` to customize inference behavior, or change checkpoints in `config.yaml`.
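Before moving on, you can check that the scaffold produced the three files named above. This hypothetical helper searches the directory recursively, since the generated layout may place some files in subfolders. The path `my-hf-model` is a placeholder.

```python
# Hypothetical helper: confirm the scaffolded directory contains the expected
# files. Searches recursively because some files may sit in subfolders.
from pathlib import Path

EXPECTED_FILES = ("model.py", "config.yaml", "requirements.txt")


def missing_scaffold_files(model_dir) -> list:
    """Return the expected file names not found anywhere under model_dir."""
    root = Path(model_dir)
    present = {p.name for p in root.rglob("*") if p.is_file()}
    return [name for name in EXPECTED_FILES if name not in present]


if __name__ == "__main__":
    # "my-hf-model" is a placeholder for your scaffolded directory.
    print(missing_scaffold_files("my-hf-model") or "all expected files present")
```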

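5. Install dependencies

Install the packages listed in the generated `requirements.txt`, for example with `pip install -r requirements.txt`.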
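A rough check can show which entries of the generated `requirements.txt` are still missing from your environment. It is a simplified sketch, not a full requirements parser:

- It handles plain `name`, `name==version`, and `name[extra]>=version` lines.
- It skips pip options such as `-r`.
- It does not handle direct URL requirements.

```python
# Simplified sketch: list requirement names from requirements.txt and report
# which are not installed. Handles "name", "name==1.2", "name[extra]>=1.0" and
# "; marker" suffixes; skips comments, blank lines, and pip options like "-r".
import re
from importlib import metadata
from pathlib import Path


def requirement_names(path) -> list:
    names = []
    for raw in Path(path).read_text().splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        name = re.split(r"[\s\[<>=!~;@]", line, maxsplit=1)[0]
        if name:
            names.append(name)
    return names


def missing_requirements(path) -> list:
    missing = []
    for name in requirement_names(path):
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    if Path("requirements.txt").exists():
        print(missing_requirements("requirements.txt") or "all requirements installed")
```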

6. Start your Local Runner

Your runner registers with Clarifai, and the CLI prints a ready-to-use public API endpoint.

7. Test your model

You can call it like any Clarifai-hosted model through the Clarifai Python SDK.
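When calling the model from code, it can help to build or parse its model URL. The URL follows the pattern `https://clarifai.com/<user_id>/<app_id>/models/<model_id>`. The helpers and the IDs in the comments are hypothetical placeholders.

```python
# Hypothetical helpers for Clarifai model URLs of the form
# https://clarifai.com/<user_id>/<app_id>/models/<model_id>
# (an optional "/versions/<version_id>" suffix is tolerated when parsing).
from urllib.parse import urlparse

BASE = "https://clarifai.com"


def model_url(user_id: str, app_id: str, model_id: str) -> str:
    for part in (user_id, app_id, model_id):
        if not part or "/" in part:
            raise ValueError(f"invalid ID: {part!r}")
    return f"{BASE}/{user_id}/{app_id}/models/{model_id}"


def parse_model_url(url: str) -> dict:
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 4 or parts[2] != "models":
        raise ValueError(f"not a Clarifai model URL: {url}")
    return {"user_id": parts[0], "app_id": parts[1], "model_id": parts[3]}


if __name__ == "__main__":
    # Placeholder IDs for illustration only.
    url = model_url("my-user", "my-app", "my-hf-model")
    print(url, parse_model_url(url))
```

You can pass the resulting URL, together with your PAT, to the SDK’s `Model` class (`from clarifai.client import Model`). Which method you call next, and with which arguments, depends on what your generated `model.py` defines.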

Behind the scenes, requests are routed to your local machine — the model runs entirely on your hardware. See the Hugging Face Toolkit documentation for the full setup guide, configuration options, and troubleshooting tips.

Run Hugging Face models on the high-performance vLLM inference engine

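vLLM is an open-source runtime optimized for serving large language models with high throughput and memory efficiency. It uses continuous batching and PagedAttention-based KV-cache memory management:

- Continuous batching adds new requests to a running batch as earlier ones finish, instead of waiting for the whole batch to complete.
- PagedAttention manages the KV cache in small blocks, which reduces wasted GPU memory.

Together these deliver faster, cheaper inference, well suited to local deployments and experimentation.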
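A toy step-count simulation shows the difference continuous batching makes. It illustrates the idea under two simplifying assumptions:

- Every active request emits one token per step.
- Batch capacity is a fixed number of slots.

It is not vLLM’s actual scheduler, which also accounts for GPU memory and prompt prefill.

```python
# Toy comparison of static vs. continuous batching, counting decode steps.
# Each request needs `length` decode steps; every active request advances one
# token per step. Illustration only, not vLLM's scheduler.
from collections import deque


def _validate(lengths, batch_size):
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if any(n < 1 for n in lengths):
        raise ValueError("every request needs at least one decode step")


def static_batching_steps(lengths, batch_size):
    """Fixed groups: a group holds all its slots until its longest request ends."""
    _validate(lengths, batch_size)
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Slots freed by finished requests are refilled from the queue each step."""
    _validate(lengths, batch_size)
    queue = deque(lengths)
    active = []
    steps = 0
    while queue or active:
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        steps += 1
        # Requests with one step left finish now and release their slot.
        active = [remaining - 1 for remaining in active if remaining > 1]
    return steps


if __name__ == "__main__":
    print(static_batching_steps([10, 2, 2, 2], 2))      # 12
    print(continuous_batching_steps([10, 2, 2, 2], 2))  # 10
```

With requests needing 10, 2, 2, and 2 tokens and two slots, static batching takes 12 steps because the short requests wait behind the long one. Continuous batching finishes in 10 because the short requests cycle through the second slot.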

With Clarifai’s vLLM Toolkit, you can initialize and run Hugging Face models supported by vLLM on your own machine, powered by vLLM’s optimized backend. Your model runs locally but behaves like any hosted Clarifai model, reachable through a secure public API endpoint.

Check out the vLLM Toolkit documentation to learn how to initialize and serve vLLM models with Local Runners.

Run open-weight models from LM Studio and expose them via Clarifai APIs

LM Studio is a popular desktop application for running and chatting with open-source LLMs locally, with no internet connection required once a model is downloaded. With Clarifai’s LM Studio Toolkit, you can connect those locally running models to the Clarifai platform, making them callable via a public API while keeping data and execution fully on-device.

Developers can use this integration to turn LM Studio models into production-ready APIs with minimal setup.
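Before connecting LM Studio to Clarifai, it is worth confirming that LM Studio’s local server is running and seeing which models it exposes. The sketch below assumes the server has been started in LM Studio on its default OpenAI-compatible address, `http://localhost:1234/v1`. Adjust the base URL if you changed the port.

```python
# Sketch: list the models exposed by LM Studio's local server.
# Assumption: LM Studio's OpenAI-compatible server is running at its default
# address, http://localhost:1234/v1, and serves GET /models.
import json
import urllib.request

LMSTUDIO_BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(payload: dict) -> list:
    """Extract model IDs from an OpenAI-style /models response."""
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


def list_lmstudio_models(base_url: str = LMSTUDIO_BASE_URL, timeout: float = 5.0) -> list:
    """Query the local server; raises URLError (an OSError) if it is not running."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))


if __name__ == "__main__":
    try:
        print(list_lmstudio_models())
    except OSError as exc:  # urllib.error.URLError subclasses OSError
        print(f"LM Studio server not reachable: {exc}")
```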

Read the LM Studio Toolkit guide to see supported setups and how to run LM Studio models using Local Runners.

We’ve added several powerful new models optimized for reasoning, long-context tasks, and multi-modal capabilities:

We’ve added new cloud instances to give developers more options for GPU-based workloads:

- B200 instances: competitively priced, hosted in Seattle.
- GH200 instances: powered by Vultr for high-performance tasks.

Learn more about Enterprise-Grade GPU Hosting for AI models and request access, or connect with our AI experts to discuss your workload needs.

Learn more about all SDK updates here.

With the Clarifai Reasoning Engine, you can run reasoning and agentic AI workloads faster, more efficiently, and at lower cost — all while maintaining full control over your models. The Reasoning Engine continuously optimizes for throughput and latency, whether you’re using GPT-OSS-120B, Qwen models, or your own custom models.

Bring your own models and see how adaptive optimizations improve performance in real workloads. Talk to our AI experts to learn how the Clarifai Reasoning Engine can optimize the performance of your custom models.

© 2026 Clarifai, Inc.