This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the release notes.

RAG in 4 lines of code

Retrieval-augmented generation (RAG) is an architecture that supplies LLMs with the most relevant, contextually important data when they answer questions. You can use it for applications such as advanced question-answering systems, information retrieval systems, chatting with your data, and much more.

We have integrated the new RAG-Prompter operator model. You can now use the RAG-Prompter, an agent system operator in the Python SDK, to perform RAG tasks in just 4 lines of code.

Check out the following video that walks you through a step-by-step process of building a RAG system in 4 lines of code.

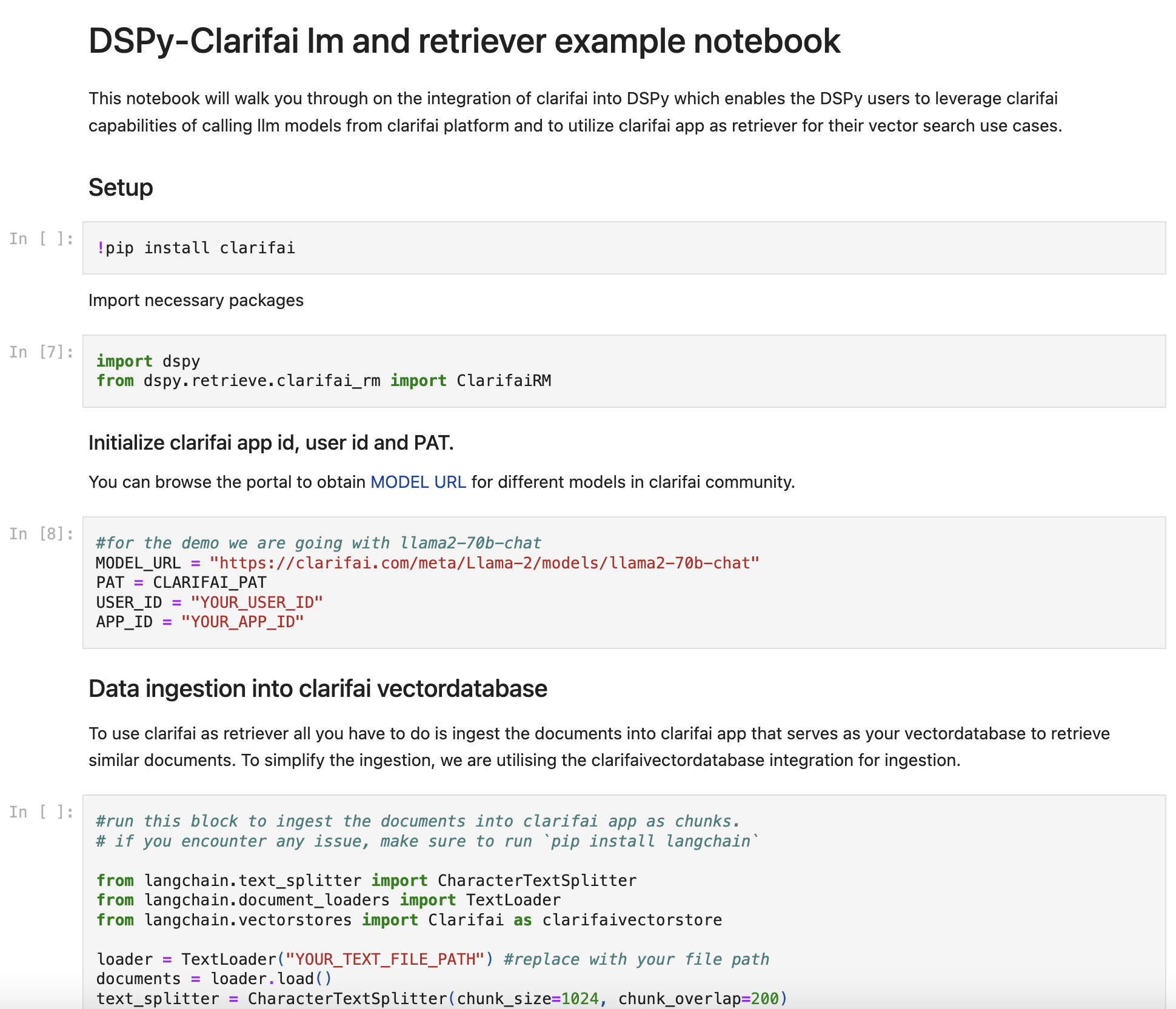

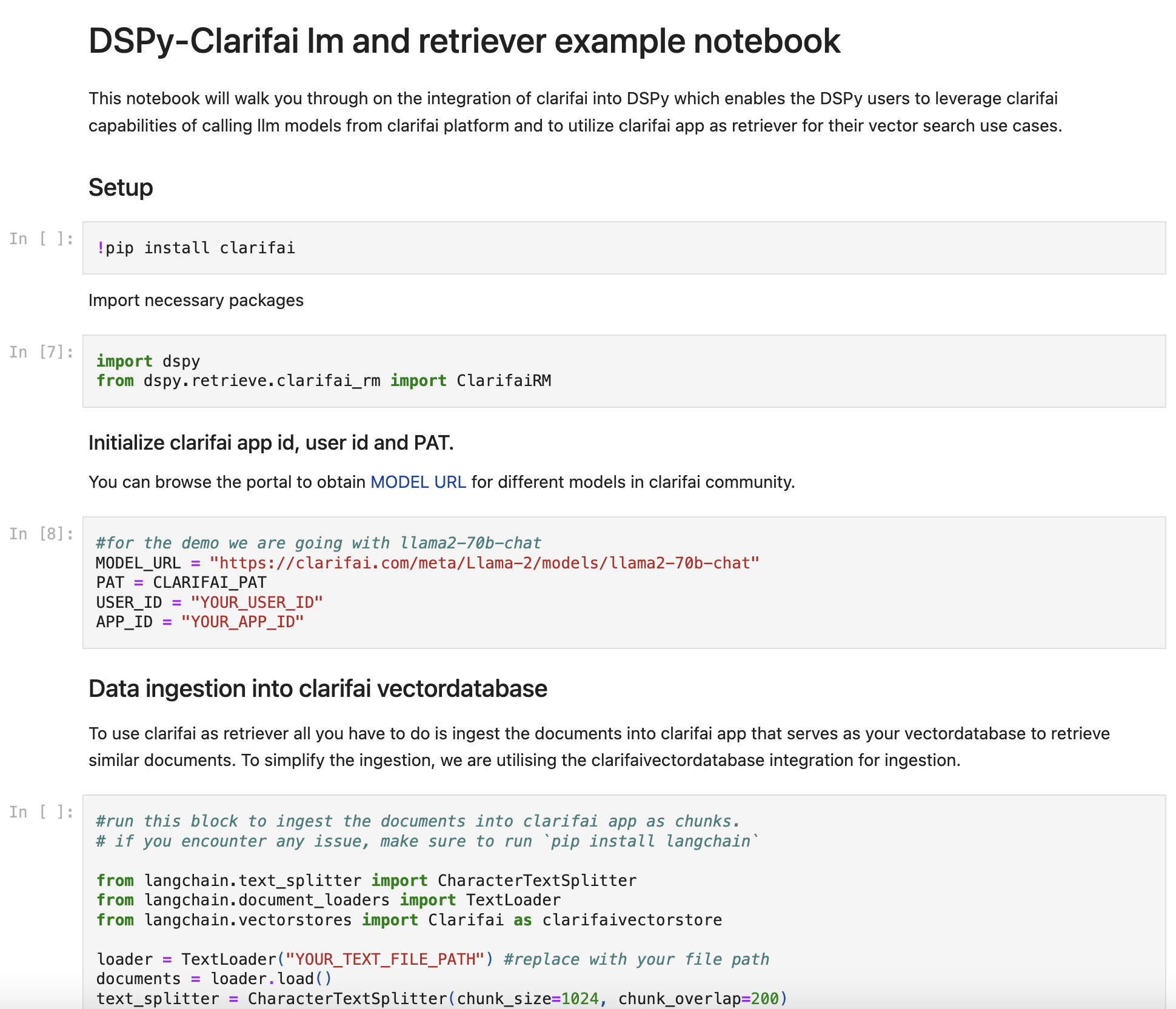
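To make the retrieval step concrete, here is a minimal, dependency-free sketch of the idea behind RAG: rank documents against the question, then place the best match in the prompt. It does not use the Clarifai SDK or the RAG-Prompter; the function names (`retrieve`, `build_prompt`) and the bag-of-words similarity are assumptions chosen for illustration only.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Assemble a prompt that puts the retrieved context before the question."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Hypothetical mini knowledge base
docs = [
    "Incremental training resumes from a saved checkpoint.",
    "Cloud storage URLs can be used to add inputs in bulk.",
    "The workflow builder connects models as nodes.",
]
question = "How do I add inputs from cloud storage?"
prompt = build_prompt(question, docs)
print(prompt)
```

A production system would typically replace the bag-of-words scoring with embedding-based vector search and send the assembled prompt to an LLM.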

Integrated Clarifai into DSPy

- DSPy is a framework for solving advanced tasks with language and retrieval models. It unifies techniques for prompting and fine-tuning language models.

This integration, now a part of the recently released DSPy version 2.1.7, helps you consume Clarifai's LLMs and use your Clarifai apps as a vector search engine within DSPy. Clarifai is the only provider enabling users to harness multiple LLMs. You can get started on how to use DSPy with Clarifai here.

Introduced incremental training of model versions

- You can now update existing models with new data without retraining from scratch. After training a model version, a checkpoint file is automatically saved. You can initiate incremental training from that previously trained version checkpoint. Alternatively, you can provide the URL of a checkpoint file from a supported third-party toolkit like Hugging Face or MMCV.
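The general pattern of resuming from a checkpoint can be sketched with a toy one-parameter model. This is only an illustration of the concept, not how the platform stores or loads checkpoints; the JSON file format and the helper names (`train`, `save_checkpoint`, `load_checkpoint`) are assumptions.

```python
import json
import os
import tempfile


def train(data, weight=0.0, epochs=200, lr=0.01):
    """Fit y = weight * x by gradient descent, starting from `weight`."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def save_checkpoint(path, weight):
    """Persist the learned parameter so a later run can resume from it."""
    with open(path, "w") as f:
        json.dump({"weight": weight}, f)


def load_checkpoint(path):
    """Read back the parameter saved by save_checkpoint."""
    with open(path) as f:
        return json.load(f)["weight"]


checkpoint_path = os.path.join(tempfile.mkdtemp(), "version_1.json")

# Version 1: train from scratch and save a checkpoint.
v1 = train([(1.0, 2.0), (2.0, 4.0)])
save_checkpoint(checkpoint_path, v1)

# Version 2: start from the checkpoint and train briefly on new data only.
v2 = train(
    [(3.0, 6.0), (4.0, 8.0)],
    weight=load_checkpoint(checkpoint_path),
    epochs=20,
)
print(v1, v2)
```

Because the second run starts from the saved weight rather than from zero, it needs far fewer epochs to stay close to the target.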

Introduced the ability to add inputs through cloud storage URLs

- You can now add inputs by providing URLs from cloud storage platforms such as S3, GCP, and Azure, along with the required access credentials. This simplifies adding inputs to our platform and is more efficient than the conventional method of using PostInputs for individual inputs.

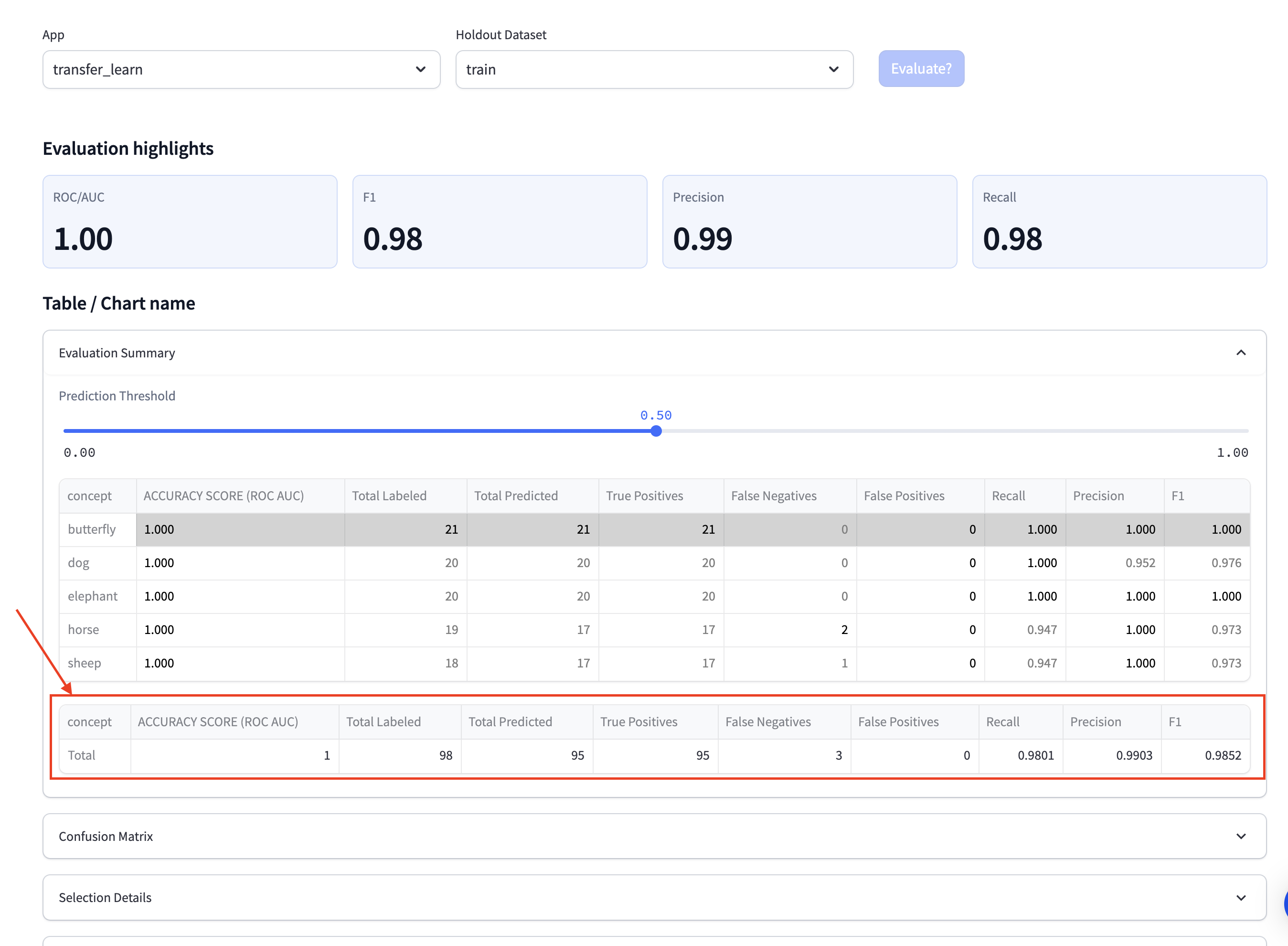

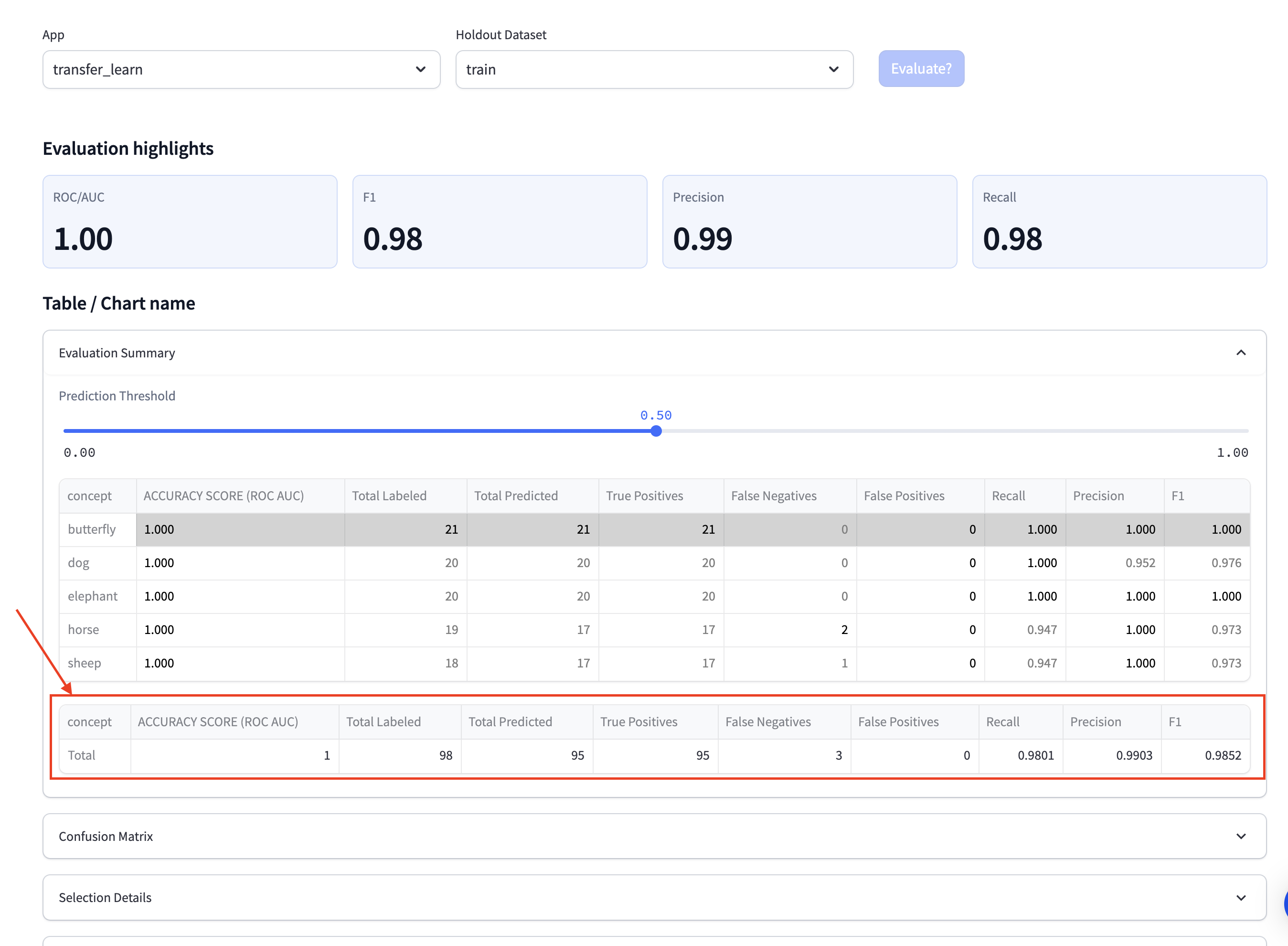

Enhanced the evaluation process for detector models

- Enriched the metrics by introducing additional fields, namely "Total Predicted," "True Positives," "False Negatives," and "False Positives." These additional metrics provide a more comprehensive and detailed assessment of a detector's performance.

- Previously, a multi-selector was used to select an Intersection over Union (IoU). We replaced that confusing selection with a radio button format, emphasizing a single, mutually exclusive choice for IoU selection.

- We also made other minor UI/UX improvements to ensure consistency with the evaluation process for classification models.
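For readers unfamiliar with these fields, the sketch below shows one common way such counts can be derived at a single IoU threshold. The blog does not state how the platform computes them, so the greedy matching strategy, the box format `(x_min, y_min, x_max, y_max)`, and the function names are all assumptions.

```python
def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_counts(predicted, ground_truth, iou_threshold=0.5):
    """Greedily match each prediction to its best unmatched ground-truth box."""
    unmatched = list(ground_truth)
    true_positives = 0
    for box in predicted:
        best = max(unmatched, key=lambda gt: iou(box, gt), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            true_positives += 1
            unmatched.remove(best)  # each ground-truth box is matched once
    return {
        "total_predicted": len(predicted),
        "true_positives": true_positives,
        "false_positives": len(predicted) - true_positives,
        "false_negatives": len(unmatched),
    }


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predicted = [(1, 1, 10, 10), (50, 50, 60, 60)]
counts = detection_counts(predicted, ground_truth, iou_threshold=0.5)
print(counts)
```

Here the first prediction overlaps a ground-truth box with an IoU of 0.81 and counts as a true positive, the second overlaps nothing and is a false positive, and the second ground-truth box is never matched, giving one false negative.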

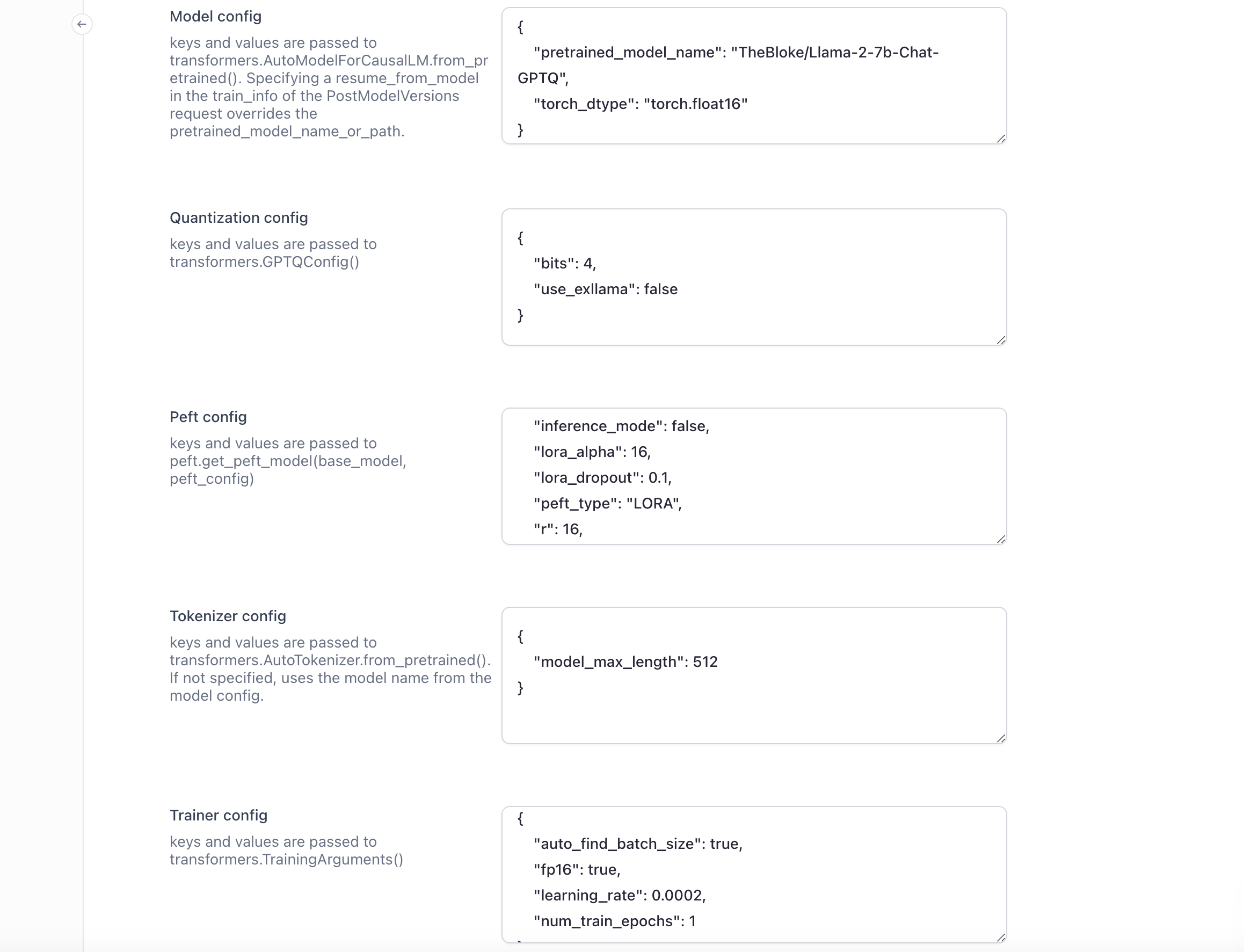

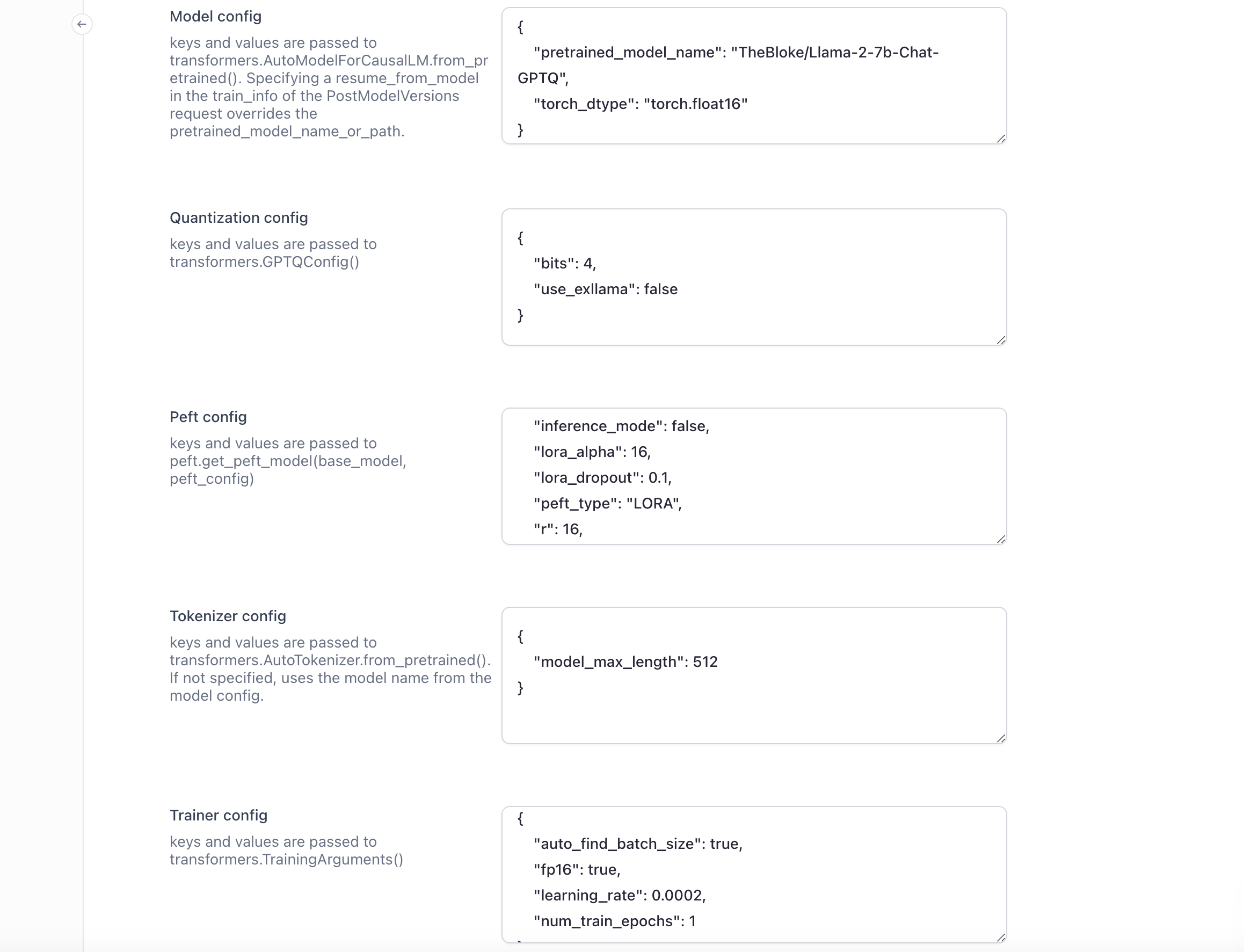

Made improvements to LLM fine-tuning

- Added support for CSV upload for streamlined data integration.

- Added more training templates to tailor the fine-tuning process to diverse use cases.

- Added advanced configuration options, including quantization parameters via GPTQ, giving users more precise control over fine-tuning and its efficiency.

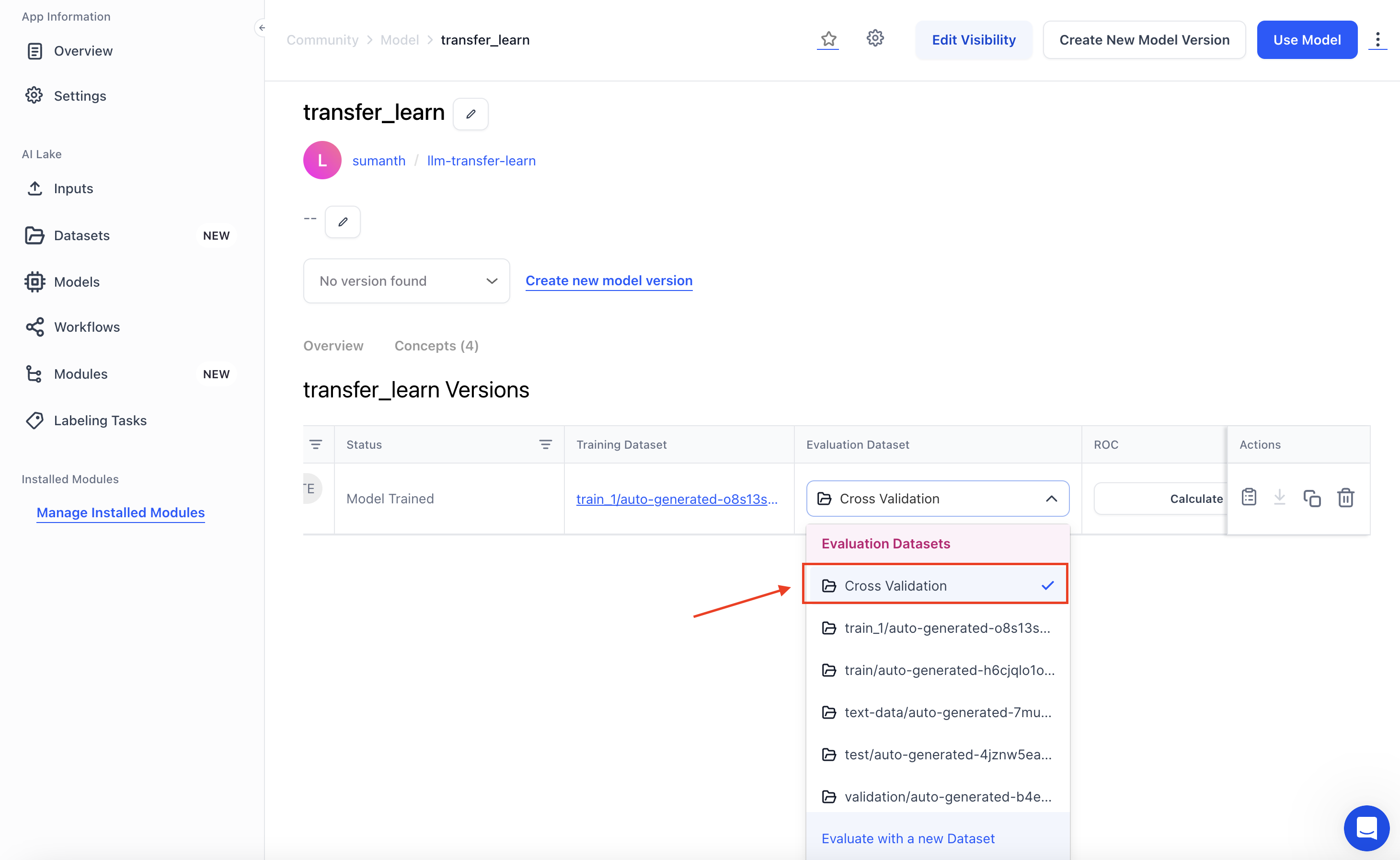

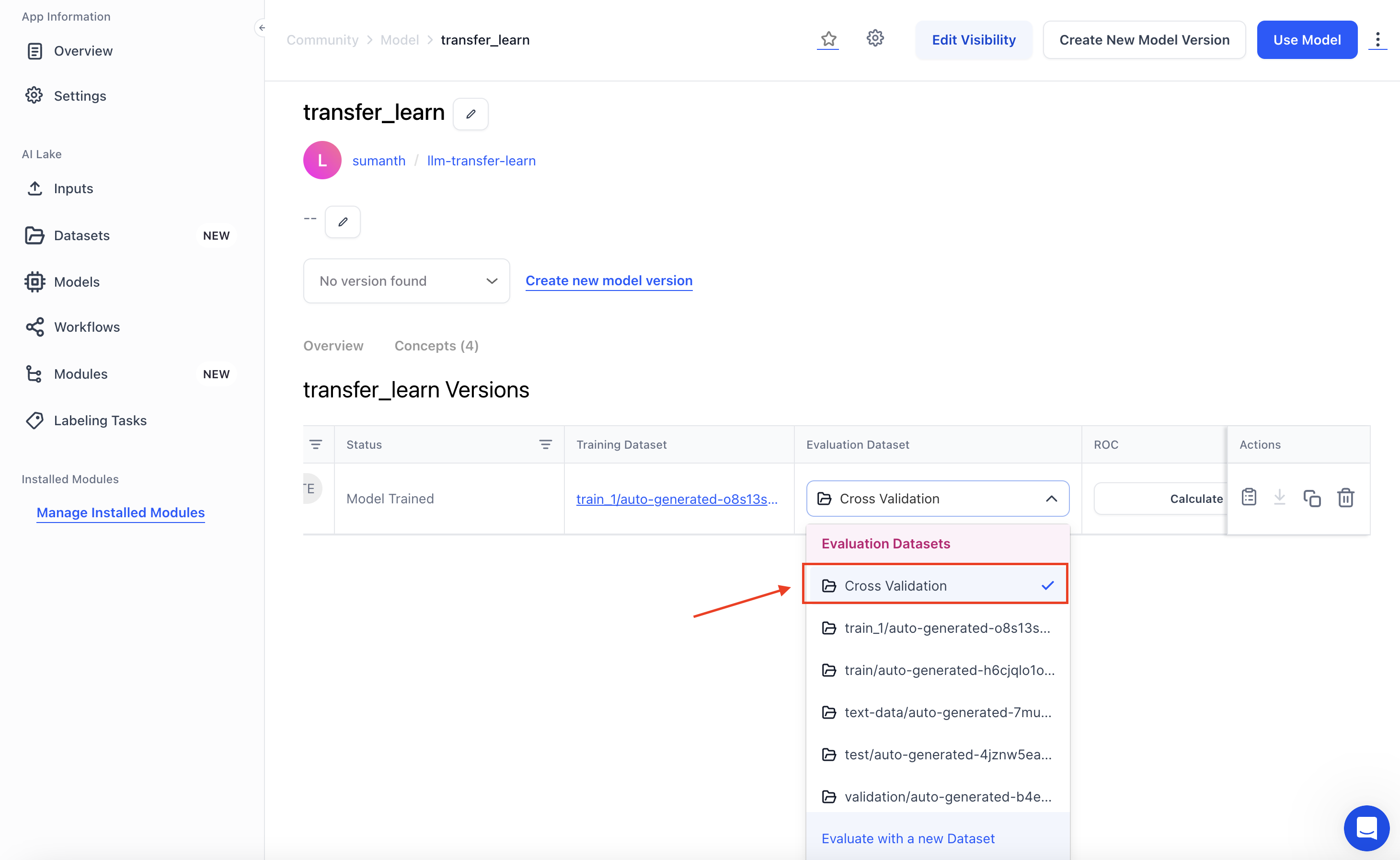

Improved the Model-Viewer's version table

- Cross-app evaluation is now supported in the model version tab, for a more cohesive experience with the Leaderboard.

- Users and collaborators with access permissions can also select datasets or dataset versions from org apps, ensuring a comprehensive evaluation across various contexts.

- This improvement lets users view training and evaluation data across different model versions in a centralized location, enhancing the overall version-tracking experience.

Improved the management of model annotations and associated assets

- Previously, when a model annotation was deleted, the corresponding model assets remained unaffected. Now, when you delete a model annotation, the associated model assets are marked as deleted at the same time. This makes the deletion process comprehensive and avoids lingering or orphaned assets.
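The behavior described here is a cascading soft delete. A minimal sketch of that pattern follows; the `Annotation` and `Asset` classes and their fields are hypothetical and are not the platform's data model.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    asset_id: str
    deleted: bool = False


@dataclass
class Annotation:
    annotation_id: str
    assets: list = field(default_factory=list)
    deleted: bool = False


def delete_annotation(annotation):
    """Mark the annotation and every asset attached to it as deleted."""
    annotation.deleted = True
    for asset in annotation.assets:
        asset.deleted = True


annotation = Annotation("ann-1", assets=[Asset("asset-a"), Asset("asset-b")])
delete_annotation(annotation)
print([asset.deleted for asset in annotation.assets])
```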

Published several new, ground-breaking models

- Published Phi-2, a Clarifai-hosted, 2.7 billion-parameter large language model (LLM), achieving state-of-the-art performance in QA, chat, and code tasks. It is focused on high-quality training data and has demonstrated improved behavior in toxicity and bias.

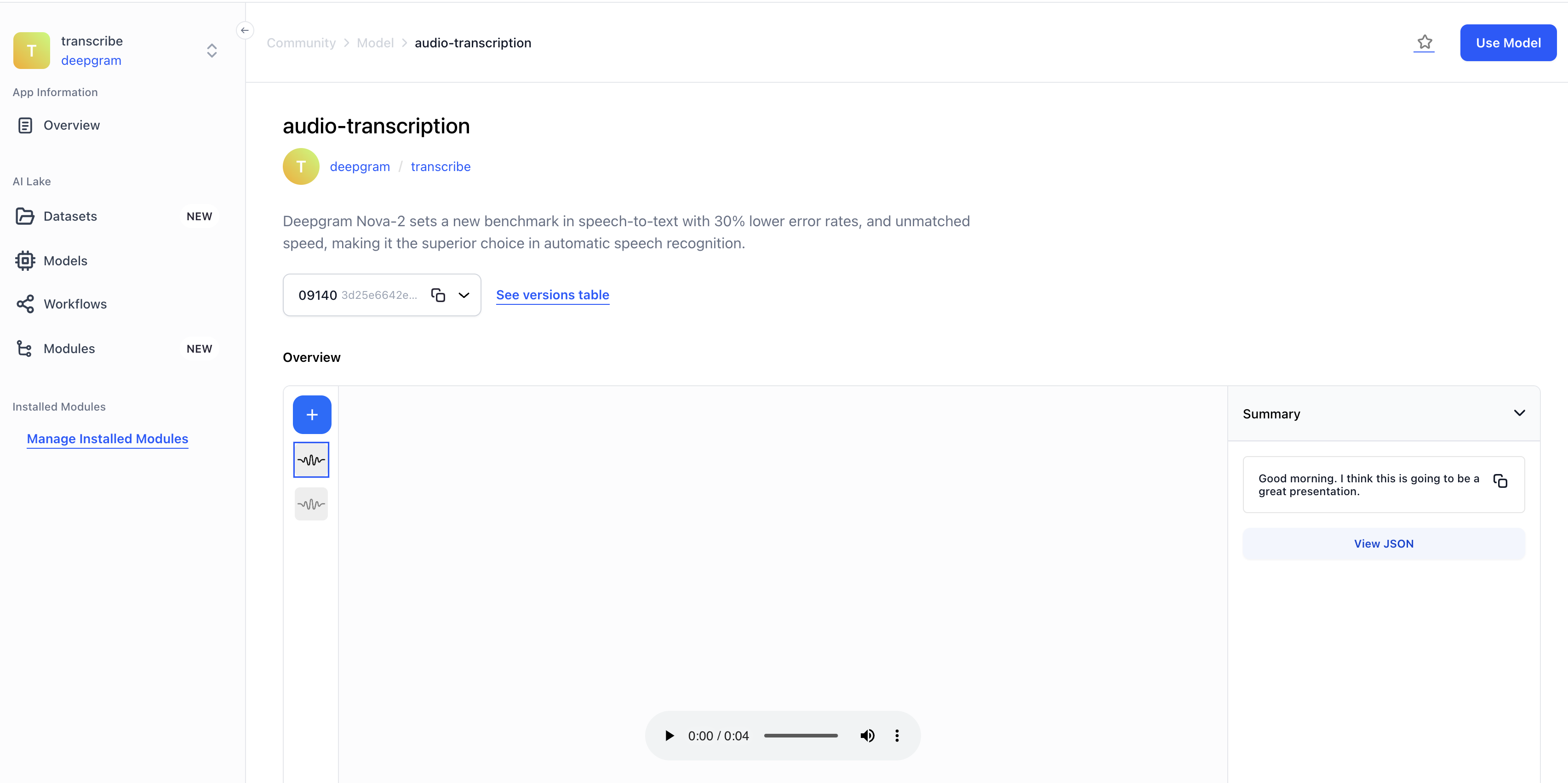

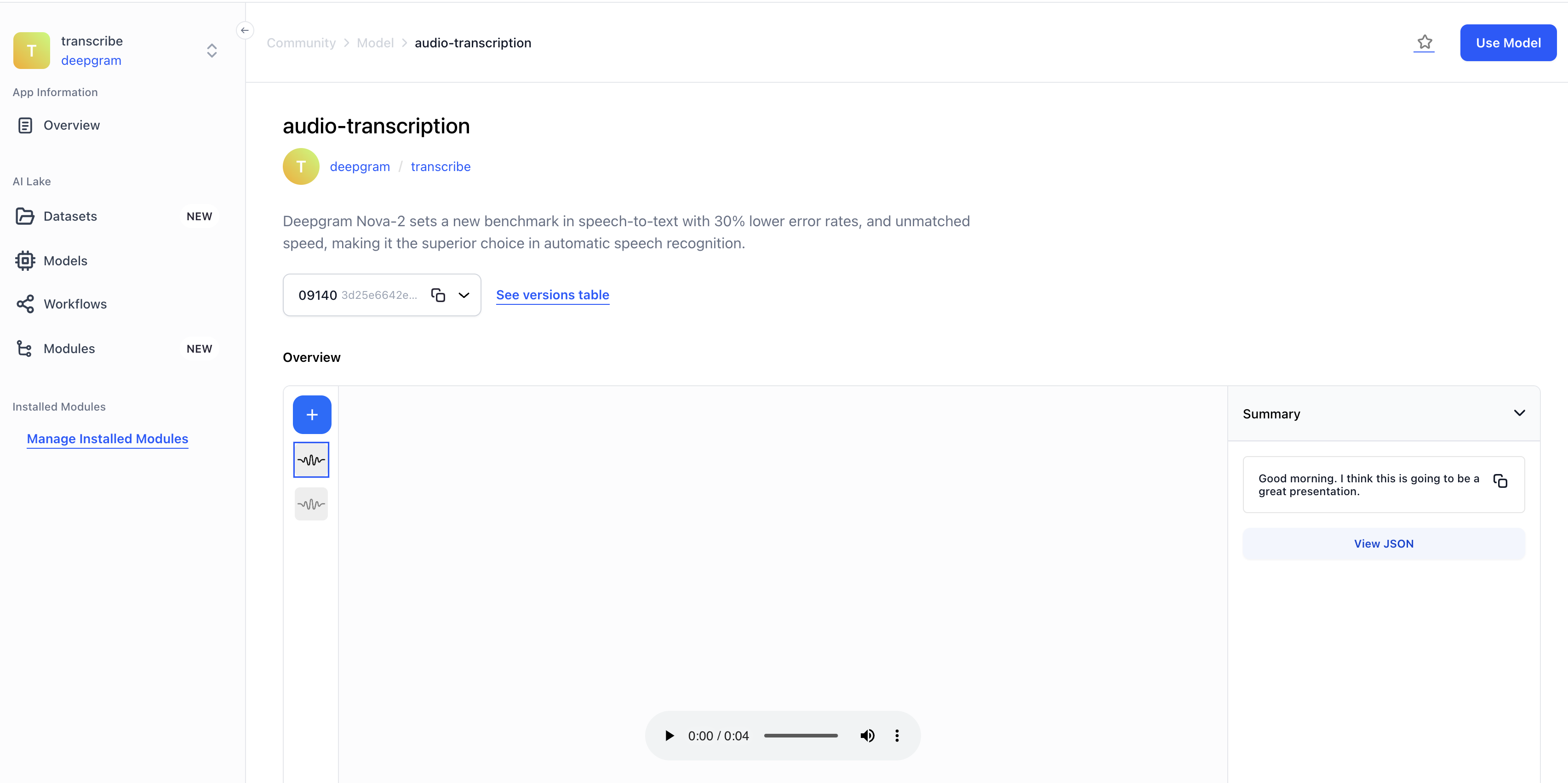

- Wrapped Deepgram Nova-2. It sets a new benchmark in speech-to-text with 30% lower error rates and unmatched speed, making it the superior choice in automatic speech recognition.

- Wrapped Deepgram Audio Summarization. It offers efficient and accurate summarization of audio content, automating call notes, meeting summaries, and podcast previews with superior transcription capabilities.

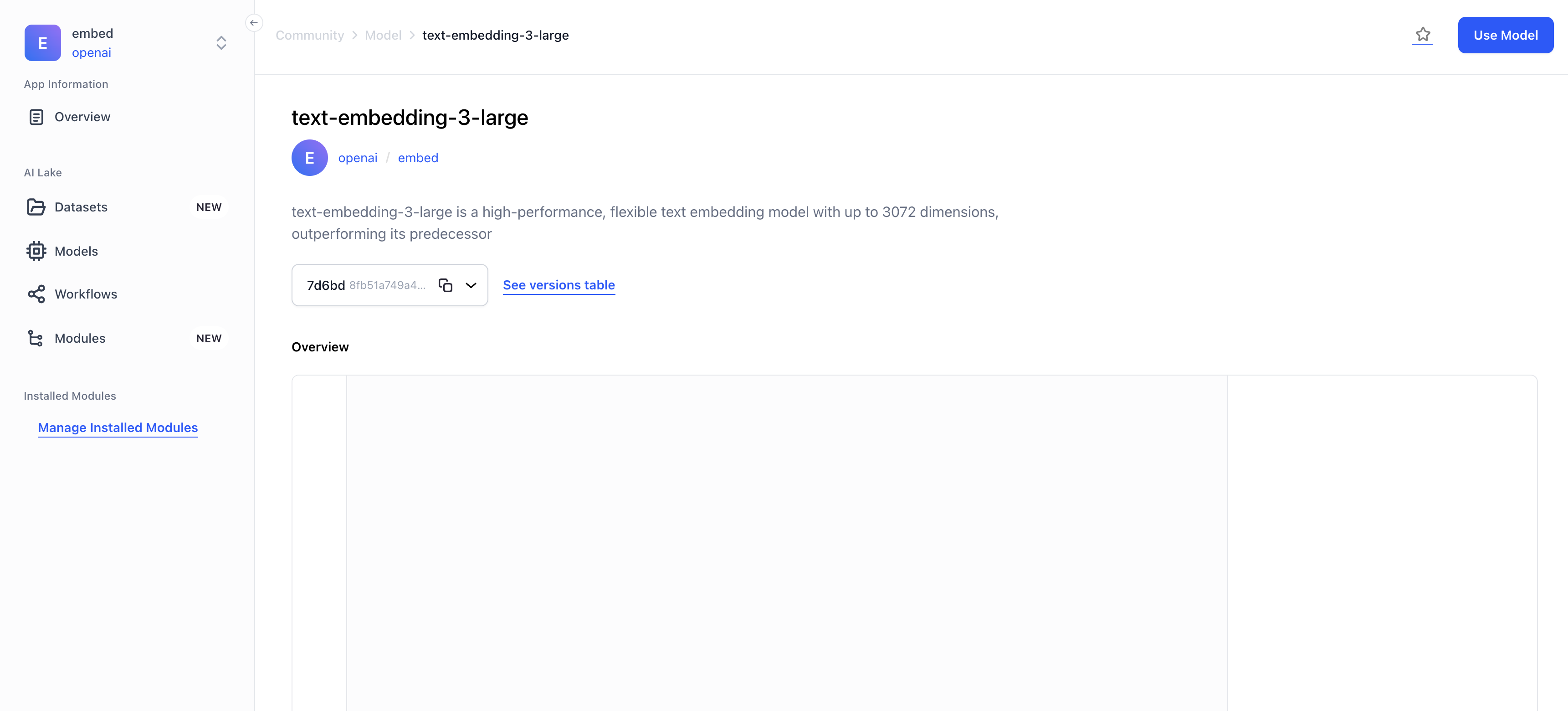

- Wrapped Text-Embedding-3-Large, a high-performance, flexible text embedding model with up to 3072 dimensions, outperforming its predecessor.

- Wrapped Text-Embedding-3-Small, a highly efficient, flexible model with improved performance over its predecessor, Text-Embedding-ADA-002, in various natural language processing tasks.

- Wrapped CodeLlama-70b-Instruct, a state-of-the-art AI model specialized in code generation and understanding based on natural language instructions.

- Wrapped CodeLlama-70b-Python, a state-of-the-art AI model specialized in Python code generation and understanding, excelling in accuracy and efficiency.

Improved the mobile version of the onboarding flow

- Updated the "create an app" guided tour modal for mobile platforms.

- Made other improvements such as updating the "Add a Model" modal and the "Find a Pre-Trained model" modal for mobile platforms.

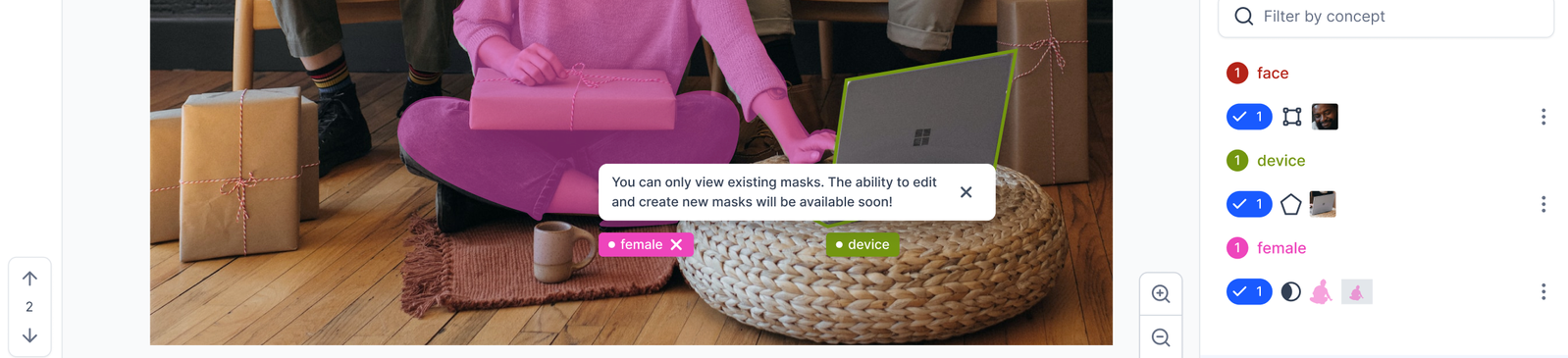

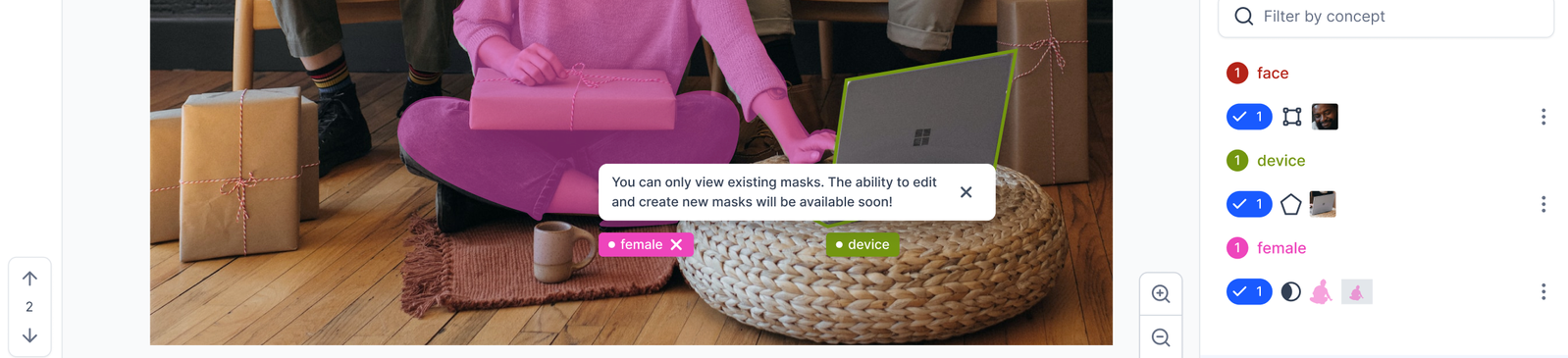

Added ability to minimally review existing image mask annotations on the Input-Viewer

- You can view your image mask annotations uploaded via the API.

- You can delete an entire image mask annotation on an input.

- You can view the mask annotation items displayed on the Input-Viewer sidebar.

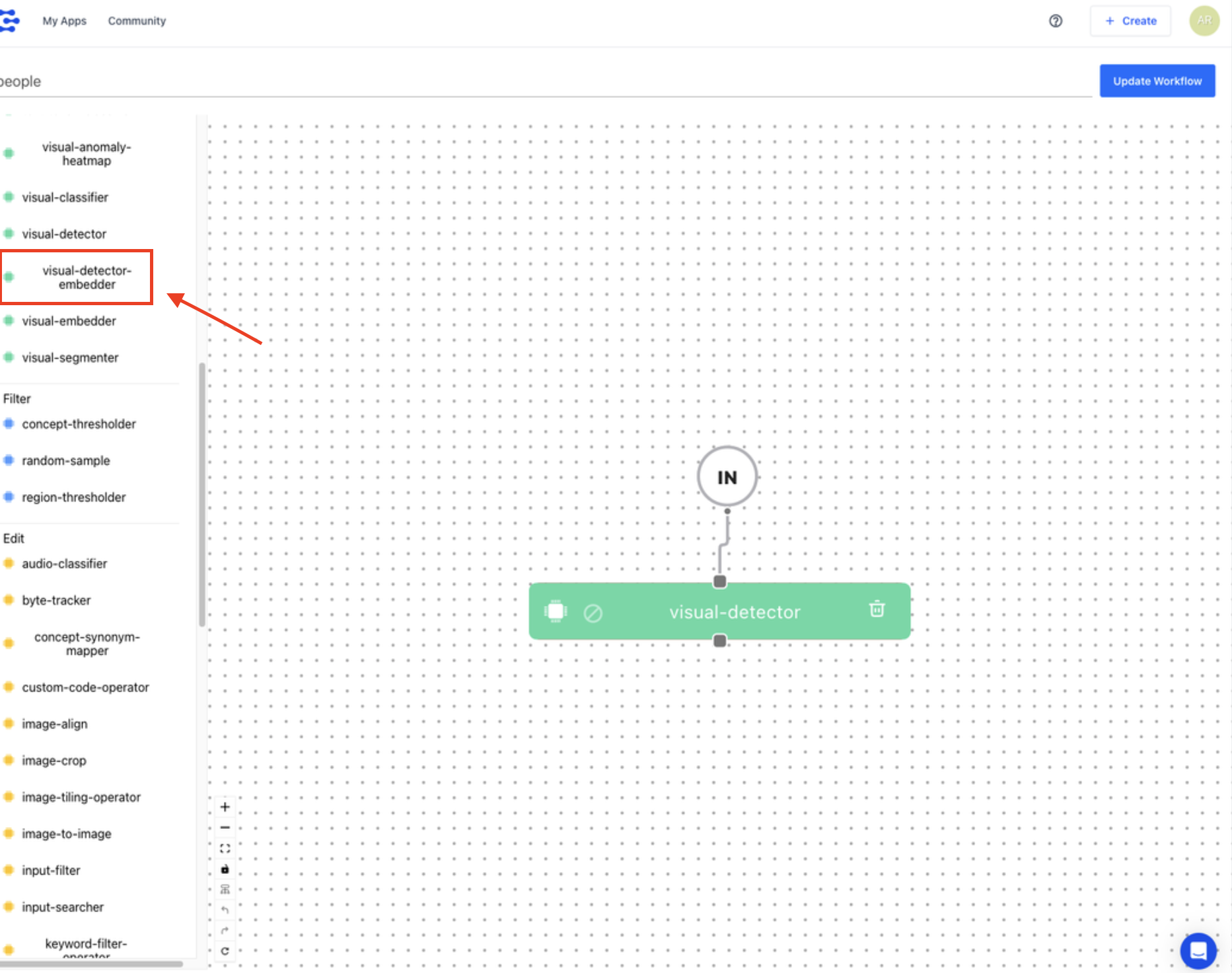

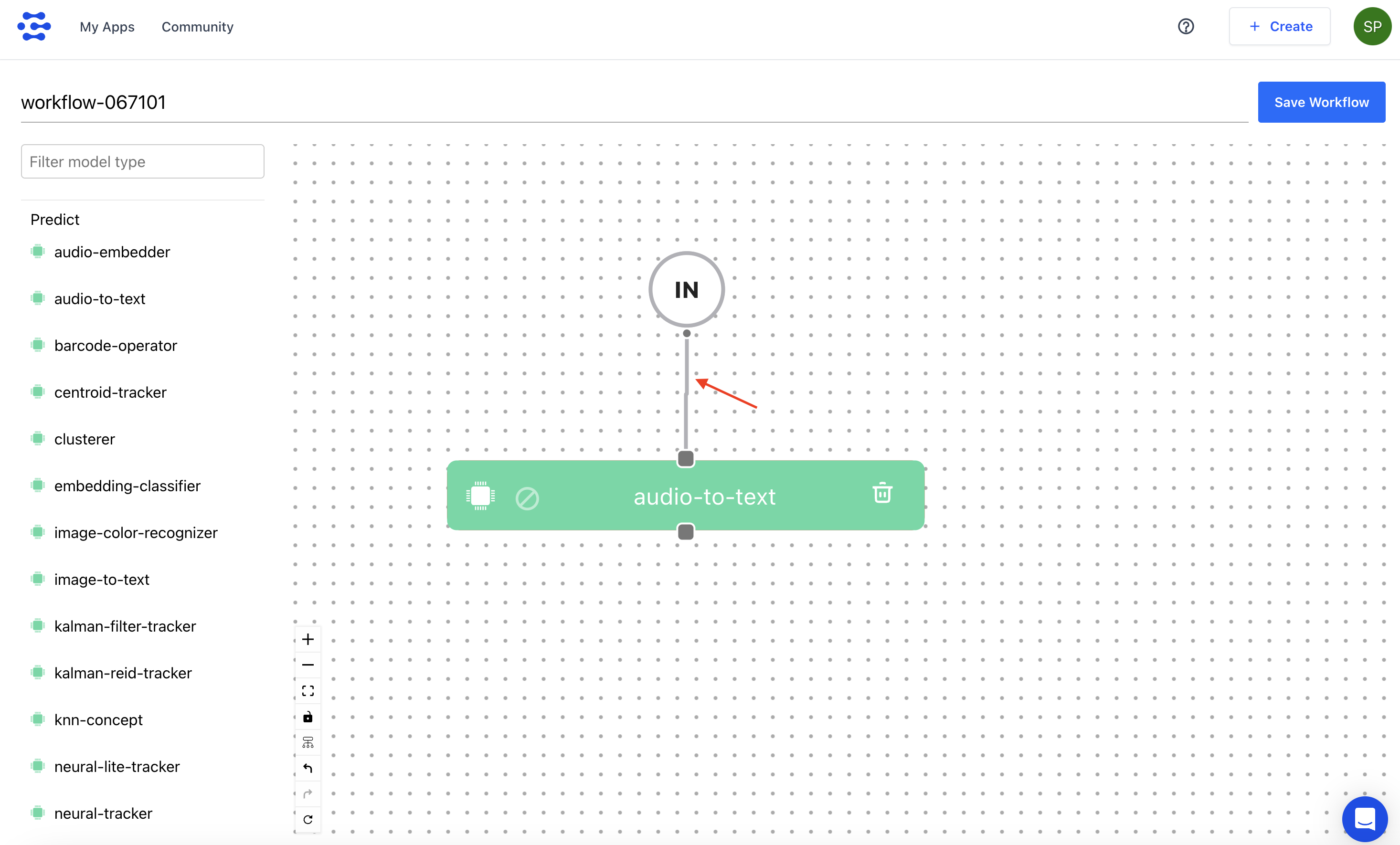

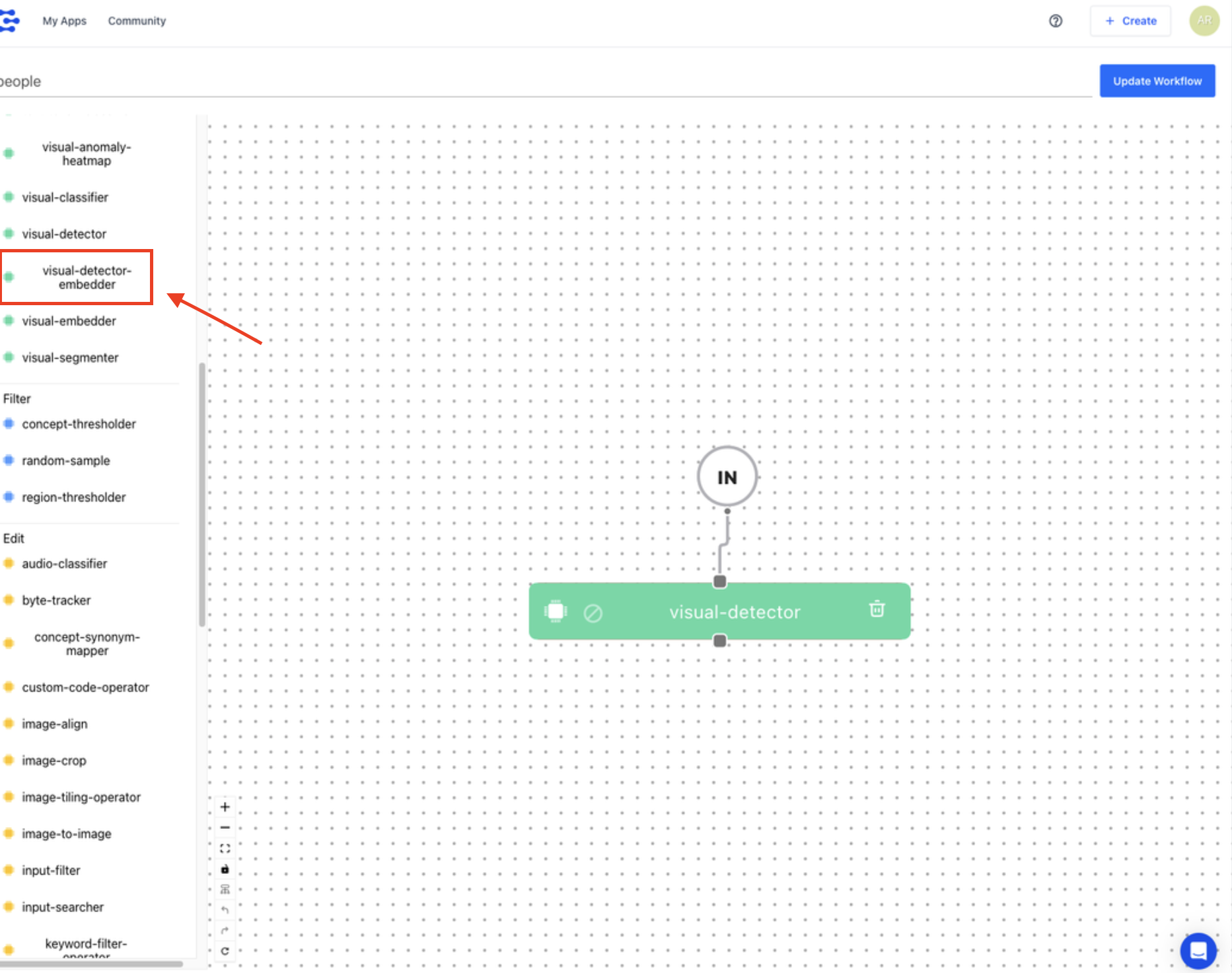

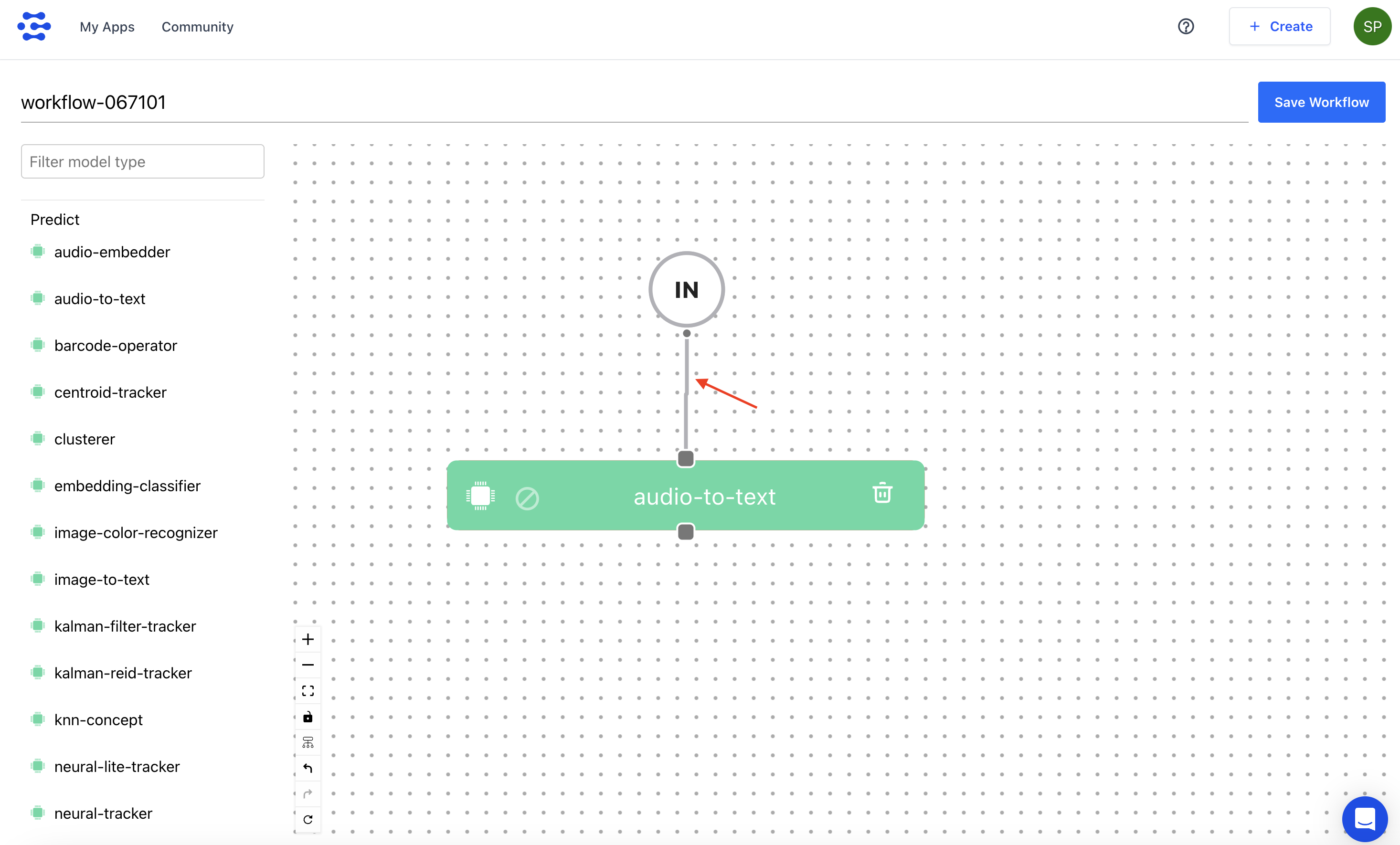

Made minor enhancements to the Workflow builder UI

- Rectified the alignment discrepancy in some left-side models to ensure uniform left alignment.

- Introduced an X or Close/Cancel button for improved user interaction and clarity.

- Ensured that users can easily straighten the line connecting two nodes.

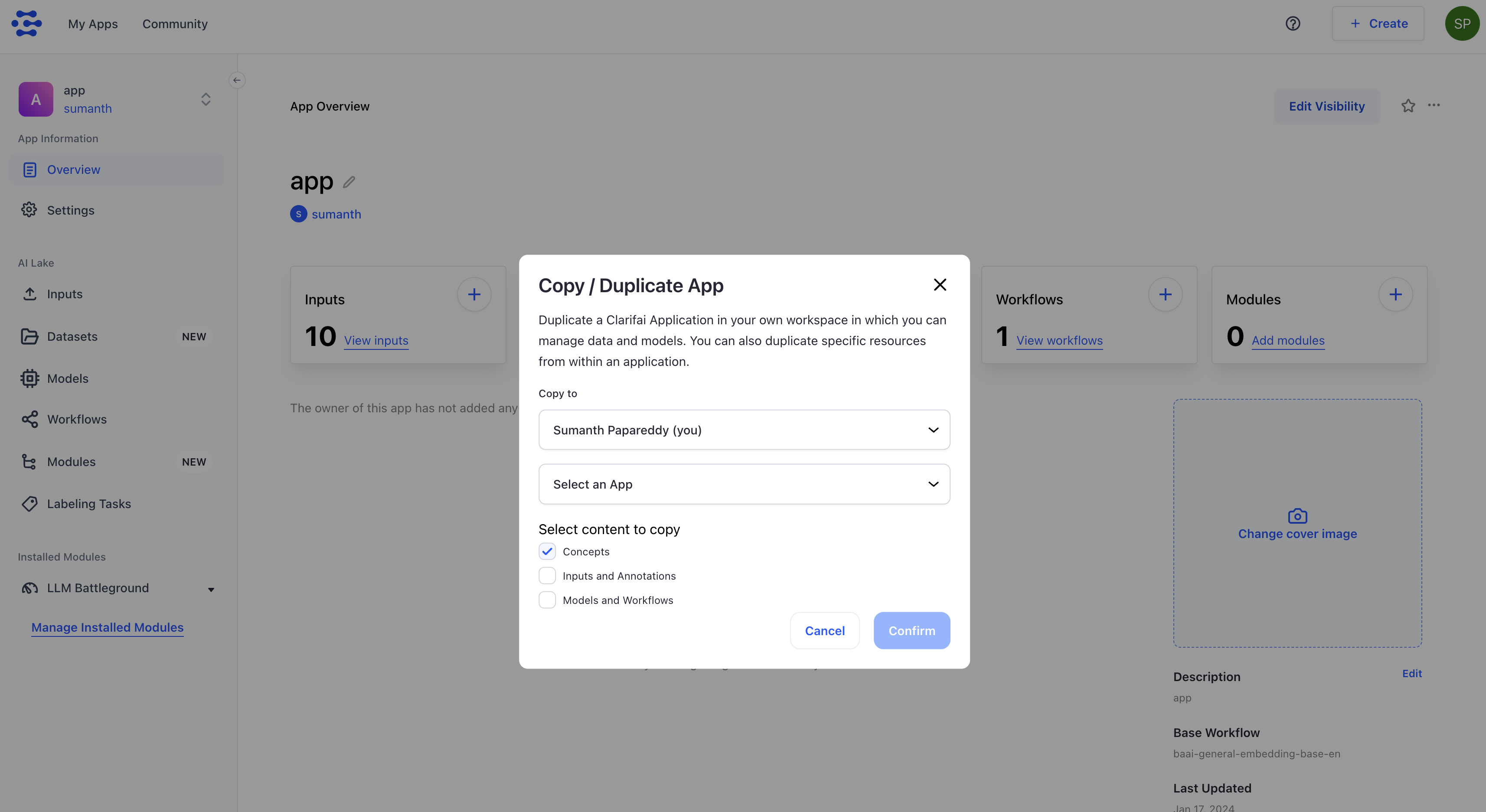

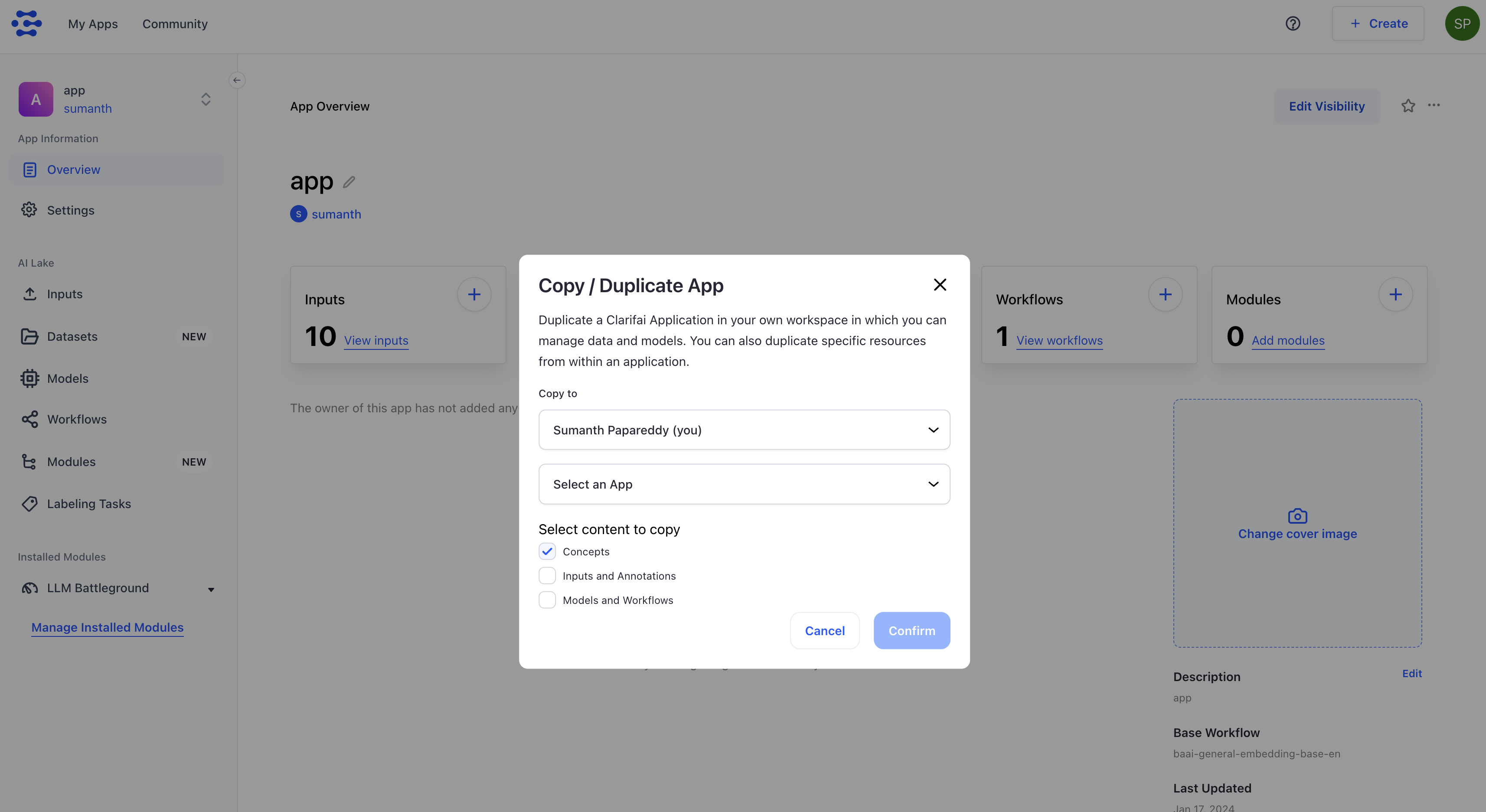

Added ability to copy an app to an organization

- Previously, in the Copy / Duplicate App modal, the dropdown for selecting users lacked an option for organizations. You can now select an organization directly from the dropdown list of potential destinations when copying or duplicating an app.

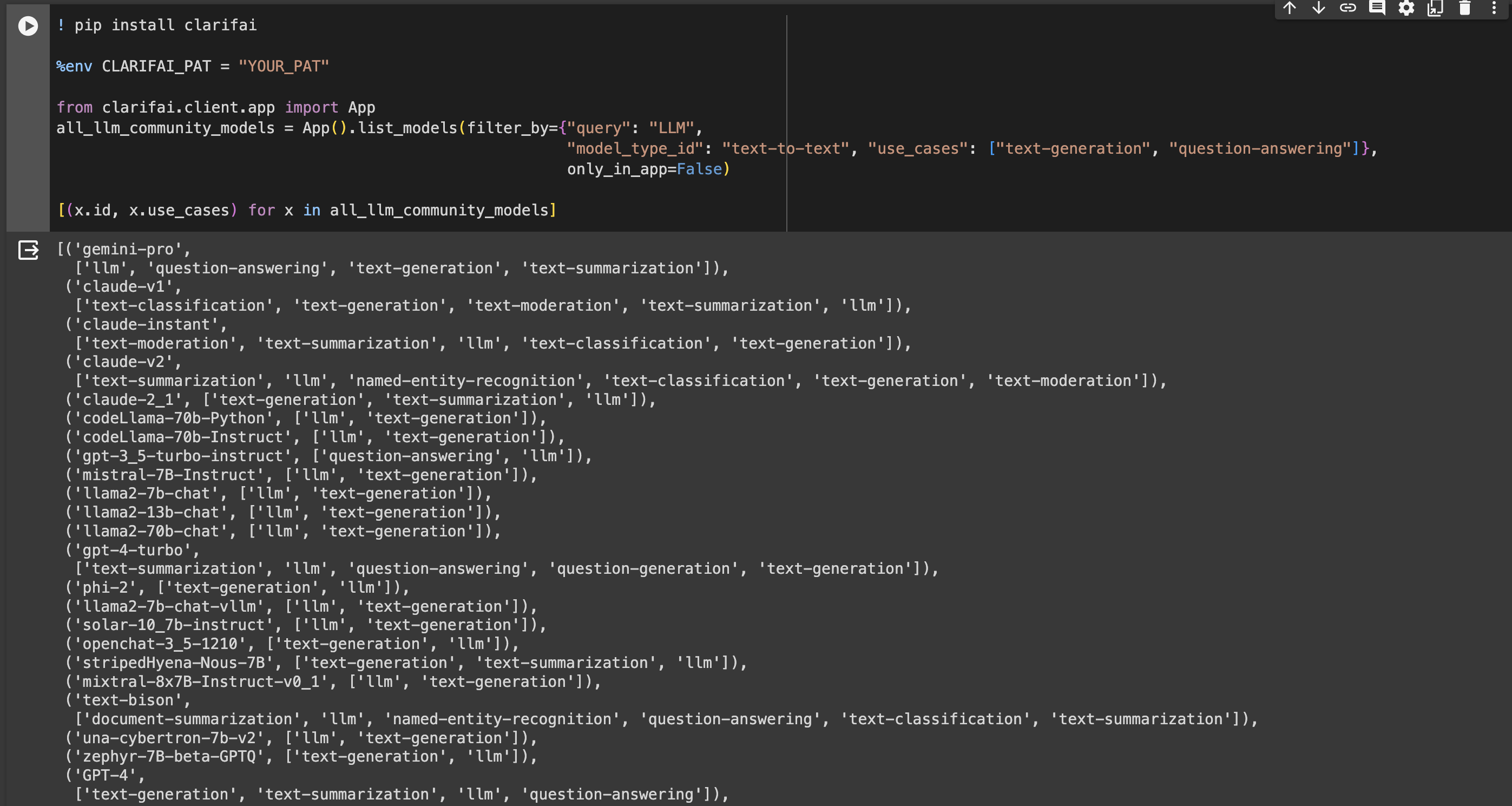

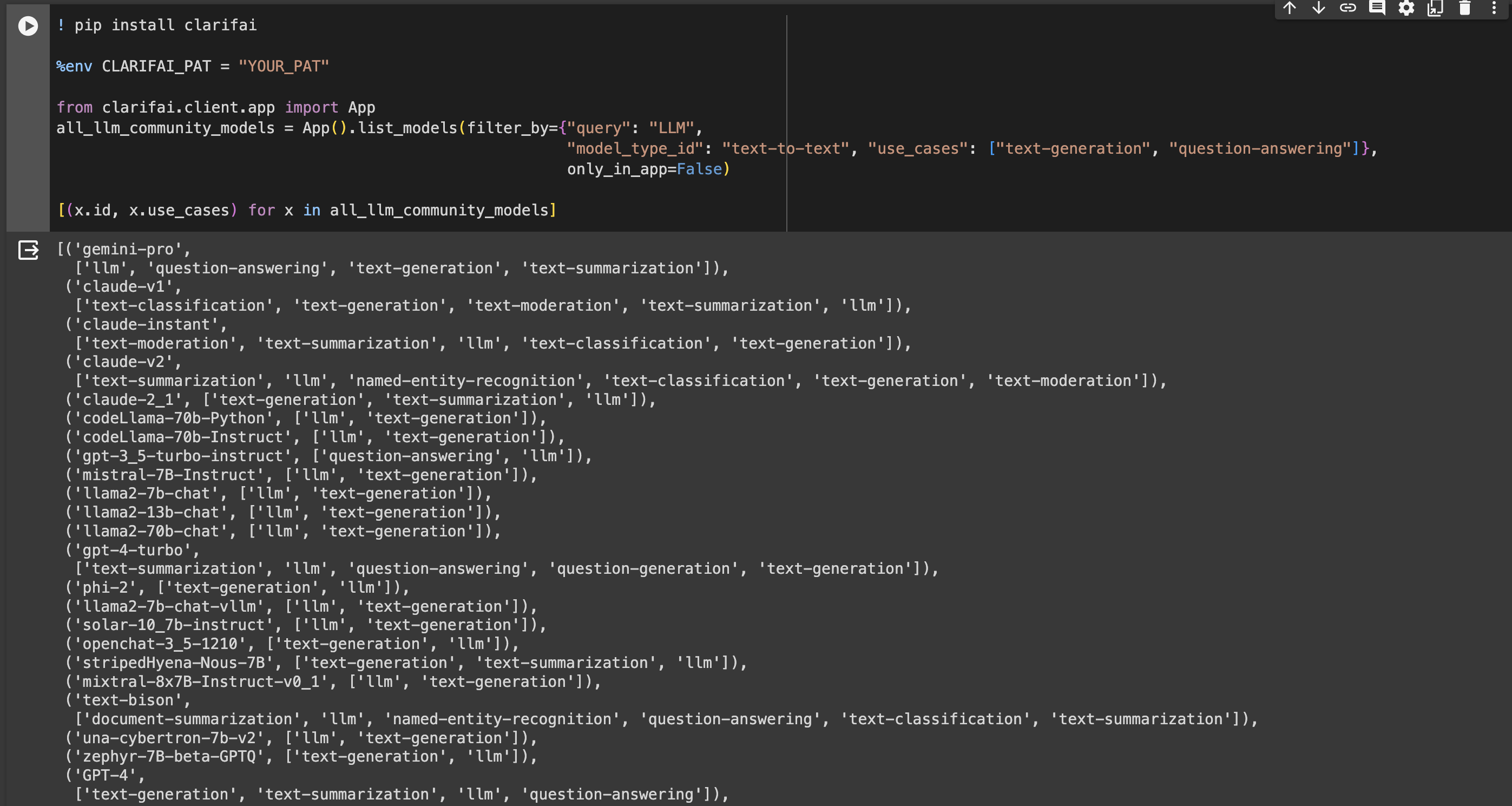

Improved the search behavior within the use_cases field

- Previously, the use_cases field within the ListModels feature was configured as an AND search, unlike other fields such as input_fields and output_fields. We improved the use_cases attribute to operate with an OR logic, just like the other fields. This adjustment broadens the scope of search results, accommodating scenarios where models may apply to diverse use cases.
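The difference between the two matching modes can be seen in a small local sketch. It filters an in-memory list rather than calling ListModels; the model records and the helper names `matches_all` and `matches_any` are made up for illustration.

```python
def matches_all(model_values, wanted):
    """AND search: the model must list every requested value."""
    return set(wanted) <= set(model_values)


def matches_any(model_values, wanted):
    """OR search: the model must list at least one requested value."""
    return bool(set(wanted) & set(model_values))


# Hypothetical model records
models = [
    {"id": "a", "use_cases": ["moderation"]},
    {"id": "b", "use_cases": ["moderation", "search"]},
    {"id": "c", "use_cases": ["captioning"]},
]
wanted = ["moderation", "search"]

and_hits = [m["id"] for m in models if matches_all(m["use_cases"], wanted)]
or_hits = [m["id"] for m in models if matches_any(m["use_cases"], wanted)]

print(and_hits)  # ['b']
print(or_hits)   # ['a', 'b']
```

With AND logic only model `b` qualifies, while OR logic also returns model `a`, which covers one of the two requested use cases.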

Changed the thumbnails for listing resources to use small versions of cover images

- Previously, the thumbnails for listing resources used large versions of cover images. We changed them to use the small versions, as is already done for other resources such as Apps, Models, Workflows, Modules, and Datasets. We also applied the change to the left sidebars.

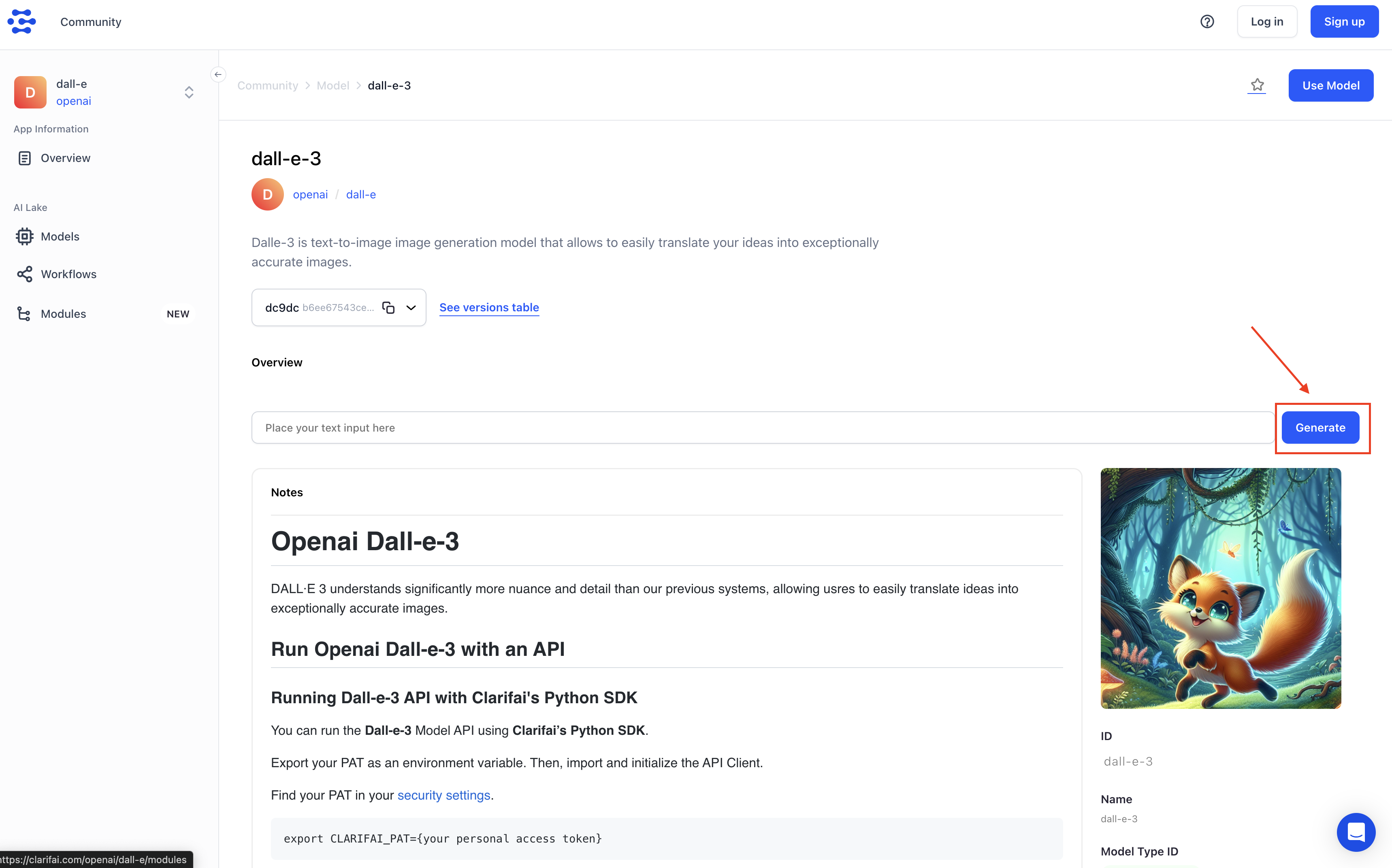

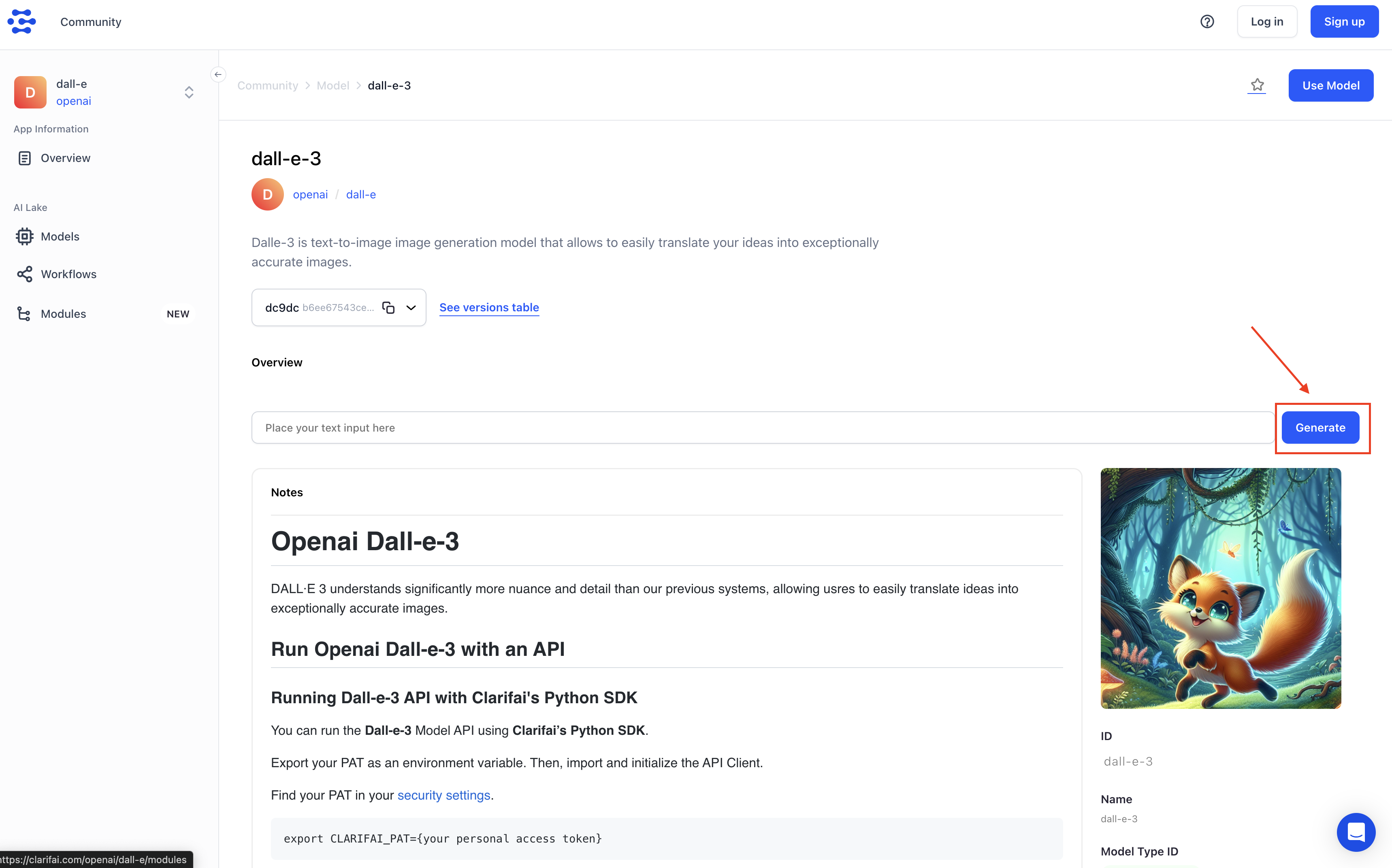

Improved the experience for non-logged-in users interacting with text-to-image models

- Clicking the “Generate” button now triggers a login/sign-up pop-up modal. This guides users not currently logged in through the necessary authentication steps, ensuring a smoother transition into utilizing the model's functionality.

Fixed an issue where a user could get added multiple times to the same organization

- We implemented safeguards against the unintended duplication of users within an organization. Previously, if a user clicked the "Accept" button on the organization invitation page multiple times, they could be registered more than once within the same organization. As a result, the user interface displayed multiple instances of the same organization.

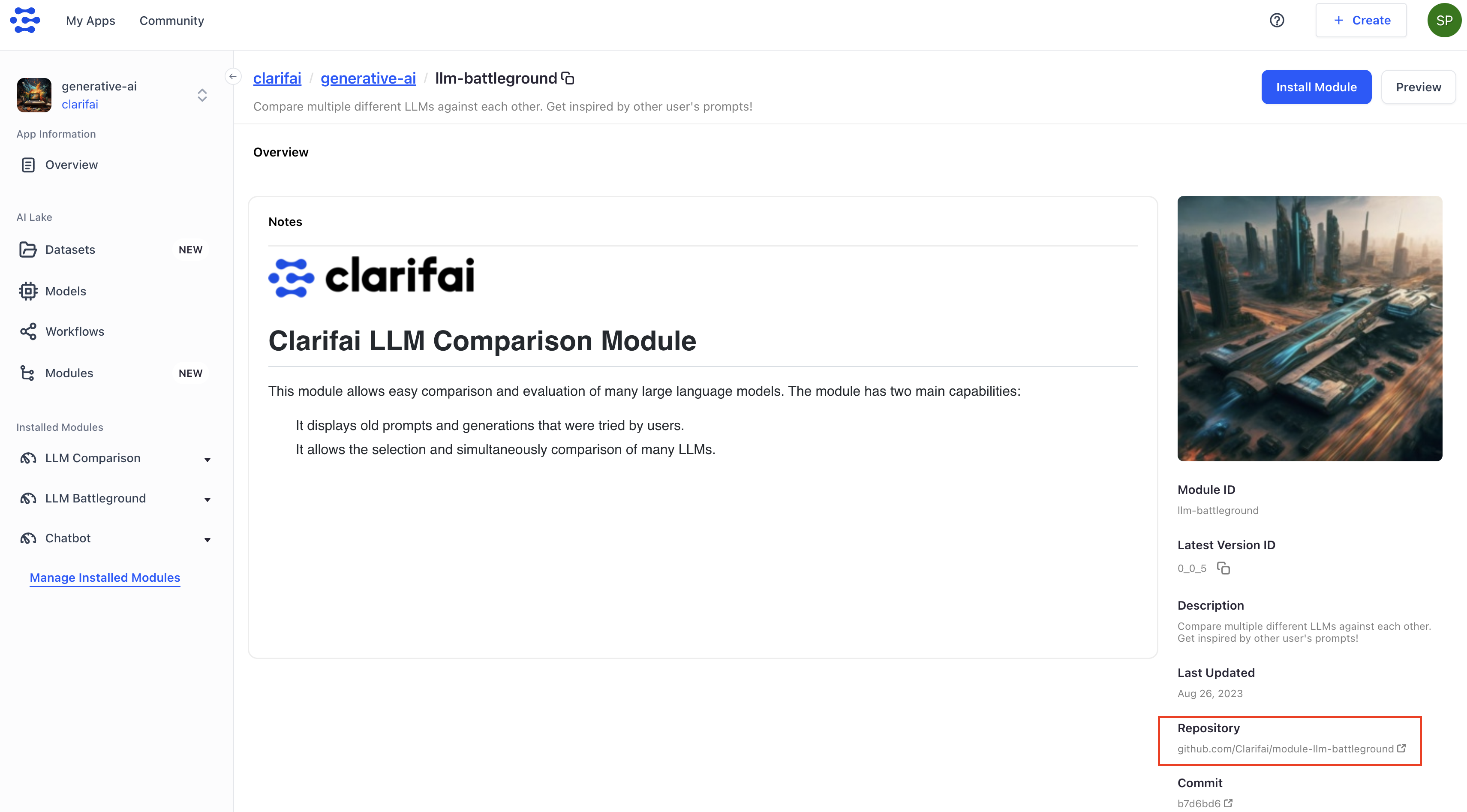
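One common way to guard against this kind of duplication is to make the accept action idempotent, so that repeated clicks have no further effect. The sketch below illustrates that idea only; the `Organization` class is hypothetical, and the blog does not describe how the actual safeguard is implemented.

```python
class Organization:
    def __init__(self, name):
        self.name = name
        self._member_ids = set()  # a set cannot hold the same user twice

    def accept_invitation(self, user_id):
        """Add the user once; return True only if membership changed."""
        if user_id in self._member_ids:
            return False
        self._member_ids.add(user_id)
        return True

    @property
    def members(self):
        return sorted(self._member_ids)


org = Organization("acme")
first = org.accept_invitation("user-1")
second = org.accept_invitation("user-1")  # repeated click on "Accept"
print(first, second, org.members)
```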

Improved the module installation process

- Previously, the pop-up modal for installing a module into an app still used deprecated app names. The modal now uses app IDs instead.

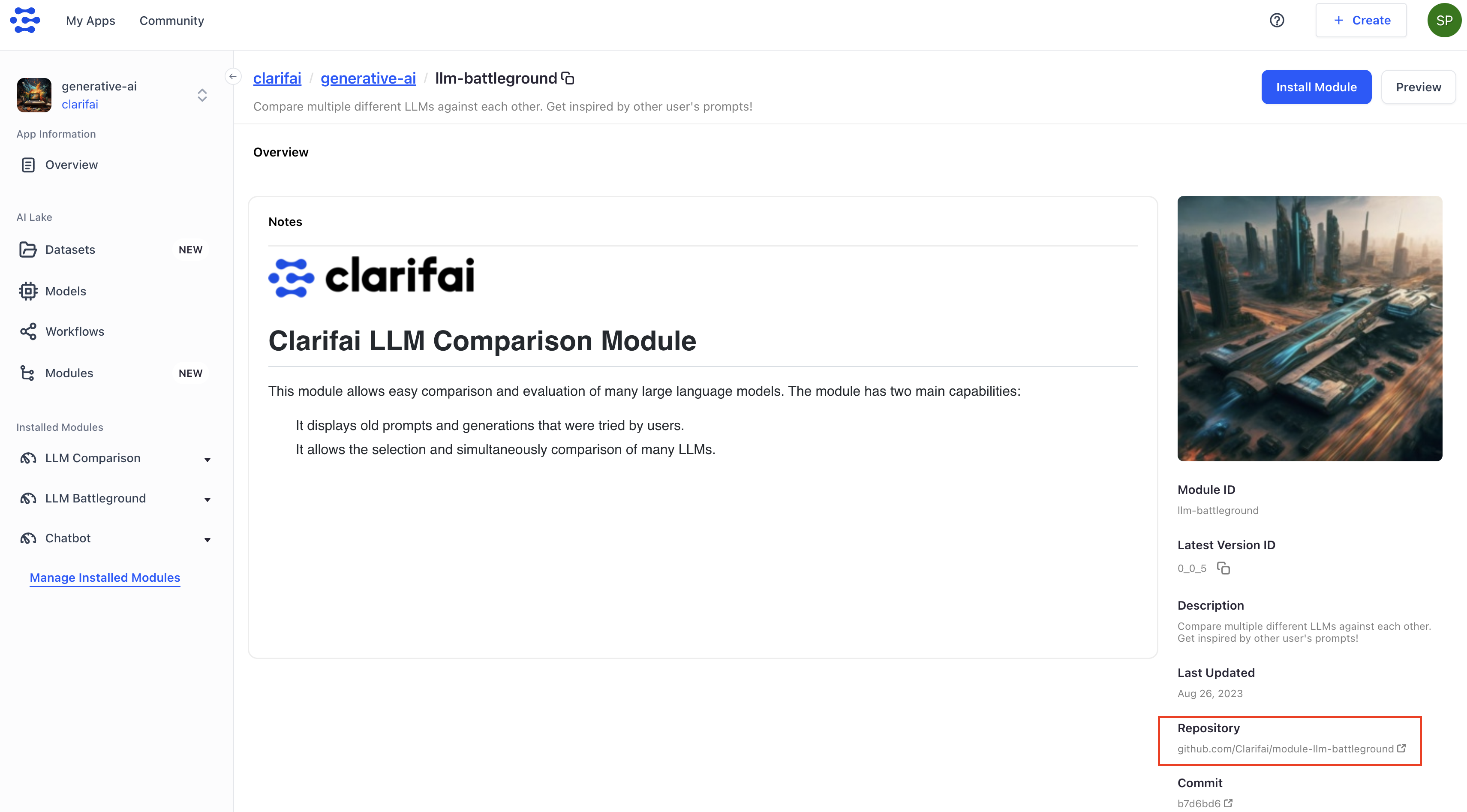

Improved the relevance of the link to GitHub on the module page

- Previously, a small GitHub button was at the top of any module’s overview page. We relocated it to the right-hand side, aligning it with other metadata such as description, thereby improving its clarity as a clickable link.
