Recently, as part of the New York Human Rights Watch Film Festival, I attended a screening of The Cleaners, a movie about how the big social and internet companies (Facebook, Google, and Twitter) moderate inappropriate content on their sites with teams of tens of thousands of human moderators in the Philippines.

These controversial, and often clandestine, teams are trained to review and remove inappropriate content. The film takes you behind the scenes to show the perspective of these workers, who are sworn to secrecy and bound by confidentiality agreements as part of their employment. The movie presents a balanced view of the pros, like improving safety and removing objectionable content, and the cons, which include the power these teams wield in deciding when and whether to remove political or newsworthy content, and the mental cost of reviewing enormous amounts of sometimes horrifying content every day.

I’ve worked my entire career in technology. I spent nearly seven years at Google, and I currently work for Clarifai, a computer vision company where one of the most important benefits of our technology is that it helps our customers moderate their content. However, this movie left me torn. I felt for the people who were repeatedly exposed to gruesome content: executions, child exploitation, terrorism, bullying, and other atrocities. On the other hand, the film highlighted members of these moderation teams expressing pride in the impact their work has in preventing users of social networks, billions of people (like you and me), from seeing explicit and upsetting content.

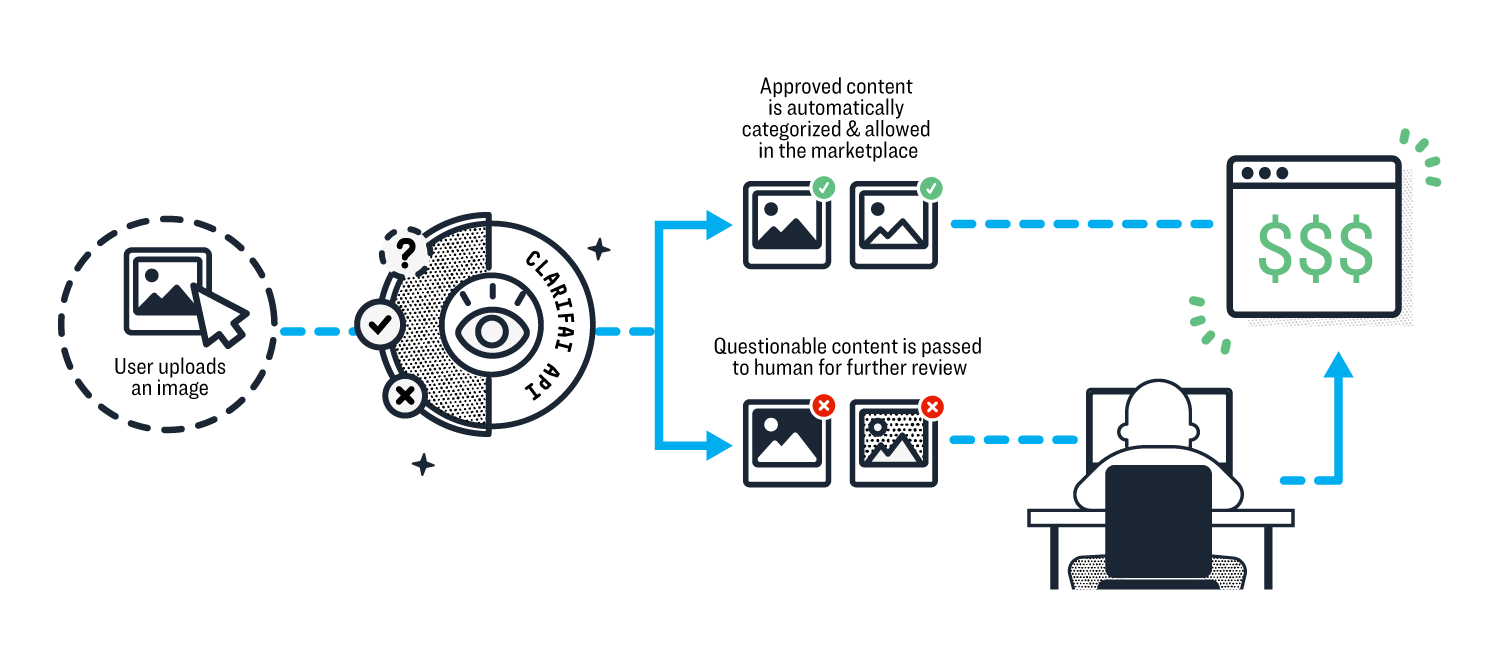

Moderation is important, not just for the big social networks but for any website that allows users to upload content. However, moderation does not have to be a task run solely through human labor. Many businesses already use technology to automate and improve this process today and, as a result, see huge operational advantages.

In The Cleaners, one moderator said “Computers can’t do what we do.” Computers CAN actually do this, but humans are still required for the edge cases where the model is not certain. This means that instead of reviewing 100% of the content, humans can review as little as 10% if the models are trained to handle the rest accurately. That reduces the amount of objectionable content moderators need to see and gives them a first pass at the reason a piece of content was flagged. Reducing human moderators’ exposure, both in overall volume and in the time spent on each piece of content, would certainly be a socially beneficial use of the technology. Furthermore, humans could focus on the content that requires a more nuanced approach, such as news and political pieces.
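To make the idea of humans handling only the uncertain cases concrete, here is a minimal sketch of confidence-based triage. The function names, the 0.10 and 0.90 thresholds, and the sample scores are all hypothetical, chosen only for illustration; they do not come from the film or from any particular product.

```python
from typing import Iterable

def triage(unsafe_probability: float, low: float = 0.10, high: float = 0.90) -> str:
    """Route one item based on a model's estimated probability that it is unsafe.

    The thresholds are illustrative assumptions, not recommended values.
    """
    if unsafe_probability >= high:
        return "auto_remove"    # model is confident the item is unsafe
    if unsafe_probability <= low:
        return "auto_approve"   # model is confident the item is safe
    return "human_review"       # uncertain edge case: send to a person

def human_review_share(scores: Iterable[float], low: float = 0.10, high: float = 0.90) -> float:
    """Fraction of items that still need a human moderator."""
    scores = list(scores)
    if not scores:
        return 0.0
    needs_review = sum(1 for s in scores if triage(s, low, high) == "human_review")
    return needs_review / len(scores)

# Made-up scores for ten uploads; only one falls inside the uncertain band.
sample_scores = [0.01, 0.02, 0.03, 0.05, 0.07, 0.50, 0.95, 0.97, 0.98, 0.99]
print(human_review_share(sample_scores))
```

In a sketch like this, widening the band between the two thresholds sends more content to people, and narrowing it sends less, so the thresholds are where a platform would trade moderator workload against the risk of automated mistakes.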

The Opportunity:

There is a great opportunity to use AI to combat human trafficking, stop the sale of illegal products online, and eliminate graphic or violent content. Artificial intelligence can quickly identify and remove objectionable content, reducing the amount of moderation required of human moderators. Beyond working more efficiently, those moderators would no longer have to subject themselves to a deluge of potentially emotionally and mentally damaging material. The moderators who took pride in the positive impact of their work would still be able to contribute, but at less cost to their well-being.

There are also benefits to consumers who search for products on marketplaces and users who consume news and content from social sites and communities. People expect that a certain level of safety will be maintained whether they’re buying products online or checking their social media feeds.

Here is an example of how AI works to improve operational efficiency for an online marketplace:

- Step 1: A seller uploads an image of a watch to sell on a marketplace.

- Step 2: The image is then passed through the API, which returns a set of predictions identifying what is in the picture (in this case, it is 99% certain the image shows a watch).

- Step 3: Specialized models trained to identify NSFW (not safe for work) content then predict whether the image is likely to contain inappropriate or unsafe content.

- Step 4: Because the technology is 1) confident that this is a watch and 2) reporting a very low probability that the image is unsafe, the listing can be posted automatically without the need for human oversight.
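The four steps above can be sketched as a simple decision rule. This is only an illustration: the `Predictions` structure, the `route_listing` function, and both thresholds are hypothetical, and the sketch assumes the listing declares a category ("watch") that can be compared with what the model sees. It does not call any real API; the prediction values are typed in by hand.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
MIN_CONCEPT_CONFIDENCE = 0.95
MAX_NSFW_PROBABILITY = 0.05

@dataclass
class Predictions:
    """Stand-in for what a vision API might return for one image (Steps 2 and 3)."""
    top_concept: str           # most likely object in the image
    concept_confidence: float  # model's confidence in that concept
    nsfw_probability: float    # estimated probability the image is unsafe

def route_listing(expected_concept: str, predictions: Predictions) -> str:
    """Step 4: auto-approve only when the image is recognized and judged safe."""
    recognized = (
        predictions.top_concept == expected_concept
        and predictions.concept_confidence >= MIN_CONCEPT_CONFIDENCE
    )
    safe = predictions.nsfw_probability <= MAX_NSFW_PROBABILITY
    return "auto_approve" if recognized and safe else "human_review"

# The watch from the example: 99% confident it is a watch, very unlikely to be unsafe.
watch = Predictions(top_concept="watch", concept_confidence=0.99, nsfw_probability=0.01)
print(route_listing("watch", watch))
```

Anything that fails either check falls back to a human moderator rather than being rejected outright, which matches the idea that people stay in the loop for the uncertain cases.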

AI moderation models are good enough at predicting what is safe and what is not that businesses gain huge operational efficiencies, such as being able to handle, for instance, more than 20 times the content that human moderators can handle manually.

Clearly this is good for consumers (they are far less likely to encounter anything inappropriate), good for the marketplace (businesses save money by investing less in human moderation), and good for the seller (their items will appear accurately and thus sell more efficiently).

As social media grows up, it’s clear that moderation will be key. However, as The Cleaners shows, relying solely on human effort for it is increasingly becoming an ethical issue (not to mention the large monetary investment). With AI, these platforms will be able to mitigate these issues and free up their workforce to focus on more nuanced and critical tasks.