DeepSeek-OCR is an open-weight OCR model built to extract structured text, tables and mathematical content from complex documents with high accuracy. It uses a hybrid vision encoder influenced by SAM and CLIP, paired with the DeepSeek-3B-MoE decoder for efficient and scalable text generation.

You can run DeepSeek-OCR directly on Clarifai.

Playground: Try the model in the Clarifai Playground and upload any document to see how it handles layout, tables and formulas.

API access: Use DeepSeek-OCR via API through Clarifai’s OpenAI-compatible endpoint.

In this blog, we will walk through how DeepSeek-OCR works, explore its benchmark performance and show you how to use DeepSeek-OCR via the API.

DeepSeek-OCR is a multi-modal model designed to convert complex images such as invoices, scientific papers, and handwritten notes into accurate, structured text.

Unlike traditional OCR systems that rely purely on convolutional networks for text detection and recognition, DeepSeek-OCR uses a transformer-based encoder-decoder architecture. This allows it to handle dense documents, tables, and mixed visual content more effectively while keeping GPU usage low.

Key features:

Processes images as vision tokens using a hybrid SAM + CLIP encoder.

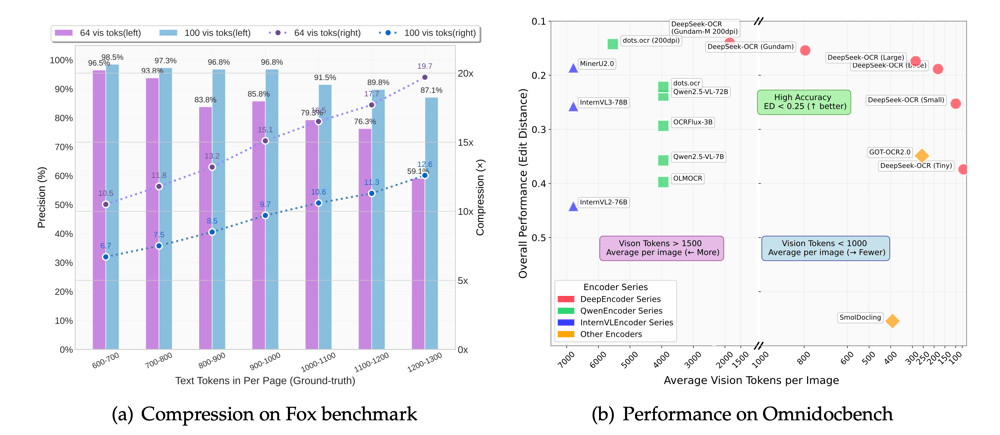

Compresses visual data by up to 10× with minimal accuracy loss.

Uses a 3B-parameter Mixture-of-Experts decoder, activating only 6 of 64 experts during inference for high efficiency.

Can process up to 200K pages per day on a single A100 GPU due to its optimized token compression and activation strategy.
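As a rough sanity check on the throughput figure, the short Python calculation below converts 200K pages per day into per-second terms. It assumes the GPU runs continuously for a full 24 hours, which the quoted figure does not state explicitly, so treat the result as an order-of-magnitude estimate.

```python
# Back-of-the-envelope conversion of the quoted throughput figure.
# Assumption: the GPU runs continuously for a full 24-hour day.
PAGES_PER_DAY = 200_000
SECONDS_PER_DAY = 24 * 60 * 60

pages_per_second = PAGES_PER_DAY / SECONDS_PER_DAY
seconds_per_page = SECONDS_PER_DAY / PAGES_PER_DAY

print(f"{pages_per_second:.2f} pages/s, {seconds_per_page:.3f} s/page")
# prints: 2.31 pages/s, 0.432 s/page
```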

You can access DeepSeek-OCR in two simple ways: through the Clarifai Playground or via the API.

The Playground provides a fast, interactive environment to test and explore model behavior. You can select the DeepSeek-OCR model directly from the community, upload an image such as an invoice, scanned document, or handwritten page, and add a relevant prompt describing what you want the model to extract or analyze. The output text is displayed in real time, allowing you to quickly verify accuracy and formatting.

Clarifai provides an OpenAI-compatible endpoint that allows you to call DeepSeek-OCR using the same Python or TypeScript client libraries you already use. Once you set your Personal Access Token (PAT) as an environment variable, you can call the model directly by specifying its URL.

Below are two ways to send an image input: from a local file or via an image URL.

Option 1: Using a Local Image File

This example reads a local file (e.g., document.jpeg), encodes it in base64, and sends it to the model for OCR extraction.
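A minimal Python sketch of the encoding step, under stated assumptions: the helper names `image_file_to_data_url` and `build_local_image_messages` are hypothetical, and the message shape follows the general OpenAI chat format for inline images (a base64 data URL inside an `image_url` content part). It uses only the standard library and does not send anything over the network.

```python
import base64
import mimetypes
from pathlib import Path


def image_file_to_data_url(path: str) -> str:
    """Read a local image and return it as a base64 data URL."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None:
        mime_type = "image/jpeg"  # fallback assumption for unknown extensions
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_local_image_messages(path: str, prompt: str) -> list[dict]:
    """Build an OpenAI-style chat message holding a prompt and an inline image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url",
                 "image_url": {"url": image_file_to_data_url(path)}},
            ],
        }
    ]
```

The returned list can then be passed as `messages` to the chat completions call of an OpenAI client that has been configured with Clarifai's endpoint and your PAT.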

Option 2: Using an Image URL

If your image is hosted online, you can directly pass its URL to the model.
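The sketch below shows one way this could look in Python. The base URL and model URL are assumptions based on Clarifai's usual conventions rather than values confirmed in this post, so check the model page before relying on them. The names `build_url_request`, `run_ocr`, and the `CLARIFAI_PAT` environment variable are likewise illustrative. The `openai` package is imported lazily, so the request builder can be inspected without it installed.

```python
import os

# Assumptions, not confirmed by this article: verify both values on the
# Clarifai model page before use.
CLARIFAI_OPENAI_BASE_URL = "https://api.clarifai.com/v2/ext/openai/v1"
DEEPSEEK_OCR_MODEL_URL = (
    "https://clarifai.com/deepseek-ai/deepseek-ocr/models/DeepSeek-OCR"
)


def build_url_request(image_url: str, prompt: str) -> dict:
    """Build keyword arguments for an OpenAI-style chat completion call."""
    return {
        "model": DEEPSEEK_OCR_MODEL_URL,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "temperature": 0,  # favors repeatable output
    }


def run_ocr(image_url: str, prompt: str) -> str:
    """Send the request. Needs `pip install openai` and CLARIFAI_PAT set."""
    from openai import OpenAI  # lazy import keeps the builder dependency-free

    client = OpenAI(
        base_url=CLARIFAI_OPENAI_BASE_URL,
        api_key=os.environ["CLARIFAI_PAT"],
    )
    response = client.chat.completions.create(
        **build_url_request(image_url, prompt)
    )
    return response.choices[0].message.content
```

Calling `run_ocr("https://example.com/invoice.png", "Extract all text as Markdown.")` would return the model's text output, assuming the placeholder URL is replaced with a real, publicly reachable image.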

You can use Clarifai’s OpenAI-compatible API with any TypeScript or JavaScript SDK. For example, the snippet below shows how you can use the Vercel AI SDK to access DeepSeek-OCR.

Option 1: Using a Local Image File

Option 2: Using an Image URL

Clarifai’s OpenAI-compatible API lets you access DeepSeek-OCR using any language or SDK that supports the OpenAI format. You can experiment in the Clarifai Playground or integrate it directly into your applications. Learn more about the OpenAI-compatible API in the documentation.

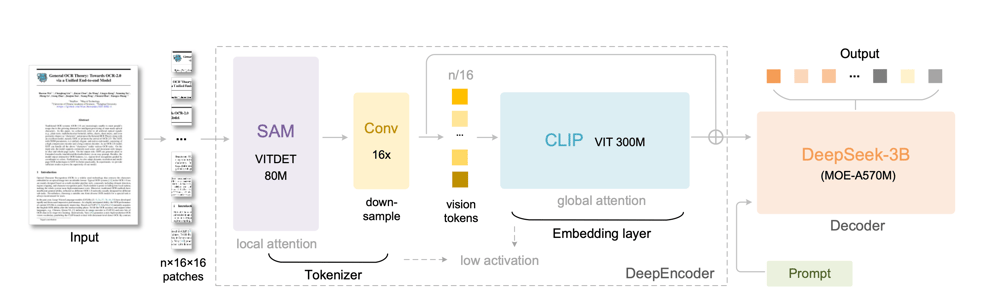

DeepSeek-OCR uses an end-to-end vision and language architecture designed for documents that contain dense text, tables, handwriting, formulas and multi-column layouts. Instead of following the traditional approach where text is first detected and then recognized, the model processes the entire page at once and generates structured text directly. This gives it much better consistency on complex PDFs and real-world scans.

Image Source: DeepSeek-OCR Research Paper

The DeepEncoder is a 380M-parameter vision backbone that transforms raw images into compact visual embeddings.

Patch Embedding: The input image is divided into 16×16 patches.

Local Attention (SAM - ViTDet):

SAM applies local attention to capture fine-grained features such as font style, handwriting, edges, and texture details within each region of the image. This helps preserve spatial precision at a local level.

Downsampling: The patch embeddings are downsampled 16× via convolution to reduce the total number of visual tokens and improve efficiency.

Global Attention (CLIP - ViT):

CLIP introduces global attention, enabling the model to understand document layout, structure, and semantic relationships across sections of the image.

Compact Visual Embeddings:

The encoder produces a sequence of vision tokens that is roughly 10× shorter than the text-token sequence needed to represent the same content, resulting in high compression and faster decoding.
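The token arithmetic behind the patching and downsampling steps can be sketched in a few lines of Python. This is a simplified model, not the encoder's actual code: it assumes image sides that are exact multiples of the patch size, no padding or tiling, and that the 16× factor applies to the total token count. The function name `vision_token_count` is hypothetical.

```python
def vision_token_count(width: int, height: int,
                       patch_size: int = 16, downsample: int = 16) -> int:
    """Estimate vision tokens after 16x16 patching and 16x token downsampling."""
    if width % patch_size or height % patch_size:
        raise ValueError("sides must be multiples of the patch size")
    patches = (width // patch_size) * (height // patch_size)
    return patches // downsample


for side in (512, 640, 1024, 1280):
    print(f"{side}x{side}: {vision_token_count(side, side)} vision tokens")
# prints:
# 512x512: 64 vision tokens
# 640x640: 100 vision tokens
# 1024x1024: 256 vision tokens
# 1280x1280: 400 vision tokens
```

For a 1024×1024 page, 4,096 patch embeddings shrink to 256 vision tokens before the global-attention stage, which keeps that stage inexpensive.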

The encoded visual tokens are passed to a Mixture-of-Experts Transformer Decoder, which converts them into readable text.

Expert Activation: 6 out of 64 experts are activated per token, along with 2 shared experts (about 570M active parameters).

Text Generation: Transformer layers decode the visual embeddings into structured text sequences, capturing plain text, formulas, tables, and layout information.

Efficiency and Scale: Although the total model size is 3B parameters, only a fraction is active during inference, providing 3B-scale capacity at the compute cost of fewer than 600M active parameters.
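To make sparse expert activation concrete, here is a generic top-k gating sketch in plain Python. It illustrates the general technique (softmax over router logits, keep the 6 highest of 64, renormalize) and is not DeepSeek's actual router, which may differ in normalization and load balancing. The 2 shared experts always run and are therefore not part of the selection. The function name `route_token` and the random logits are purely illustrative.

```python
import math
import random


def route_token(router_logits: list[float], top_k: int = 6) -> dict[int, float]:
    """Pick the top_k experts for one token and renormalize their gate weights."""
    # Softmax over all routed experts (shifted by the max for stability).
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep only the top_k most probable experts.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    kept = sum(probs[i] for i in chosen)
    return {i: probs[i] / kept for i in chosen}


random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(64)]  # one token, 64 routed experts
gates = route_token(logits)
print(len(gates), "of", len(logits), "routed experts active")
# prints: 6 of 64 routed experts active
```

Only the selected experts' feed-forward blocks run for that token, which is why the active parameter count stays far below the total.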

The full pipeline follows a simple flow. The image is split into patches. These patches are processed with local and global attention. The resulting embeddings are compressed into a compact sequence of visual tokens. These tokens are then decoded into structured text using sparse expert activation. There are no separate detection boxes, heuristics or post-processing stages that traditional OCR systems usually require. Everything is learned as a unified system, which makes the model more robust on noisy scans and documents that mix many different content types.

DeepSeek-OCR performs well because each component supports the others. Local and global attention give the encoder both fine visual detail and a clear understanding of page-level structure. Compression keeps the number of tokens small enough for fast inference. The Mixture of Experts decoder provides high capacity without the cost of running a dense model. And the structured output format makes it possible to reconstruct tables, formulas and multi-column layouts precisely.

DeepSeek-OCR delivers high-performance document understanding through its vision-token compression and MoE decoding architecture. To get the most from it in your workflows, focus on a combination of solid baseline configuration and smart preprocessing.

For deterministic, repeatable output when calling the API or inference endpoint, set temperature = 0.

Follow the model card or repo for any recommended max_tokens, batch size or chunking settings (the official repo indicates token-compression ratios but does not publish a fixed “8,000 token window” recommendation).

Although the formal documentation does not publish extensive image-preprocessing guidelines, good practice suggests the following steps can improve OCR results:

Deskew and straighten scanned pages (e.g., using Hough-transform or OpenCV) to reduce tilted text and improve recognition.

Binarize images (convert to high-contrast black-and-white) where appropriate, especially on older scans with faded text, to make character boundaries more distinct.

If input resolution is very low, a moderate upscale (1.5× to 2×) may help readability; avoid overly increasing DPI (beyond ~300-600) because processing cost rises and gains taper off.

Resize very large images (for example, >2000 px on the longest side) to a more manageable size to avoid memory issues during encoding and compression.
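The sizing rules above can be sketched as a small planning helper. The 2000 px ceiling and the 2× upscale cap come from the guidance above. The `min_side` threshold of 1000 px for what counts as "very low" resolution is an assumption made for illustration, and `plan_resize` is a hypothetical name. The function only computes target dimensions and does not touch pixel data.

```python
def plan_resize(width: int, height: int, max_side: int = 2000,
                min_side: int = 1000, max_upscale: float = 2.0) -> tuple[int, int]:
    """Return a target (width, height) that preserves the aspect ratio."""
    longest = max(width, height)
    if longest > max_side:
        scale = max_side / longest  # shrink oversized scans
    elif longest < min_side:
        # Moderate upscale only; min_side is an illustrative threshold.
        scale = min(max_upscale, min_side / longest)
    else:
        scale = 1.0  # already in a reasonable range
    return round(width * scale), round(height * scale)


print(plan_resize(4000, 3000))  # (2000, 1500)
print(plan_resize(1500, 1000))  # (1500, 1000)
print(plan_resize(400, 300))    # (800, 600)
```

The resulting size can be handed to an image library of your choice, for example Pillow's `Image.resize` or OpenCV's `cv2.resize`, before the image is sent to the model.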

If your document set uses unusual fonts, heavy handwriting, multiple languages or non-standard layouts (for example, engineering diagrams or formula-rich scientific papers), consider fine-tuning on a representative dataset. While the public materials do not publish exact fine-tuning gains, empirical evidence from practical guides suggests that significant improvement is possible.

In your fine-tuning dataset, include samples that reflect your real-world deployment (handwriting, low quality scans, mixed language pages) so the model adapts to your domain rather than the general training set.

The model uses visual token compression, which significantly reduces token count compared to text-only approaches. This makes it efficient for processing large documents or long multi-page files while keeping compute costs low.

It preserves complex layout structures such as tables, multi-column pages, handwritten notes and embedded formulas, which many traditional OCR systems fail to reconstruct reliably.

The architecture supports very high throughput. A single GPU can process large document batches at scale, making the model suitable for enterprise ingestion pipelines and automated archival systems.

DeepSeek-OCR is open-weight, which gives teams full flexibility to integrate, customize and fine-tune the model in their own environments.

The model outputs structured content that can be easily converted into JSON, Markdown or other downstream-friendly formats, helping automate data extraction, search and indexing workflows.
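As an illustration of the last point, the sketch below converts a simple pipe-delimited Markdown table into JSON records using only the standard library. It assumes the model was prompted to return tables as Markdown. The sample table is made-up data, not real model output, and `markdown_table_to_records` is a hypothetical helper that handles only plain tables without escaped pipes or merged cells.

```python
import json


def markdown_table_to_records(markdown: str) -> list[dict[str, str]]:
    """Convert a simple pipe-delimited Markdown table into a list of row dicts."""
    rows = []
    for line in markdown.strip().splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # ignore text outside the table
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        # Skip the header separator row, e.g. | --- | :---: |
        if cells and all(cell and set(cell) <= set("-: ") for cell in cells):
            continue
        rows.append(cells)
    if not rows:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


# Hypothetical sample, standing in for model output.
sample_output = """
| Item | Qty | Price |
| --- | --- | --- |
| Widget | 2 | 9.99 |
| Gadget | 1 | 24.50 |
"""
print(json.dumps(markdown_table_to_records(sample_output), indent=2))
```

Values are kept as strings on purpose; converting quantities or prices to numbers is left to downstream validation, where locale and currency rules are known.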

Invoices and receipts: Extract line items, totals, vendor information and table regions from scanned receipts or invoices while retaining structure.

Forms and identity documents: Capture field values, checkboxes, signatures and structured sections from onboarding forms, KYC files or scanned IDs.

Scientific papers and technical reports: Handle PDFs with formulas, equations, figures and multi-column layouts without losing document structure.

Mobile document capture: Process photos of documents taken in varied environments, including skewed or low-quality captures from field operations.

Archive digitization and compliance workflows: Convert large collections of historical documents, regulatory filings or legal paperwork into searchable structured formats with high throughput and consistent accuracy.

DeepSeek-OCR is more than a breakthrough in document understanding. It redefines how multimodal models process visual information by combining SAM’s fine-grained visual precision, CLIP’s global layout reasoning, and a Mixture-of-Experts decoder for efficient text generation. Through Clarifai, you can experiment with DeepSeek-OCR in the Playground or integrate it directly via the OpenAI-compatible API.

© 2026 Clarifai, Inc.