AI in 2026 is shifting from raw text generators to agents that act and reason. Experts predict a focus on sustained reasoning and multi-step planning in AI agents. In practice, this means LLMs must think before they speak, breaking tasks into steps and verifying logic before outputting answers. Indeed, recent analyses argue that 2026 will be defined by reasoning-first LLMs: models that intentionally use internal deliberation loops to improve correctness. These models will power autonomous agents, self-debugging code assistants, strategic planners, and more.

At the same time, real-world AI deployment now demands rigor: “the question is no longer ‘Can AI do this?’ but ‘How well, at what cost, and for whom?’”. Thus, open models that deliver high-quality reasoning and practical efficiency are critical.

Reasoning-centric LLMs matter because many emerging applications, from advanced QA and coding to AI-driven research, require multi-turn logical chains. For example, agentic workflows rely on models that can plan and verify steps over long contexts. Benchmark results from 2025 show that specialized reasoning models now rival proprietary systems on math, logic, and tool-using tasks. In short, reasoning LLMs are the engines behind next-generation AI agents and decision-makers.

In this blog, we will explore the top 10 open-source reasoning LLMs of 2026, their benchmark performance, architectural innovations, and deployment strategies.

Reasoning LLMs are models tuned or designed to excel at multi-step, logic-driven tasks (puzzles, advanced math, iterative problem-solving) rather than one-shot Q&A. They typically generate intermediate steps or thoughts in their outputs.

For instance, answering “If a train goes 60 mph for 3 hours, how far does it travel?” requires computing distance = speed × time (60 × 3 = 180 miles) before answering, a simple reasoning task. A true reasoning model would explicitly include that computation step in its response. More complex tasks demand longer chains of thought. In practice, reasoning LLMs often have a thinking mode: either they output their chain-of-thought as text, or they run hidden iterations of inference internally.
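When a model emits its chain-of-thought as text, applications usually separate that reasoning from the final answer before showing it to users. Here is a minimal Python sketch, assuming the reasoning is wrapped in `<think>...</think>` tags (a convention several open models use, though not all of them), applied to the train example. The function name `split_reasoning` is our own.

```python
import re

# Matches one <think>...</think> block; DOTALL lets "." span newlines.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> tuple[str, str]:
    """Split raw model output into (reasoning, final_answer).

    Assumes the chain-of-thought is wrapped in <think> tags; models that use
    a different output format need a different parser.
    """
    match = THINK_RE.search(output)
    if match is None:
        return "", output.strip()  # no visible reasoning: everything is the answer
    reasoning = match.group(1).strip()
    answer = (output[:match.start()] + output[match.end():]).strip()
    return reasoning, answer


raw = (
    "<think>distance = speed * time = 60 mph * 3 h = 180 miles</think>\n"
    "The train travels 180 miles."
)
reasoning, answer = split_reasoning(raw)
print(reasoning)  # distance = speed * time = 60 mph * 3 h = 180 miles
print(answer)     # The train travels 180 miles.
```

If a model's chat template already inserts the opening `<think>` tag, the raw completion may contain only the closing `</think>`; in that case, splitting on the closing tag alone is the safer choice.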

Reasoning models shine on puzzles, math proofs, and coding challenges, but not every task needs one: simpler tasks like translation or trivia don’t, and using a heavy reasoning model everywhere can be wasteful, a failure mode often described as “overthinking.” The key is matching tools to tasks. For advanced agentic and STEM applications, however, these reasoning-specialist LLMs are essential.
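One simple way to match tools to tasks is a router that sits in front of two models. The sketch below is purely illustrative. The model identifiers, the keyword list, and `pick_model` are hypothetical, and a production router would more likely use a trained classifier or explicit task metadata than substring matching.

```python
# Hypothetical model identifiers; substitute the models you actually deploy.
REASONING_MODEL = "reasoning-model"
FAST_MODEL = "fast-model"

# Illustrative keywords that hint at a multi-step task (crude substring matching).
MULTI_STEP_HINTS = ("prove", "debug", "plan", "step by step", "optimize", "derive")


def pick_model(task: str, needs_tools: bool = False) -> str:
    """Send multi-step or tool-using tasks to the reasoning model, the rest to the fast one."""
    text = task.lower()
    if needs_tools or any(hint in text for hint in MULTI_STEP_HINTS):
        return REASONING_MODEL
    return FAST_MODEL


print(pick_model("Translate 'good morning' into French"))        # fast-model
print(pick_model("Debug this failing unit test and plan a fix"))  # reasoning-model
```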

Reasoning LLMs often employ specialized architectures and training:

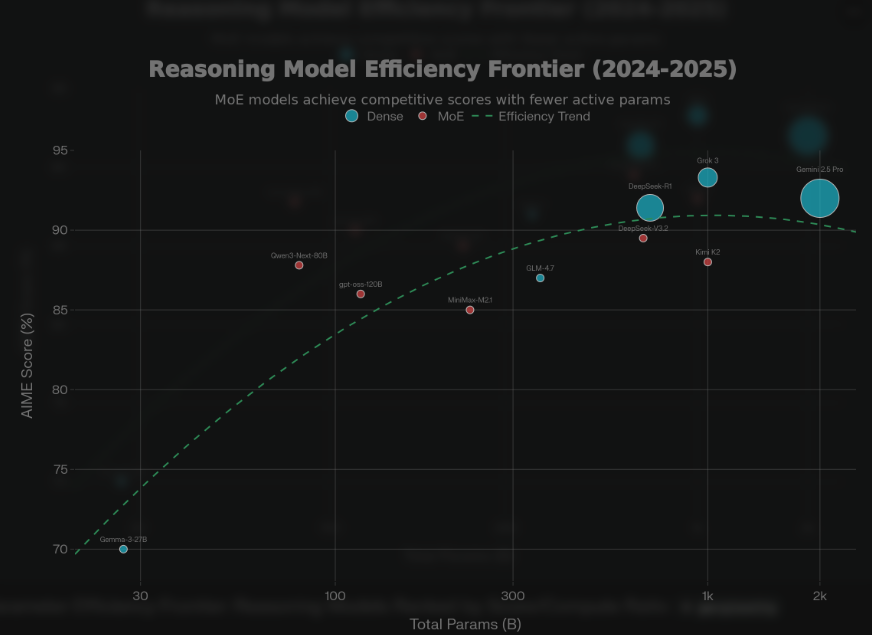

Collectively, these patterns (MoE scaling, huge contexts, chain-of-thought training, and careful tuning) define today’s reasoning LLM architectures.

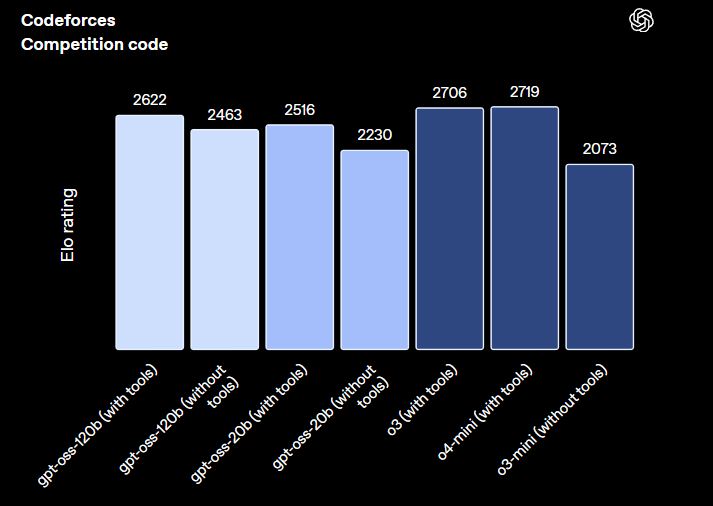

GPT-OSS-120B is a production-ready open-weight model released in 2025. It uses a Mixture-of-Experts (MoE) design with 117B total / 5.1B active parameters.

GPT-OSS-120B achieves near-parity with OpenAI’s o4-mini on core reasoning benchmarks, while running on a single 80GB GPU. It also outperforms other open models of similar size on reasoning and tool use.

It also comes in a 20B version optimized for efficiency: the 20B model matches o3-mini and can run with just 16GB of memory, making it ideal for local or edge use. Both models expose their chain-of-thought (in the analysis channel of OpenAI’s harmony response format rather than in <think> tags) and support full tool integration, such as function calling. They follow instructions well and are fully Apache-2.0 licensed.

Key specs:

| Variant | Total Params | Active Params | Min VRAM (quantized) | Target Hardware | Latency Profile |
|---|---|---|---|---|---|
| gpt-oss-120B | 117B | 5.1B | 80GB | 1x H100/A100 80GB | 180-220 tokens/s |
| gpt-oss-20B | 21B | 3.6B | 16GB | RTX 4070/4060 Ti | 45-55 tokens/s |

Optimized for latency
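Why does a 117B-parameter model fit on one 80GB GPU? Here is a back-of-the-envelope sketch of weight memory. It assumes roughly 4 bits per parameter after quantization, which is an assumed figure, since real checkpoints may mix precisions. It also ignores KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (10**9 bytes).

    Ignores KV cache, activations, and runtime overhead, which all add to the real footprint.
    """
    return total_params * bits_per_param / 8 / 1e9


# Parameter counts come from the table above; the bit widths are assumptions.
for name, params in [("gpt-oss-120B", 117e9), ("gpt-oss-20B", 21e9)]:
    bf16 = weight_memory_gb(params, 16)
    int4 = weight_memory_gb(params, 4)
    print(f"{name}: ~{bf16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

At 4 bits, the 120B variant’s weights come to about 58.5 GB, leaving headroom on an 80GB card, and the 20B variant needs about 10.5 GB, within a 16GB budget. At 16 bits, neither would fit its target hardware.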

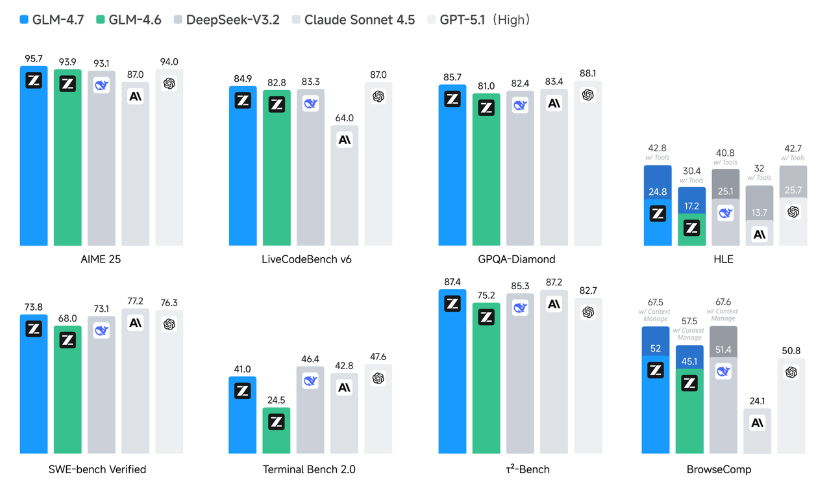

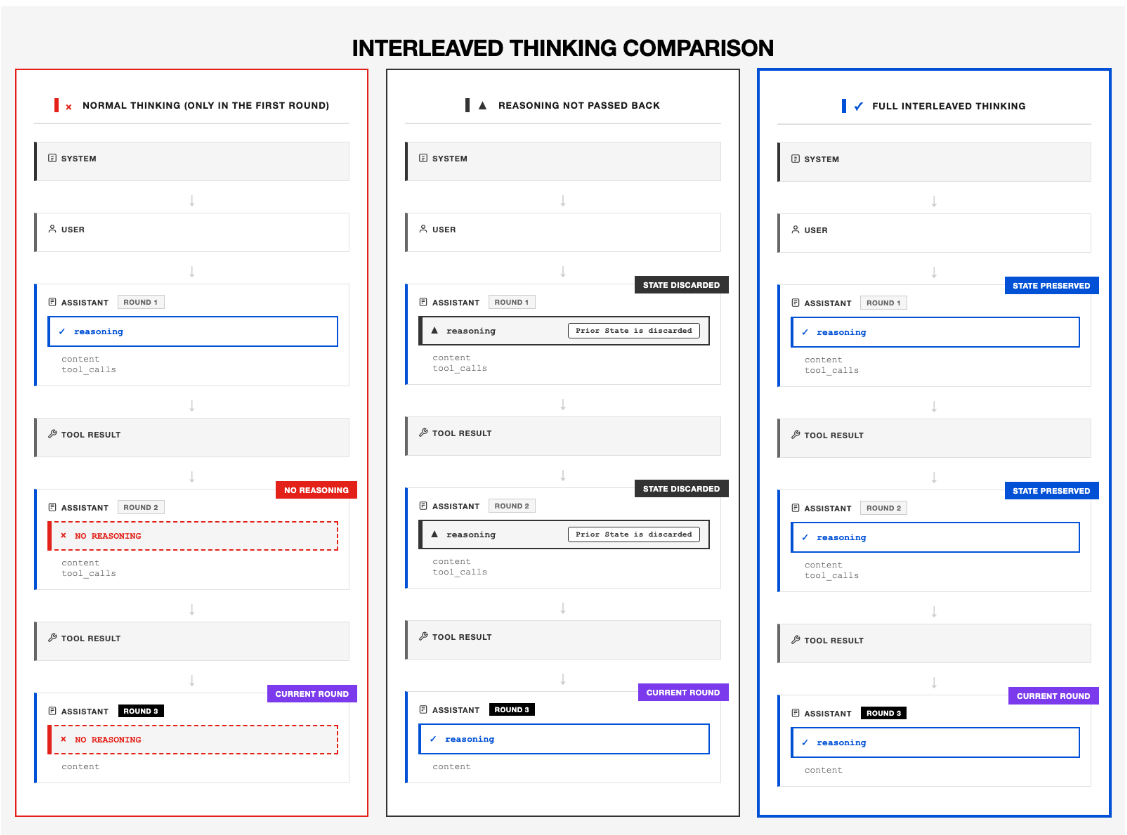

GLM-4.7 is a 355B-parameter open model with task-oriented reasoning enhancements. It was designed not just for Q&A but for end-to-end agentic coding and problem-solving. GLM-4.7 introduces “think-before-acting” and multi-turn reasoning controls to stabilize complex tasks. For example, it implements “Interleaved Reasoning”, meaning it performs a chain-of-thought before every tool call or response. It also has “Retention-Based” and “Round-Level” reasoning modes to keep or skip inner monologue as needed. These features let it adaptively trade latency for accuracy.
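The two reasoning-control modes come down to what goes into the model’s context on each turn. Here is a rough Python sketch of our reading of them. A retention-style mode keeps earlier turns’ reasoning in the history, and a round-level switch decides per turn whether to think at all. Zhipu’s actual API is not described here, so `Turn`, `build_context`, `should_think`, and the `reasoning` message field are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str            # "user" or "assistant"
    content: str         # visible text
    reasoning: str = ""  # hidden chain-of-thought (assistant turns only)


def build_context(history: list[Turn], retain_reasoning: bool) -> list[dict]:
    """Convert history into chat messages, optionally keeping earlier reasoning.

    retain_reasoning=True approximates a retention-style mode (prior thinking stays
    in context); False drops it to save tokens. The "reasoning" key is hypothetical.
    """
    messages = []
    for turn in history:
        msg = {"role": turn.role, "content": turn.content}
        if retain_reasoning and turn.reasoning:
            msg["reasoning"] = turn.reasoning
        messages.append(msg)
    return messages


def should_think(request: str, max_simple_words: int = 8) -> bool:
    """Round-level switch: skip thinking for short requests (crude length heuristic)."""
    return len(request.split()) > max_simple_words


history = [
    Turn("user", "Refactor utils.py"),
    Turn("assistant", "Done.", reasoning="Split parse() into two helpers..."),
]
print(build_context(history, retain_reasoning=True)[1])
print(build_context(history, retain_reasoning=False)[1])
print(should_think("Thanks!"))  # False
```

Keeping reasoning costs tokens on every later turn but lets the model build on its earlier analysis, which is the latency-for-accuracy trade-off described above.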

Performance-wise, GLM-4.7 leads open-source models across reasoning, coding, and agent tasks. On the Humanity’s Last Exam (HLE) benchmark with tool use, it scores ~42.8%, a significant improvement over GLM-4.6 and competitive with other high-performing open models. In coding, GLM-4.7 achieves ~84.9% on LiveCodeBench v6 and ~73.8% on SWE-Bench Verified, surpassing earlier GLM releases.

The model also demonstrates robust agent capability on benchmarks such as BrowseComp and τ²‑Bench, showcasing multi-step reasoning and tool integration. Together, these results reflect GLM-4.7’s broad capability across logic, coding, and agent workflows, in an open-weight model released under the MIT license.

Key Specs

Strengths

Weaknesses

Kimi K2 Thinking is a trillion-parameter Mixture-of-Experts model designed specifically for deep reasoning and tool use. It has approximately 1 trillion total parameters but activates only 32 billion per token, routing among 384 experts. The model supports a native context window of 256K tokens, which extends to 1 million tokens using YaRN. Kimi K2 Thinking ships with native INT4 weights produced through quantization-aware training, which Moonshot reports delivers up to 2x faster inference.
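INT4 weights store each value in 4 bits, plus one shared scale per group of weights. Quantization-aware training typically runs a quantize-dequantize round trip like the one below in the forward pass, so the model learns weights that survive the rounding. This sketch assumes symmetric per-group quantization with a group size of 32. Moonshot’s exact recipe is not described here, and the gradient path (usually a straight-through estimator) is omitted.

```python
import numpy as np


def fake_quant_int4(w, group_size=32):
    """Symmetric per-group INT4 quantize/dequantize round trip.

    w.size must be divisible by group_size. Each group shares one floating-point
    scale, and values are stored as signed 4-bit integers in [-8, 7].
    """
    groups = w.reshape(-1, group_size)
    scale = np.abs(groups).max(axis=1, keepdims=True) / 7.0
    scale[scale == 0] = 1.0                       # all-zero groups: any scale works
    q = np.clip(np.round(groups / scale), -8, 7)  # integer codes that fit in 4 bits
    dequant = (q * scale).reshape(w.shape)        # what the matmul actually sees
    return dequant, q.astype(np.int8)


rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=(256, 256))
w_hat, codes = fake_quant_int4(w)
print("max abs error:", np.abs(w_hat - w).max())
print("16-bit bytes:", w.size * 2, "| 4-bit bytes (excluding scales):", w.size // 2)
```

The 4x storage reduction relative to 16-bit weights is what shrinks memory traffic during decoding. Rounding error per weight is bounded by half of its group’s scale.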

The architecture is fully agentic and always thinks first. According to the model card, Kimi K2 Thinking supports only thinking mode, in which the system prompt automatically inserts a <think> tag. Every output includes internal reasoning content by default.

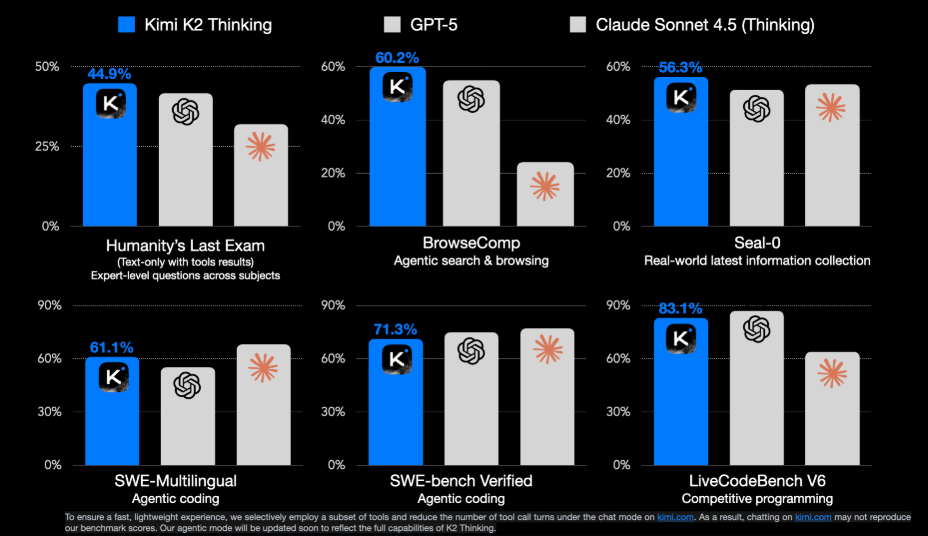

Kimi K2 Thinking leads across the shown benchmarks, scoring 44.9% on Humanity’s Last Exam, 60.2% on BrowseComp, and 56.3% on Seal-0 for real-world information collection. It also performs strongly in agentic coding and multilingual tasks, achieving 61.1% on SWE-Multilingual, 71.3% on SWE-bench Verified, and 83.1% on LiveCodeBench V6.

Overall, these results show Kimi K2 Thinking matching or outperforming GPT-5 and Claude Sonnet 4.5 on several reasoning and agentic evaluations, though it still trails both on SWE-bench Verified.

Key Specs

Strengths

Weaknesses

MiniMax-M2.1 is another agentic LLM geared toward tool-interactive reasoning. It has 230B total parameters with only 10B activated per token, a sparse Mixture-of-Experts design.

The model supports interleaved reasoning and action, allowing it to reason, call tools, and react to observations across extended agent loops. This makes it well-suited for tasks involving long sequences of actions, such as web navigation, multi-file coding, or structured research tasks.
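The reason-act-observe loop described above can be sketched without any model server. In this illustrative Python version, `fake_model` is a scripted stand-in for the LLM, and `TOOLS` holds toy functions. None of these names come from MiniMax’s API. A real deployment would parse tool calls from the model’s output, for example through an inference engine’s function-calling support.

```python
from typing import Callable


# Scripted stand-in for the LLM so the loop runs offline. Each step returns
# (thought, action, argument); the action "finish" ends the loop. Hypothetical.
def fake_model(observations: list[str]) -> tuple[str, str, str]:
    if not observations:
        return ("I need the file list first.", "list_files", ".")
    if len(observations) == 1:
        return ("main.py looks relevant; read it.", "read_file", "main.py")
    return ("I have enough information.", "finish", "main.py defines run()")


TOOLS: dict[str, Callable[[str], str]] = {
    "list_files": lambda path: "main.py, README.md",
    "read_file": lambda name: "def run(): ...",
}


def run_agent(model, tools, max_steps: int = 5) -> tuple[str, list[str]]:
    """Interleave reasoning and tool calls: think, act, observe, repeat."""
    observations: list[str] = []
    trace: list[str] = []
    for _ in range(max_steps):
        thought, action, arg = model(observations)
        trace.append(f"think: {thought}")  # reasoning precedes every action
        if action == "finish":
            return arg, trace
        result = tools[action](arg)
        trace.append(f"{action}({arg}) -> {result}")
        observations.append(result)  # the next step reacts to this observation
    return "step limit reached", trace


answer, trace = run_agent(fake_model, TOOLS)
print(answer)  # main.py defines run()
```

The `max_steps` cap is a common safeguard in long agent loops, so a model that never emits a finishing action cannot run forever.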

MiniMax reports strong internal results on agent benchmarks such as SWE-Bench, BrowseComp, and xBench. In practice, M2.1 is often paired with inference engines like vLLM to support function calling and multi-turn agent execution.

DeepSeek-R1-Distill-Qwen3-8B represents one of the most impressive achievements in efficient reasoning models. Released in May 2025 as part of the DeepSeek-R1-0528 update, this 8-billion parameter model demonstrates that advanced reasoning capabilities can be successfully distilled from massive models into compact, accessible formats without significant performance degradation.

The model was created by distilling chain-of-thought reasoning patterns from the full 671B parameter DeepSeek-R1-0528 model and applying them to fine-tune Alibaba's Qwen3-8B base model. This distillation process used approximately 800,000 high-quality reasoning samples generated by the full R1 model, focusing on mathematical problem-solving, logical inference, and structured reasoning tasks. The result is a model that achieves state-of-the-art performance among 8B-class models while requiring only a single GPU to run.

Performance-wise, DeepSeek-R1-Distill-Qwen3-8B delivers results that defy its compact size. It outperforms Google's Gemini 2.5 Flash on AIME 2025 mathematical reasoning tasks and nearly matches Microsoft's Phi 4 reasoning model on HMMT benchmarks. Perhaps most remarkably, this 8B model matches the performance of Qwen3-235B-Thinking, a 235B-parameter model, on certain reasoning tasks. Its teacher also improved substantially with the update: the full R1-0528 model's accuracy on AIME 2025 rose from 70% to 87.5% compared with the original R1 release.

The model runs on a single GPU. Its 16-bit weights take roughly 16GB, and a 40-80GB card (such as an NVIDIA A100 or H100) leaves ample room for long reasoning contexts. That makes it accessible to individual researchers, small teams, and organizations without massive compute infrastructure. It supports the same advanced features as the full R1-0528 model, including system prompts, JSON output, and function calling, capabilities that make it practical for production applications requiring structured reasoning and tool integration.
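Long reasoning traces cost memory beyond the weights, because attention keeps a key-value (KV) cache that grows with every token. This Python sketch estimates that cache. It assumes a Qwen3-8B-style attention configuration (36 layers, 8 key-value heads, head dimension 128) and a 16-bit cache. These are assumptions to check against the model’s `config.json` before relying on the numbers.

```python
def kv_cache_gb(num_layers: int, num_kv_heads: int, head_dim: int,
                seq_len: int, bytes_per_value: int = 2, batch: int = 1) -> float:
    """KV-cache size in GB (10**9 bytes) for standard (grouped-query) attention.

    Each layer stores one key and one value vector per KV head per token, hence the 2.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch / 1e9


# Assumed Qwen3-8B-style config; verify against the actual model config.
weights_gb = 8e9 * 2 / 1e9  # ~16 GB of 16-bit weights
for tokens in (8_192, 32_768, 131_072):
    cache = kv_cache_gb(36, 8, 128, tokens)
    print(f"{tokens:>7} tokens: weights {weights_gb:.0f} GB + KV cache {cache:.1f} GB")
```

Under these assumptions, 16-bit weights plus a full 131,072-token cache come to roughly 35GB. That is why a 40-80GB card comfortably handles long reasoning traces, while shorter contexts fit on smaller GPUs.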

Key Specs

Strengths

Weaknesses

DeepSeek’s V3 series (including the V3.1-Terminus release) draws on the reasoning work behind the R1 models and is designed for agentic AI workloads. It uses a Mixture-of-Experts transformer with ~671B total parameters and ~37B active parameters per token.

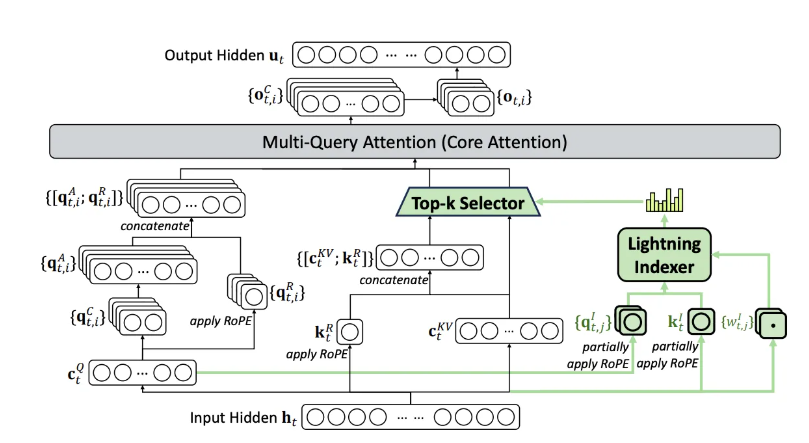

DeepSeek-V3.2 introduces DeepSeek Sparse Attention (DSA) for long-context scaling. It replaces full attention with an indexer-selector mechanism, reducing the quadratic cost of attention while maintaining accuracy close to dense attention.

As shown in the figure below, the attention layer combines Multi-Query Attention, a Lightning Indexer, and a Top-K Selector. The indexer identifies relevant tokens, and attention is computed only over the selected subset, with RoPE applied for positional encoding.
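The indexer-then-select idea can be sketched for a single query in NumPy. This is a simplified illustration, not DeepSeek’s implementation. The indexer here is a single dot product over small assumed projections (`index_q`, `index_k`), and the sketch omits MQA, RoPE, causal masking, and batching.

```python
import numpy as np


def topk_sparse_attention(q, k, v, index_q, index_k, top_k):
    """Single-query sketch: cheap indexer, top-k selection, then attention on the subset.

    q: (d,), k/v: (n, d) full attention inputs.
    index_q: (d_idx,), index_k: (n, d_idx) small projections used only for scoring.
    """
    # 1. Lightweight indexer: score every past token in a small dimension.
    index_scores = index_k @ index_q  # (n,)
    # 2. Top-k selector: keep only the highest-scoring tokens.
    top_k = min(top_k, len(index_scores))
    selected = np.argpartition(index_scores, -top_k)[-top_k:]
    # 3. Scaled dot-product attention restricted to the selected tokens.
    logits = k[selected] @ q / np.sqrt(q.shape[-1])  # (top_k,)
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()
    return weights @ v[selected], selected


rng = np.random.default_rng(0)
n, d, d_idx = 1024, 64, 16
q, k, v = rng.normal(size=d), rng.normal(size=(n, d)), rng.normal(size=(n, d))
index_q, index_k = rng.normal(size=d_idx), rng.normal(size=(n, d_idx))
out, selected = topk_sparse_attention(q, k, v, index_q, index_k, top_k=64)
print(out.shape, len(selected))  # (64,) 64
```

The expensive attention step now touches `top_k` tokens instead of all `n`. The indexer still scans every token, but in a much smaller dimension, which is where the savings come from. Setting `top_k = n` recovers ordinary dense attention.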

The model is trained with large-scale reinforcement learning on tasks such as math, coding, logic, and tool use. These skills are integrated into a shared model using Group Relative Policy Optimization.
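The core idea of Group Relative Policy Optimization is to sample several responses per prompt and score each one against its own group, instead of training a separate value model. Here is a minimal sketch of that advantage computation, assuming simple 0/1 correctness rewards. The full GRPO objective also includes a clipped policy ratio and a KL penalty, which are not shown.

```python
import numpy as np


def group_relative_advantages(rewards, eps: float = 1e-8):
    """GRPO-style advantages: normalize each response's reward within its group.

    rewards: rewards for G responses sampled from the same prompt. Responses above
    the group mean get positive advantage and those below get negative advantage,
    so no learned value (critic) network is needed.
    """
    r = np.asarray(rewards, dtype=float)
    return (r - r.mean()) / (r.std() + eps)


# Four sampled answers to one math prompt; reward 1 if the final answer is correct.
adv = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(adv.round(3))  # approximately [1, -1, -1, 1]
```

If every sample in a group gets the same reward, all advantages are zero, so that prompt contributes no learning signal on that step.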

Fig: Attention architecture of DeepSeek-V3.2

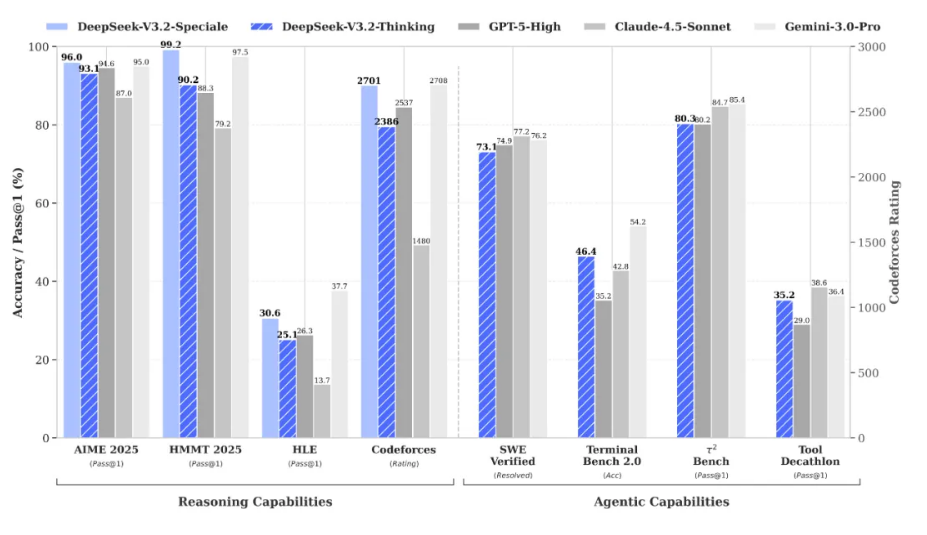

DeepSeek reports that V3.2 achieves reasoning performance comparable to leading proprietary models on public benchmarks. The V3.2-Speciale variant is further optimized for deep multi-step reasoning.

DeepSeek-V3.2 is MIT-licensed, available via production APIs, and outperforms V3.1 on mixed reasoning and agent tasks.

Key specs

Strengths

Weaknesses

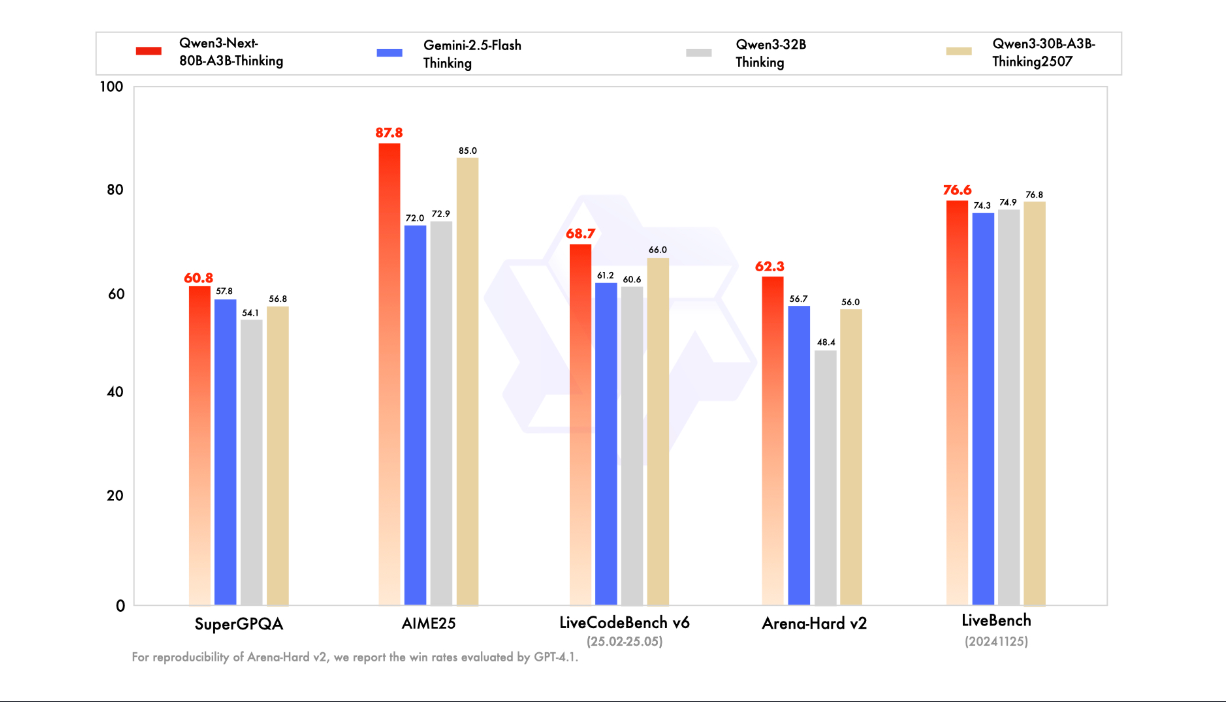

Qwen3-Next is Alibaba’s next-generation open model series emphasizing both scale and efficiency. The 80B-A3B-Thinking variant is specifically designed for complex reasoning: it combines hybrid attention (linear Gated DeltaNet layers interleaved with gated full-attention layers) with a high-sparsity MoE. Its specs are striking: 80B total parameters, but only ~3B active (512 experts, with 10 routed per token). This yields very fast inference. Qwen3-Next also uses multi-token prediction (MTP), which improves pretraining and speeds up inference.
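The speed advantage at long context comes from the linear-attention layers, which carry a fixed-size state instead of a growing KV cache. This NumPy sketch shows only a plain causal linear-attention recurrence with the common elu(x)+1 feature map. Qwen3-Next’s actual layers are Gated DeltaNet, which adds gating and a delta-rule state update not shown here.

```python
import numpy as np


def phi(x):
    """Positive feature map elu(x) + 1, a common choice in linear-attention papers."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def linear_attention_recurrent(q, k, v):
    """Causal linear attention computed token by token with a fixed-size state.

    The state S (d_k x d_v) and normalizer z (d_k,) do not grow with sequence
    length, unlike a softmax attention KV cache.
    """
    n, d_k = k.shape
    S = np.zeros((d_k, v.shape[1]))
    z = np.zeros(d_k)
    out = np.empty_like(v)
    for t in range(n):
        fk = phi(k[t])
        S += np.outer(fk, v[t])  # accumulate key-value outer products
        z += fk                  # accumulate keys for normalization
        fq = phi(q[t])
        out[t] = fq @ S / (fq @ z)
    return out


rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(128, 16)) for _ in range(3))
print(linear_attention_recurrent(q, k, v).shape)  # (128, 16)
```

Each new token costs the same amount of work regardless of how many came before, whereas softmax attention must revisit every cached key. Interleaving a few full-attention layers, as the hybrid layout does, restores precise token-to-token lookups.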

Benchmarks show Qwen3-Next-80B performing excellently on multi-hop tasks. The model card highlights that it outperforms the earlier Qwen3-30B and Qwen3-32B thinking models, and even beats the proprietary Gemini-2.5-Flash on several benchmarks. For example, it scores ~87.8% on AIME25 (math), well ahead of Gemini-2.5-Flash’s 72.0%, and ~73.9% on HMMT25. It also shows strong performance on MMLU and coding tests.

Key specs: 80B total, 3B active. 48 layers, hybrid layout with 262K native context. Fully Apache-2.0 licensed.

Strengths: Excellent reasoning and coding performance per unit of compute (beats larger models on many tasks); huge context; extremely efficient (over 10× higher throughput for contexts beyond 32K tokens compared with earlier Qwen3 models).

Weaknesses: As an MoE model, it may require specific runtime support; “Thinking” mode adds complexity (it always generates a <think> block and requires specific prompting).

Qwen3-235B-A22B represents Alibaba's most advanced open reasoning model to date. It uses a massive Mixture-of-Experts architecture with 235 billion total parameters but activates only 22 billion per token, balancing capability and efficiency. Unlike Qwen3-Next-80B, it uses standard grouped-query attention rather than hybrid linear attention, relying on its larger scale to handle more complex reasoning chains.

The "A22B" designation refers to its 22B active parameters across a highly sparse expert system. This design allows the model to maintain reasoning quality comparable to much larger dense models while keeping inference costs manageable. Qwen3-235B-A22B supports dual-mode operation: it can run in standard mode for quick responses or switch to "thinking mode" with explicit chain-of-thought reasoning for complex tasks.

Performance-wise, Qwen3-235B-A22B excels across mathematical reasoning, coding, and multi-step logical tasks. On AIME 2025, it achieves approximately 89.2%, outperforming many proprietary models. It scores 76.8% on HMMT25 and maintains strong performance on MMLU-Pro (78.4%) and coding benchmarks like HumanEval (91.5%). The model's long-context capability extends to 262K tokens natively, with optimized handling for extended reasoning chains.

The architecture incorporates multi-token prediction during training, which improves both training efficiency and the model's ability to anticipate reasoning paths. This makes it particularly effective for tasks requiring forward planning, such as complex mathematical proofs or multi-file code refactoring.

Key Specs

Strengths

Weaknesses

MiMo-V2-Flash represents an aggressive push toward ultra-efficient reasoning through a 309 billion parameter Mixture-of-Experts architecture that activates only 15 billion parameters per token. This roughly 20:1 total-to-active ratio is high for a production reasoning model (although several models in this list, such as Kimi K2 Thinking and Qwen3-Next, are sparser still). It enables inference speeds of approximately 150 tokens per second while maintaining competitive performance on mathematical and coding benchmarks.

The model uses a sparse gating mechanism that dynamically routes tokens to specialized expert networks. This architecture allows MiMo-V2-Flash to achieve remarkable cost efficiency, operating at just 2.5% of Claude's inference cost while delivering comparable performance on specific reasoning tasks. The model was trained with a focus on mathematical reasoning, coding, and structured problem-solving.
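Top-k routing, the mechanism that lets a model activate only a fraction of its parameters per token, can be sketched in a few lines of NumPy. This is a generic softmax top-k router with toy experts. The expert count, `top_k=2`, and the tanh experts are assumptions for illustration, not MiMo-V2-Flash’s actual configuration. Real routers also add load-balancing terms and batched dispatch.

```python
import numpy as np


def moe_forward(x, gate_w, experts, top_k=2):
    """Route one token through the top_k experts chosen by a softmax gate.

    x: (d,) token hidden state; gate_w: (d, num_experts) router weights;
    experts: list of callables mapping (d,) -> (d,).
    Only top_k experts run, so compute scales with active, not total, parameters.
    """
    logits = x @ gate_w                    # one router score per expert
    chosen = np.argsort(logits)[-top_k:]   # indices of the top_k experts
    weights = np.exp(logits[chosen] - logits[chosen].max())
    weights /= weights.sum()               # renormalize over the chosen experts only
    y = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return y, chosen


def make_expert(W):
    """A toy expert: one linear layer followed by tanh."""
    return lambda h: np.tanh(h @ W)


rng = np.random.default_rng(0)
d, num_experts = 32, 16
experts = [make_expert(rng.normal(size=(d, d)) / np.sqrt(d)) for _ in range(num_experts)]
gate_w = rng.normal(size=(d, num_experts))
x = rng.normal(size=d)
y, chosen = moe_forward(x, gate_w, experts, top_k=2)
print(y.shape, sorted(chosen.tolist()))
```

All 16 experts must still be held in memory, but only 2 of them do any work for this token. This is why MoE models are cheap to run per token yet still large to host.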

MiMo-V2-Flash delivers impressive benchmark results, achieving 94.1% on AIME 2025, placing it among the top performers for mathematical reasoning. In coding tasks, it scores 73.4% on SWE-Bench Verified and demonstrates strong performance on standard programming benchmarks. The model supports a 128K token context window and is released under an open license permitting commercial use.

However, real-world performance reveals some limitations. Community testing indicates that while MiMo-V2-Flash excels on mathematical and coding benchmarks, it can struggle with instruction following and general-purpose tasks outside its core training distribution. The model performs best when tasks closely match mathematical competitions or coding challenges but shows inconsistent quality on open-ended reasoning tasks.

Key Specs

Strengths

Weaknesses

Mistral AI's Ministral 14B Reasoning represents a breakthrough in compact reasoning models. With only 14 billion parameters, it achieves reasoning performance that rivals models 5-10× its size, making it the most efficient model in this top-10 list. Ministral 14B is part of the broader Mistral 3 family and inherits architectural innovations from Mistral Large 3 while optimizing for deployment in resource-constrained environments.

The model employs a dense transformer architecture with specialized reasoning training. Unlike larger MoE models, Ministral achieves its efficiency through careful dataset curation and reinforcement learning focused specifically on mathematical and logical reasoning tasks. This targeted approach allows it to punch well above its weight class on reasoning benchmarks.

Remarkably, Ministral 14B achieves approximately 85% accuracy on AIME 2025, a leading result for any model under 30B parameters and competitive with models several times larger. It also scores 68.2% on GPQA Diamond and 82.7% on MATH-500, demonstrating broad reasoning capability across different problem types. On coding benchmarks, it achieves 78.5% on HumanEval, making it suitable for AI-assisted development workflows.

The model's small size enables deployment scenarios impossible for larger models. It can run on a single GPU, such as a 24GB RTX 4090 or a 48GB RTX A6000. Its 14B weights need about 28GB at 16-bit precision, so the 24GB card requires 8-bit or lower quantization. It can even run on high-end laptops with quantization. Inference speeds reach 40-60 tokens per second on consumer hardware, making it practical for real-time interactive applications. This accessibility opens reasoning-first AI to a much broader range of developers and use cases.

Key Specs

Strengths

Weaknesses

| Model | Architecture | Params (Total / Active) | Context Length | License | Notable Strengths |
|---|---|---|---|---|---|
| GPT-OSS-120B | Sparse / MoE-style | ~117B / ~5.1B | ~128K | Apache-2.0 | Efficient GPT-level reasoning; single-GPU feasibility; agent-friendly |
| GLM-4.7 (Zhipu AI) | MoE Transformer | ~355B / ~32B | ~200K input / 128K output | MIT | Strong open coding + math reasoning; built-in tool & agent APIs |
| Kimi K2 Thinking (Moonshot AI) | MoE (≈384 experts) | ~1T / ~32B | 256K (up to 1M via YaRN) | Modified MIT | Exceptional deep reasoning and long-horizon tool use; INT4 efficiency |
| MiniMax-M2.1 | MoE (agent-optimized) | ~230B / ~10B | Long (not publicly specified) | MIT | Engineered for agentic workflows; strong long-horizon reasoning |
| DeepSeek-R1 (distilled) | Dense Transformer (distilled) | 8B / 8B | 128K | MIT | Matches a 235B model on certain reasoning tasks; runs on a single GPU; distilled from R1-0528 (87.5% AIME 2025 for the full model) |
| DeepSeek-V3.2 | MoE + Sparse Attention | ~671B / ~37B | Up to ~1M (sparse) | MIT | State-of-the-art open agentic reasoning; long-context efficiency |
| Qwen3-Next-80B-Thinking | Hybrid MoE + hybrid attention | 80B / ~3B | ~262K native | Apache-2.0 | Extremely compute-efficient reasoning; strong math & coding |
| Qwen3-235B-A22B | Hybrid MoE + dual-mode | ~235B / ~22B | ~262K native | Apache-2.0 | Exceptional math reasoning (89.2% AIME); dual-mode flexibility |
| Ministral 14B Reasoning | Dense Transformer | ~14B / ~14B | 128K | Apache-2.0 | Best-in-class efficiency; 85% AIME at 14B; runs on consumer GPUs |
| MiMo-V2-Flash | Ultra-sparse MoE | ~309B / ~15B | 128K | MIT | Ultra-efficient (2.5% Claude cost); 150 tokens/s; 94.1% AIME 2025 |
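Since most of these models are MoE designs, one quick way to read the table is the share of weights each one activates per token. This short Python sketch computes that ratio from the table’s own total/active counts, treating “~1T” as 1,000B. The dense models are omitted because their ratio is 100% by definition.

```python
# (total, active) parameter counts in billions, taken from the comparison table.
MODELS = {
    "GPT-OSS-120B": (117, 5.1),
    "GLM-4.7": (355, 32),
    "Kimi K2 Thinking": (1000, 32),
    "MiniMax-M2.1": (230, 10),
    "DeepSeek-V3.2": (671, 37),
    "Qwen3-Next-80B-Thinking": (80, 3),
    "Qwen3-235B-A22B": (235, 22),
    "MiMo-V2-Flash": (309, 15),
}


def active_fraction(total: float, active: float) -> float:
    """Share of weights used per token; per-token compute roughly tracks this, memory does not."""
    return active / total


for name, (total, active) in sorted(MODELS.items(), key=lambda kv: active_fraction(*kv[1])):
    print(f"{name:<26} {active_fraction(total, active):6.1%} active per token")
```

The ordering puts Kimi K2 Thinking and Qwen3-Next at the sparse end and GLM-4.7 and Qwen3-235B-A22B at the dense end. Hosting memory, by contrast, still scales with total parameters, because every expert has to be resident.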

Open-source reasoning models have advanced quickly, but running them efficiently remains a real challenge. Agentic and reasoning workloads are fundamentally token-intensive. They involve long contexts, multi-step planning, repeated tool calls, and iterative execution. As a result, they burn through tokens rapidly and become expensive and slow when run on standard inference setups.

The Clarifai Reasoning Engine is built specifically to address this problem. It is optimized for agentic and reasoning workloads, using optimized kernels and adaptive techniques that improve throughput and latency over time without compromising accuracy. Combined with Compute Orchestration, Clarifai dynamically manages how these workloads run across GPUs, enabling high throughput, low latency, and predictable costs even as reasoning depth increases.

These optimizations are reflected in real benchmarks. In evaluations published by Artificial Analysis on GPT-OSS-120B, Clarifai achieved industry-leading results, exceeding 500 tokens per second with a time to first token of around 0.3 seconds. The results highlight how execution and orchestration choices directly impact the viability of large reasoning models in production.
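To see why throughput and time to first token (TTFT) both matter for reasoning workloads, consider a simple latency model: TTFT plus output length divided by decoding speed. This sketch plugs in the reported GPT-OSS-120B figures. The output lengths are assumed illustrative values, and real latency also depends on prompt length, batching, and network overhead.

```python
def response_time_s(output_tokens: int, ttft_s: float, tokens_per_s: float) -> float:
    """End-to-end latency estimate: time to first token plus steady-state decoding.

    Assumes constant decoding throughput and ignores network overhead.
    """
    return ttft_s + output_tokens / tokens_per_s


# Reported figures: ~0.3 s TTFT and ~500 tokens/s. The token counts are illustrative
# sizes for a single reasoning answer and a longer multi-step agent run.
for tokens in (2_000, 20_000):
    print(f"{tokens:>6} tokens -> ~{response_time_s(tokens, 0.3, 500):.1f} s")
```

For long reasoning traces, decoding speed dominates. Halving throughput to 250 tokens/s would stretch the 20,000-token run from about 40 seconds to about 80.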

In parallel, the platform continues to add and update support for top open-source reasoning models in the community. You can try these models directly in the Playground or access them through the API and integrate them into your own applications. The same infrastructure also supports deploying custom or self-hosted models, making it easy to evaluate, compare, and run reasoning workloads under consistent conditions.

As reasoning models continue to evolve in 2026, the ability to run them efficiently and affordably will be the real differentiator.

© 2026 Clarifai, Inc.