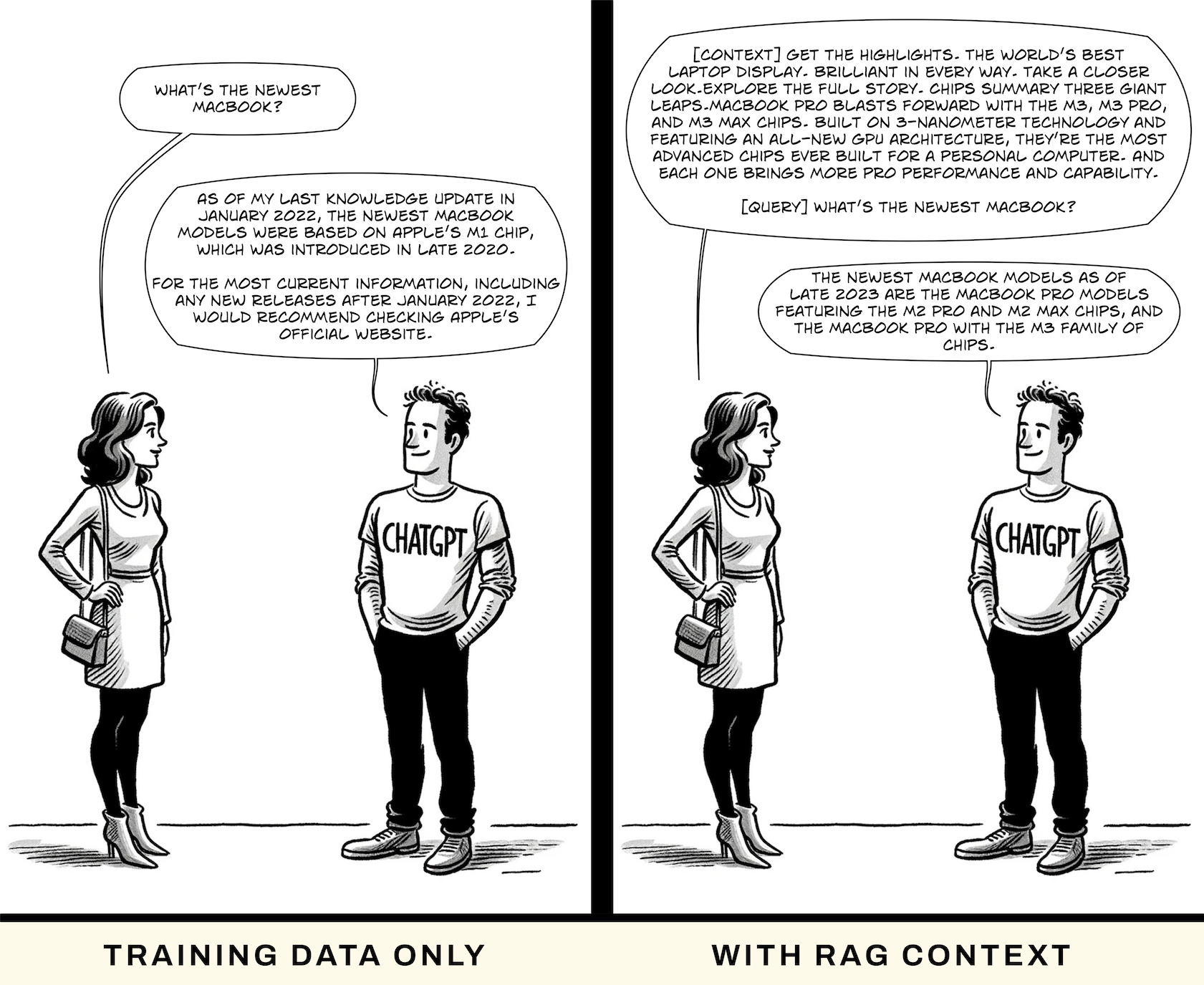

Generative AI continues to astound users with fluent language and creative outputs, yet large language models (LLMs) still make mistakes. Traditional models are trained on data up to a cutoff date and can hallucinate facts when they don’t know the answer. Retrieval‑augmented generation (RAG) was proposed to solve this problem by grounding a model’s answers in external knowledge sources. In a RAG pipeline, a user’s query triggers a search over a knowledge base, relevant documents are retrieved and combined with the user’s prompt, and the LLM generates an answer that cites its sources. This simple idea makes answers more accurate, builds user trust and is cheaper to implement than retraining a model.

Recent surveys show RAG is no longer experimental. Menlo Ventures’ 2024 report found that 51% of enterprise AI systems use RAG, up from 31% in 2023. Only 9% of production models are fine-tuned, and agentic architectures (systems that coordinate multiple agents or tools) already account for 12% of implementations. A separate survey of 300 enterprises by K2view found that 86% of organizations augment their LLMs with frameworks like RAG and only 14% rely on generic models. These numbers indicate that retrieval‑augmented generation has become the dominant design pattern for enterprise AI.

Quick Summary: What is retrieval‑augmented generation (RAG), how does it work, and why is it important for generative AI?

RAG augments language models with retrieval systems to fetch relevant external information, reducing hallucinations and keeping answers current.

LLMs trained on massive text corpora can generate fluent responses, but they often exhibit hallucinations—fabricated statements not grounded in source material—and rely on training data that quickly becomes stale. WEKA explains that before RAG, models depended on static knowledge embedded at training time, making them prone to errors on real‑time or specialized queries. Because LLMs like GPT‑4 have knowledge cut‑offs, they struggle to answer questions about recent events or domain‑specific facts. RAG addresses these issues by allowing models to search external sources and incorporate the retrieved evidence into their responses.

Market research underscores the growing importance of RAG. Menlo Ventures’ 2024 report noted that 51 % of generative AI teams use RAG, up from 31 % the prior year; only 9 % rely on fine‑tuning and 12 % on agentic architectures. A survey by K2view found that 86 % of organisations augment LLMs with RAG frameworks, while only 14 % use plain LLMs. Adoption varies by sector: Health & Pharma (75 %), Financial Services (61 %), Retail & Telco (57 %) and Travel & Hospitality (29 %). These statistics signal that RAG is becoming the default strategy for enterprise AI.

Quick Summary: Why isn’t a large language model alone enough? What problems does RAG solve?

LLMs hallucinate and quickly become outdated. RAG solves these issues by retrieving relevant documents at inference time. Adoption studies show over half of generative AI teams already use RAG, and 86 % of organisations augment LLMs with RAG.

This article focuses on what's known as "naive RAG", the foundational approach to integrating LLMs with knowledge bases. We'll discuss more advanced techniques at the end of this article, but RAG systems at every level of complexity share several key components that work together:

Orchestration Layer: This layer manages the overall workflow of the RAG system. It receives user input along with any associated metadata (like conversation history), interacts with various components, and orchestrates the flow of information between them. These layers typically include tools like LangChain, Semantic Kernel, and custom native code (often in Python) to integrate different parts of the system.

Retrieval Tools: These are a set of utilities that provide relevant context for responding to user prompts. They play an important role in grounding the LLM's responses in accurate and current information. They can include knowledge bases for static information and API-based retrieval systems for dynamic data sources.

LLM: The LLM is at the heart of the RAG system, responsible for generating responses to user prompts. There are many varieties of LLM, including models hosted by third parties like OpenAI, Anthropic, or Google, as well as models running internally on an organization's infrastructure. The specific model used can vary based on the application's needs.

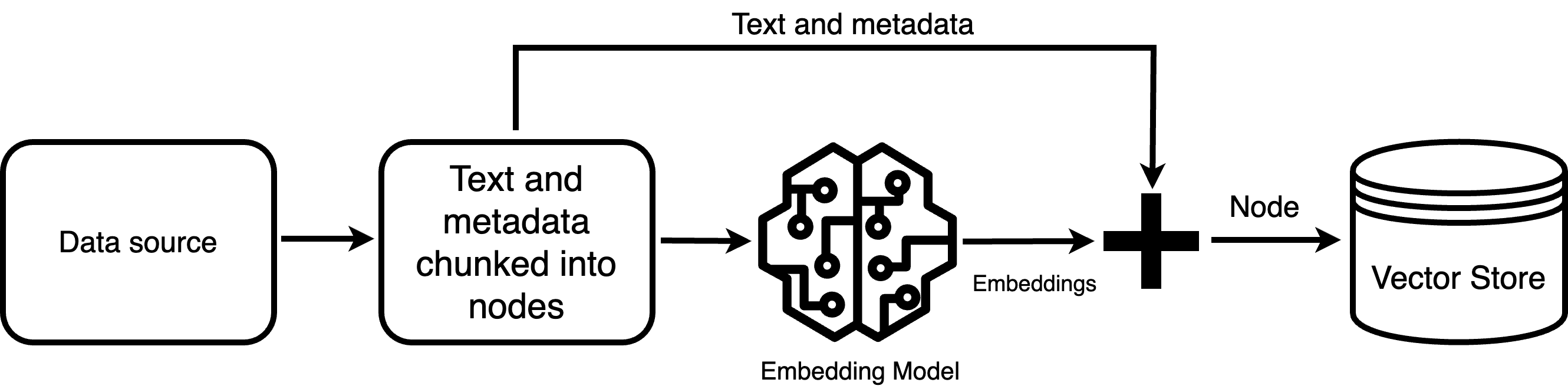

Knowledge Base Retrieval: Involves querying a vector store, a type of database optimized for textual similarity searches. This requires an Extract, Transform, Load (ETL) pipeline to prepare the data for the vector store. The steps taken include aggregating source documents, cleaning the content, loading it into memory, splitting the content into manageable chunks, creating embeddings (numerical representations of text), and storing these embeddings in the vector store.

API-based Retrieval: For data sources that allow programmatic access (like customer records or internal systems), API-based retrieval is used to fetch contextually relevant data in real-time.

Prompting with RAG: Involves creating prompt templates with placeholders for user requests, system instructions, historical context, and retrieved context. The orchestration layer fills these placeholders with relevant data before passing the prompt to the LLM for response generation. Steps taken can include tasks like cleaning the prompt of any sensitive information and ensuring the prompt stays within the LLM's token limits.
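To make the prompting step concrete, here is a minimal sketch of a template with placeholders, a redaction pass, and a token budget. The template wording, the `redact` and `build_prompt` helpers, and the word-count token estimate are all illustrative assumptions; a production system would use the target model's tokenizer and a proper PII scrubber.

```python
import re
from string import Template

# Hypothetical template; the placeholder names are illustrative only.
PROMPT_TEMPLATE = Template(
    "System: $system\n\n"
    "Conversation so far:\n$history\n\n"
    "Retrieved context:\n$context\n\n"
    "User request: $question\n"
)

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text):
    """Mask e-mail addresses as one simple example of removing sensitive data."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def approx_tokens(text):
    """Crude estimate: whitespace-separated words stand in for tokens."""
    return len(text.split())


def build_prompt(system, history, chunks, question, max_tokens=200):
    """Fill the template, adding ranked chunks until the token budget is reached."""
    kept = []
    for chunk in chunks:
        candidate = PROMPT_TEMPLATE.substitute(
            system=system, history=history,
            context="\n---\n".join(kept + [chunk]), question=question)
        if approx_tokens(candidate) > max_tokens:
            break  # the next chunk would exceed the budget
        kept.append(chunk)
    return PROMPT_TEMPLATE.substitute(
        system=system, history=history,
        context="\n---\n".join(kept) or "(none)", question=question)
```

Because chunks are added in ranked order, the lowest-ranked context is what gets dropped when the budget is tight.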

The challenge with RAG is finding the correct information to provide along with the prompt!

Before querying is possible, raw data must be prepared:

Different chunking strategies exist. Standard approaches divide text into fixed token windows, but advanced techniques like sentence‑window retrieval and auto‑merging retrieval improve context handling. Sentence‑window retrieval embeds each sentence with sliding windows to give the retriever multiple candidate contexts. Auto‑merging retrieval merges adjacent chunks into parent nodes and uses a hierarchical index to retrieve the parent when its children are retrieved.
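As a rough illustration of sentence‑window retrieval, the sketch below stores each sentence as the text to embed and keeps its neighbouring sentences alongside it as the context to hand to the LLM. The function names, the regex-based sentence splitter, and the `window` parameter are assumptions made for this example, not the API of any particular library.

```python
import re


def split_sentences(text):
    """Naive splitter on ., ! or ? followed by whitespace; real pipelines use a sentence tokenizer."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def sentence_windows(text, window=1):
    """Return one node per sentence, with `window` neighbours on each side kept as context."""
    sentences = split_sentences(text)
    nodes = []
    for i, sentence in enumerate(sentences):
        lo = max(0, i - window)
        hi = min(len(sentences), i + window + 1)
        nodes.append({
            "embed_text": sentence,                # what gets embedded and matched
            "window": " ".join(sentences[lo:hi]),  # what gets passed to the LLM
        })
    return nodes
```

Matching on the single sentence keeps the embedding focused, while returning the window restores the surrounding context at generation time.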

Quick Summary: What happens during the indexing phase of a RAG pipeline?

The indexing phase ingests and cleans data, splits it into chunks, encodes each chunk into vectors and stores them in a vector database. Advanced chunking methods like sentence‑window retrieval and auto‑merging retrieval preserve context.
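The indexing steps can be sketched end to end with the standard library alone. Everything here is a stand-in: `embed` is a hashed bag-of-words vector rather than a real embedding model, a plain Python list plays the role of the vector database, and words approximate tokens. The function names and default sizes are assumptions for illustration.

```python
import hashlib
import math
import re

DIM = 64  # toy dimensionality; real embedding models use far more


def clean(text):
    """Strip HTML-like tags and collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def chunk(text, size=40, overlap=10):
    """Fixed-size word windows with overlap (assumes overlap < size)."""
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text):
    """Stand-in embedding: hashed bag of words, L2-normalised. Not a real model."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(documents):
    """Clean, chunk and embed each document; the returned list acts as the vector store."""
    index = []
    for doc_id, raw in documents.items():
        for n, piece in enumerate(chunk(clean(raw))):
            index.append({"doc_id": doc_id, "chunk_id": n,
                          "text": piece, "vector": embed(piece)})
    return index
```

Keeping `doc_id` and `chunk_id` on every record is what later lets answers cite their sources.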

When a user asks a question, the system proceeds through these steps:

[Figure: RAG query flow diagram (rag-query-drawio)]

Quick Summary: What happens when a user submits a query to a RAG system?

During querying, the input is encoded into a vector, relevant chunks are retrieved and ranked, and the generator fuses the context with the query to produce an answer.
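A minimal sketch of the query path follows: encode the query, score every chunk by cosine similarity, keep the top results, and pass the fused prompt to a generator. The term-count `embed` function is a stand-in for a real embedding model, and `generate` is a hypothetical callable representing whatever LLM client you use.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in encoder: sparse term-count vector. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Rank chunks by similarity to the query and return the top k with a non-zero score."""
    q = embed(query)
    scored = sorted(((cosine(q, embed(c)), c) for c in chunks), reverse=True)
    return [c for score, c in scored[:k] if score > 0]


def answer(query, chunks, generate):
    """Fuse retrieved context with the query and hand the prompt to the LLM callable."""
    context = "\n".join(retrieve(query, chunks))
    prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context."
    return generate(prompt)
```

In practice the chunk vectors are precomputed at indexing time and searched with an approximate nearest-neighbour index rather than re-embedded on every query as done here.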

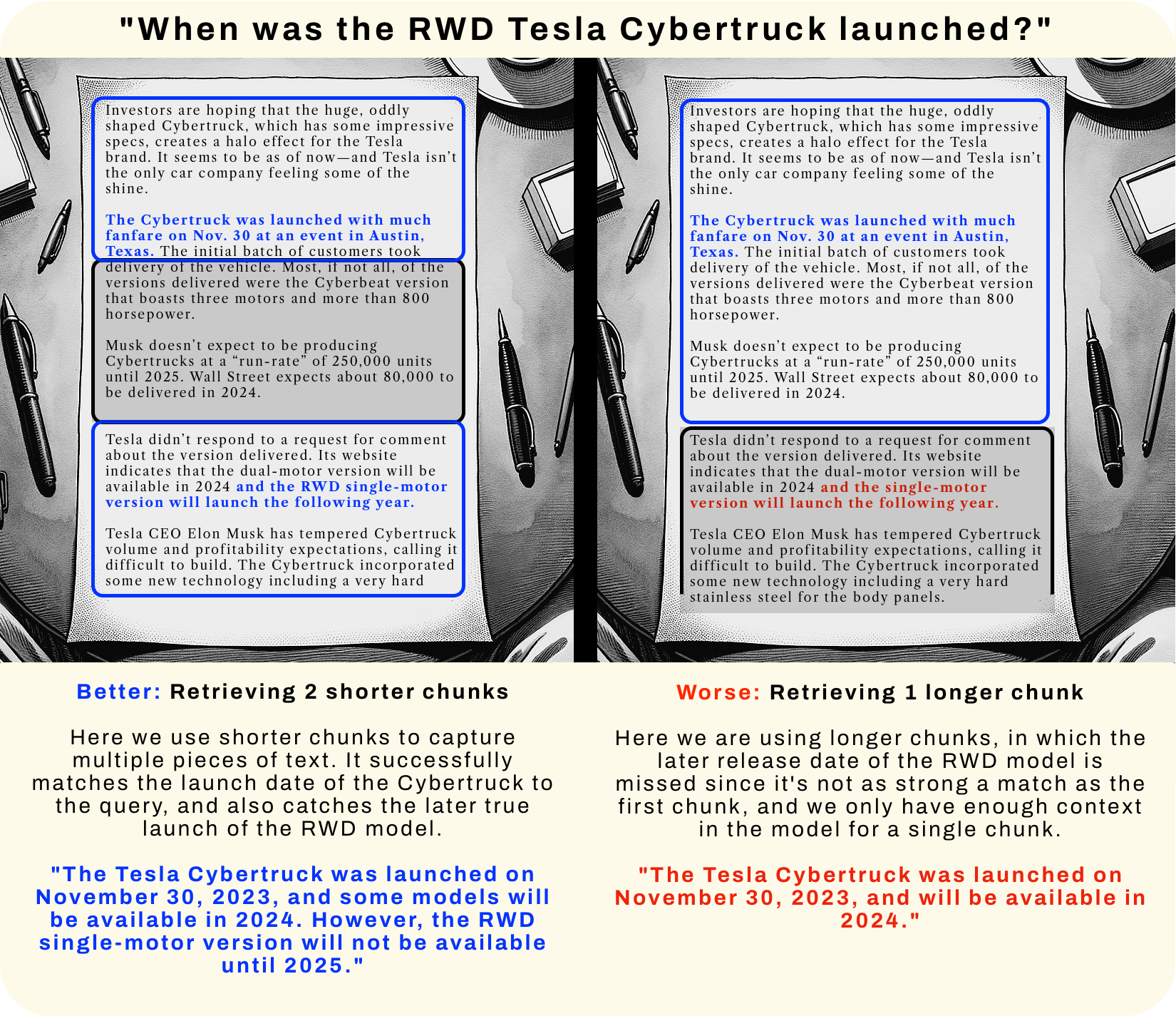

Chunking determines how much context the model receives. The Pixion blog notes that small chunks reduce the risk of introducing irrelevant information but may lack sufficient context; large chunks provide more context but can include unrelated details. To decide, consider the nature of your data and the typical query length.

| Chunk type | Pros | Cons |
| --- | --- | --- |
| Small chunks (sentences or <250 tokens) | Reduce noise; better control over context boundaries; good for short Q&A | May miss important context; require more retrieval calls |
| Large chunks (paragraphs or >500 tokens) | Provide richer context; fewer retrieval calls | Risk pulling irrelevant information; higher memory usage |
| Sentence‑window retrieval | Embeds each sentence with sliding windows to capture context; reduces false negatives | Higher indexing cost; may produce redundant embeddings |
| Auto‑merging retrieval | Merges adjacent chunks into hierarchical parent nodes; improves context retrieval | Complexity in index maintenance; requires hierarchical index |

Exhibit A: Shorter chunks are better… sometimes.


In the image above, on the left, the statement that the RWD will be released in 2025 sits a paragraph away from the text that matches the query. Retrieving two shorter chunks works better here because together they capture all the information. On the right, the retriever returns only a single chunk, so it has no room for the additional information, and the model is given incorrect information.

However, this isn’t always the case; sometimes longer chunks work better when text that holds the true answer to the question doesn’t strongly match the query. Here’s an example where longer chunks succeed:

Exhibit B: Longer chunks are better… sometimes.


Quick Summary: How should I split my documents for RAG? What are the pros and cons of short and long chunks?

Small chunks reduce noise but may lack context; large chunks provide context but can introduce irrelevant information. Advanced techniques like sentence‑window retrieval and auto‑merging retrieval balance these trade‑offs.
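The auto‑merging idea can be sketched as a two-level hierarchy: adjacent leaf chunks share a parent, and when enough of a parent's children are retrieved, the parent's full text is returned instead. The grouping by fixed `group_size` and the `threshold` ratio are assumptions chosen for illustration; the source does not specify how the merge decision is made.

```python
from collections import defaultdict


def build_hierarchy(leaves, group_size=3):
    """Assign each run of `group_size` adjacent leaf chunks to one parent node."""
    parent_of = {i: i // group_size for i in range(len(leaves))}
    parents = defaultdict(list)
    for leaf_id, parent_id in parent_of.items():
        parents[parent_id].append(leaf_id)
    return parent_of, dict(parents)


def auto_merge(retrieved_leaf_ids, leaves, parent_of, parents, threshold=0.5):
    """Swap retrieved leaves for their parent's full text when enough siblings were hit."""
    hits = defaultdict(list)
    for leaf_id in retrieved_leaf_ids:
        hits[parent_of[leaf_id]].append(leaf_id)
    results = []
    for parent_id, leaf_ids in hits.items():
        children = parents[parent_id]
        if len(leaf_ids) / len(children) > threshold:
            # Enough children matched: return the merged parent text.
            results.append(" ".join(leaves[c] for c in children))
        else:
            # Too few matches: keep the individual leaves.
            results.extend(leaves[i] for i in leaf_ids)
    return results
```

For example, with six leaves in groups of three, retrieving leaves 0, 1 and 4 returns the whole first parent (two of three children matched) plus leaf 4 on its own.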

Improving the performance of a RAG system involves several strategies that focus on optimizing different components of the architecture:

Enhance Data Quality (Garbage in, Garbage out): Ensure the quality of the context provided to the LLM is high. Clean up your source data and ensure your data pipeline maintains adequate content, such as capturing relevant information and removing unnecessary markup. Carefully curate the data used for retrieval to ensure it's relevant, accurate, and comprehensive.

Tune Your Chunking Strategy: As we saw earlier, chunking really matters! Experiment with different text chunk sizes to maintain adequate context. The way you split your content can significantly affect the performance of your RAG system. Analyze how different splitting methods impact the context's usefulness and the LLM's ability to generate relevant responses.

Optimize System Prompts: Fine-tune the prompts used for the LLM to ensure they guide the model effectively in utilizing the provided context. Use feedback from the LLM's responses to iteratively improve the prompt design.

Filter Vector Store Results: Implement filters to refine the results returned from the vector store, ensuring that they are closely aligned with the query's intent. Use metadata effectively to filter and prioritize the most relevant content.

Experiment with Different Embedding Models: Try different embedding models to see which provides the most accurate representation of your data. Consider fine-tuning your own embedding models to better capture domain-specific terminology and nuances.

Monitor and Manage Computational Resources: Be aware of the computational demands of your RAG setup, especially in terms of latency and processing power. Look for ways to streamline the retrieval and processing steps to reduce latency and resource consumption.

Iterative Development and Testing: Regularly test the system with real-world queries and use the outcomes to refine the system. Incorporate feedback from end-users to understand performance in practical scenarios.

Regular Updates and Maintenance: Regularly update the knowledge base to keep the information current and relevant. Adjust and retrain models as necessary to adapt to new data and changing user requirements.
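As one illustration of the result-filtering strategy above, the sketch below post-filters retrieved hits by similarity score and metadata. The record layout (`text`, `score`, `metadata`) and the `filter_results` helper are assumptions for this example; many vector stores can apply equivalent filters inside the query itself, which is usually preferable.

```python
def filter_results(results, min_score=0.0, **required):
    """Keep hits that clear min_score and match every metadata key=value given."""
    kept = [
        r for r in results
        if r["score"] >= min_score
        and all(r["metadata"].get(key) == value for key, value in required.items())
    ]
    # Highest-scoring hits first, so the prompt builder sees the best context first.
    return sorted(kept, key=lambda r: r["score"], reverse=True)


# Hypothetical hits as they might come back from a vector store.
results = [
    {"text": "2023 pricing", "score": 0.91, "metadata": {"dept": "sales", "year": 2023}},
    {"text": "2024 pricing", "score": 0.88, "metadata": {"dept": "sales", "year": 2024}},
    {"text": "2024 HR policy", "score": 0.35, "metadata": {"dept": "hr", "year": 2024}},
]
current_sales = filter_results(results, min_score=0.5, dept="sales", year=2024)
```

Here the highest-scoring hit is dropped because it is out of date, which is exactly the case a similarity score alone cannot catch.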

Quick Summary: How do I build an effective RAG pipeline? What should I watch out for?

Optimise RAG by ensuring data quality, choosing appropriate chunk sizes, using metadata filtering and prompt engineering, and continuously monitoring performance. Enterprise surveys indicate data consistency, scalability and real‑time integration are top challenges.
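Continuous monitoring needs a number to watch. The sketch below computes recall@k and mean retrieval latency over a small labelled test set. The `retriever` callable and the `(query, relevant_ids)` test-set format are assumptions for illustration; substitute your own retrieval function and labelled queries.

```python
import time


def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant items that appear in the top-k retrieved list."""
    if not relevant_ids:
        return 0.0
    top_k = set(retrieved_ids[:k])
    return len(top_k & set(relevant_ids)) / len(relevant_ids)


def evaluate(retriever, test_set, k=3):
    """Run each labelled query through `retriever`; report mean recall@k and mean latency."""
    recalls, latencies = [], []
    for query, relevant_ids in test_set:
        start = time.perf_counter()
        retrieved = retriever(query)
        latencies.append(time.perf_counter() - start)
        recalls.append(recall_at_k(retrieved, relevant_ids, k))
    n = len(test_set)
    return {"recall@k": sum(recalls) / n, "mean_latency_s": sum(latencies) / n}
```

Re-running the same test set after each change to chunking, embeddings or filters shows whether the change helped or hurt.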

Quick Summary: What cutting‑edge techniques can make RAG even better?

Advanced RAG techniques include hierarchical indexing, dynamic retrieval, query routing, context enrichment, fusion retrieval and agent‑based workflows. These innovations improve accuracy, scalability, and personalization.
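Fusion retrieval combines the ranked lists produced by different retrievers, for instance a dense vector search and a sparse keyword search. The article does not name a specific fusion method; the sketch below uses reciprocal rank fusion (RRF), one common choice, where each document scores `1 / (k + rank)` in every list it appears in. The document IDs are made up, and `k=60` is a commonly used default rather than a tuned value.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one list using RRF scores."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID so the order is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


dense_hits = ["a", "b", "c"]   # hypothetical vector-search ranking
sparse_hits = ["b", "d", "a"]  # hypothetical keyword-search ranking
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
```

Documents that rank well in both lists rise to the top, and because only ranks are used, the two retrievers' raw scores never need to be on the same scale.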

Adoption of RAG is accelerating as organizations recognize its benefits:

RAG powers numerous use cases:

Quick Summary: How widely is RAG being used, and where is the market headed?

RAG adoption is surging: 51% of generative AI teams use RAG, and enterprises across health, finance and retail are leading the way. Market research forecasts rapid growth (49 % CAGR). Applications include medical diagnosis (20% accuracy improvement), code generation, sales automation, financial planning and customer support.

| Pitfall | Mitigation | Source |
| --- | --- | --- |
| Poor data quality | Clean and normalize data; include metadata; deduplicate documents. | K2view |
| Overly small or large chunks | Experiment with chunk sizes; adopt advanced techniques like sentence‑window and auto‑merging retrieval. | Pixion |
| Inadequate retrieval algorithms | Use hybrid dense/sparse retrieval; apply reranking and filtering based on metadata. | WEKA |
| Poor prompt design | Craft prompts that instruct the model to cite sources, summarize retrieved content and maintain tone. | Upcoretech |
| Lack of monitoring and iteration | Track accuracy, recall and latency; iterate on embeddings, prompts and data. | K2view |
| Ignoring privacy & compliance | Implement access controls and anonymisation; consider ABAC (attribute‑based access control) as described by Salesforce. | Salesforce |

Quick Summary: What common mistakes should I avoid when building RAG systems?

Avoid poor data quality, choose appropriate chunk sizes, employ robust retrieval algorithms and prompt design, monitor performance and uphold data security.

RAG is a highly effective method for enhancing LLMs due to its ability to integrate real-time, external information, addressing the inherent limitations of static training datasets. This integration ensures that the responses generated are both current and relevant, a significant advancement over traditional LLMs. RAG also mitigates the issue of hallucinations, where LLMs generate plausible but incorrect information, by supplementing their knowledge base with accurate, external data. The accuracy and relevance of responses are significantly enhanced, especially for queries that demand up-to-date knowledge or domain-specific expertise.

Furthermore, RAG is customizable and scalable, making it adaptable to a wide range of applications. It offers a more resource-efficient approach than continuously retraining models, as it dynamically retrieves information as needed. This efficiency, combined with the system's ability to continuously incorporate new information sources, ensures ongoing relevance and effectiveness. For end-users, this translates to a more informative and satisfying interaction experience, as they receive responses that are not only relevant but also reflect the latest information. RAG's ability to dynamically enrich LLMs with updated and precise information makes it a robust and forward-looking approach in the field of artificial intelligence and natural language processing.

If you're looking to deploy your own custom models, open-source, or third-party models, Clarifai’s Compute Orchestration lets you deploy AI workloads on your own dedicated cloud compute, within your own VPCs, on-premise, hybrid, or edge environments from a unified control plane. It offers flexibility without vendor lock-in. Check out Compute Orchestration.
