This blog post focuses on new features and improvements. For a comprehensive list including bug fixes, please see the release notes.

API

Added flexible API key selection

- For third-party wrapped models, like those provided by OpenAI, Anthropic, Cohere, and others, you can now choose to use your own API keys from those providers instead of the default Clarifai keys. This flexibility allows you to integrate your preferred services and APIs into your workflow, enhancing the versatility of our platform. You can learn how to add them here.

Training Time Estimator

Introduced a Training Time Estimator for both the API and the Portal

- This feature provides users with approximate training time estimates before initiating the training process. The estimate is displayed above the "train" button, rounded down to the nearest hour with 15-minute increments.

- It offers users transparency in expected training costs. We currently charge $4 per hour.
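
As a rough illustration of how an estimate translates into cost, here is a minimal sketch. It uses the $4 per hour rate quoted above and assumes the estimate is rounded down to 15-minute increments. The function name and the exact rounding rule are assumptions for illustration, not the platform's implementation.

```python
import math

HOURLY_RATE_USD = 4.0    # rate quoted in this post
INCREMENT_MINUTES = 15   # assumed rounding granularity


def estimate_training_cost(estimated_minutes: float) -> tuple[float, float]:
    """Return (billable_hours, cost_usd) for a raw estimate in minutes."""
    if estimated_minutes < 0:
        raise ValueError("estimated_minutes must be non-negative")
    # Round down to a whole number of 15-minute increments (assumption).
    increments = math.floor(estimated_minutes / INCREMENT_MINUTES)
    hours = increments * INCREMENT_MINUTES / 60
    return hours, hours * HOURLY_RATE_USD


hours, cost = estimate_training_cost(100)
print(f"{hours} h, ${cost:.2f}")
```

Under these assumptions, a raw estimate of 100 minutes is displayed as 1.5 hours.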

Billing

Expanded access to the deep fine-tune feature

This integration is achieved via the Clarifai Python SDK and it is available here.

- Previously exclusive to professional and enterprise plans, the deep fine-tune feature is now accessible for all pay-as-you-grow plans.

- Additionally, to provide more flexibility, all users on pay-as-you-grow plans now receive a monthly free 1-hour quota for deep fine-tuning.

Added an invoicing table to the billing section of the user’s profile

- This new feature provides you with a comprehensive and organized view of your invoices, allowing you to easily track, manage, and access billing-related information.

New Published Models

Published several new, ground-breaking models

- Wrapped Cohere Embed-v3, a state-of-the-art embedding model that excels in semantic search and retrieval-augmented generation (RAG) systems, offering enhanced content quality assessment and efficiency.

- Wrapped Cohere Embed-Multilingual-v3, a versatile embedding model designed for multilingual applications, offering state-of-the-art performance across various languages.

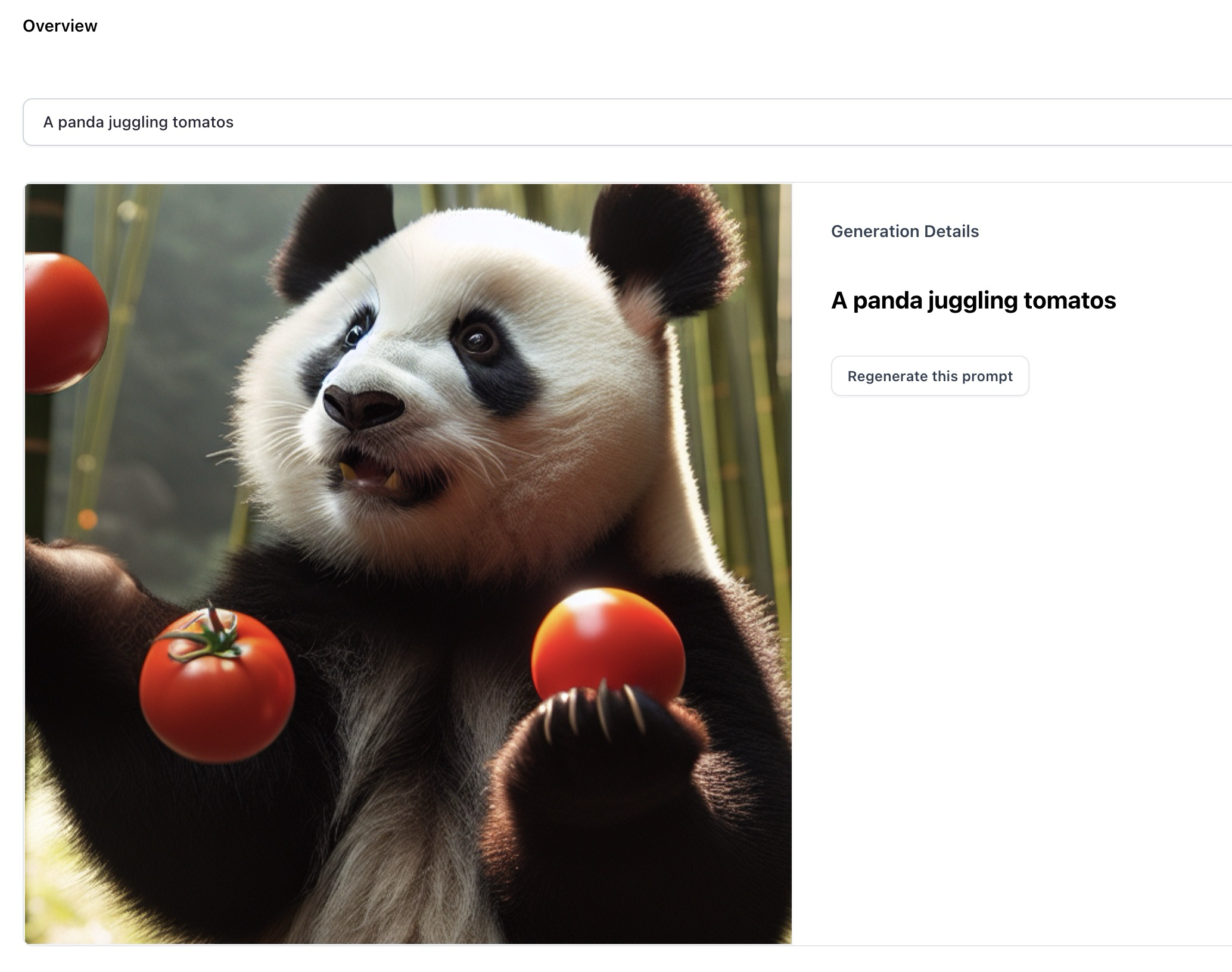

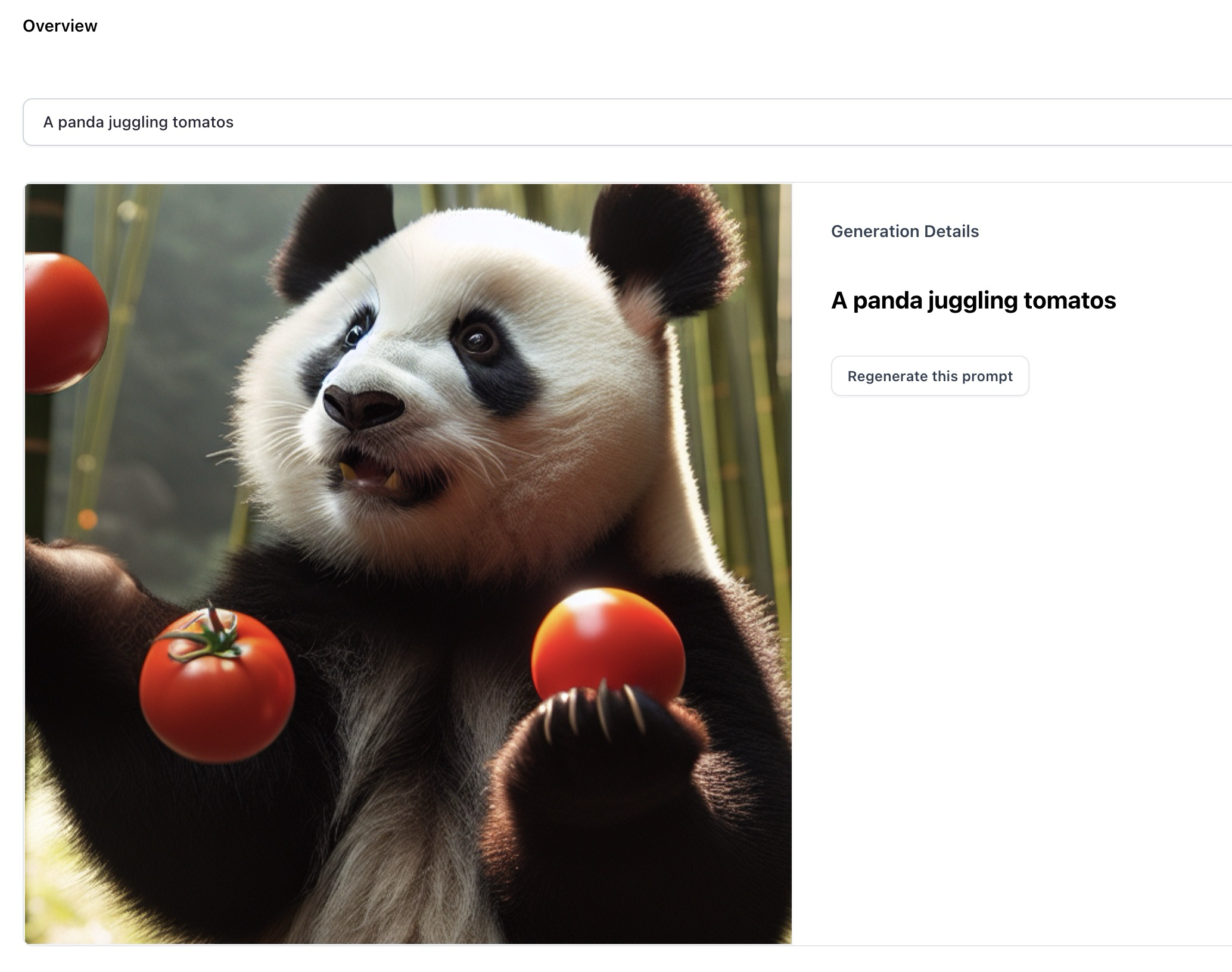

- Wrapped DALL-E 3, a text-to-image generation model that allows you to easily translate ideas into exceptionally accurate images.

- Wrapped OpenAI TTS-1, a versatile text-to-speech solution with six voices, multilingual support, and applications in real-time audio generation across various use cases.

- Wrapped OpenAI TTS-1-HD, which comes with improved audio quality as compared to OpenAI TTS-1.

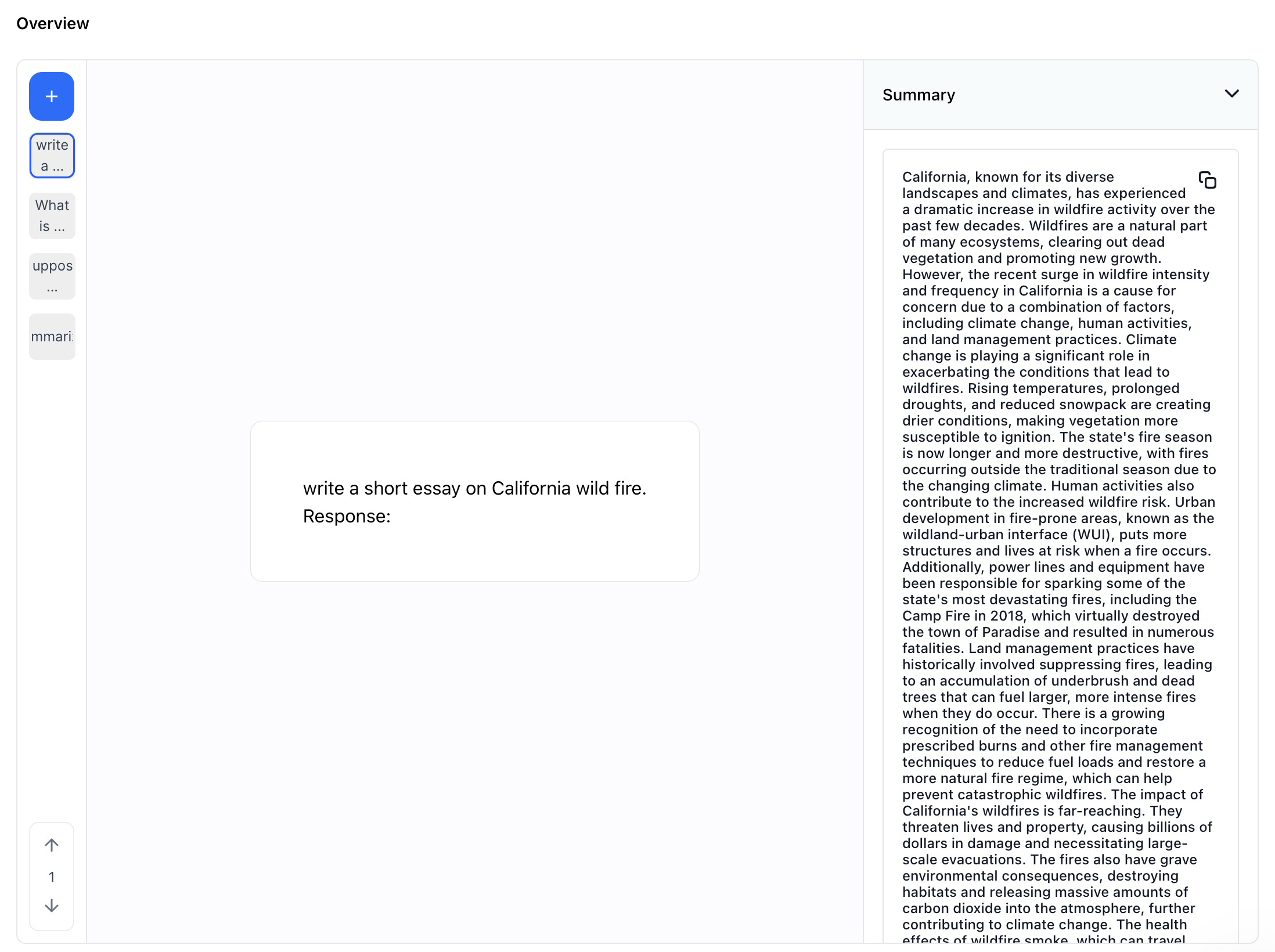

- Wrapped GPT-4 Turbo, an advanced language model that surpasses GPT-4 with a 128K context window, optimized performance, and knowledge of events up to April 2023.

- Wrapped GPT-3_5-turbo, an OpenAI generative language model that provides insightful responses. It’s a new version supporting a default 16K context window with improved instruction-following capabilities.

- Wrapped GPT-4 Vision, which extends GPT-4 beyond text processing so that it can understand and answer questions about images.

- Wrapped Claude 2.1, an advanced language model with a 200K token context window, a 2x decrease in hallucination rates, and improved accuracy.

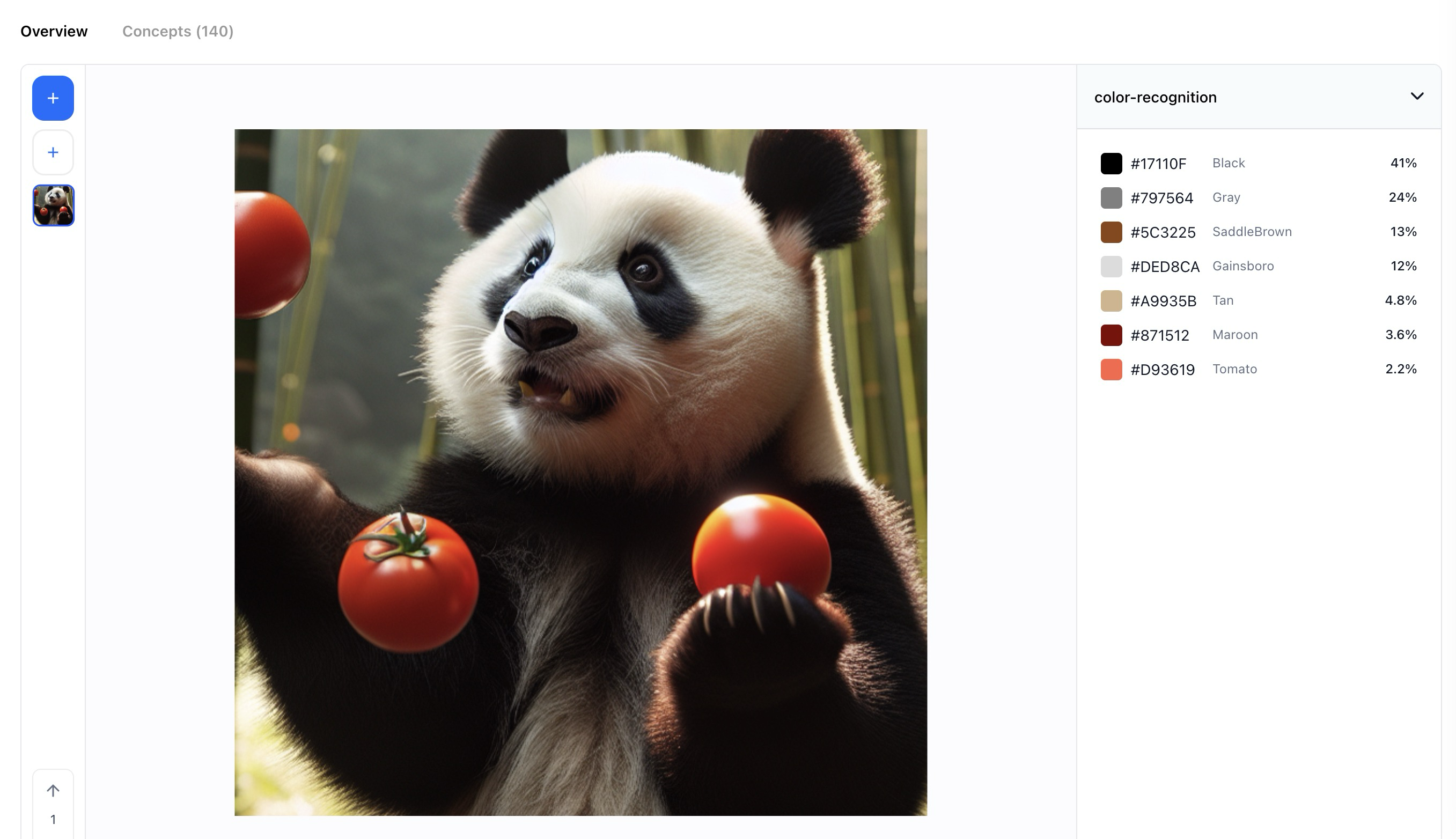

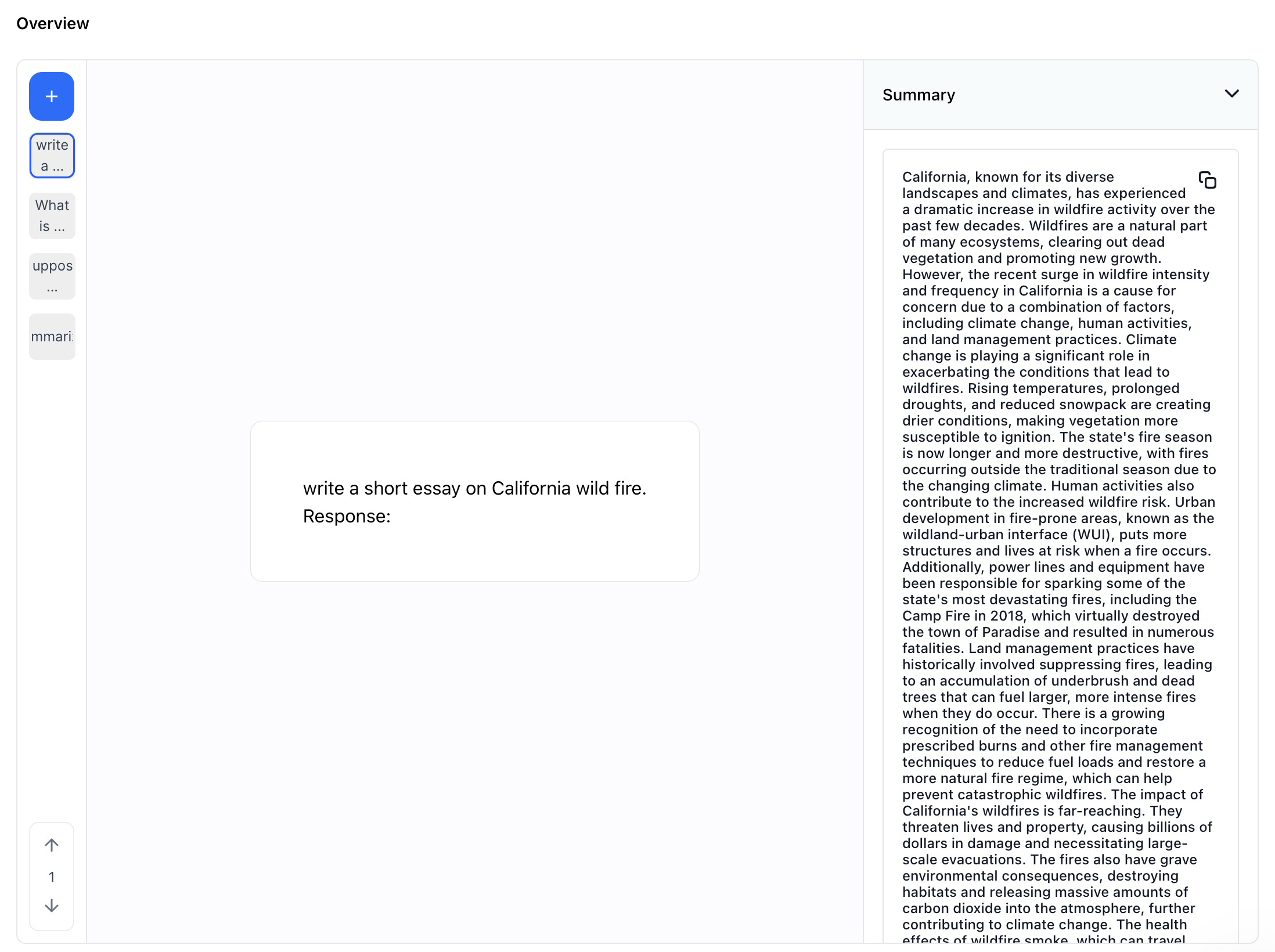

- We enhanced the UI of the color recognition model for superior performance and accuracy.

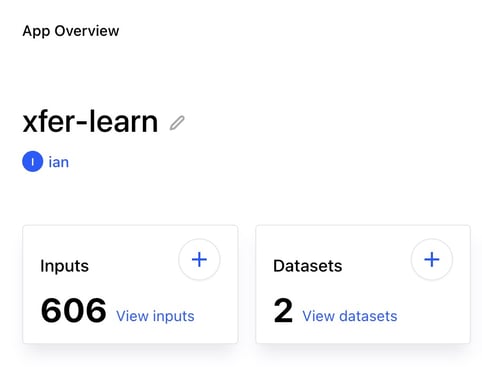

Multimodal-to-Text

Introduced multimodal-to-text model type

- This model type handles both text and image inputs, and generates text outputs. For example, the openai-gpt-4-vision model accepts combined text and image inputs through the API, and image inputs through the UI.
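
To make the idea of a combined input concrete, the sketch below builds a request body in which a single input carries both a text prompt and an image URL. The field names and nesting are assumptions made for illustration, and the helper name is hypothetical. Consult the API documentation for the authoritative request format.

```python
import json


def build_multimodal_input(prompt: str, image_url: str) -> dict:
    """Build a request body with one input holding both text and an image."""
    # Assumed shape: one "inputs" entry whose "data" holds both modalities.
    return {
        "inputs": [
            {"data": {"text": {"raw": prompt}, "image": {"url": image_url}}}
        ]
    }


body = build_multimodal_input(
    "What is in this picture?", "https://example.com/cat.jpg"
)
print(json.dumps(body, indent=2))
```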

Text Generation

[Developer Preview] Added Llama2 and Mistral base models for text generation fine-tuning

- We've renamed the text-to-text model type to "Text Generator" and added Llama2 7/13B and Mistral models with GPTQ-Lora, featuring enhanced support for quantized/mixed-precision training techniques.

Python SDK

Added model training to the Python SDK

- You can now use the SDK to perform model training tasks. Example notebooks for model training and evaluation are available here.

Added CRUD operations for runners

- We’ve added CRUD (Create, Read, Update, Delete) operations for runners. Users can now easily manage runners, including creating, listing, and deleting operations, providing a more comprehensive and streamlined experience within the Python SDK.

Apps

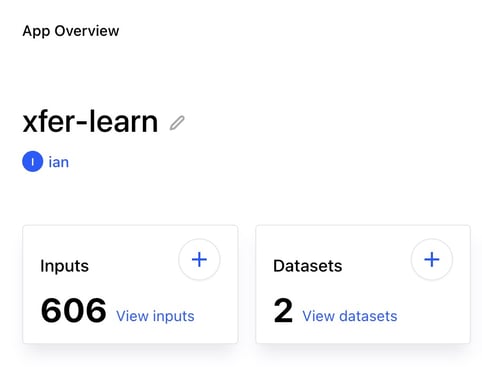

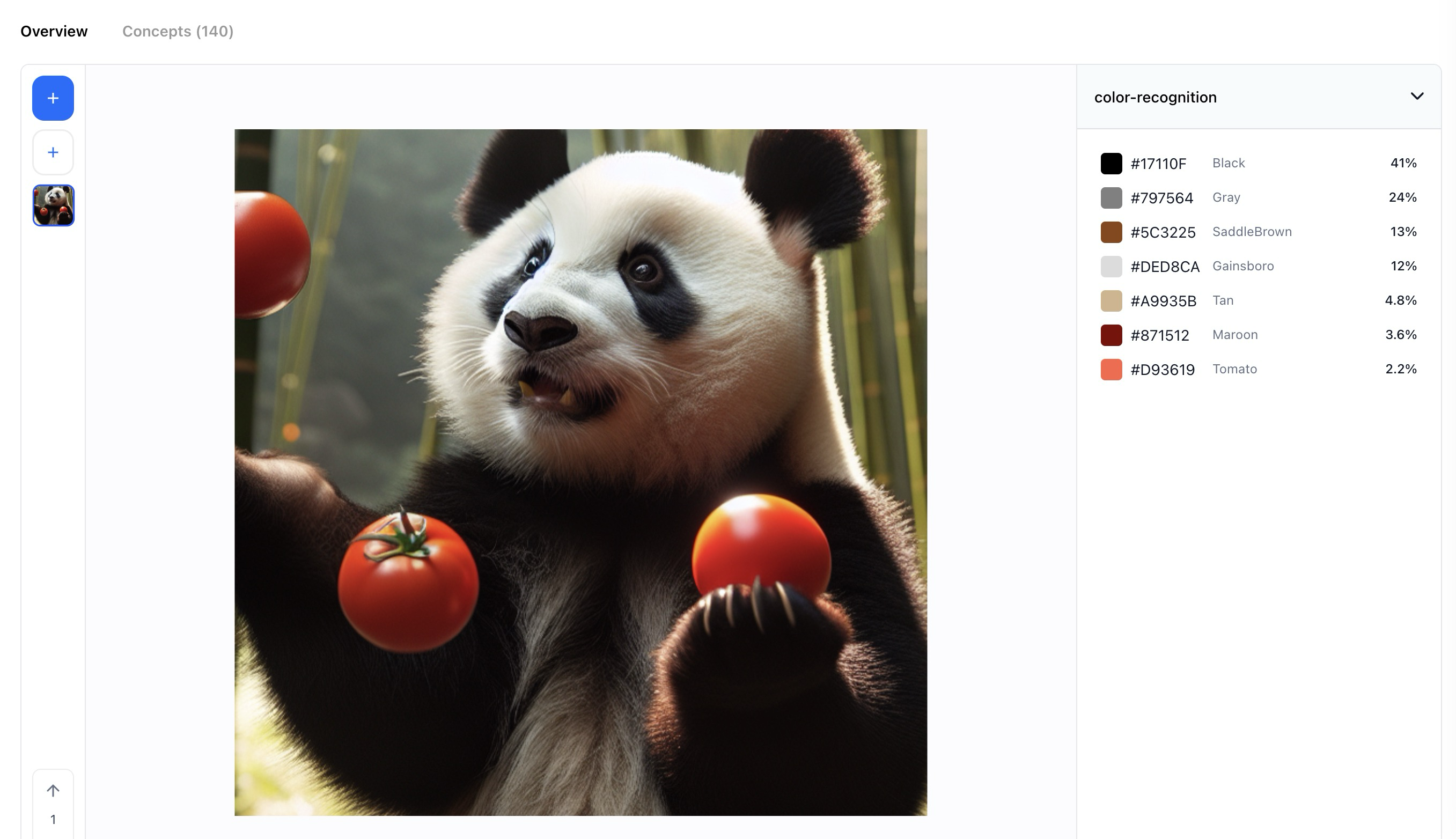

Added a section on the App Overview page that shows the number of inputs

- Similar to other resource counts, we added a count for the number of inputs in your app. Since the number of inputs could be huge, we round the displayed number to the nearest thousand or nearest decimal. You can hover over the count to see a tooltip with the exact number of inputs in your app.
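
One way such a rounded display could work is sketched below. The K/M/B suffixes, the one-decimal rounding, and both function names are assumptions for illustration, since the exact display rules are not spelled out here.

```python
def format_input_count(n: int) -> str:
    """Abbreviate a large count for display, e.g. on an overview card."""
    if n < 0:
        raise ValueError("count must be non-negative")
    for threshold, suffix in ((1_000_000_000, "B"), (1_000_000, "M"), (1_000, "K")):
        if n >= threshold:
            # Keep at most one decimal place; ":g" drops a trailing ".0".
            return f"{round(n / threshold, 1):g}{suffix}"
    return str(n)


def exact_count_tooltip(n: int) -> str:
    """Exact count with thousands separators, as a tooltip might show it."""
    return f"{n:,}"


print(format_input_count(1_300_000), "|", exact_count_tooltip(1_300_000))
```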

Optimized loading time for applications with large inputs

- Previously, applications with an extensive number of inputs, such as 1.3 million images, experienced prolonged loading times. Users can now experience faster and more efficient loading of applications even when dealing with substantial amounts of data.

Improved the functionality of the concept selector

- We’ve enhanced the concept selector so that pasting text replaces spaces with hyphens. We’ve also restricted user inputs to alphabetic characters and allowed manual entry of dashes.

- The changes apply to various locations within an application for consistent and improved behavior.
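
The behavior described above can be sketched as follows. This is a minimal illustration, not the Portal's code. The function names are hypothetical, and collapsing runs of whitespace into one hyphen and limiting input to ASCII letters are assumptions.

```python
import re


def normalize_pasted_concept(text: str) -> str:
    """Normalize pasted text: spaces become hyphens, other characters are dropped."""
    # Collapse each run of whitespace into a single hyphen (assumption).
    hyphenated = re.sub(r"\s+", "-", text.strip())
    # Keep only ASCII letters and hyphens (ASCII-only is an assumption).
    return re.sub(r"[^A-Za-z-]", "", hyphenated)


def is_allowed_keystroke(char: str) -> bool:
    """Accept a single typed character if it is a letter or a dash."""
    return len(char) == 1 and ((char.isascii() and char.isalpha()) or char == "-")


print(normalize_pasted_concept("apple tree"))
```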

Models

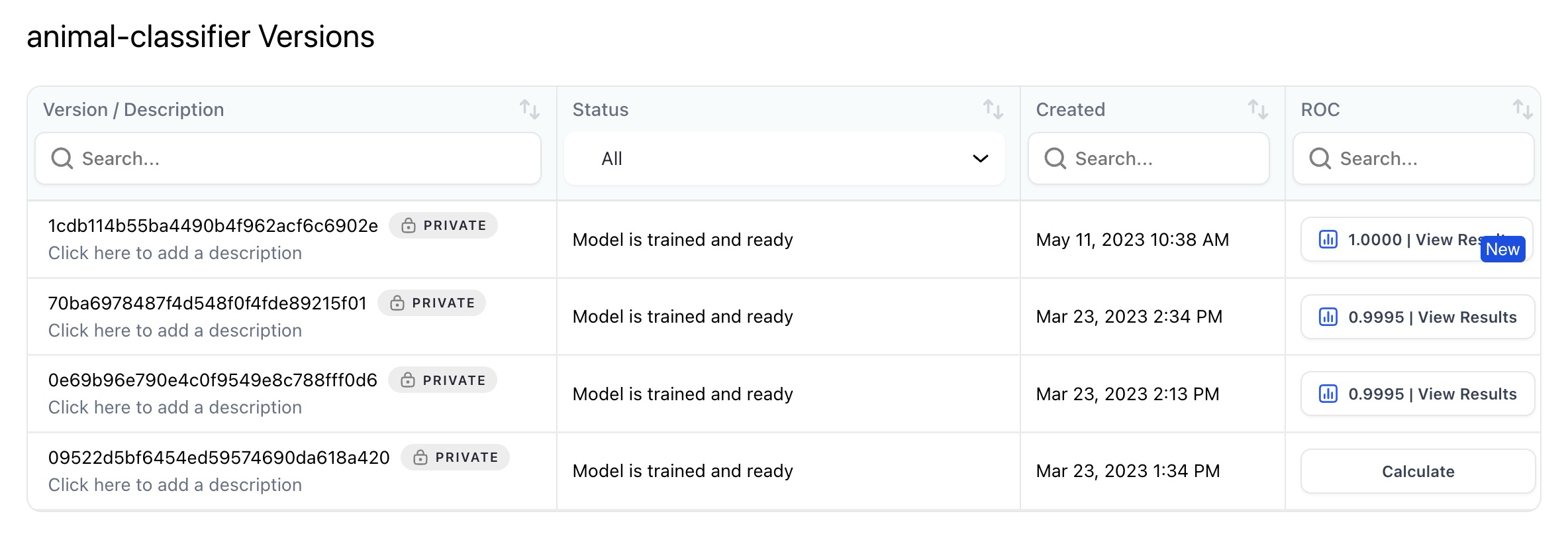

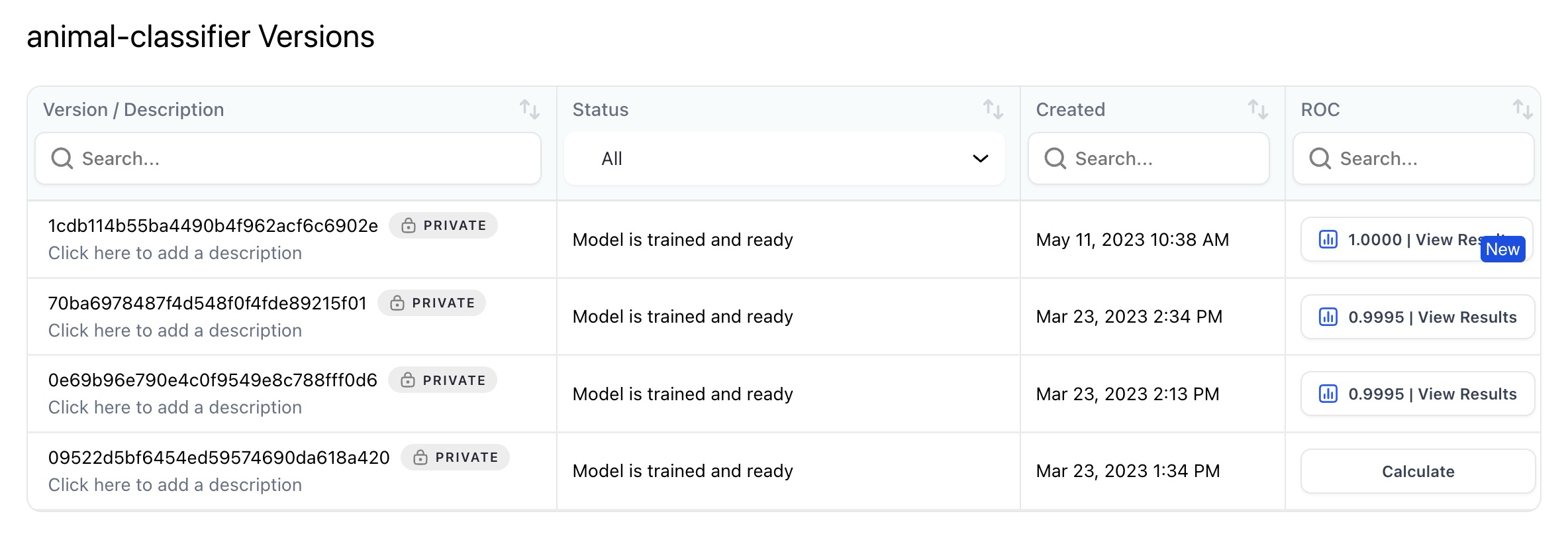

Improved the Model-Viewer's version table

- Cross-app evaluation is now supported in the model version tab to have a more cohesive experience with the leaderboard.

- Users, and collaborators with access permissions, can also select datasets or dataset versions from org apps, ensuring a comprehensive evaluation across various contexts.

- This improvement allows users to view both training and evaluation data across different model versions in a centralized location, enhancing the overall version tracking experience.

Community

Removed pinning of resources

- With the advancement of the starring functionality, pinning is no longer necessary. We removed it.

Added ability to delete a cover image

- You can now remove a cover image from any resource—apps, models, workflows, datasets, and modules.

Input-Manager

Improved bulk labeling notifications in the Input-Manager

- Users now receive a prompt toast message pop-up, confirming the successful labeling of selected inputs. This improvement ensures users receive immediate feedback, providing confidence and transparency in the bulk labeling process.

Enabled deletion of annotations directly from smart search results in the Input-Manager

- After conducting a ranked search (search by image) and switching to Object Mode, the delete icon is now active on individual tiles. Additionally, for users opting for bulk actions with two or more selected tiles, the delete button is now fully functional.

Added a pop-up toast for successful label addition or removal

- Implemented a pop-up toast message to confirm the successful addition or removal of labels when labeling inputs via grid view. The duration of the message has been adjusted for optimal visibility, enhancing user feedback and streamlining the labeling experience.

Allowed users to edit or remove objects directly from smart search results in the user interface (UI)

- Previously, users could only view annotations from a smart object search; editing and removing annotations were disabled. Now, users can both edit and remove annotations directly from smart object search results.

- Users can now have a consistent and informative editing experience, even when ranking is applied during annotation searches.

Improved the stability of search results in the Input-Manager

- Previously, users encountered flaky search results in the Input-Manager, specifically when performing multiple searches and removing search queries. For example, if they searched for terms like #apple and #apple-tree, removed all queries, and then attempted to search for #apple again, it would be missing from the search results.

- Users can now expect stable and accurate search results even after removing search queries.

Organization Settings and Management

[Enterprise] Added a multi-org membership functionality

- Users can now create, join, and engage with multiple organizations. Previously, a user’s membership was limited to only one organization at any given time.

Added Org initials on the icon invites

- An organization’s initials now appear on the icon for inviting new members to join the organization. We replaced the generic blue icon with the respective organization initials for a more personalized representation, just like in the icons for user/org circles.

Labeling Tasks

Added ability to fetch the labeling metrics specifically tied to a designated task on a given dataset

- To access the metrics for a specific task, simply click on the ellipsis icon located at the end of the row corresponding to that task on the Tasks page. Then, select the "View Task metrics" option.

- This functionality gives labeling task managers a convenient way to gauge task progress and evaluate outcomes. It enables efficient monitoring of label view counts, providing valuable insights into the effectiveness and status of labeling tasks within the broader dataset context.

- In the task creation screen, when a user selects Worker Strategy = Partitioned, we now hide the Review Strategy dropdown, set task.review.strategy = CONSENSUS, and set task.review.consensus_strategy_info.approval_threshold = 1.

- Users now have the flexibility to conduct task consensus reviews with an approval threshold set to 1.

- We have optimized the assignment logic for partitioned tasks by ensuring that each input is assigned to only one labeler at a time, enhancing the efficiency and organization of the labeling process.
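
The partitioned setup above can be pictured with the following sketch. The review fields mirror the settings named in this post. The `worker` key, the function names, and the round-robin assignment are assumptions for illustration, because the actual assignment logic is not described here.

```python
from itertools import cycle


def partitioned_task_settings() -> dict:
    """Settings applied when Worker Strategy = Partitioned is selected."""
    return {
        "worker": {"strategy": "PARTITIONED"},  # hypothetical key name
        "review": {
            "strategy": "CONSENSUS",
            "consensus_strategy_info": {"approval_threshold": 1},
        },
    }


def assign_inputs(input_ids: list[str], labelers: list[str]) -> dict[str, str]:
    """Give each input to exactly one labeler (round-robin is an assumption)."""
    if not labelers:
        raise ValueError("at least one labeler is required")
    return dict(zip(input_ids, cycle(labelers)))


print(assign_inputs(["in-1", "in-2", "in-3"], ["alice", "bob"]))
```

Because every input maps to a single labeler, an approval threshold of 1 is enough for consensus review in this arrangement.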

Enhanced submit button functionality for improved user experience

- In labeling mode, processing inputs too quickly could lead to problems, and there could also be issues related to poor network performance. Therefore, we’ve made the following improvements to the "Submit" button:

- Upon clicking the button, it is immediately disabled, accompanied by a visual change in color.

- The button remains disabled while the initial labels are still loading and while the labeled inputs are still being submitted. In the latter case, the button label dynamically changes to “Submitting.”

- The button is re-enabled promptly after the submitted labels have been processed and the page is fully prepared for the user's next action.
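
The button behavior listed above amounts to a small state machine. The sketch below models it in Python purely for illustration. The class, state, and method names are hypothetical and do not reflect the Portal's front-end code.

```python
from enum import Enum


class ButtonState(Enum):
    LOADING = "loading"        # initial labels are still loading
    READY = "ready"            # user may submit
    SUBMITTING = "submitting"  # labeled inputs are being submitted


class SubmitButton:
    def __init__(self) -> None:
        self.state = ButtonState.LOADING

    @property
    def enabled(self) -> bool:
        return self.state is ButtonState.READY

    @property
    def label(self) -> str:
        return "Submitting" if self.state is ButtonState.SUBMITTING else "Submit"

    def labels_loaded(self) -> None:
        if self.state is ButtonState.LOADING:
            self.state = ButtonState.READY

    def click(self) -> bool:
        """Disable the button immediately; ignore clicks while disabled."""
        if not self.enabled:
            return False
        self.state = ButtonState.SUBMITTING
        return True

    def submission_processed(self) -> None:
        if self.state is ButtonState.SUBMITTING:
            self.state = ButtonState.READY


button = SubmitButton()
button.labels_loaded()
button.click()
print(button.enabled, button.label)
```

Ignoring clicks while the button is disabled is what prevents duplicate submissions on a slow network.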

Modules

Introduced automatic retrying on MODEL_DEPLOYING status in LLM modules

- This improvement enhances the reliability of predictions in LLM modules. Now, when a MODEL_DEPLOYING status is received, a retry mechanism is automatically initiated for predictions. This ensures a more robust and consistent user experience by handling deployment status dynamically and optimizing the prediction process in LLM modules.
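
A retry mechanism of this kind might look like the sketch below. The response shape (a dict with a `status` field), the exponential backoff, and the function name are assumptions for illustration. Only the MODEL_DEPLOYING status comes from this post.

```python
import time
from typing import Callable


def predict_with_retry(
    predict: Callable[[], dict],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call predict() again while the model reports MODEL_DEPLOYING."""
    for attempt in range(1, max_attempts + 1):
        response = predict()
        if response.get("status") != "MODEL_DEPLOYING":
            return response
        if attempt < max_attempts:
            # Exponential backoff between attempts (assumed policy).
            sleep(base_delay * 2 ** (attempt - 1))
    raise TimeoutError(f"model still deploying after {max_attempts} attempts")


# Simulated model that finishes deploying on the third call.
responses = iter([
    {"status": "MODEL_DEPLOYING"},
    {"status": "MODEL_DEPLOYING"},
    {"status": "SUCCESS", "text": "hello"},
])
delays: list[float] = []
result = predict_with_retry(lambda: next(responses), base_delay=0.5, sleep=delays.append)
print(result["status"], delays)
```

Passing `sleep` as a parameter keeps the example fast to run and easy to test without real waiting.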

Improved caching in Geoint module using app state hash

- We’ve enhanced the overall caching mechanism for the Geoint module for visual searches.

- We improved the module for a more refined and enhanced user experience.
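
Caching keyed on an app state hash can be sketched as follows. This is a generic illustration under stated assumptions. The class and function names, the use of SHA-256 over canonical JSON, and the shape of the state are all hypothetical, not the module's actual implementation.

```python
import hashlib
import json
from typing import Callable


def app_state_hash(state: dict) -> str:
    """Hash a JSON-serializable app state into a stable cache key."""
    # Sorting keys makes the hash independent of dict insertion order.
    canonical = json.dumps(state, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class SearchCache:
    def __init__(self) -> None:
        self._results: dict[tuple[str, str], list] = {}

    def get_or_compute(self, state: dict, query: str,
                       compute: Callable[[str], list]) -> list:
        """Reuse a cached result unless the app state or the query changed."""
        key = (app_state_hash(state), query)
        if key not in self._results:
            self._results[key] = compute(query)
        return self._results[key]


calls = []
def fake_search(query: str) -> list:
    calls.append(query)
    return [f"hit-for-{query}"]

cache = SearchCache()
cache.get_or_compute({"inputs": 10}, "bridge", fake_search)
cache.get_or_compute({"inputs": 10}, "bridge", fake_search)  # served from cache
cache.get_or_compute({"inputs": 11}, "bridge", fake_search)  # state changed
print(len(calls))
```

Including the state hash in the key means that cached results are not reused once the app's contents change.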