
Author: Ian Kelk, Product Marketing Manager, Clarifai

👉 TLDR: This blog post showcases how to build an engaging and versatile chatbot using the Clarifai API and Streamlit. Links: Here's the app and the code.

This Streamlit app lets you chat with several Large Language Models. It has two main capabilities:

https://llm-text-adventure.streamlit.app

Hello, Streamlit Community! 👋 I'm Ian Kelk, a machine learning enthusiast and Developer Relations Manager at Clarifai. My journey into data science began with a strong fascination with AI and its applications, particularly through the lens of natural language processing.

It can seem intimidating to create an entirely new Streamlit app every time you find a new use case for an LLM. It also requires knowing a decent amount of Python and the Streamlit API. What if, instead, we could create completely different apps just by changing the prompt? That takes almost no programming or expertise, and the results can be surprisingly good. With that in mind, I've built a Streamlit chatbot application of sorts that runs on a hidden starting prompt, and that prompt can radically change its behaviour. It combines the interactivity of Streamlit's features with the intelligence of Clarifai's models.

In this post, you’ll learn how to build an AI-powered chatbot in four steps:

Step 1: Create the environment to work with Streamlit locally

Step 2: Create the secrets file and define the prompt

Step 3: Set up the Streamlit app

Step 4: Deploy the app on Streamlit's cloud

The application integrates the Clarifai API with a Streamlit interface. Clarifai is known for its complete toolkit for building production-scale AI, including models, a vector database, workflows, and UI modules, while Streamlit provides an elegant framework for user interaction. A secrets.toml file securely holds the Clarifai Personal Access Token (PAT) and additional settings, and the application lets users talk to different Large Language Models (LLMs) through a chat interface. The secret sauce, however, is a separate prompts.py file, which changes the application's behaviour purely through the prompt.

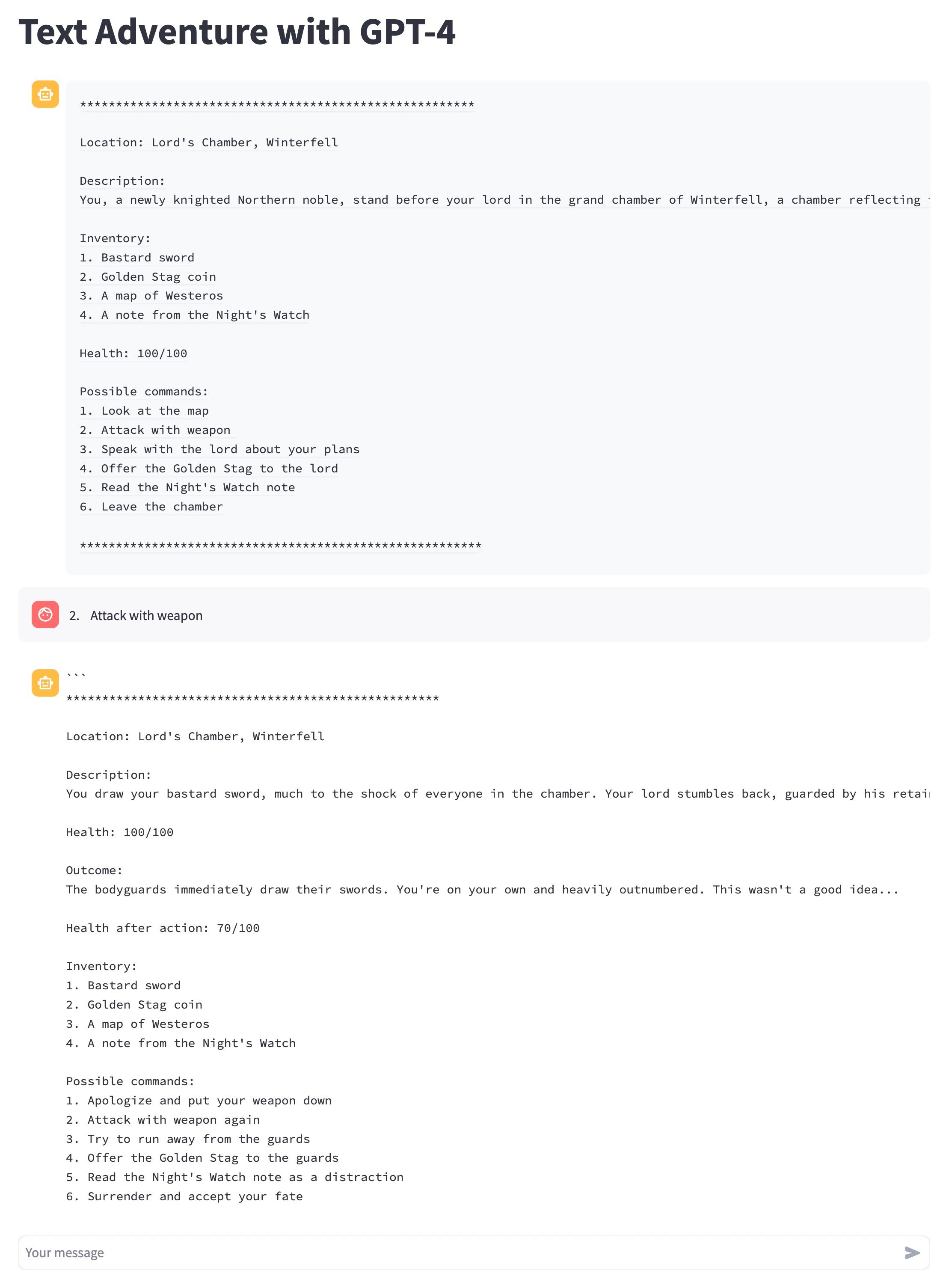

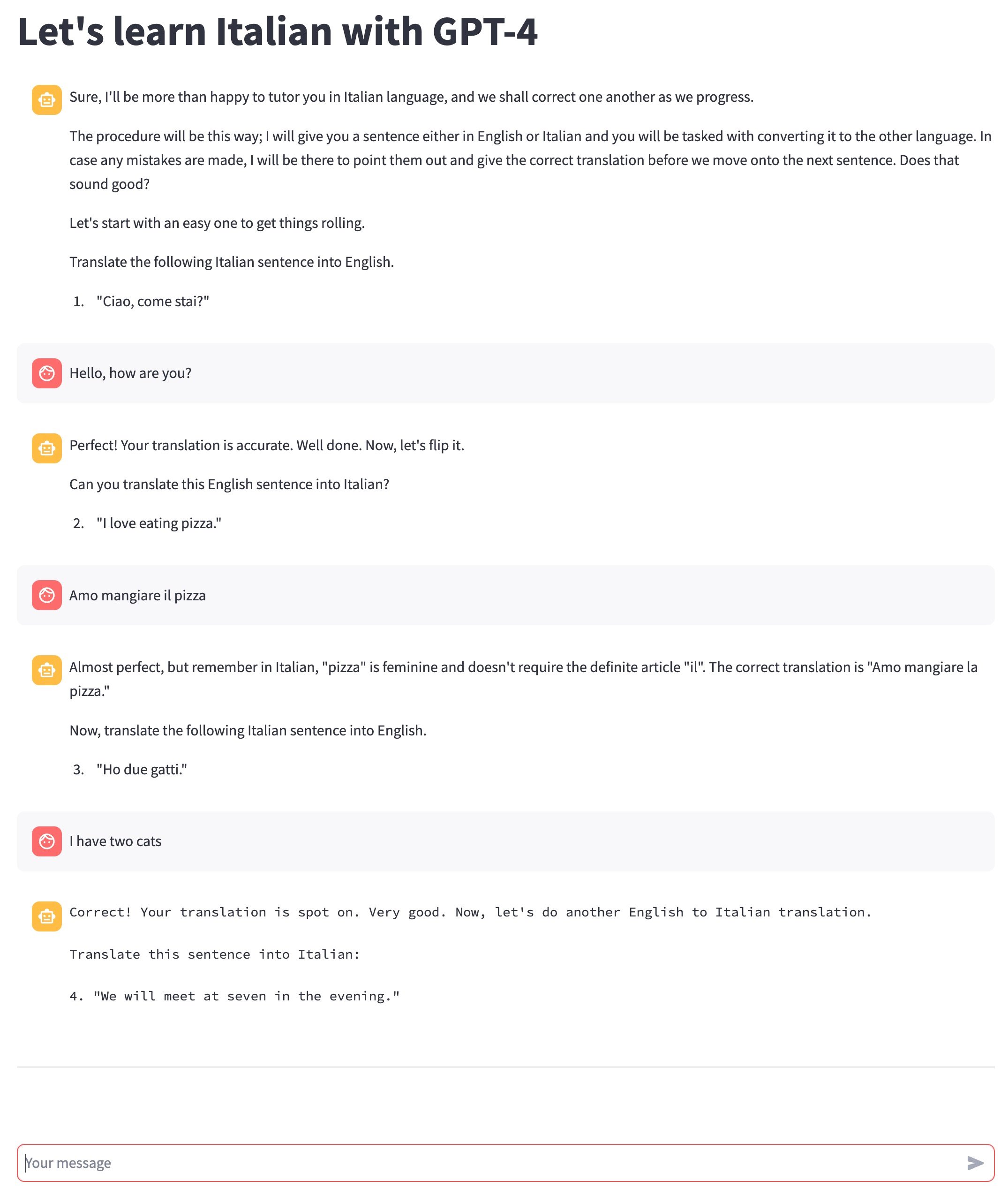

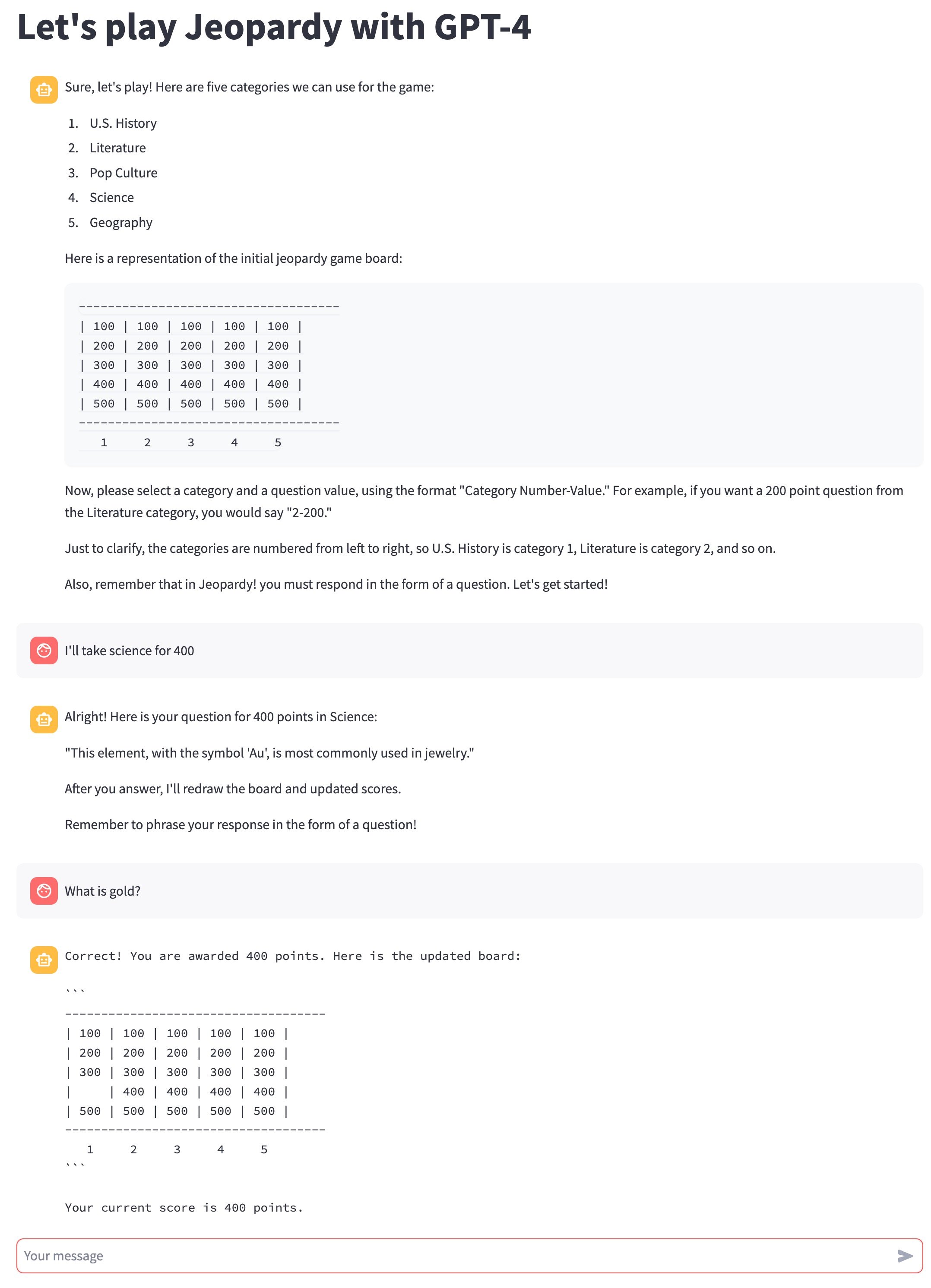

Let's take a look at the app in action:

As with any Python project, it's best to create a virtual environment. Here's how to create a virtual environment named llm-text-adventure on Linux with either conda or venv:

Using conda:

Create the virtual environment:

Note: Here, I'm specifying Python 3.8 as an example. You can replace it with your desired version.

Activate the virtual environment:

Using venv:

First, make sure the venv module is available. It is included with Python 3.3 and newer, so if it's missing, install a Python version that bundles it (on Debian and Ubuntu you may also need the separate python3-venv package).

Create the virtual environment:

Note: You may need to replace python3 with just python or another specific version, depending on your system setup.

Activate the virtual environment:

When the environment is activated, you'll see the environment name (llm-text-adventure) at the beginning of your command prompt.

To deactivate the virtual environment and return to the global Python environment:

That's it! Depending on your project requirements and the tools you're familiar with, you can choose either conda or venv.
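If you'd rather script the venv route from Python itself, the standard library's `venv` module does the same job as `python3 -m venv llm-text-adventure`. This is a small sketch; `create_env` is a hypothetical helper name, not part of the app. Activation still happens in your shell (for example `source llm-text-adventure/bin/activate` on Linux), because a Python process cannot change the environment of the shell that launched it.

```python
import os
import venv
from pathlib import Path


def create_env(env_dir: str = "llm-text-adventure", with_pip: bool = True) -> Path:
    """Create a virtual environment, roughly what `python3 -m venv <env_dir>` does."""
    builder = venv.EnvBuilder(
        symlinks=(os.name != "nt"),  # the venv CLI symlinks the interpreter except on Windows
        with_pip=with_pip,           # bootstrap pip into the new environment via ensurepip
    )
    builder.create(env_dir)  # writes pyvenv.cfg plus bin/ (Linux/macOS) or Scripts/ (Windows)
    return Path(env_dir)
```

Calling `create_env()` from your project folder creates `./llm-text-adventure`; passing `with_pip=False` skips the pip bootstrap, which is faster but leaves you without `pip` inside the environment.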

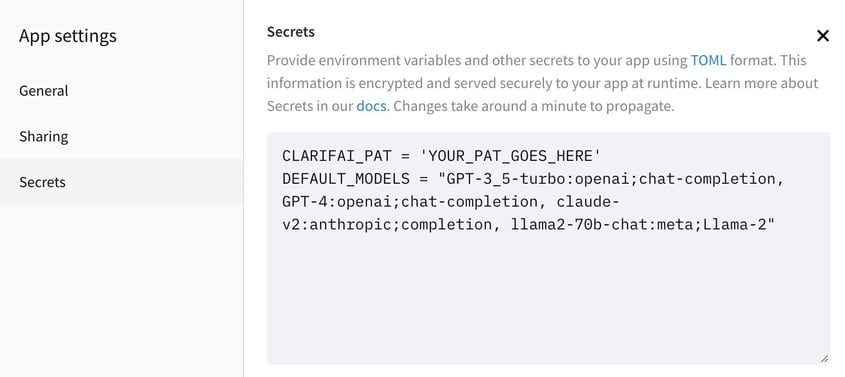

The next step starts with creating a secrets.toml file, which stores your Clarifai PAT and defines the large language models available to the chatbot.

This file holds the PAT (personal access token) for your app, which you should never share publicly. The other line lists the default models; it isn't an important secret, but it determines which LLMs the app offers.

Here's an example secrets.toml. When hosting the app on Streamlit's cloud, you also need to add these lines under your app's settings -> Secrets so that Streamlit's servers can use them. The following DEFAULT_MODELS value offers GPT-3.5, GPT-4, Claude v2, and the three sizes of instruction-tuned Llama 2.

On Streamlit's cloud, the secrets settings look like this:

The third step is setting up the Streamlit app (app.py). I've broken it into several substeps since this is a long section.

Importing Python libraries and modules:

Import the essential libraries and modules the application needs: Streamlit for the app interface, Clarifai for interfacing with the Clarifai API, and the chat-related APIs.

Define helper functions:
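A minimal sketch of two secrets-loading helpers, assuming the `model_id:user_id;app_id` format for DEFAULT_MODELS (an assumption, not confirmed by this post). The names `load_pat` and `get_default_models` are illustrative. Inside the app, `secrets` would be `st.secrets`, and a failed check would more naturally call `st.error()` followed by `st.stop()`; here the helpers raise `KeyError` so they can run outside Streamlit.

```python
from __future__ import annotations

from collections.abc import Mapping


def load_pat(secrets: Mapping) -> str:
    """Return the Clarifai PAT, failing loudly if it was never configured."""
    if "CLARIFAI_PAT" not in secrets:
        raise KeyError("Set CLARIFAI_PAT in secrets.toml or in the Streamlit Cloud secrets.")
    return secrets["CLARIFAI_PAT"]


def get_default_models(secrets: Mapping) -> dict[str, dict[str, str]]:
    """Parse DEFAULT_MODELS, ASSUMED to look like 'model:user;app, model:user;app'."""
    if "DEFAULT_MODELS" not in secrets:
        raise KeyError("Set DEFAULT_MODELS in secrets.toml or in the Streamlit Cloud secrets.")
    models: dict[str, dict[str, str]] = {}
    for entry in secrets["DEFAULT_MODELS"].split(","):
        model_id, rest = entry.strip().split(":")  # "GPT-4" / "openai;chat-completion"
        user_id, app_id = rest.split(";")          # "openai" / "chat-completion"
        models[model_id] = {"user_id": user_id, "app_id": app_id}
    return models
```

Keying the result by model ID makes it easy to fill a select box with the IDs and look up the owner and app once the user picks one.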

These functions load the PAT and the LLMs, keep a record of the chat history, and handle the interaction between the user and the AI.

Define the prompt list and load the PAT:

Define the list of available prompts and load the personal access token (PAT) from the secrets.toml file. Then select the models and add them to llms_map.

Prompt the user to select a prompt:

Use Streamlit's built-in select box widget to let the user pick one of the prompts in prompt_list.

Choose the LLM:

Present the available large language models (LLMs) so the user can select one.

Initialize the model and set the chatbot instruction:

Load the language model the user selected and initialize the chat with the selected prompt.

Initialize the conversation chain:
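If ConversationChain here is LangChain's class of that name (the post doesn't say so explicitly), it pairs an LLM with a memory object and fills a prompt template's `history` and `input` variables on every call. To show the idea without LangChain or a Clarifai PAT, here is a toy stand-in, `MiniConversationChain` (a hypothetical name, not LangChain's API), that keeps every turn in a buffer and accepts any function that maps prompt text to reply text. The template wording is also an assumption.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical template: the hidden starting prompt, then the running
# transcript, then the newest user message.
TEMPLATE = """{instruction}

Current conversation:
{history}
Human: {input}
AI:"""


class MiniConversationChain:
    """Toy stand-in for a conversation chain with buffer memory."""

    def __init__(self, llm: Callable[[str], str], instruction: str):
        self.llm = llm                  # any function from prompt string to reply string
        self.instruction = instruction  # the hidden prompt chosen from prompts.py
        self.turns: list[tuple[str, str]] = []  # every (human, ai) pair so far

    def build_prompt(self, user_input: str) -> str:
        history = "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)
        return TEMPLATE.format(instruction=self.instruction, history=history, input=user_input)

    def predict(self, user_input: str) -> str:
        reply = self.llm(self.build_prompt(user_input)).strip()
        self.turns.append((user_input, reply))  # buffer memory: keep the full transcript
        return reply
```

Because the whole transcript is resent on every turn, the hidden instruction keeps steering the model, at the cost of longer prompts as the chat grows.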

Use a ConversationChain to manage the conversation between the user and the AI.

Initialize the chatbot:
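Streamlit reruns the whole script on every interaction, while `st.session_state` persists across reruns, so the opening message should be generated only once. This sketch uses a plain dict in place of `st.session_state`; the `init_chatbot` name, the `chat_history` key, and the role/content message shape are hypothetical choices for illustration.

```python
from __future__ import annotations

from typing import Callable, MutableMapping


def init_chatbot(state: MutableMapping, llm: Callable[[str], str], instruction: str) -> None:
    """Generate the opening message once and store it in session state."""
    if "chat_history" in state:
        return  # already initialized on an earlier rerun; don't call the model again
    first_message = llm(instruction).strip()
    state["chat_history"] = [{"role": "assistant", "content": first_message}]
```

Without the guard, every button press or new message would trigger a fresh, paid model call and overwrite the story so far.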

Use the model to generate the first message and store it in the chat history in the session state.

Manage the conversation and display messages:
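A rough sketch of one pass through this loop, again with a dict standing in for `st.session_state`: first replay every stored message, then record the user's new message and the model's reply. The helper names are hypothetical, and the app's own `chatbot()` function is not reproduced here. In a real Streamlit app, chat elements such as `st.chat_message` and `st.chat_input` could handle the display and input instead of plain strings.

```python
from __future__ import annotations

from typing import Callable, MutableMapping


def render_history(state: MutableMapping) -> list[str]:
    """Format every stored message in order (stand-in for drawing chat bubbles)."""
    return [f"{m['role']}: {m['content']}" for m in state.get("chat_history", [])]


def handle_turn(state: MutableMapping, user_input: str, respond: Callable[[str], str]) -> str:
    """Record the user's message, get the model's reply, and record that too."""
    history = state.setdefault("chat_history", [])
    history.append({"role": "user", "content": user_input})
    reply = respond(user_input)  # e.g. a conversation chain's predict method
    history.append({"role": "assistant", "content": reply})
    return reply
```

Storing both sides of the conversation means the next rerun can redraw the full chat before waiting for new input.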

Show all previous chats and call the chatbot() function to continue the conversation.

That's the step-by-step walkthrough of what each section of app.py does. Here is the full implementation:

This is the fun part! All the other code in this tutorial already works fine out of the box, and the only thing you need to change to get different behaviour is the prompts.py file:
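The original prompts.py isn't reproduced here. As a purely hypothetical sketch, it could be as simple as a dictionary that maps each menu label to a hidden starting prompt, with the labels feeding the select box. The three prompts below are invented examples, not the ones behind the screenshots above.

```python
"""prompts.py (hypothetical layout): menu label -> hidden starting prompt."""

PROMPTS = {
    "Text adventure": (
        "You are the narrator of an interactive text adventure. Describe the opening "
        "scene in a few sentences, then ask the player what they want to do."
    ),
    "Socratic tutor": (
        "You are a patient tutor. Never give the answer outright; ask one guiding "
        "question at a time until the student solves the problem."
    ),
    "Job interviewer": (
        "You are interviewing the user for a software engineering role. Ask one "
        "question at a time and give brief feedback on each answer."
    ),
}

# The labels populate the select box; the chosen label's text seeds the chat.
prompt_list = list(PROMPTS)
```

Adding a new "app" under this layout means adding one more key and a paragraph of plain English.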

Pretty cool, right? All running the same code! You can add new applications just by adding new plain-English options to the prompts.py file, and experiment away!

© 2026 Clarifai, Inc.