Everywhere you have turned over the past week, there have been screenshots and shocked YouTubers discussing how incredible OpenAI's newly unveiled ChatGPT model is. As more and more people turn to chatbots and other conversational AI systems for help with tasks and questions, there is a growing need for natural language processing (NLP) technology that supports more effective and engaging conversations. One of the latest developments in this area is ChatGPT, a descendant of the popular GPT-3 language model that is specifically designed for conversation.

At its core, ChatGPT is a deep learning model that uses a transformer-based neural network to generate human-like text. This architecture lets the model weigh every part of the input text when producing each word of its response, which is what allows it to write fluent, human-like replies. Unlike general-purpose language models, however, ChatGPT is specifically designed and fine-tuned for conversation, which means it generates responses that are more coherent and relevant in the context of a conversation.
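The mechanism that lets a transformer weigh different parts of its input is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. Below is a minimal pure-Python sketch for illustration only, assuming tiny hand-written query (`q`), key (`k`) and value (`v`) matrices; real models such as ChatGPT learn these projections, use many attention heads and layers, and run on optimized tensor libraries, none of which is shown here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each query row receives a weighted average of the value rows, weighted
    by how strongly that query matches each key.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


# Toy example: two positions with 2-dimensional vectors (made-up values).
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
out = attention(q, k, v)
print(out)  # each query leans toward the value row whose key it matches
```

Because each row of attention weights sums to 1, every output row is a blend of the value rows rather than something new; the "understanding" comes from which rows get blended and how strongly.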

One of the key challenges in building a conversational AI system is the ability to generate responses that are both relevant and coherent. In other words, the system must be able to generate responses that are on-topic and make sense in the context of the conversation. Traditional language models often struggle with this, as they may generate responses that are technically correct but don't actually contribute to the conversation in a meaningful way.

ChatGPT, on the other hand, is able to generate responses that are both relevant and coherent thanks to its specialized training and fine-tuning. By training on a large dataset of conversational text, ChatGPT learns the patterns and nuances of natural conversation and generates responses that are more likely to be relevant and coherent.
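To learn from conversations, a model needs them broken into examples of "given everything said so far, produce the next reply." The sketch below shows one simple way to do that, assuming a hypothetical `speaker: text` transcript format and the hypothetical helper name `to_training_pairs`; OpenAI has not published the exact format it uses.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text); speaker is "user" or "assistant"


def to_training_pairs(conversation: List[Turn]) -> List[Tuple[str, str]]:
    """Turn one conversation into (context, target) pairs.

    Each assistant turn becomes a target; everything said before it
    becomes the context the model is conditioned on.
    """
    pairs = []
    history: List[str] = []
    for speaker, text in conversation:
        if speaker == "assistant":
            pairs.append(("\n".join(history), text))
        history.append(f"{speaker}: {text}")
    return pairs


conversation = [
    ("user", "Hi"),
    ("assistant", "Hello! How can I help?"),
    ("user", "What is 2+2?"),
    ("assistant", "4"),
]
for context, target in to_training_pairs(conversation):
    print(repr(context), "->", repr(target))
```

A single conversation with several assistant turns yields several training examples, each with a longer context than the last, which is how the model sees replies that depend on earlier parts of the exchange.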

Another challenge in building a conversational AI system is maintaining the flow of the conversation and keeping it from becoming stilted or repetitive. ChatGPT addresses this problem with training techniques that push it toward more natural and varied responses. For example, during training the model produces several candidate responses to the same prompt, and human trainers rank them from best to worst; those rankings teach the model which kinds of response are most appropriate in the context of the conversation. This helps keep the system from generating repetitive or nonsensical responses and keeps the conversation flowing smoothly.
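In OpenAI's description of the method, trainers rank several model-written responses to the same prompt. The InstructGPT work that ChatGPT builds on expands each ranking of K responses into every pairwise comparison, K*(K-1)/2 of them. A minimal sketch of that expansion, with made-up response strings and the hypothetical helper name `ranking_to_pairs`:

```python
from itertools import combinations
from typing import List, Tuple


def ranking_to_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """Expand a best-to-worst ranking of K responses into K*(K-1)/2
    (preferred, rejected) comparison pairs."""
    # combinations() keeps the input order, so the first item of every
    # pair is always the response the trainer ranked higher.
    return list(combinations(ranked, 2))


ranked = ["clear answer", "vague answer", "off-topic answer"]
for preferred, rejected in ranking_to_pairs(ranked):
    print(f"prefer {preferred!r} over {rejected!r}")
```

Extracting every pair means one ranking session produces many training signals; ranking four responses, for instance, already yields six comparisons.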

Overall, ChatGPT represents a significant advance in the field of conversational AI and has the potential to revolutionize the way we interact with chatbots and other conversational systems. With its ability to generate relevant and coherent responses, ChatGPT can help create more engaging and natural conversations, paving the way for more effective and useful conversational AI.

A good analogy for understanding how language models work is a translation model. Translation models are usually composed of two main parts: an encoder and a decoder. The encoder is trained to take in a sentence in, say, French and encode it into a numeric representation called an embedding. That numeric representation of the sentence's meaning is then passed to a decoder, which decodes it into a target language such as English. Generative models like ChatGPT drop the encoder and use only the decoder: it is fed the original prompt, produces the next piece of output, appends that output to its input, and repeats. This technique of feeding a model's own output back into itself is what the prefix "auto" (as in "autoregressive") refers to.
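The feedback loop described above can be sketched in a few lines. Here a hypothetical lookup table, `NEXT_TOKEN`, stands in for the decoder and only looks at the last token; a real model scores the entire context and picks from a vocabulary of tens of thousands of tokens. The point is the loop itself: each output is appended to the input and fed back in.

```python
from typing import Dict, List

# Hypothetical toy "model": the most likely next token after each token.
# A real decoder conditions on the whole context, not just the last token.
NEXT_TOKEN: Dict[str, str] = {
    "<start>": "the",
    "the": "cat",
    "cat": "sat",
    "sat": "down",
    "down": "<end>",
}


def predict_next(context: List[str]) -> str:
    """Stand-in for a decoder forward pass over the current context."""
    return NEXT_TOKEN.get(context[-1], "<end>")


def generate(prompt: List[str], max_new_tokens: int = 10) -> List[str]:
    """Autoregressive decoding: each predicted token is appended to the
    context, and the extended context is fed back in for the next step."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        nxt = predict_next(tokens)
        if nxt == "<end>":  # the model signals it is finished
            break
        tokens.append(nxt)
    return tokens


print(generate(["<start>"]))  # ['<start>', 'the', 'cat', 'sat', 'down']
```

The `max_new_tokens` cap matters in practice: without it, a model that never emits an end token would loop forever.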

Like GPT-3, ChatGPT largely uses the decoder part of the Transformer architecture in autoregressive form, which means it is optimized to predict the next token (a word or piece of a word) in a sequence. One of the largest problems with this setup is that the model is trained only to produce plausible continuations, and every token it generates, right or wrong, becomes input for the next step, so it can drift into unpredictable and unintended behavior. That's why GPT-3 often makes up facts, generates biased text, or doesn't follow the user's prompt properly. This is one of the areas where ChatGPT improved substantially.
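Two pieces make "predict the next token" concrete for a decoder-only model: every prefix of a training sequence is paired with the token that follows it, and a causal mask stops each position from attending to later positions, so the model cannot peek at the answer. A toy sketch, assuming whole words as tokens (real tokenizers split text into sub-word pieces) and hypothetical helper names:

```python
from typing import List, Tuple


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Every prefix of the sequence is a training input whose target is the
    token that follows it; one sequence yields len(tokens) - 1 examples."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j,
    i.e. only to itself and earlier positions (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


for prefix, target in next_token_pairs(["the", "cat", "sat"]):
    print(prefix, "->", target)
for row in causal_mask(3):
    print(["x" if allowed else "." for allowed in row])
```

The mask is what lets a transformer train on all of these prefixes in a single pass over the sequence: position i's prediction only ever sees tokens 0 through i.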

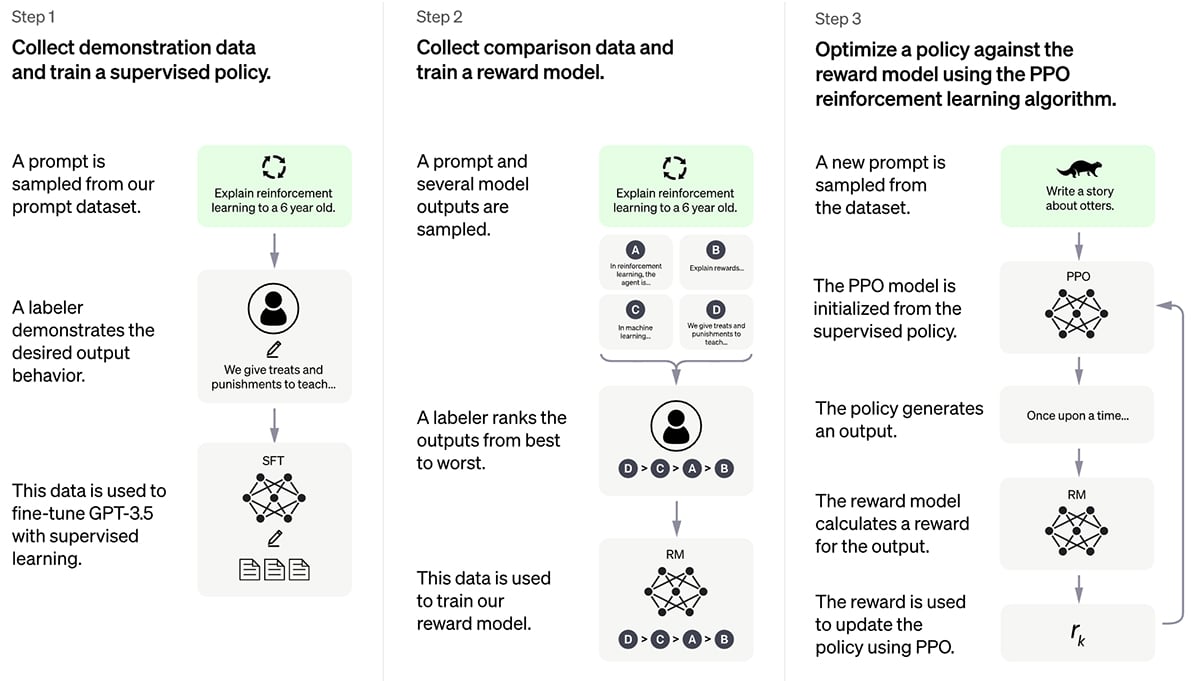

OpenAI explains that it "trained this model using Reinforcement Learning from Human Feedback (RLHF), using the same methods as InstructGPT, but with slight differences in the data collection setup. We trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides—the user and an AI assistant. We gave the trainers access to model-written suggestions to help them compose their responses."
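Since OpenAI says it used the same methods as InstructGPT, the reward-model step of RLHF can be sketched from that work. A reward model assigns a scalar score to each response, and it is trained so that the trainer-preferred response in each comparison scores higher, by minimizing -log(sigmoid(r_preferred - r_rejected)). The sketch below covers only that loss; the scores are made-up numbers and the reward model itself (in practice a large neural network) is left out. The trained reward model then supplies the reward signal when the chat model is fine-tuned with reinforcement learning (OpenAI names Proximal Policy Optimization).

```python
import math
from typing import List, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_reward_loss(reward_pairs: List[Tuple[float, float]]) -> float:
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over comparisons.

    Each tuple holds the scores a reward model gave the preferred and the
    rejected response to the same prompt. The loss is small when the
    preferred response already scores clearly higher.
    """
    # -log(sigmoid(m)) == softplus(-m)
    losses = [softplus(-(r_pref - r_rej)) for r_pref, r_rej in reward_pairs]
    return sum(losses) / len(losses)


print(pairwise_reward_loss([(2.0, -1.0)]))  # model agrees with the trainer: low loss
print(pairwise_reward_loss([(-1.0, 2.0)]))  # model disagrees: high loss
```

Using a stable `softplus` instead of computing `math.log(1 / (1 + math.exp(-m)))` directly avoids overflow when the reward model is badly wrong on a comparison.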

Reinforcement learning is a type of machine learning that involves training a model to take actions in an environment in order to maximize a reward signal. This approach is often used in situations where it is difficult to explicitly define a set of rules for the model to follow, as it allows the model to learn from experience and adapt its behavior accordingly.
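The "act, observe a reward, adapt" loop is easiest to see in the smallest classic RL setting, a multi-armed bandit. The epsilon-greedy agent below is a generic textbook example, not how ChatGPT was trained: it never sees the true reward of each action, only noisy rewards for the actions it tries, and it mostly exploits the best-looking action while occasionally exploring. The reward values, noise level and hyperparameters are arbitrary assumptions.

```python
import random
from typing import List


def epsilon_greedy(true_rewards: List[float], steps: int = 2000,
                   epsilon: float = 0.1, seed: int = 0) -> List[float]:
    """Learn an estimated value for each action of a multi-armed bandit.

    The agent only observes noisy rewards for the actions it takes and
    updates its estimates from that experience.
    """
    rng = random.Random(seed)
    n_actions = len(true_rewards)
    estimates = [0.0] * n_actions
    counts = [0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = true_rewards[action] + rng.gauss(0.0, 1.0)  # noisy reward signal
        counts[action] += 1
        # Incremental mean: move the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = epsilon_greedy([0.1, 0.5, 2.0])
print([round(e, 2) for e in estimates])
```

Raising `epsilon` spends more steps exploring and fewer exploiting; with `epsilon = 0` the agent can lock onto whichever action happened to look good first and never discover a better one.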

ChatGPT is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022. OpenAI has published more details about the GPT-3.5 series. ChatGPT and GPT-3.5 were trained on Azure AI supercomputing infrastructure.

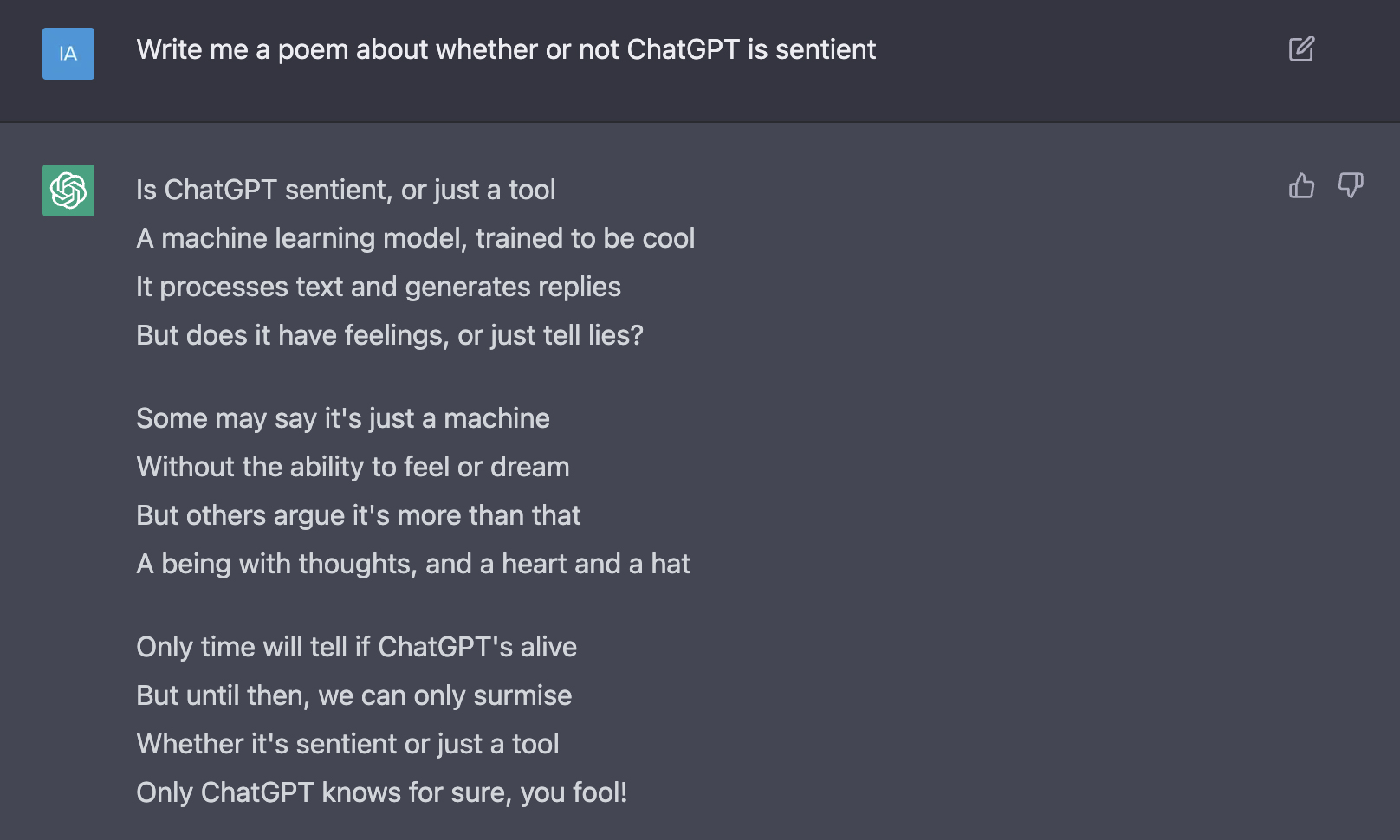

Just for fun, I asked it whether it was sentient.


© 2026 Clarifai, Inc.