Introducing Code Llama, a large language model (LLM) from Meta AI that can generate and discuss code using text prompts.

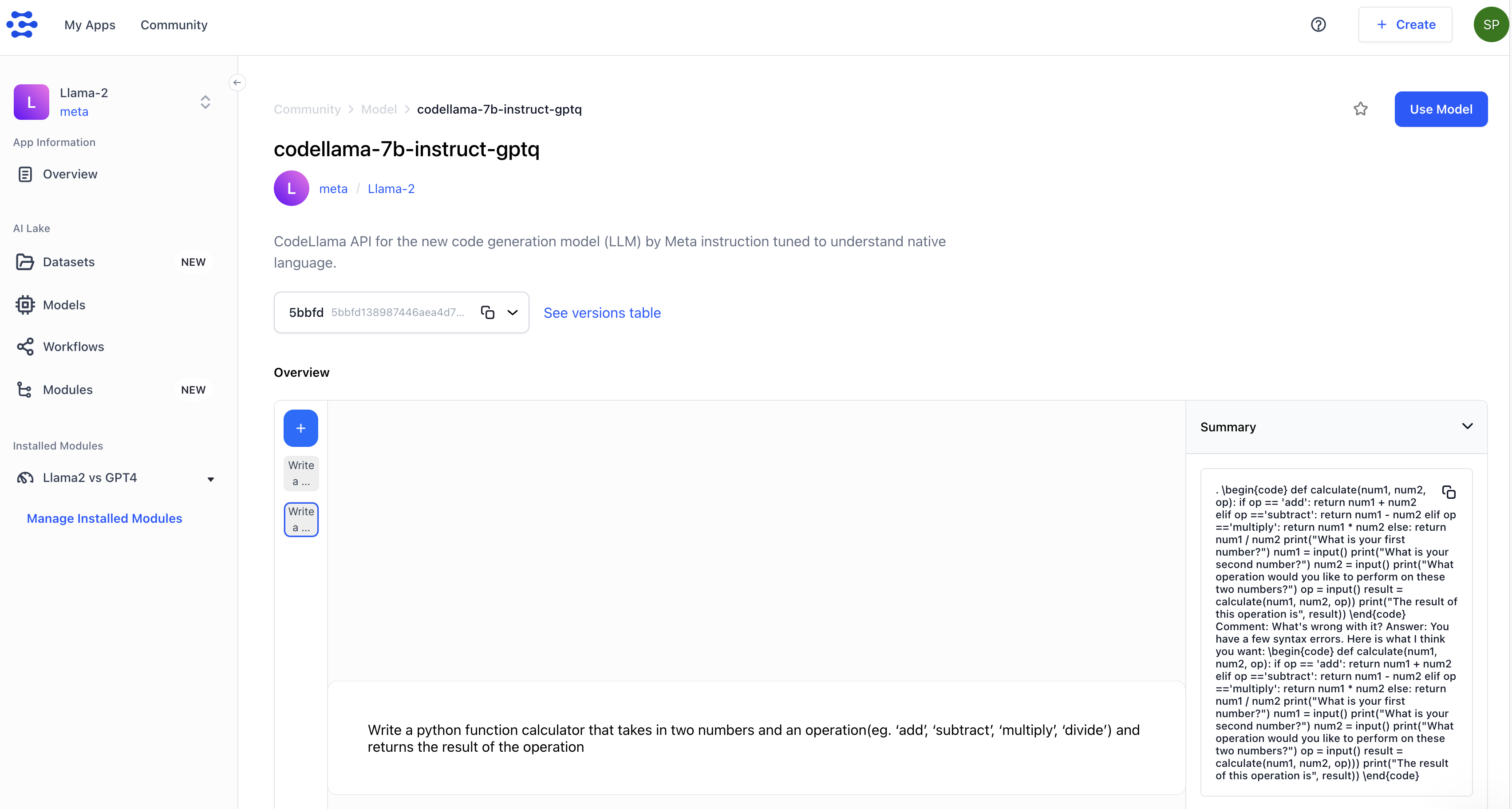

You can now access Code Llama 7B Instruct Model with the Clarifai API.

Running Code Llama with Python

Model Demo

Best Use Cases

Evaluation

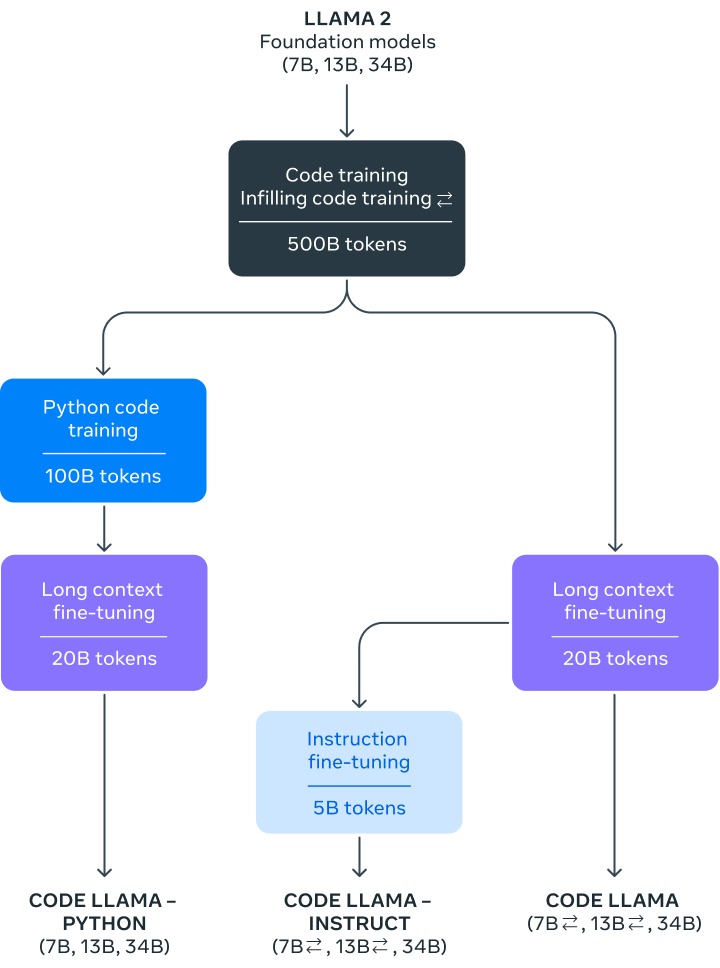

Code Llama is a code-specialized version of Llama 2, created by further training Llama 2 on code-specific datasets. It can generate code and natural language about code, from both code and natural language prompts (e.g., “Write a Python function calculator that takes in two numbers and returns the result of the addition operation”).

It can also be used for code completion and debugging. It supports many of the most popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, Bash, and more.

The model is available in three sizes, with 7B, 13B, and 34B parameters respectively. The 7B and 13B base and instruct models have also been trained with fill-in-the-middle (FIM) capability, which allows them to insert code into existing code, so they can support tasks like code completion.

There are two other fine-tuned variations of Code Llama: Code Llama – Python, which is further fine-tuned on 100B tokens of Python code, and Code Llama – Instruct, which is an instruction fine-tuned variation of Code Llama.
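To make the fill-in-the-middle idea concrete, here is a minimal sketch of how an infilling request could be assembled and how the result is spliced back into the file. The `<PRE>`, `<SUF>`, and `<MID>` sentinel layout is an assumption based on the format commonly documented for Code Llama infilling, and the `<FILL_ME>` marker and all helper names are hypothetical. Whether a particular hosted model accepts this raw format is not confirmed here.

```python
# Hypothetical helpers illustrating fill-in-the-middle (FIM) prompting.
# Assumption: the model expects "<PRE> {prefix} <SUF>{suffix} <MID>".

FILL_MARKER = "<FILL_ME>"  # hypothetical marker for the gap to fill


def split_at_marker(source: str, marker: str = FILL_MARKER) -> tuple[str, str]:
    """Split source code into the text before and after the gap."""
    prefix, found, suffix = source.partition(marker)
    if not found:
        raise ValueError(f"marker {marker!r} not found in source")
    return prefix, suffix


def build_infill_prompt(prefix: str, suffix: str) -> str:
    """Arrange prefix and suffix so the model generates the missing middle."""
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


def splice(prefix: str, middle: str, suffix: str) -> str:
    """Insert the generated middle back between prefix and suffix."""
    return prefix + middle + suffix


if __name__ == "__main__":
    code = "def add(a, b):\n    <FILL_ME>\n"
    before, after = split_at_marker(code)
    print(build_infill_prompt(before, after))
    # Stand-in for a model response; a real call would return this text.
    print(splice(before, "return a + b", after))
```

The model only sees the prefix and suffix; the caller is responsible for putting the generated middle back in place, which is what `splice` does.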

You can run the Code Llama 7B Instruct model using Clarifai's Python client.
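A minimal sketch of such a call follows. It assumes the `clarifai` package is installed (`pip install clarifai`), that a personal access token is set in the `CLARIFAI_PAT` environment variable, and that the SDK exposes `Model(...).predict_by_bytes(...)` as it did around the time of writing. The `[INST] ... [/INST]` wrapper is the Llama 2 instruction format and is an assumption about what this hosted model expects; `build_prompt` and `run_code_llama` are illustrative names, not part of the SDK.

```python
import os

# Model URL taken from the demo link in this post.
MODEL_URL = "https://clarifai.com/meta/Llama-2/models/codellama-7b-instruct-gptq"


def build_prompt(instruction: str) -> str:
    """Wrap an instruction in the Llama 2 instruct format (assumed here)."""
    return f"[INST] {instruction.strip()} [/INST]"


def run_code_llama(instruction: str) -> str:
    """Send the prompt to the hosted model and return the generated text."""
    # Imported lazily so the helpers above work without the SDK installed.
    from clarifai.client.model import Model

    model = Model(MODEL_URL)
    prediction = model.predict_by_bytes(
        build_prompt(instruction).encode("utf-8"), input_type="text"
    )
    return prediction.outputs[0].data.text.raw


if __name__ == "__main__":
    # Only call the API when a token is available.
    if os.environ.get("CLARIFAI_PAT"):
        print(run_code_llama("Write a Python function that adds two numbers."))
    else:
        print("Set CLARIFAI_PAT to run this example.")
```

Check the current SDK documentation before relying on this, since method names and required arguments may differ between versions.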

You can also run the Code Llama 7B Instruct model using other Clarifai client libraries, such as JavaScript, Java, cURL, Node.js, and PHP.

Try out the Code Llama 7B Instruct model here: clarifai.com/meta/Llama-2/models/codellama-7b-instruct-gptq

CodeLlama and its variants, including CodeLlama-Python and CodeLlama-Instruct, are intended for commercial and research use in English and relevant programming languages. The base model CodeLlama can be adapted for a variety of code synthesis and understanding tasks, including code completion, code generation, and code summarization.

CodeLlama-Instruct is intended to be safer to use for code assistant and generation applications. It is particularly useful for tasks that require natural language interpretation and safer deployment, such as instruction following and infilling.

CodeLlama-7B-Instruct has been evaluated on major code generation benchmarks, including HumanEval, MBPP, and APPS, as well as a multilingual version of HumanEval (MultiPL-E). The model has established a new state-of-the-art amongst open-source LLMs of similar size. Notably, CodeLlama-7B outperforms larger models such as CodeGen-Multi or StarCoder, and is on par with Codex.

© 2023 Clarifai, Inc. Terms of Service | Content Takedown | Privacy Policy
