“90 Percent of the Big Data We Generate Is an Unstructured Mess” - PC Mag

“80 to 90 percent of data generated and collected by organizations, is unstructured” - MongoDB

“Big Data and unstructured data often go together. Unstructured data comprises the vast majority of data found in an organization.” - Merrill Lynch

“Volumes are growing rapidly — many times faster than the rate of growth for structured databases. The global datasphere will grow to 163 zettabytes by 2025 and the majority of that will be unstructured.” - IDC and Seagate

Most data is not organized in a predefined way. We call this data unstructured because it lacks a useful organizational structure, and without one, your data is probably not going to do you much good. You can think of unstructured data as a house without an address: the house might be nice, but no one is going to use it if they don’t know where it is.

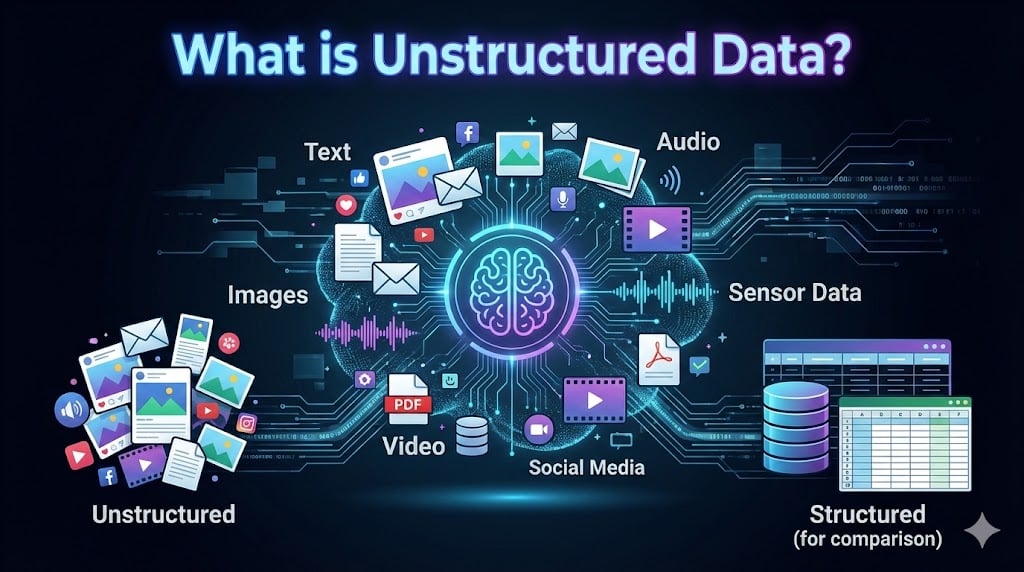

Broadly speaking, unstructured data comes from two sources: people and machines.

Digital photos, audio, and video files are some of the most common types of unstructured data that we create in our daily lives. Some of this data stays private; some is shared on social media channels, photo-sharing sites, and YouTube; and some is created by professional media and entertainment organizations. Many business documents, such as records and invoices, are also unstructured.

There is also a huge amount of unstructured data that is machine generated. Scientific data, digital surveillance, satellite imagery, geo-spatial data, weather data and various types of sensor data are all generated automatically by machines.

Modern organizations are inundated with unstructured data because both people and machines are prolific creators of digital content.

The sheer volume of data is staggering. More recent analyst estimates put the global datasphere at 149 zettabytes in 2024 and project 181 zettabytes in 2025. Research suggests that 80% of enterprise data is unstructured and that unstructured data is growing three times faster than structured data, so unstructured content dominates our digital universe.

Table 1 – Common sources of unstructured data

| Source type | Examples | Potential business value | Data complexity |
|---|---|---|---|
| Human‑generated | Photos and videos from smartphones; emails; PDFs; scanned invoices; social media posts and comments; chat transcripts | Insights into customer sentiment; marketing campaign effectiveness; legal documentation; brand reputation | Highly variable file formats and content; may include multiple languages or media types |
| Machine‑generated | IoT sensor readings; satellite images; security camera footage; audio recordings from call centres; website click streams | Predictive maintenance; anomaly detection; resource optimization; environmental monitoring | Often high‑frequency and large‑volume streams; may require real‑time processing |
| Semi‑structured (metadata) | JSON/XML documents with tags; log files with time stamps; EXIF data in images; email headers | Some fields can be queried directly; supports basic search and filtering | Contains both structured attributes and free‑form text; requires parsing to fully exploit |

A useful way to think about unstructured data ingestion is to break the process into stages.

Analysts repeatedly warn that unstructured data sprawl is a growing risk. A 2024 Market Pulse Survey found that 71% of enterprises struggle to manage and protect unstructured data. Without proper governance, 21% of all data remains completely unprotected and 54% of company data is stale, exposing organizations to compliance and security issues. Industry experts advise creating comprehensive data catalogs and applying AI‑driven classification to reduce these risks.
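A data catalog does not have to start out sophisticated. The sketch below, which assumes files on a local or mounted file system, walks a directory tree, records each file's path, extension, size, and last-modified time, and flags a file as stale when it has not been modified within a threshold. The 365-day threshold, the `build_catalog` function, and the `CatalogEntry` fields are illustrative assumptions, not an industry definition of staleness; a production catalog would also track owners, lineage, and sensitivity labels, often assigned by the AI-driven classification mentioned above.

```python
import os
import tempfile
import time
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

# Assumption: "stale" means not modified within this many days; tune to your own policy.
STALE_AFTER_DAYS = 365


@dataclass
class CatalogEntry:
    path: str          # path relative to the catalog root
    extension: str     # lowercased file suffix, e.g. ".pdf"
    size_bytes: int
    modified: float    # POSIX timestamp of last modification
    stale: bool


def build_catalog(root: str, stale_after_days: int = STALE_AFTER_DAYS,
                  now: Optional[float] = None) -> list[CatalogEntry]:
    """Walk a directory tree and record basic metadata for every regular file."""
    now = time.time() if now is None else now
    cutoff = now - stale_after_days * 86400  # seconds per day
    entries = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue  # skip directories and other non-file entries
        st = path.stat()
        entries.append(CatalogEntry(
            path=str(path.relative_to(root)),
            extension=path.suffix.lower() or "(none)",
            size_bytes=st.st_size,
            modified=st.st_mtime,
            stale=st.st_mtime < cutoff,
        ))
    return entries


if __name__ == "__main__":
    # Build a throwaway folder with one fresh file and one file backdated two years.
    with tempfile.TemporaryDirectory() as root:
        Path(root, "invoice.pdf").write_bytes(b"%PDF-1.4 ...")
        old = Path(root, "photos", "site.jpg")
        old.parent.mkdir()
        old.write_bytes(b"\xff\xd8\xff")
        two_years_ago = time.time() - 2 * 365 * 86400
        os.utime(old, (two_years_ago, two_years_ago))
        for entry in build_catalog(root):
            print(entry.path, entry.extension, entry.size_bytes,
                  "STALE" if entry.stale else "fresh")
```

The catalog entries are themselves structured records, so they can be loaded into a table and queried to find, for example, every stale file of a given type.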

Unstructured data comes in many formats, which makes it a real challenge for conventional software to ingest, process, and analyze. Its lack of organization produces irregularities and ambiguities that have left this kind of data largely unusable for companies relying on conventional approaches to data analysis: data without a consistent internal structure does not fit what typical data mining systems can work with.

With the help of AI and machine learning, new tools are emerging that can search through vast quantities of unstructured data to uncover useful, actionable business intelligence. AI-powered technology such as Clarifai’s Spacetime visual search works at near-real-time speed, and custom-trained models can automatically identify patterns and insights in unstructured data. In effect, these AI systems can help you transform unstructured data into structured data.

Artificial intelligence excels at recognizing patterns in images, text, speech and video. Different AI techniques are applied depending on the data type, as summarized in the table below.

These AI models require training data. Techniques such as supervised learning (learning from labeled datasets), self‑supervised learning (learning from unlabeled data by deriving training signals from the data itself) and transfer learning (adapting a model pretrained on one task to another) help models generalize. Once trained, models can turn raw images into structured labels or convert audio recordings into transcribed text.

| Data type | Example AI methods | Pros | Cons |
|---|---|---|---|
| Text (documents, emails, social posts) | Tokenization, Transformers, Named‑Entity Recognition, Sentiment Analysis | Captures meaning and context; can handle multilingual content; enables semantic search | Requires preprocessing (cleaning, stop‑word removal); models may produce biased results if training data is skewed |
| Images | Convolutional neural networks (CNNs), Vision Transformers, Object Detection (YOLO, Faster R‑CNN) | High accuracy in recognizing objects and scenes; automates inspection and classification | Requires large labeled datasets; computationally intensive to train |
| Video | Spatiotemporal CNNs, 3D Convolutions, Recurrent Neural Networks (RNNs) | Detects motion and temporal patterns; useful for surveillance and sports analytics | Processing long videos is resource‑heavy; annotation is time‑consuming |
| Audio | Recurrent neural networks (RNNs), Transformers, Spectrogram‑based CNNs | Enables speech‑to‑text transcription; identifies anomalies in machine sounds | Background noise and accents can reduce accuracy; training data must capture variability |
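To make the supervised-learning step concrete, here is a toy sketch that turns free-form customer feedback into structured rows with a predicted sentiment label. It deliberately swaps in multinomial naive Bayes with add-one (Laplace) smoothing, a classic and very simple text classifier, rather than the transformer models listed in the table. The training sentences, labels, and class names are invented for illustration, and four examples are far too few for real use.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphabetic runs; real pipelines use much richer tokenizers.
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesTextClassifier:
    """Multinomial naive Bayes with add-one smoothing (a toy supervised learner)."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)       # documents per label (for priors)
        self.word_counts = defaultdict(Counter)   # label -> word frequencies
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_words = {c: sum(wc.values()) for c, wc in self.word_counts.items()}
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / n_docs)  # log prior
            for tok in tokenize(text):
                if tok not in self.vocab:
                    continue  # ignore words never seen during training
                # Smoothed log likelihood of the word given the label.
                score += math.log((self.word_counts[label][tok] + 1)
                                  / (self.total_words[label] + v))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented, labeled training examples (supervised learning needs labels).
train_texts = [
    "the delivery was fast and the product is great",
    "love the quality, excellent support",
    "terrible experience, the package arrived broken",
    "slow refund and rude support, very disappointed",
]
train_labels = ["positive", "positive", "negative", "negative"]

model = NaiveBayesTextClassifier().fit(train_texts, train_labels)

# Turn unstructured feedback into structured rows: id, original text, predicted label.
incoming = ["great product, fast delivery", "arrived broken and support was rude"]
rows = [{"id": i, "text": t, "sentiment": model.predict(t)} for i, t in enumerate(incoming)]
for row in rows:
    print(row)
```

The output rows have fixed fields, so they can be inserted directly into a relational table and aggregated like any other structured data.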

A systematic pipeline helps ensure that AI models deliver reliable insights.

Why use AI now? AI adoption is accelerating: more than three‑quarters of organizations use AI somewhere in their business, and investments are soaring. However, the majority of enterprises still struggle to manage unstructured data, highlighting the need for governance and data preparation.

Structure makes data easier to parse and analyze. A clear pattern or pathway for locating data makes data easy to access.

When records are held in separate tables according to their category, it is straightforward to insert, delete, or update them as business requirements change. New tables or columns can be added, and existing ones modified, as conditions require.
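As a minimal illustration using Python's built-in `sqlite3` module, the sketch below keeps customers and invoices in separate tables, then inserts, updates, and deletes records in response to a change in business rules. The table names, columns, and the "review large open invoices" rule are hypothetical.

```python
import sqlite3

# In-memory database so the sketch runs anywhere; schema and data are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, region TEXT)")
conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, "
             "customer_id INTEGER REFERENCES customers(id), amount REAL, status TEXT)")

# Insert: each record goes into the table for its category.
conn.executemany("INSERT INTO customers (id, name, region) VALUES (?, ?, ?)",
                 [(1, "Acme", "EU"), (2, "Globex", "US")])
conn.executemany("INSERT INTO invoices (id, customer_id, amount, status) VALUES (?, ?, ?, ?)",
                 [(10, 1, 120.0, "open"), (11, 2, 75.5, "open"), (12, 1, 40.0, "void")])

# Update: a new business rule sends large open invoices for review.
conn.execute("UPDATE invoices SET status = 'review' WHERE status = 'open' AND amount > 100")

# Delete: voided invoices are no longer needed.
conn.execute("DELETE FROM invoices WHERE status = 'void'")
conn.commit()

remaining = conn.execute("SELECT id, customer_id, amount, status FROM invoices ORDER BY id").fetchall()
print(remaining)  # [(10, 1, 120.0, 'review'), (11, 2, 75.5, 'open')]
```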

Join queries and conditional statements let you combine all, or any subset, of the related tables to fetch the data you need. Results can be filtered on the values of one or more columns, so users can easily retrieve just the relevant records, and you can choose exactly which columns appear in the output so that only appropriate data is displayed. Duplicate records can be removed (de-duplicated), and noisy or irrelevant data can be eliminated.
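The query below sketches these operations in SQLite: it joins two hypothetical tables, filters on column values, selects only the columns needed, and uses `DISTINCT` to drop duplicate rows. The schema and data are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, product TEXT, amount REAL);
INSERT INTO customers VALUES (1, 'Acme', 'EU'), (2, 'Globex', 'US'), (3, 'Initech', 'EU');
INSERT INTO orders VALUES
  (100, 1, 'sensor', 250.0),
  (101, 1, 'sensor', 250.0),  -- duplicate purchase line
  (102, 2, 'camera', 900.0),
  (103, 3, 'sensor', 150.0),
  (104, 1, 'cable', 20.0);
""")

# Join, filter on column values, keep only the needed columns, and de-duplicate.
rows = conn.execute("""
    SELECT DISTINCT c.name, o.product, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE c.region = 'EU' AND o.amount >= 100
    ORDER BY c.name
""").fetchall()
print(rows)  # [('Acme', 'sensor', 250.0), ('Initech', 'sensor', 150.0)]
```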

Structured databases can grow and change over time. Changes to the database schema or configuration can usually be applied without corrupting existing data or disrupting other parts of the database.
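As a small example of schema evolution, again assuming SQLite and a hypothetical `documents` table, `ALTER TABLE ... ADD COLUMN` adds a field for a later-derived label without touching the rows already stored. In production, such changes typically go through a migration tool and are tested first, since `ALTER TABLE` support varies between database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany("INSERT INTO documents (title) VALUES (?)", [("Q1 report",), ("Site photo",)])

# Schema change: add a column for a label produced later (e.g., by a classifier).
# Existing rows are kept; the new column is NULL until it is filled in.
conn.execute("ALTER TABLE documents ADD COLUMN category TEXT")
conn.execute("UPDATE documents SET category = 'finance' WHERE title = 'Q1 report'")

# Index the new column so it can be queried efficiently.
conn.execute("CREATE INDEX idx_documents_category ON documents(category)")
conn.commit()

rows = conn.execute("SELECT id, title, category FROM documents ORDER BY id").fetchall()
print(rows)  # [(1, 'Q1 report', 'finance'), (2, 'Site photo', None)]
```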

Structuring data also makes stronger security possible. Some data categories can be tagged as confidential and others not. When a data analyst logs in with a username and password, their access can be limited to only the categories they are authorized to work with, according to their access level.
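Databases usually enforce this with their own mechanisms (for example, `GRANT` privileges or PostgreSQL's row-level security). The plain-Python sketch below only illustrates the idea: each record carries a sensitivity tag, and a hypothetical role-to-category policy decides which records a user may see. `ROLE_PERMISSIONS`, `Record`, and `visible_records` are names invented for this example.

```python
from dataclasses import dataclass

# Hypothetical access policy: which data categories each role may read.
ROLE_PERMISSIONS = {
    "analyst": {"public", "internal"},
    "finance_lead": {"public", "internal", "confidential"},
}


@dataclass(frozen=True)
class Record:
    record_id: int
    category: str  # sensitivity tag assigned when the data was structured
    payload: str


def visible_records(role: str, records: list[Record]) -> list[Record]:
    """Return only records whose category the role is cleared for; unknown roles see nothing."""
    allowed = ROLE_PERMISSIONS.get(role, set())
    return [r for r in records if r.category in allowed]


records = [
    Record(1, "public", "Product brochure"),
    Record(2, "internal", "Support ticket summary"),
    Record(3, "confidential", "Customer bank details"),
]

print([r.record_id for r in visible_records("analyst", records)])       # [1, 2]
print([r.record_id for r in visible_records("finance_lead", records)])  # [1, 2, 3]
print([r.record_id for r in visible_records("guest", records)])         # []
```

Defaulting unknown roles to an empty set means a missing policy entry denies access rather than granting it.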

Advantages and limitations of structuring unstructured data:

| Aspect | Advantages | Limitations |
|---|---|---|
| Analysis & insight | Enables efficient querying, joins and aggregations; supports business intelligence and reporting; easier to apply statistical models | May oversimplify complex information; subtle relationships or sentiments can be lost |
| Data management | Deduplicates and normalizes records; easier to enforce data quality and integrity rules; supports scalability and versioning | Requires significant preprocessing effort (OCR, NLP, labeling); ongoing maintenance is necessary |
| Security & compliance | Fine‑grained access controls on tables and columns; data can be tagged as confidential and masked or encrypted | Initial classification mistakes could expose sensitive information; additional overhead to maintain access policies |
| Performance & integration | Integrates with relational databases and cloud warehouses; faster query performance; supports indexing and caching | Structured schemas can be inflexible; schema changes require migrations and can slow development |

Experts emphasize that structuring data is not merely a technical task; it is a governance and cultural challenge. Chief data officers often struggle to balance agility with compliance, especially when dealing with personal or regulated information. Thought leaders recommend automating classification using machine learning to reduce manual burden and implementing metadata‑rich catalogs to track the source and lineage of structured records.

A great deal of data comes with some organizing properties, even if it is not classified and structured in every way you want. Such data often contains internal tags and markings that allow for grouping and hierarchies, and its native metadata supports basic classification and keyword searches. Semi-structured data commonly comes in the form of image and video metadata, email, XML, JSON, or documents stored in NoSQL databases.
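Email is a good example of data that arrives with native metadata. This sketch uses Python's standard `email` package to lift the headers of a made-up message into a structured record, leaving the free-form body as text; a simple keyword check on the subject then provides the kind of basic classification described above. The message content and the `is_billing` rule are assumptions for illustration.

```python
from email import message_from_string
from email.utils import parseaddr, parsedate_to_datetime

# A made-up message: structured headers followed by a free-form body.
raw = """From: Jane Doe <jane@example.com>
To: support@example.com
Subject: Invoice 4471 is overdue
Date: Tue, 04 Jun 2024 09:15:00 +0000
Content-Type: text/plain

Hi, the attached invoice still shows as unpaid...
"""

msg = message_from_string(raw)

# Headers map directly onto fields; the body stays unstructured text.
record = {
    "sender": parseaddr(msg["From"])[1],
    "recipient": msg["To"],
    "subject": msg["Subject"],
    "sent_at": parsedate_to_datetime(msg["Date"]).isoformat(),
    "body": msg.get_payload(),
}
# Basic keyword classification on a metadata field (hypothetical rule).
record["is_billing"] = "invoice" in record["subject"].lower()
print(record)
```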

Semi‑structured data sits between structured and unstructured content. It contains self‑describing tags or markers that provide some organization but does not fit neatly into relational tables. Common formats include JSON and XML documents, log files with time stamps, email headers, and image or video metadata such as EXIF.

Because semi‑structured files include metadata, they can often be ingested by document databases or NoSQL systems without strict schemas. Tools such as MongoDB, Amazon DynamoDB and Apache CouchDB store documents as key‑value pairs and allow queries over the embedded fields. Semi‑structured data is especially common in modern web applications and IoT platforms.
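The sketch below mimics, in plain Python, how a document store lets you filter on embedded fields with dotted paths (roughly what a MongoDB filter such as `{"device.type": "thermostat"}` expresses). It is not a MongoDB client: the documents, `get_path`, and `find` are hypothetical helpers, and real document databases add indexing and a much richer query language.

```python
import json

_MISSING = object()  # sentinel so a missing field never matches a filter value

# Hypothetical IoT documents; fields vary from document to document.
raw_docs = [
    '{"id": 1, "device": {"type": "thermostat", "site": "plant-a"}, "reading": {"temp_c": 21.5}}',
    '{"id": 2, "device": {"type": "camera", "site": "plant-a"}, "tags": ["motion"]}',
    '{"id": 3, "device": {"type": "thermostat", "site": "plant-b"}, "reading": {"temp_c": 27.0}}',
]
docs = [json.loads(d) for d in raw_docs]


def get_path(doc, dotted, default=_MISSING):
    """Follow a dotted path such as 'device.type' through nested dicts."""
    current = doc
    for key in dotted.split("."):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


def find(docs, filter_spec):
    """Return documents whose dotted-path fields equal every value in filter_spec."""
    return [d for d in docs
            if all(get_path(d, path) == value for path, value in filter_spec.items())]


thermostats = find(docs, {"device.type": "thermostat"})
hot_ids = [d["id"] for d in thermostats if get_path(d, "reading.temp_c", 0.0) > 25]
print([d["id"] for d in thermostats])  # [1, 3]
print(hot_ids)                         # [3]
```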

| Property | Structured | Semi‑structured | Unstructured |
|---|---|---|---|
| Schema | Fixed schema (tables with defined columns) | Flexible schema with tags or key‑value pairs | No predefined schema |
| Examples | SQL databases, spreadsheets, ERP data | JSON, XML, CSV with variable columns, log files, email metadata | Images, videos, audio, social media posts, free‑form text |
| Storage systems | Relational databases (MySQL, PostgreSQL) | Document stores (MongoDB), key‑value databases (Redis), data lakes | Object stores (S3, Azure Blob), file systems, data lakes |
| Querying | SQL; can perform joins and aggregations | Document query languages (e.g., MongoDB Query Language), XPath/JSONPath, or search APIs; supports hierarchical queries | Requires search indexes, ML models or manual scanning |
| Advantages | Easy to search and analyze; efficient storage and indexing | Flexible structure; can accommodate diverse data; easier to add new attributes | Rich context; can capture nuance and multi‑modal information |
| Challenges | Inflexible; schema changes are costly | Data may be inconsistent; still requires parsing; limited support for joins | Hard to search; requires AI for interpretation; higher storage costs |

Data management professionals note that semi‑structured data offers a practical compromise. It preserves some structure through tags while allowing flexibility for diverse content. Document databases, graph databases and search engines (e.g., Elasticsearch) make it easier to query semi‑structured fields. However, developers must still implement validation and indexing to ensure that key attributes can be retrieved efficiently. When designing analytics solutions, teams should evaluate whether semi‑structured formats provide enough organization or whether a full transformation to structured data is necessary.
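Here is a minimal sketch of that validation-and-indexing step, assuming incoming documents are Python dictionaries parsed from JSON: documents missing required fields or carrying the wrong types are rejected with reasons, and the accepted ones are indexed by a key attribute so lookups do not have to scan every document. The `REQUIRED_FIELDS` schema and the sample documents are hypothetical; JSON Schema validators or a database's own indexes would normally do this work.

```python
from collections import defaultdict

# Hypothetical rule set: fields every document must have, with their expected types.
REQUIRED_FIELDS = {"id": int, "customer": str, "channel": str}


def validate(doc: dict) -> list[str]:
    """Return a list of problems; an empty list means the document passed."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in doc:
            problems.append(f"missing '{field}'")
        elif not isinstance(doc[field], expected):
            problems.append(f"'{field}' should be {expected.__name__}")
    return problems


def build_index(docs: list[dict], field: str) -> dict:
    """Map each value of `field` to the ids of documents carrying it."""
    index = defaultdict(list)
    for doc in docs:
        index[doc[field]].append(doc["id"])
    return dict(index)


incoming = [
    {"id": 1, "customer": "Acme", "channel": "email", "text": "Where is my order?"},
    {"id": 2, "customer": "Globex", "channel": "chat"},
    {"id": "3", "customer": "Acme", "channel": "email"},  # id has the wrong type
    {"id": 4, "channel": "email"},                         # customer is missing
]

accepted, rejected = [], {}
for doc in incoming:
    errors = validate(doc)
    if errors:
        rejected[doc.get("id")] = errors
    else:
        accepted.append(doc)

by_customer = build_index(accepted, "customer")
print(by_customer)  # {'Acme': [1], 'Globex': [2]}
print(rejected)
```

Extra fields such as `text` are allowed through, which keeps the flexibility of semi-structured data while guaranteeing that the indexed attributes are always present.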

With recent advances in machine learning and AI, the wealth of information hidden in unstructured data stores can now be used to guide business decisions and to create a whole new generation of products and services. Companies can tap into valuable data such as customer interactions, rich media, and social network conversations.

© 2026 Clarifai, Inc.